How to Build Guest Clusters on Hyper Converged Infrastructure (HCI)

With Hyper Converged Infrastructure (HCI) solutions becoming more common, many customers are now concerned with building clusters on top of those environments. In this article, I’ll explain how to build a guest HCI cluster and why traditional approaches don’t work.

In the past, customers built redundancy and resilience based on virtualization and Storage Area Networks (SAN), which were fully redundant and resilient. So, you had one virtual machine (VM) hosting your application and then hoped that your virtualization cluster and SAN kept it running during outages.

As those solutions have become cost-intensive in recent years, there has been a move towards HCI. Most HCI solutions don’t support or leverage classic SAN technologies, like Fiber Channel Storage Area Networks. As a result, you can no longer share the storage infrastructure with your application: the storage used for the HCI and virtualization layer is reserved exclusively for the hypervisor and doesn’t support additional workloads.

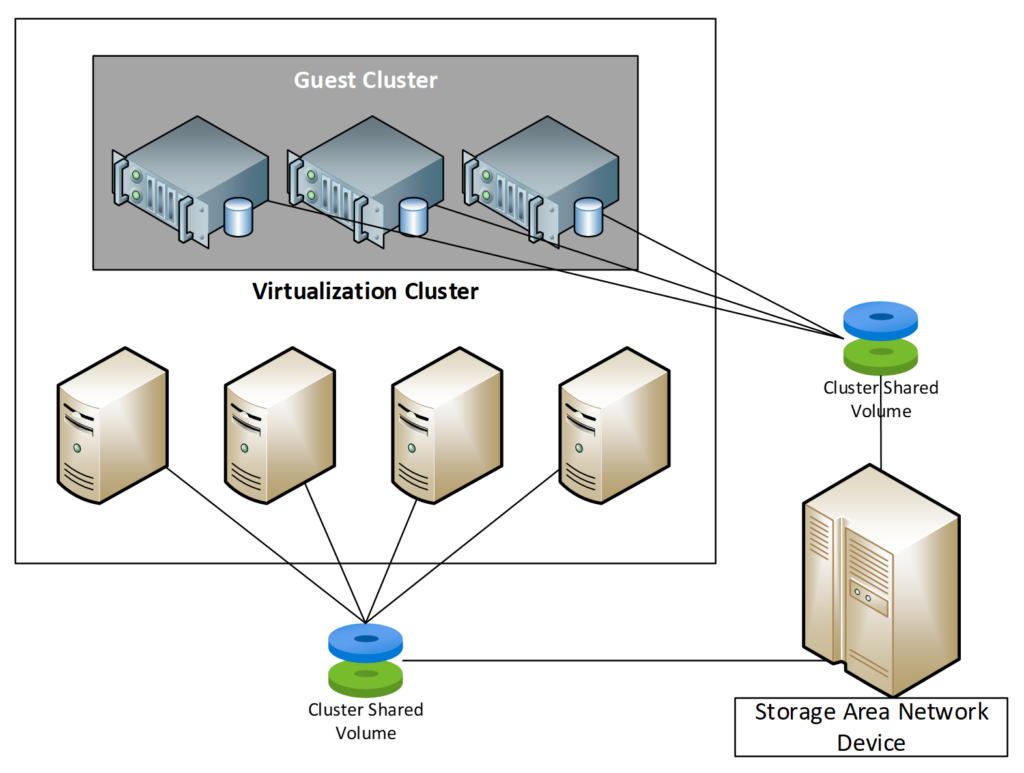

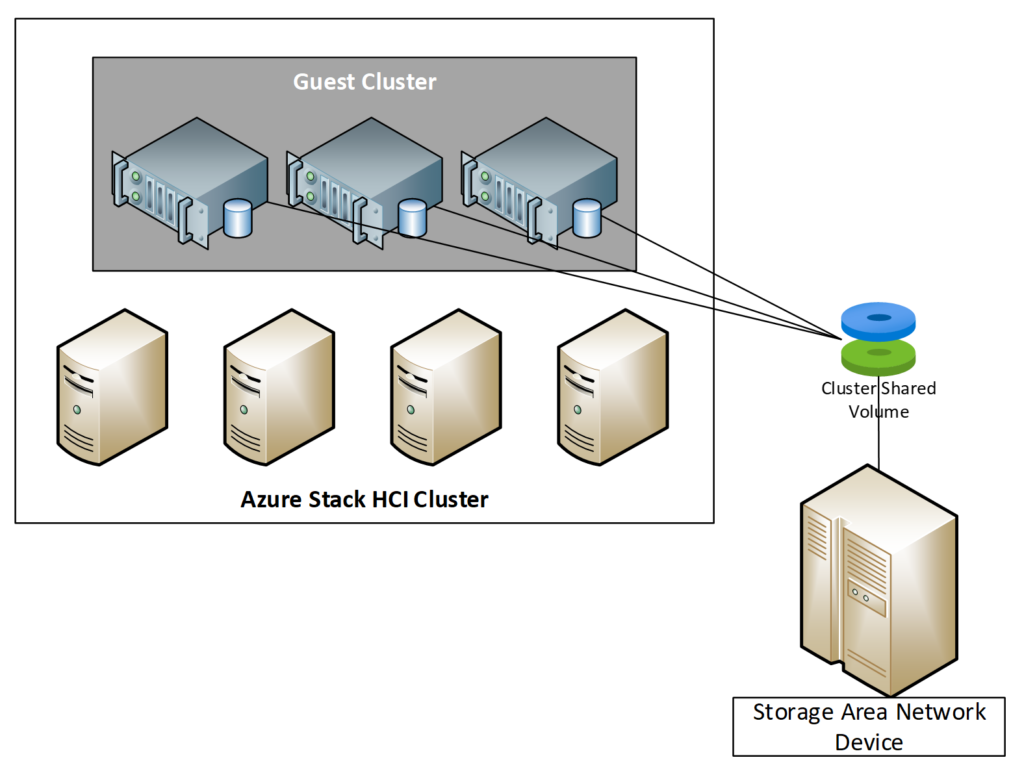

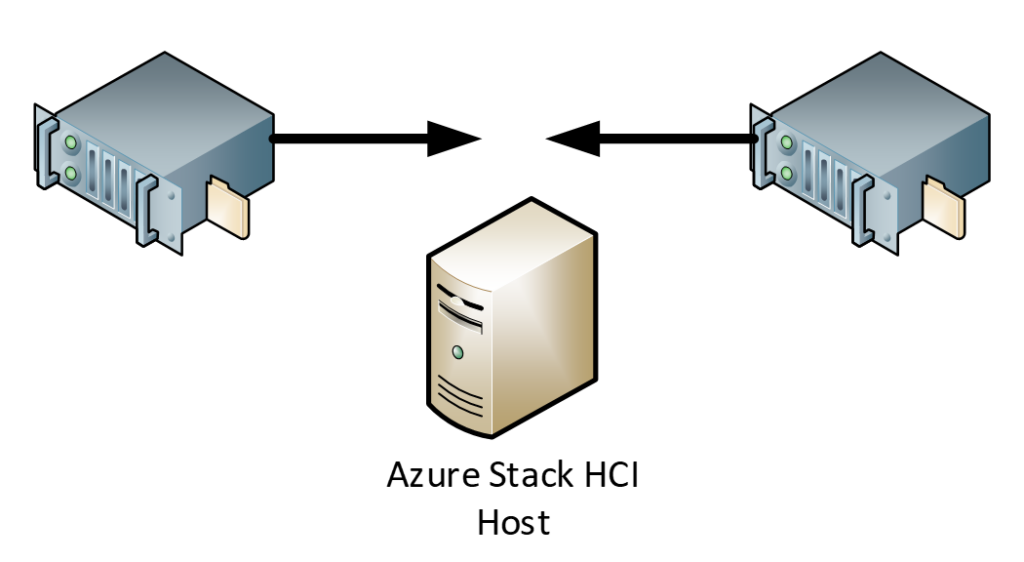

With legacy solutions, you often have VMs that act as a clustered environment on top of your fabric infrastructure. Normally, or with a more classic converged approach, these clusters would share the same cluster storage as your virtualization infrastructure, so they would connect directly to your SAN. The diagram illustrates the classic application and virtualization cluster approach.

What is a guest HCI cluster?

To solve this problem, a solution and strategy emerged in the form of guest clusters and infrastructure-independent applications like Kubernetes and SQL Always On. A guest cluster is a failover cluster in which all of the cluster nodes are virtual machines.

In this article, I will show you some concepts based on Azure Stack HCI and customer scenarios I have encountered over the last few years.

3 types of guest clusters

When working with applications, you may encounter clusters that are built and behave differently. There are three main types of clustered systems and applications:

- Application clusters

- Shared storage clusters

- Non-clustered services

1. Application Clusters

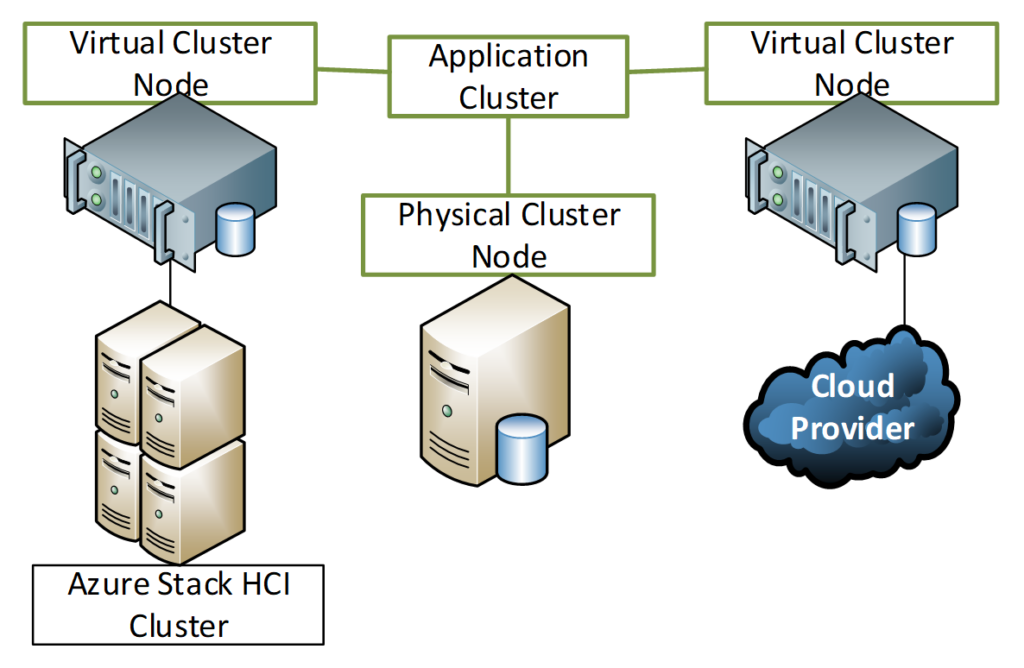

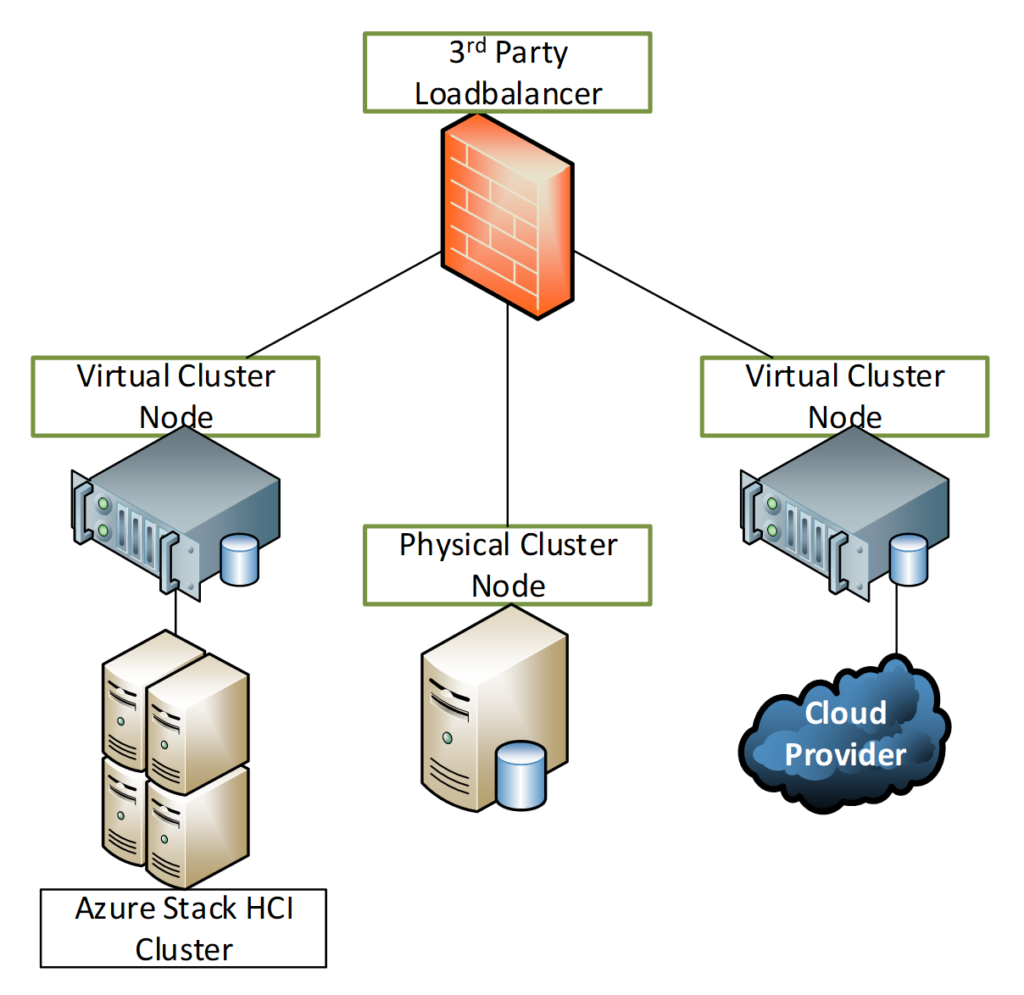

Application clusters use local storage within the application environment and replicate data between the different systems. The cluster is independent of the underlying infrastructure and can be hosted on different platforms, for example different hypervisors, clouds, or bare metal. The diagram illustrates such clusters.

By that definition, Azure Stack HCI and Nutanix, for example, are also application clusters.

Application clusters are normally accessed using third-party publishing, for example using load balancers or cluster IPs. A cluster IP is a proxy that represents a cluster with a single IP address.
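To make the idea of a cluster IP more concrete, here is a minimal Python sketch of a proxy that hands out one address for several nodes and only routes to nodes that are currently healthy. The class name `ClusterIP`, its methods, and the IP addresses are hypothetical and chosen for illustration only. Real load balancers and cluster IP implementations work at the network level and are considerably more involved.

```python
from itertools import cycle


class ClusterIP:
    """Toy stand-in for a cluster IP: one address in front of many nodes."""

    def __init__(self, address, nodes):
        self.address = address          # the single IP that clients connect to
        self.nodes = list(nodes)        # node addresses behind the cluster IP
        self.healthy = set(self.nodes)  # nodes currently considered healthy
        self._ring = cycle(self.nodes)  # round-robin order over all nodes

    def mark_down(self, node):
        """Take a node out of rotation, e.g. after a failed health check."""
        self.healthy.discard(node)

    def mark_up(self, node):
        """Put a known node back into rotation."""
        if node in self.nodes:
            self.healthy.add(node)

    def route(self):
        """Return the next healthy node, skipping nodes that are down."""
        if not self.healthy:
            raise RuntimeError("no healthy nodes behind " + self.address)
        # Safe to loop: at least one node in the ring is healthy.
        while True:
            node = next(self._ring)
            if node in self.healthy:
                return node


# Hypothetical addresses, for illustration only.
vip = ClusterIP("10.0.0.100", ["10.0.0.11", "10.0.0.12", "10.0.0.13"])
vip.mark_down("10.0.0.12")
print([vip.route() for _ in range(4)])
# ['10.0.0.11', '10.0.0.13', '10.0.0.11', '10.0.0.13']
```

Clients only ever see `10.0.0.100`. Which node answers is decided behind the proxy, which is what lets you replace or repair individual nodes without changing how the application is reached.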

Application clusters are often very flexible and better for maintenance as you can replace the infrastructure beneath on demand or when it fails.

Good examples of application clusters are Microsoft Exchange, using Database Availability Groups (DAG), and modern Kubernetes applications. Both are built for application clustering.

Application clusters are now the most reliable and flexible option to deploy a cluster.

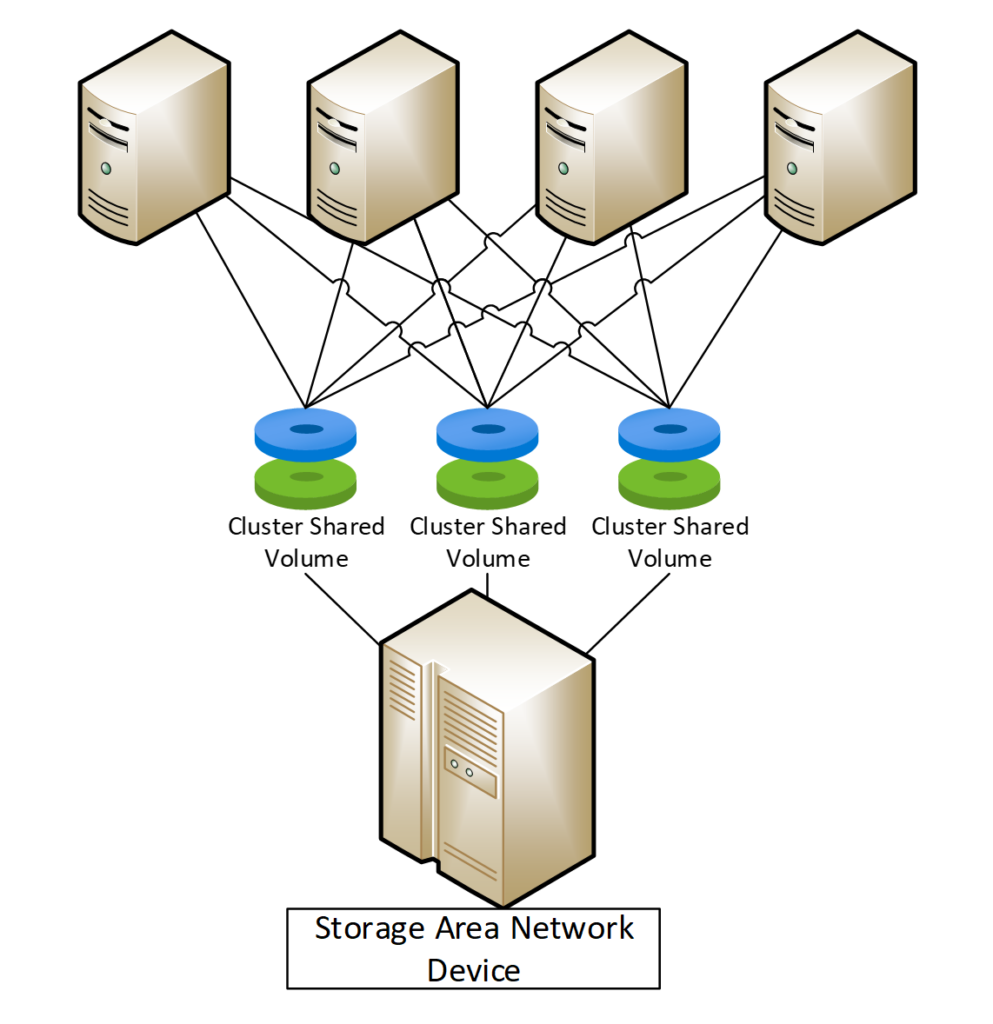

Another kind of cluster, and the most classic example, is one that shares cluster storage hosted outside of the cluster itself. The most common cluster targets are iSCSI, Fiber Channel, and sometimes Server Message Block (SMB) or Network File System (NFS) shares.

Cluster targets often require a SAN infrastructure and additional hardware in your location. But with the current movement towards HCI and IT optimization, SANs are becoming obsolete for most customers and environments.

As most HCI and some hypervisors don’t support Fiber Channel, you often end up with alternative architectures. We will go through those options during the next steps of the article.

3. Non-clustered services

There are services that don’t look like a cluster but act as one to keep services up and running. Among the oldest services of this type are Windows Server Active Directory (AD) and LDAP.

AD and LDAP are not clusters per se, but they work as global applications. They don’t require shared storage and don’t use common application cluster replication. Windows Server Active Directory, for example, uses its own multi-master replication between domain controllers to distribute and update directory data, including the Global Catalog (GC), and uses Distributed File System (DFS) Replication for the SYSVOL share.

For non-clustered services, like AD, the same availability rules as for clustered services apply.

The most challenging aspect of building a guest cluster is the shared storage. There are different ways to achieve clustering with shared storage. With Azure Stack HCI, there is only one option that is not available at the time of writing: you cannot use Fiber Channel for guest clusters, as Fiber Channel adapters are not supported in Azure Stack HCI host systems.

iSCSI, SMB, or NFS passthrough

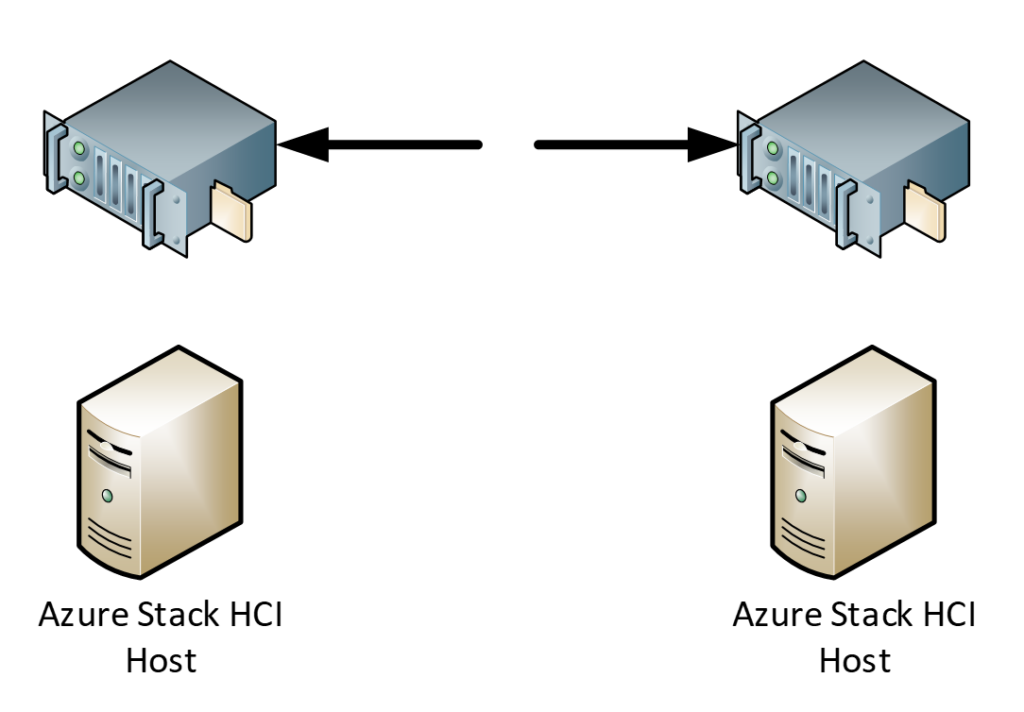

When using IP-based storage or SANs, as shown in the diagram, you can pass traffic through the regular network interfaces.

In this example, you mount the volumes directly to your cluster as you normally would.

In Azure Stack HCI, I suggest adding additional network interfaces to the virtual machine that can be used for storage traffic. This kind of separation gives you additional control over storage traffic, and you can apply quality of service (QoS) rules to the network interfaces.

If you don’t have a SAN environment, you may not be able to use this option. But you can still create an iSCSI target virtualized on Azure Stack HCI.

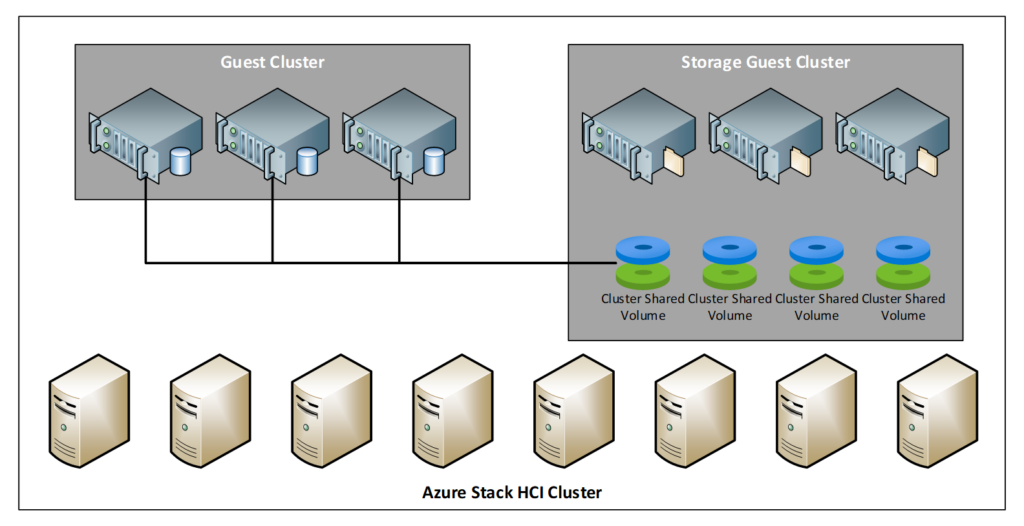

Virtual storage servers

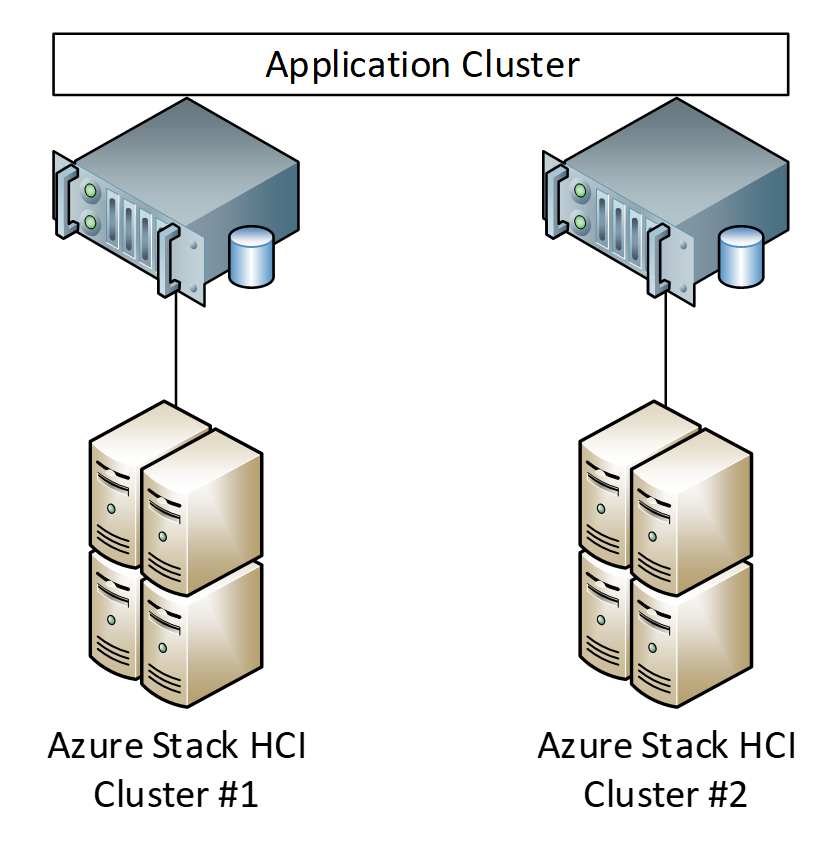

If you are running a guest cluster that requires shared storage, and you do not have a SAN in your location, there is still the option to create a SAN environment for your clusters. You would create another cluster for storage on top of your Azure Stack HCI fabric. If you take this approach, I recommend using Windows Server because you can use Storage Spaces Direct to replicate storage between the storage nodes.

The architecture would look as shown in the diagram.

Windows Server virtual machines on top of Azure Stack HCI are free of charge according to the Azure Stack HCI licensing model.

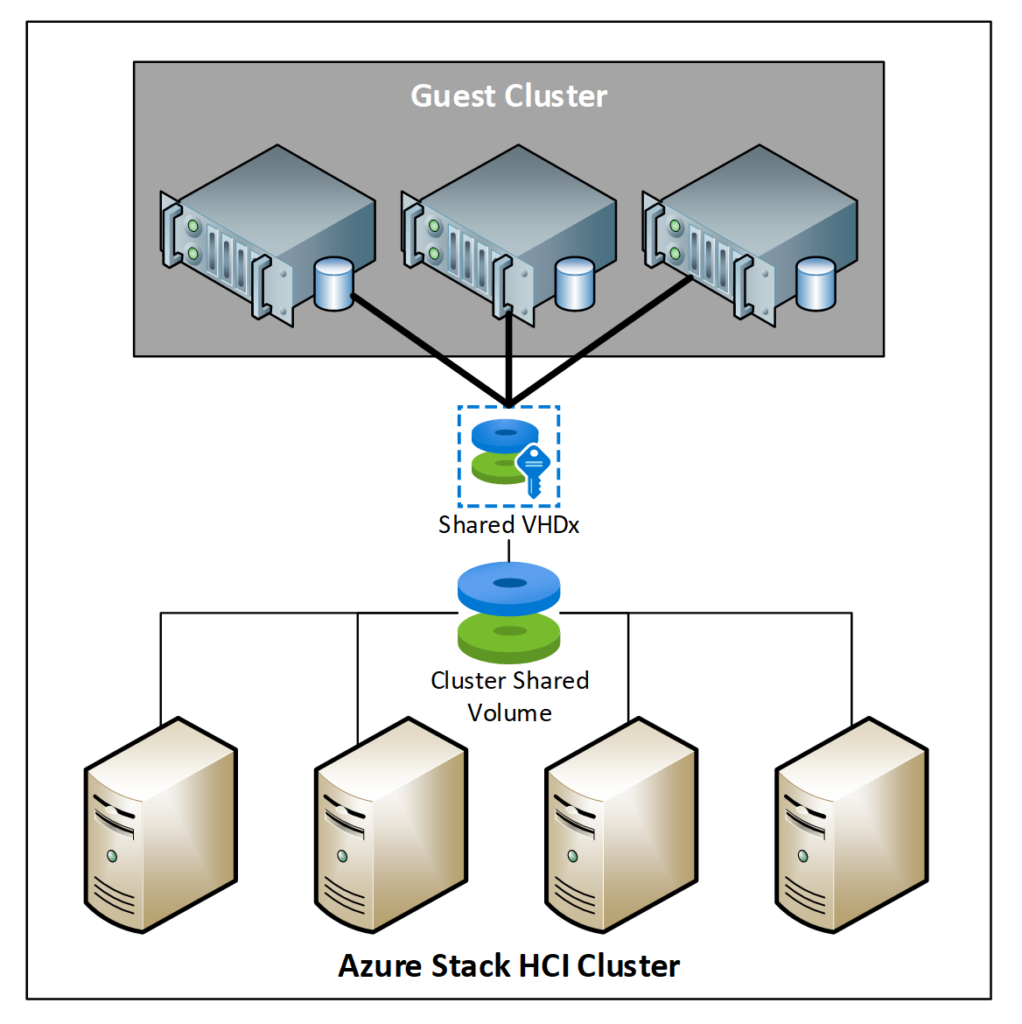

When running on Azure Stack HCI or Windows Server Hyper-V, you have another option for clustering: VHDx as a cluster volume.

The VHDx is stored on the cluster shared volume and shared between both virtual machines.

Be aware that not every backup vendor supports backing up a shared VHDx. So, please check with your backup vendor before deploying guest clusters that use a shared virtual hard disk.

Best practices for deploying a guest HCI cluster

When building clusters on Azure Stack HCI, you should follow a few best practices to ensure that your workload is distributed in a way that a node or cluster outage can’t bring down your workloads.

Host and cluster distribution

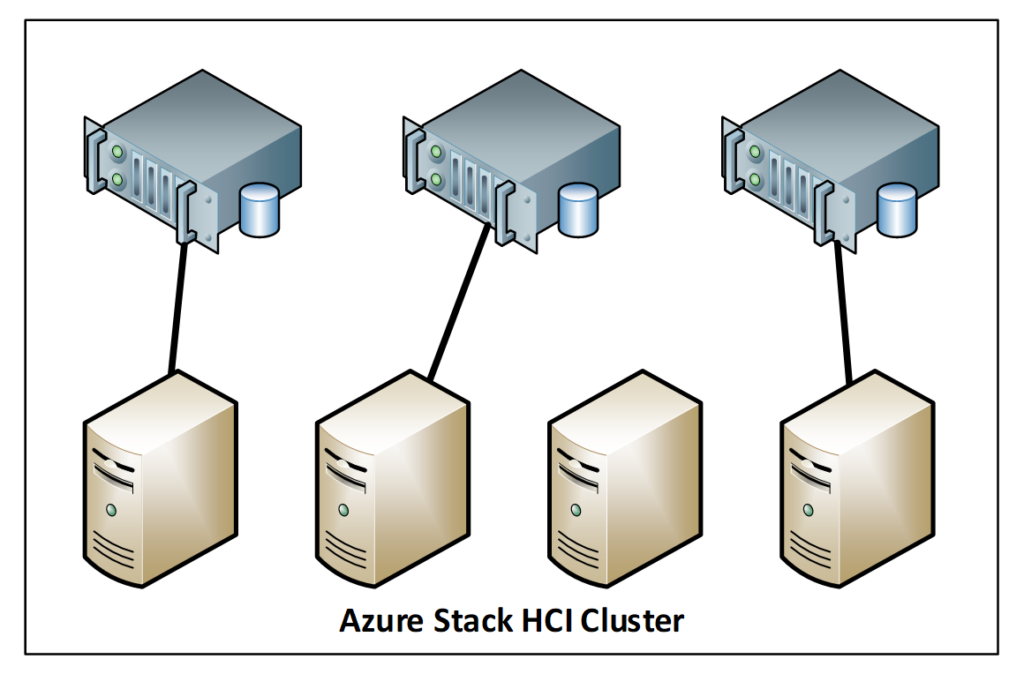

When running workloads in one cluster, you need to ensure that your guest cluster nodes are spread across different hosts.

To do this, you can use so-called affinity and anti-affinity rules. You can use PowerShell to set up VM affinity rules.

Affinity rules mean that a virtual machine will be placed on the same host as another VM. You may want to follow such a rule when you want to reduce latency.

Anti-Affinity rules prevent virtual machines from being placed on the same host. For your cluster nodes, you would use anti-affinity to ensure cluster availability.
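The logic behind an anti-affinity rule can be sketched in a few lines of Python. This is not how Azure Stack HCI evaluates its rules. It is only a small, hypothetical checker that takes a VM-to-host placement and reports every host that runs more than one VM of the same anti-affinity group. The function name, group names, VM names, and host names are all assumptions made for illustration.

```python
from collections import defaultdict


def find_anti_affinity_violations(placement, anti_affinity_groups):
    """Return {group: {host: [vms]}} for hosts running 2+ VMs of one group.

    placement: dict mapping VM name -> host name
    anti_affinity_groups: dict mapping group name -> list of VM names
    """
    violations = {}
    for group, vms in anti_affinity_groups.items():
        by_host = defaultdict(list)
        for vm in vms:
            host = placement.get(vm)
            if host is not None:  # VMs without a host cannot collide
                by_host[host].append(vm)
        # A host is "crowded" if it runs more than one VM of this group.
        crowded = {h: names for h, names in by_host.items() if len(names) > 1}
        if crowded:
            violations[group] = crowded
    return violations


# Hypothetical placement: both SQL guest cluster nodes share one host.
placement = {
    "sql-node1": "hci-host1",
    "sql-node2": "hci-host1",
    "web1": "hci-host2",
    "web2": "hci-host3",
}
groups = {
    "sql-guest-cluster": ["sql-node1", "sql-node2"],
    "web-farm": ["web1", "web2"],
}
print(find_anti_affinity_violations(placement, groups))
# {'sql-guest-cluster': {'hci-host1': ['sql-node1', 'sql-node2']}}
```

In this example, a failure of `hci-host1` would take down both nodes of the SQL guest cluster at once, which is exactly the situation an anti-affinity rule is meant to prevent.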

When using two Azure Stack HCI clusters and an application cluster, you need to put the nodes of your application cluster on different clusters.

For Azure Stack HCI, you can use Azure Stack HCI Cluster Sets to make virtual machines highly available, but the support is fairly limited at the moment.

Use Cluster Aware Updates

When updating an Azure Stack HCI cluster, it is highly recommended to use Cluster Aware Updates. Cluster Aware Updates ensure that your workloads stay available during the update process. With supported Azure Stack HCI integrated solutions, like Dell OpenManage, Cluster Aware Updates include hardware drivers and firmware. The example below shows the integration from Dell.
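The reason workloads stay available is that hosts are updated one at a time, and each host is emptied before it is patched. The following Python sketch is a toy model of that idea only. It is not how Cluster Aware Updates is implemented, and the function name `rolling_update`, the host names, and the VM names are hypothetical. It also ignores anti-affinity rules and host capacity, which a real placement engine would have to respect.

```python
def rolling_update(placement, hosts):
    """Update hosts one at a time, moving VMs off each host first.

    placement: dict mapping VM name -> host name (modified in place)
    hosts: list of host names, processed in this order
    Returns a list of (host, [(vm, target_host), ...]) steps.
    """
    if len(hosts) < 2:
        raise ValueError("need at least two hosts to drain one of them")
    steps = []
    for host in hosts:
        others = [h for h in hosts if h != host]
        moved = []
        for vm in sorted(v for v, h in placement.items() if h == host):
            # Count how many VMs each remaining host currently runs.
            load = {h: 0 for h in others}
            for h in placement.values():
                if h in load:
                    load[h] += 1
            # Move the VM to the least-loaded remaining host.
            target = min(others, key=lambda h: load[h])
            placement[vm] = target
            moved.append((vm, target))
        # The host is now empty and could be patched and rebooted.
        steps.append((host, moved))
    return steps


# Hypothetical three-host cluster with one VM per host.
placement = {"vm-a": "host1", "vm-b": "host2", "vm-c": "host3"}
for host, moved in rolling_update(placement, ["host1", "host2", "host3"]):
    print("updating", host, "after moving", moved)
```

At no point in the loop is a VM left on the host that is being updated, so every VM keeps running somewhere in the cluster throughout the update run.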

Currently, Cluster Aware Updates is the only supported way to update Azure Stack HCI clusters. To configure Cluster Aware Updates, please follow the guide below.

Securing and hardening guest clusters

Before you deploy your first production workload, you should invest some time in securing your Azure Stack HCI cluster and Azure Arc environment.

Most people only think about outages, but even a highly available VM needs an update from time to time. When designing an application, plan for regular maintenance and not only for outages. Security patches, driver and firmware updates, and operating system upgrades may need to be applied at any time to protect your environment from vulnerabilities and possible exploits.

Use guest and application clusters for highly critical workloads

Most IT departments think that a cluster provides enough resiliency for your workloads. But if you run guest clusters, you need to ensure that workloads are protected against both host and cluster outages. Following the guidance I provided in this article, you can ensure that your guest cluster stays available.

Also ensure that you are using guest and application clusters for highly critical workloads. Even when it runs on a cluster, a single virtual machine can only achieve 95% availability.
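To see why adding a second or third node matters, you can do a quick back-of-the-envelope calculation. The Python sketch below takes the 95% figure for a single VM and assumes, as a simplification, that the nodes of a guest or application cluster fail independently of each other and that the service stays up as long as at least one node is up. The function names are hypothetical, and real environments rarely have fully independent failures, so treat the numbers as an upper bound rather than a promise.

```python
HOURS_PER_YEAR = 365 * 24


def parallel_availability(node_availability, nodes):
    """Availability of a service that is up while at least one node is up.

    Assumes that node failures are independent of each other.
    """
    if not 0.0 <= node_availability <= 1.0:
        raise ValueError("node_availability must be between 0 and 1")
    if nodes < 1:
        raise ValueError("nodes must be at least 1")
    # The service is only down when every node is down at the same time.
    return 1.0 - (1.0 - node_availability) ** nodes


def downtime_hours_per_year(availability):
    """Expected downtime in hours per year for a given availability."""
    return (1.0 - availability) * HOURS_PER_YEAR


for nodes in (1, 2, 3):
    availability = parallel_availability(0.95, nodes)
    print(nodes, round(availability, 6),
          round(downtime_hours_per_year(availability), 1))
```

Under these assumptions, a single VM at 95% is unavailable for roughly 438 hours per year, while two independent nodes reach 99.75%, or about 22 hours per year.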