When planning an Azure Stack HCI environment, many organizations struggle to decide whether to build one large Azure Stack HCI cluster or several smaller, use-case-specific clusters. Today, I would like to detail the pros and cons of both approaches. To do that, I’ll discuss the required initial investment, maintenance costs, workload-specific needs, and more for large clusters vs. small specialized clusters.

Azure Stack HCI deployment: Initial investments

If you decide to invest resources in an Azure Stack HCI environment, a large cluster can often make more sense from an investment point of view. You normally plan for a stamp – a group of servers within a cluster – that has a specified number of active nodes plus one or two nodes for redundancy and maintenance purposes.

If you start with a big stamp, the initial investment can be lower because you need fewer cluster nodes overall. In the table below, you can see that the large option consists of a single unified cluster with 12 nodes plus two additional ones for redundancy and maintenance purposes.

| Cluster deployment | Large Cluster | Smaller Clusters |
| --- | --- | --- |
| Unified cluster | 12 + 2 nodes | 4 + 1 nodes |
| Database cluster | | 2 + 1 nodes |
| Stretched cluster | | 4 nodes |
| Test cluster | | 2 + 1 nodes |
| Total number of nodes | 14 nodes | 15 nodes |

If you choose to deploy smaller specialized clusters, however, the initial number of nodes is higher because you now have four different clusters, and most of them need their own spare nodes. The more clusters you use, the more nodes you’ll need for redundancy purposes.
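The node counts from the table can be checked with a few lines of Python. This is only a minimal sketch: the `Stamp` class and the plan names are hypothetical helpers introduced for illustration, and the figures are the ones from the table (the four-node stretched cluster is entered without extra spare nodes, since none are listed).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stamp:
    """One cluster: active nodes plus spares for redundancy and maintenance."""
    name: str
    active: int
    spare: int

    @property
    def total(self) -> int:
        return self.active + self.spare


def total_nodes(stamps: list[Stamp]) -> int:
    """Sum the physical nodes across all clusters in a plan."""
    return sum(s.total for s in stamps)


# Figures taken from the table above.
large_plan = [Stamp("unified", active=12, spare=2)]
small_plan = [
    Stamp("unified", active=4, spare=1),
    Stamp("database", active=2, spare=1),
    Stamp("stretched", active=4, spare=0),  # listed as 4 nodes, no spares stated
    Stamp("test", active=2, spare=1),
]

print(total_nodes(large_plan))  # 14
print(total_nodes(small_plan))  # 15
print(sum(s.spare for s in small_plan))  # 3 spare nodes vs. 2 in the large plan
```

The extra node in the smaller-clusters plan comes entirely from the additional spare capacity.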

If you want to deploy hardware-hungry applications such as SAP in a mixed environment, you will need to have a larger hardware stamp and larger nodes. That means investing more resources into faster CPUs, more memory, more storage, and better network connectivity.

Smaller stamps, however, can be better specialized:

- You could have a small number of nodes to run SAP and they would be based on a high-end hardware tier.

- Other stamps could be powered by more affordable hardware if they’re dedicated to running general services like file or web servers.

With smaller specialized clusters, you may have many more nodes to manage, but the monetary investment could stay the same. The only additional costs to consider are power draw and cooling, which increase your operating costs. Depending on your data center regions, those can be quite significant.

Upgrade flexibility for your Azure Stack HCI deployment

Smaller Azure Stack HCI clusters can help you avoid vendor lock-in more easily. If you build a large cluster and you want to add similar additional nodes, you’ll often have to keep relying on the same vendor, even if they make you pay more than competitors. However, you could still mix different vendors and configurations in one cluster or buy used server hardware on the secondary market.

If you choose to go with smaller clusters in your environment and want to upgrade their capabilities, you can do that with newer-generation hardware. In practice, you will migrate your virtual machines to the newer cluster and keep your old hardware for other purposes. You could also sell it or just free up space for additional use cases.

If we take all these cost factors into account, here is what the comparison table looks like.

| Criteria | Large Cluster | Smaller Clusters |
| --- | --- | --- |
| Number of nodes | + | . |
| Initial investment | + | (+ with different hardware) |
| Flexibility | – | + |
| Compatibility and long-term use cases | – | + |
| Vendor and market flexibility | – | + |

Maintenance needs for your Azure Stack HCI deployment

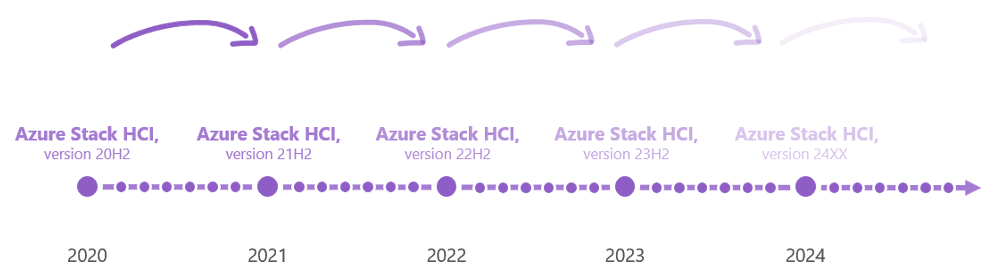

During the lifecycle of your Azure Stack HCI environment, you’ll need to apply patches, driver and firmware updates, and even new versions of the Azure Stack HCI operating system. An operating system update needs to be applied within about six months of its release. Otherwise, you’ll lose Microsoft support for your cluster.

Depending on your server vendor, you may lose a few months of that window until they catch up with the latest release of Azure Stack HCI. But here comes the issue: When installing operating system, firmware, and driver updates for your clusters, you need consistency across all nodes.

Disadvantages of large clusters

Here, the large cluster option has a major downside. Depending on the number of spare nodes you have, you can only update up to two nodes at a time. While these two nodes are updating or upgrading, your cluster will be running in a critical state. That means that if you happen to lose another node due to a hardware failure or power outage, you may not be able to keep your workload running, or you may see a major impact on performance.
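A rough way to reason about this critical state is to count how many nodes are still available beyond what the workload needs. The sketch below assumes the 12 + 2 layout from the earlier table and treats every node as interchangeable, which is a simplification. `remaining_headroom` is a hypothetical helper, not part of any Azure tooling.

```python
def remaining_headroom(total: int, required_active: int,
                       updating: int = 0, failed: int = 0) -> int:
    """Nodes left over after covering the required active capacity.

    0 means no redundancy is left; a negative value means the cluster
    can no longer carry its full workload.
    """
    return total - updating - failed - required_active


# 12 active + 2 spare nodes, as in the large-cluster example.
print(remaining_headroom(14, 12))                        # 2
print(remaining_headroom(14, 12, updating=2))            # 0
print(remaining_headroom(14, 12, updating=2, failed=1))  # -1
```

With both spare nodes in maintenance, a single additional failure already pushes the cluster below the capacity it was sized for.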

Another key downside of the large cluster option is the time the maintenance process takes. Let’s say that during an upgrade cycle, you have 14 cluster nodes to upgrade and each node needs about 30 minutes. If the nodes are updated one after another, the cycle takes at least seven hours (14 × 30 minutes). Updating two nodes at a time still takes at least three and a half hours. For that whole window, your infrastructure will be in a critical state.
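How long the window really is depends on how the 30 minutes is counted. Here is a minimal sketch, assuming 30 minutes per node, that nodes in the same batch update in parallel, and that batches run strictly one after another. The function name and parameters are illustrative, and real cycles also include drain, reboot, and retry time that this ignores.

```python
import math


def maintenance_hours(nodes: int, minutes_per_node: float, batch_size: int) -> float:
    """Estimate a rolling-update window.

    Assumes nodes in the same batch update in parallel and batches
    run strictly one after another, with no retries or drain time.
    """
    batches = math.ceil(nodes / batch_size)
    return batches * minutes_per_node / 60


print(maintenance_hours(14, 30, batch_size=1))  # 7.0
print(maintenance_hours(14, 30, batch_size=2))  # 3.5
```

Under these assumptions, the seven-hour figure corresponds to updating the 14 nodes one at a time, while two nodes per batch gives a lower bound of three and a half hours.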

If one of your nodes fails during that cycle, the upgrade process will stop. You will end up in a “halfway-through” mode: roughly half of your nodes are updated while the other half is still untouched, and you’ll have to troubleshoot the issue before you can update the remaining nodes. In addition, if the installation of a software or firmware update fails, it can cause significant issues for your cluster, and all your workloads may be impacted or go down.

Advantages of smaller clusters

With smaller clusters, you can stretch out the update process more easily: You can do maintenance on non-critical clusters first before proceeding with more crucial clusters. If one of your clusters goes down, you could migrate its workload to another cluster and run it there while you’re troubleshooting the issue.

With smaller clusters, you can also run updates in parallel and update more than one cluster at a time. There’s no limit, as every cluster operates independently from the others. That helps to shorten the maintenance cycle by several hours, and if a node fails to update on one cluster, the other ones won’t be impacted and can proceed with the maintenance.
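To illustrate why parallel maintenance shortens the window: if clusters update independently, the overall duration is set by the slowest cluster rather than by the sum of all of them. The sketch below uses the node counts of the four smaller clusters from the earlier table and assumes 30 minutes per node with one node updated at a time per cluster. Both numbers are illustrative assumptions, not measurements.

```python
MINUTES_PER_NODE = 30  # assumed, matching the earlier example

# Node counts of the four smaller clusters (including spares).
clusters = {"unified": 5, "database": 3, "stretched": 4, "test": 3}

# Each cluster updates its own nodes one at a time.
per_cluster_hours = {name: n * MINUTES_PER_NODE / 60 for name, n in clusters.items()}

sequential = sum(per_cluster_hours.values())  # clusters one after another
parallel = max(per_cluster_hours.values())    # all clusters at the same time

print(per_cluster_hours)  # {'unified': 2.5, 'database': 1.5, 'stretched': 2.0, 'test': 1.5}
print(sequential)  # 7.5
print(parallel)    # 2.5
```

In practice you would likely stagger the clusters rather than start them all at once, so the real window falls somewhere between these two values.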

Another benefit of small clusters, especially when you use hardware from different vendors, is that you may have the opportunity to update clusters early. It may often happen that one vendor adds support for the new version of Azure Stack HCI earlier than the others. As an example, that would allow you to start the update process of your Lenovo or HPE cluster a month prior to other clusters running on different hardware.

One downside I see with small clusters during maintenance is that you must invest more time into planning your maintenance cycle. However, with good planning, your overall maintenance should become much smoother and carry lower overall risk.

| Criteria | Large Cluster | Small Cluster |
| --- | --- | --- |
| Overall time for updates/upgrades | – | + |
| Time running in critical state | – | + |
| Update/Upgrade parallelization | – | + |
| Risk reduction | – | + |
| Additional planning required | + | – |

Managing large and small Azure Stack HCI clusters

When it comes to cluster management, larger clusters are in a much better position. In the Azure portal, keeping an overview of resources and issues in one large cluster is much easier than doing the same for multiple smaller clusters. You can also get a much better view of your infrastructure by using Azure Arc or other integrated components such as the Azure Arc resource bridge.

With smaller clusters, you need to manage more machines and connectors. You also need to have separate views and filters for every cluster. That adds management overhead.

Splitting up a cluster into much smaller chunks also brings no cost benefit when it comes to support. Because every Azure Stack HCI node and cluster that’s connected to a subscription inherits the support agreement of that subscription, you will have access to the best available support in any case.

Supportability and outages

When you need to engage with support and perform support tasks on your cluster, a large cluster has a higher risk of outages than a smaller one. For example, if you need to reconfigure the cluster because of performance issues, the entire workload on the large cluster may be impacted, and you could bring down all of these workloads by accident. You may even struggle to perform those tasks at all because of the high impact on your production environment.

When using smaller clusters, however, you can evacuate the cluster where you need to perform the support tasks. That minimizes the overall risk of downtime for your various workloads. Here, small clusters have a major advantage over large clusters.

Stretched clustering

If you want to mix workloads and use solutions like Kubernetes or Azure Virtual Desktop, then you need to use a stretched cluster deployment. This is best for disaster recovery as you get automatic failover to restore your production environment.

Here, a small cluster deployment would make more sense as you can deploy workloads that do not support stretched clustering on a regular cluster. Other workloads that need that kind of disaster and failover solution could be deployed within a stretched cluster.

Choosing the best solution for your Azure Stack HCI deployment

Depending on your needs and budget for an Azure Stack HCI deployment, you may decide to go with either large or smaller clusters. It often comes down to pricing and the type of workload deployed on the clusters.

Personally, and given a sufficient budget, I prefer to use smaller clusters instead of one larger cluster. In general, I have found that the management advantages of a large cluster do not outweigh the overall benefits of smaller clusters.