What is Microsoft’s Storage Spaces Direct?

Microsoft started discussing a new feature of Windows Server 2016 called Storage Spaces Direct at Microsoft Ignite 2015. I’ll explain this new technology in detail, along with steps on how you will be able to use it when Windows Server 2016 is released.

Windows Server 2012 R2 Scale-Out File Server

Windows Server 2012 and Windows Server 2012 R2 introduced us to an improved new form of Software-Defined Storage from Microsoft called a Scale-Out File Server (SOFS). Typically used with Hyper-V, the SOFS is a tier of storage, separated from the compute tier (Hyper-V). SOFS is typically made up of:

- A set of clustered file servers that make up a transparent failover file server cluster. The compute tier connects to the SOFS using SMB 3.0 networking. The virtual machine files are stored in file shares that reside on physical disks in the SOFS.

- One or more JBODs that each SOFS cluster node is connected to using SAS cables. Storage Spaces is used to aggregate the SAS disks of the JBODs. Virtual disks are created from the aggregated disks, providing resiliency against disk or enclosure failure, as well as enabling SSD/HDD tiered storage and performance-enhancing write-back caching.

When implemented correctly, a SOFS is a fantastic way to deploy small and affordable clustered storage, and it’s also a great way to deploy huge amounts of Hyper-V storage for public or private cloud installations. Some experiences, however, were not so good:

- Some JBOD/disk manufacturers are better than others

- There is cost and complexity to the SAS tier

- Disk support is limited to SAS HDDs and SSDs

Microsoft has invested a lot of effort to develop a new way to deliver storage that is more scalable, easier to deploy, and offers more disk options. This has resulted in a new architecture called Storage Spaces Direct (S2D).

The Concept of Storage Spaces Direct

We are probably a year away from the release of Windows Server 2016, so a lot of what is written in this article is subject to change. However, the concept of S2D has been well explained by Microsoft. Instead of having a SOFS that is made up of clustered servers and a SAS network, S2D is a cluster that’s made up of servers with direct-attached storage (DAS). The supported disks include the SAS HDDs and SSDs of the past, but S2D also supports:

- SATA disks: HDDs and SSDs can be very affordable

- NVMe: Non-Volatile Memory Express disks are PCIe-connected SSDs that offer huge amounts of IOPS; one model I checked out offers up to 122,000 IOPS.

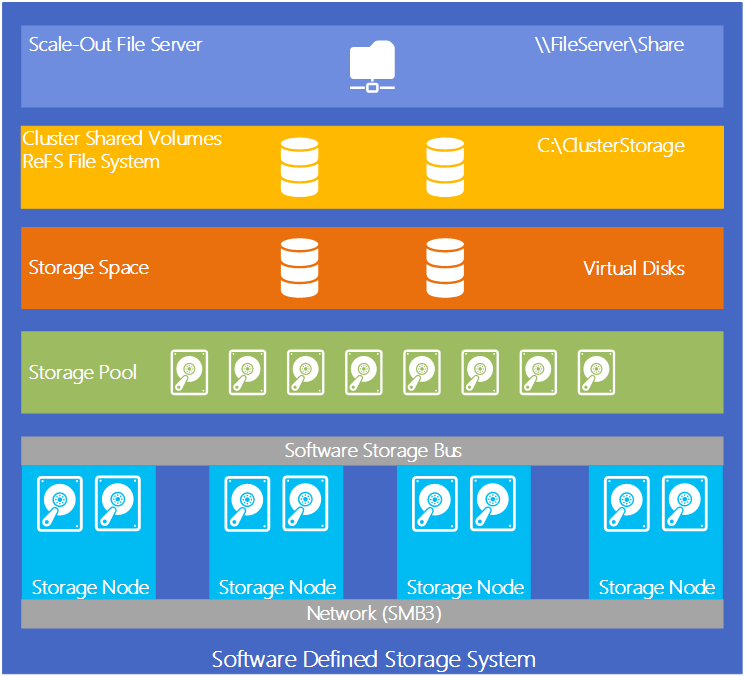

The conceptual architecture of S2D looks like this:

- At least four servers each supply DAS disks that will be pooled using Storage Spaces. Up to 240 disks can be in a single pool, shared across up to 12 servers. The servers are clustered.

- A Software Storage Bus replaces the SAS layer of a shared-JBOD SOFS. This bus uses SMB 3.0 networking with RDMA (SMB Direct) between the S2D cluster nodes for communications.

- Storage Spaces aggregates the disks into a pool.

- One or more virtual disks (LUNs) are created from the pool, and a resiliency model is chosen.

- The virtual disks are formatted with ReFS and converted into cluster shared volumes (CSVs), making them active across the entire cluster.

- One of the two deployment choices is employed.

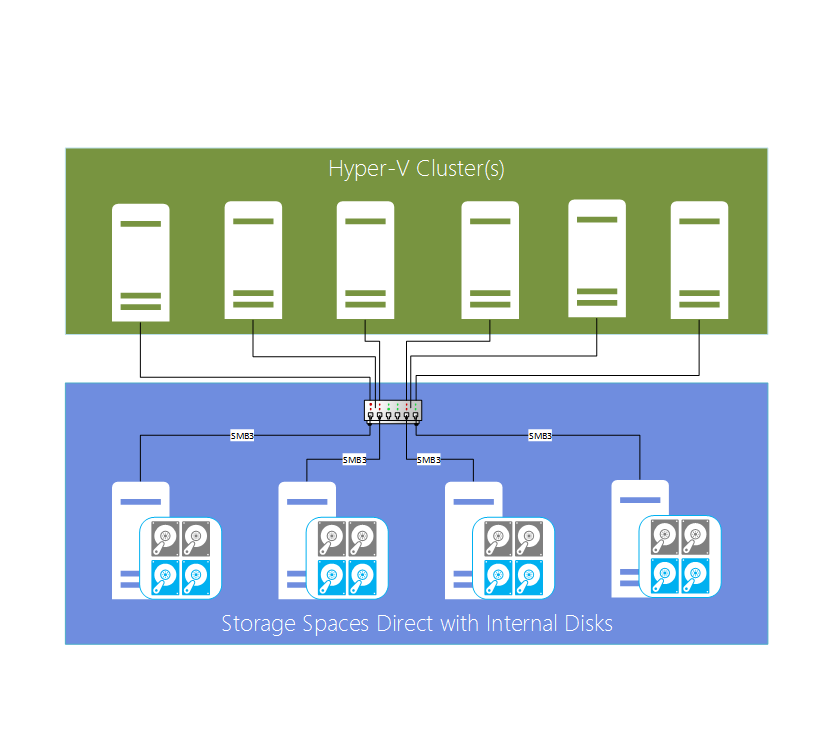
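To make the pooling numbers above concrete, here is a minimal planning sketch in Python. It is not a Microsoft tool or API: the `Node` class and both helper functions are hypothetical names I made up for illustration. The limits are the ones quoted in this article, and the capacity estimate simply divides raw capacity by the number of mirror copies, which ignores reserve space, metadata, and cache devices.

```python
from dataclasses import dataclass
from typing import List

# Limits as quoted in this article for the pre-release product;
# they are subject to change before Windows Server 2016 ships.
MIN_SERVERS = 4
MAX_SERVERS = 12
MAX_POOL_DISKS = 240


@dataclass
class Node:
    """Hypothetical description of one S2D server and its DAS disks."""
    name: str
    disk_sizes_tb: List[float]  # capacity of each local disk, in TB


def validate_pool(nodes: List[Node]) -> List[str]:
    """Return a list of problems; an empty list means the layout fits the quoted limits."""
    problems = []
    if len(nodes) < MIN_SERVERS:
        problems.append(f"need at least {MIN_SERVERS} servers, got {len(nodes)}")
    if len(nodes) > MAX_SERVERS:
        problems.append(f"at most {MAX_SERVERS} servers allowed, got {len(nodes)}")
    total_disks = sum(len(n.disk_sizes_tb) for n in nodes)
    if total_disks > MAX_POOL_DISKS:
        problems.append(f"at most {MAX_POOL_DISKS} disks per pool, got {total_disks}")
    return problems


def usable_capacity_tb(nodes: List[Node], copies: int) -> float:
    """Rough usable capacity of an N-way mirror: raw capacity / number of copies."""
    raw = sum(sum(n.disk_sizes_tb) for n in nodes)
    return raw / copies


if __name__ == "__main__":
    cluster = [Node(f"node{i}", [4.0] * 10) for i in range(1, 5)]
    print(validate_pool(cluster))                    # problems found, if any
    print(round(usable_capacity_tb(cluster, 3), 1))  # estimate for a three-way mirror
```

Treat the output as a back-of-the-envelope figure only; real pools set aside capacity that this sketch does not model.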

Converged Architecture for Private Clouds

Microsoft originally envisioned S2D as being a new way to deploy scalable and cost-effective storage for private clouds. Stated differently, the intention is to use S2D as an SMB 3.0 connected storage tier (SOFS) for Hyper-V hosts and clusters. File shares are created on the S2D virtual disks and the Hyper-V hosts store their virtual machine files on these shares, which means that the extents making up the files are actually stored in a fault-tolerant manner across the servers of the S2D cluster.

Hyper-Converged Architecture for Small and Mid-Sized Businesses

You might have read an article that I wrote late last year where I discussed Microsoft’s view on hyper-convergence, as it was publicly stated at TechEd Europe 2014. Microsoft believed that hyper-convergence limited scalability. However, Microsoft has realized that they can use software (S2D) to create a hyper-converged solution for small to mid-sized businesses. In this design:

- A cluster is created from servers, each supplying DAS storage

- S2D is deployed on this cluster to the point of creating CSVs

- Hyper-V is enabled on each node in the S2D cluster

- Virtual machines are stored on the CSVs, meaning that the extents that make up the files are stored in a fault-tolerant manner across each node of the S2D cluster

There is no SMB loopback in this deployment model. Virtual machine files are stored on the CSVs that each host is mounting. Under the covers, the extents of the files are scattered all over the cluster members, and a virtual machine could be running on one node while CSV ownership is on another, which is why fast, low-latency networking is required.

Fault Tolerance

There are several layers of fault tolerance in S2D:

- Storage Spaces virtual disks: You choose the resilience of a virtual disk at the time of creation. If you choose three-way mirroring, which is commonly used in larger installations, then the 1 GB extents that make up each file are stored on three different servers. If a disk fails, then there is automated recovery to the remaining disks.

- S2D nodes: If one of the servers is permanently lost, then data is available via mirroring on the other nodes. A manual task is done to rebalance the data from the lost node to the remaining nodes. This process is not automated because servers are more likely to be offline for a short amount of time due to maintenance or a crash. If a server recovers, then an automated resync occurs to bring its storage back up to date.

- ReFS: One of the benefits of ReFS is that it offers many layers to protect against data and metadata corruption via checksums and backups. Bit rot can be prevented using these mechanisms.

Note that recovery actions run at a lower priority so that the performance of foreground tasks, such as running virtual machines, is not impacted.
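The three-way mirroring idea can be sketched in a few lines of Python. Microsoft has not described the actual placement algorithm, so the round-robin scheme below is purely my assumption, and `place_extents` and `surviving_copies` are hypothetical helper names. The only property the sketch tries to show is the one described above: each extent lives on three different servers, so losing a server still leaves copies elsewhere.

```python
from typing import Dict, List, Set


def place_extents(num_extents: int, servers: List[str], copies: int = 3) -> Dict[int, List[str]]:
    """Assign each extent to `copies` distinct servers (simple round-robin, an assumption)."""
    if copies > len(servers):
        raise ValueError("need at least as many servers as mirror copies")
    placement = {}
    for extent in range(num_extents):
        # Consecutive offsets modulo the server count are always distinct
        # as long as copies <= len(servers).
        placement[extent] = [servers[(extent + k) % len(servers)] for k in range(copies)]
    return placement


def surviving_copies(placement: Dict[int, List[str]], failed: Set[str]) -> Dict[int, List[str]]:
    """Return, per extent, the servers that still hold a copy after `failed` servers are lost."""
    return {
        extent: [s for s in holders if s not in failed]
        for extent, holders in placement.items()
    }


if __name__ == "__main__":
    servers = ["node1", "node2", "node3", "node4"]
    layout = place_extents(8, servers)
    after_failure = surviving_copies(layout, {"node1"})
    # Fewest remaining copies of any extent after losing one server.
    print(min(len(h) for h in after_failure.values()))
```

With four servers and three copies, every extent keeps at least two copies after a single server failure, which is what allows data to stay available while the node is repaired or rebalanced away.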

Performance

SMB Direct (RDMA) is required to network the nodes of an S2D cluster. RDMA provides:

- High bandwidth

- Low latency

- Minimized CPU utilization

One might question the cost of something like 40 Gbps RoCE v2, but this cost will be more than absorbed by the low cost of a storage system that is using SATA disks. This form of networking also allows hosts to make the most of SSD or NVMe disks.

Storage performance is enabled with tiered storage. A heat map tracks the popularity of data and moves hot data to the NVMe/SSD tier and colder data to the HDD tier. This offers the affordable scalability of SATA HDDs at the lower end and the speed of NVMe at the higher end, thus targeting spending in a very granular way. A write-back cache is enabled in tiered virtual disks to absorb spikes in write activity.
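As a rough illustration of heat-map tiering, the Python sketch below counts accesses per extent and nominates the most popular extents for the fast tier. This is not how Storage Spaces is implemented internally (the article does not go into that); `plan_tiers`, the access log format, and the fixed slot count are all assumptions I chose to keep the example small.

```python
from collections import Counter
from typing import Hashable, Iterable, List, Tuple


def plan_tiers(access_log: Iterable[Hashable], fast_tier_slots: int) -> Tuple[List, List]:
    """Split extents into (hot, cold) lists based on how often each was accessed.

    access_log      -- one entry per I/O, naming the extent that was touched
    fast_tier_slots -- how many extents fit on the NVMe/SSD tier (assumed fixed)
    """
    heat = Counter(access_log)  # the "heat map": extent -> access count
    hot = [extent for extent, _ in heat.most_common(fast_tier_slots)]
    hot_set = set(hot)
    cold = [extent for extent in heat if extent not in hot_set]
    return hot, cold


if __name__ == "__main__":
    log = ["a", "b", "a", "c", "a", "b", "d"]
    hot, cold = plan_tiers(log, fast_tier_slots=2)
    print("fast tier:", hot)
    print("HDD tier:", cold)
```

In this toy log, extents "a" and "b" are touched most often, so they are the ones nominated for the fast tier, while "c" and "d" stay on HDD.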

WS2016 ReFS also offers improved performance:

- The zeroing out that occurs when creating fixed VHD/X files or extending dynamic VHD/X files has been turned into a near-instant metadata operation.

- The merging of checkpoints (formerly known as snapshots) is a zero-data-move operation that improves the performance of file-based backups.

Scaling Out and In

It is easy to add capacity to an S2D cluster. Simply add a new server with DAS storage to the cluster and rebalance the extents across each node; note that this is a low-priority IO task. You can also remove a server from an S2D cluster.
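To picture what rebalancing after a scale-out means, here is a small Python sketch that moves extents from the busiest existing node to a newly added one until the counts are even. It only tracks extent counts per node and deliberately ignores the rule that mirror copies must sit on different servers; `rebalance` is a hypothetical function of my own, not anything exposed by Windows Server.

```python
from typing import Dict, List, Tuple


def rebalance(load: Dict[str, int], new_node: str) -> Tuple[Dict[str, int], List[Tuple[str, str]]]:
    """Even out extent counts after adding `new_node`.

    load -- mapping of existing node name to the number of extents it holds
    Returns the new mapping and the list of (source, destination) moves.
    """
    balanced = dict(load)  # work on a copy; leave the caller's data untouched
    balanced[new_node] = 0
    moves = []
    while True:
        busiest = max(balanced, key=balanced.get)
        # Stop once the new node is within one extent of the busiest node.
        if balanced[busiest] - balanced[new_node] <= 1:
            break
        balanced[busiest] -= 1
        balanced[new_node] += 1
        moves.append((busiest, new_node))
    return balanced, moves


if __name__ == "__main__":
    before = {"node1": 30, "node2": 30, "node3": 30, "node4": 30}
    after, moves = rebalance(before, "node5")
    print(after)
    print(len(moves), "extent moves")
```

Each entry in `moves` stands for one extent copy travelling over the cluster network, which is why running the job as low-priority IO matters for the virtual machines already in production.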

A few companies have invested in Storage Spaces with shared JBODs, and those that picked their hardware wisely realized great savings with awesome performance. Microsoft continues to invest in that architecture, but S2D in combination with SMB 3.0 networking offers a new way to deploy interesting designs, both for large-scale environments and small to mid-sized organizations.