When you run virtualized workloads on an Azure Stack HCI cluster, you may want to load balance the applications running on it to optimize server utilization. There are several built-in options for doing that, but they can be quite limited depending on your usage scenario. In this article, I’ll discuss the options you have for implementing Azure Stack HCI load balancing, along with the benefits and drawbacks of each.

Using an external load balancer with Azure Stack HCI

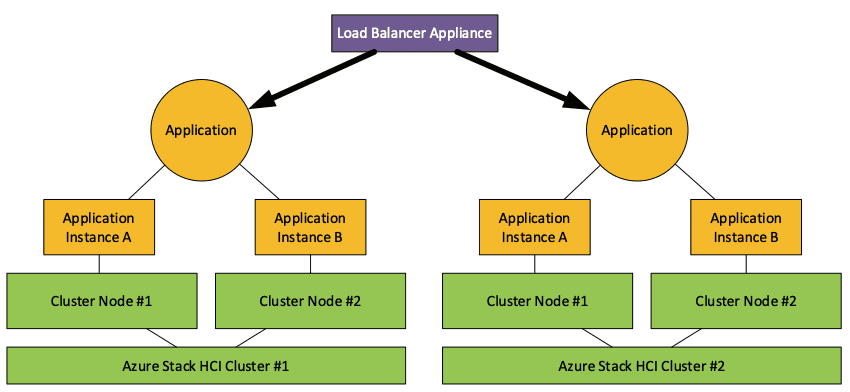

Using external load balancer appliances with your Azure Stack HCI cluster is a classic way to evenly distribute incoming traffic across your resources. These appliances can be either hardware-based or virtualized.

The purpose of these appliances is to load balance your applications and forward traffic to the fabric infrastructure and into the virtualization environment. You can load balance applications running in different clusters and in different locations, and the accompanying diagram shows how such an infrastructure works.

Using external load balancers with your Azure Stack HCI cluster can improve the overall reliability and availability of your infrastructure. However, this solution also comes with some drawbacks: you’ll always need some external components, and you’ll also need to make additional investments in hardware and/or licenses.

External load balancers can also be cloud-based services such as Azure Traffic Manager, which is a DNS-based traffic load balancer. Azure Load Balancer, a fully managed layer-4 load balancing service, is another alternative.

However, cloud-based load balancers that sit in the data path are rather inefficient for load balancing traffic that stays inside your data center, because that traffic has to travel up to the cloud and back to your infrastructure. Cloud-based load balancers are much more efficient for load balancing geo-distributed workloads.
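To make the DNS-based approach more concrete, here is a minimal Python sketch of weighted endpoint selection. It only illustrates the general idea that a DNS-based load balancer answers a lookup with one endpoint name, chosen in proportion to a weight. The endpoint names, the weights, and the `resolve` and `simulate` helpers are all hypothetical; this is not the Azure Traffic Manager API.

```python
import random
from collections import Counter

# Hypothetical endpoints and weights, invented for this illustration.
ENDPOINTS = {
    "hci-cluster-a.example.com": 3,
    "hci-cluster-b.example.com": 1,
}


def resolve(endpoints: dict[str, int], rng: random.Random) -> str:
    """Answer one lookup with an endpoint name, chosen in proportion to its weight."""
    names = list(endpoints)
    weights = [endpoints[name] for name in names]
    return rng.choices(names, weights=weights, k=1)[0]


def simulate(lookups: int, seed: int = 0) -> Counter:
    """Run a number of lookups and count how often each endpoint is returned."""
    rng = random.Random(seed)
    return Counter(resolve(ENDPOINTS, rng) for _ in range(lookups))


if __name__ == "__main__":
    # With weights 3:1, roughly three quarters of the lookups go to cluster A.
    print(simulate(1000))
```

In this sketch the load balancer only answers the lookup; the client then talks to the returned endpoint itself.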

Using a virtual load balancer on Azure Stack HCI

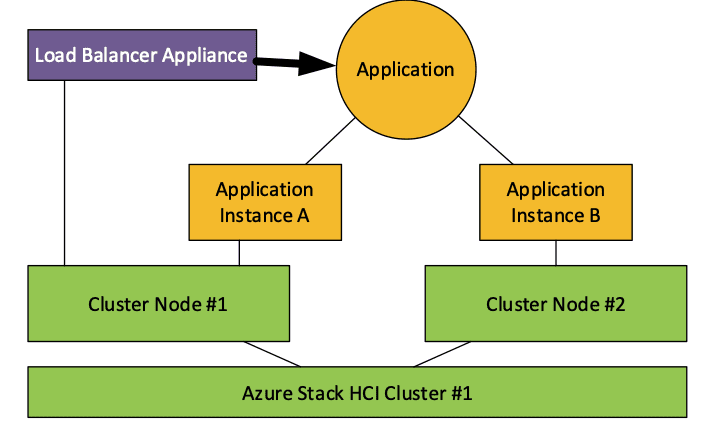

Another feasible load balancer option for Azure Stack HCI is to use network virtual appliances, also known as Network Function Virtualization (NFV) appliances. These appliances run inside a virtual machine hosted on Azure Stack HCI and provide the same experience as external virtual appliances.

Having a virtual load balancer on Azure Stack HCI offers a good “everything in one box” solution. However, there is still one caveat you need to consider: if your cluster goes down, so will your load balancer.

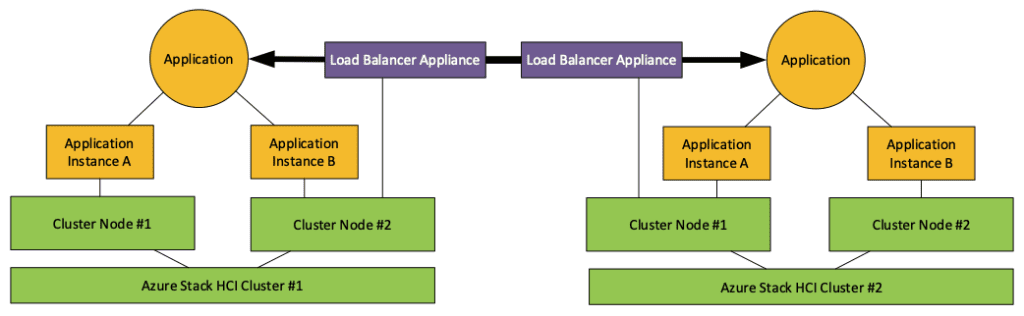

In the end, the best way to deploy virtual load balancers on Azure Stack HCI is to use them as multi-cluster load balancers that distribute your applications across different clusters.

With such a solution, you will improve the reliability and overall availability of your workloads. However, this isn’t an “everything in one box” solution and you may need to invest in additional hardware to implement it.
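The reliability benefit of a multi-cluster setup can be sketched in a few lines of Python. The example below is a simplified, hypothetical round-robin balancer that skips clusters marked as unhealthy. The class name, the cluster names, and the way health is tracked are assumptions made for illustration; a real appliance would run its own health probes.

```python
from itertools import cycle


class MultiClusterBalancer:
    """Round-robin over clusters, skipping any that are marked as down."""

    def __init__(self, clusters: list[str]) -> None:
        self._clusters = list(clusters)
        self._healthy = set(self._clusters)
        self._ring = cycle(self._clusters)

    def mark_down(self, cluster: str) -> None:
        self._healthy.discard(cluster)

    def mark_up(self, cluster: str) -> None:
        if cluster in self._clusters:
            self._healthy.add(cluster)

    def next_cluster(self) -> str:
        if not self._healthy:
            raise RuntimeError("no healthy cluster available")
        # One full pass over the ring is enough to reach a healthy cluster.
        for _ in range(len(self._clusters)):
            candidate = next(self._ring)
            if candidate in self._healthy:
                return candidate
        raise RuntimeError("no healthy cluster available")


if __name__ == "__main__":
    lb = MultiClusterBalancer(["cluster-a", "cluster-b"])
    print([lb.next_cluster() for _ in range(4)])
    lb.mark_down("cluster-a")  # simulate an outage of one cluster
    print([lb.next_cluster() for _ in range(3)])
```

When one cluster is marked down, requests keep flowing to the remaining one, which is the behavior a single-cluster virtual load balancer cannot offer.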

Using Software-Defined Networking with Azure Stack HCI

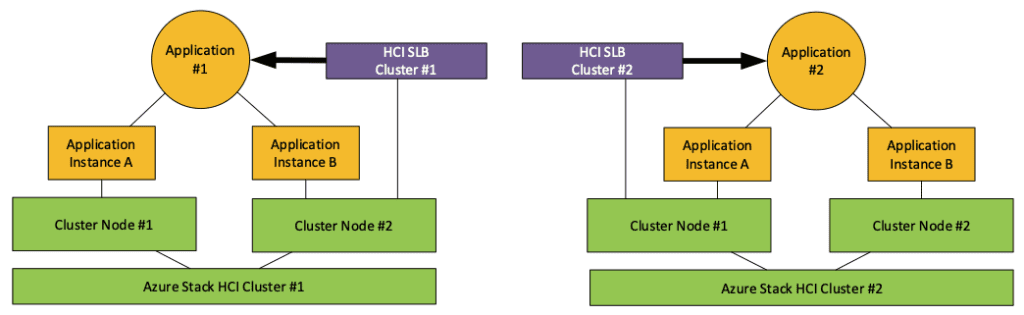

Software-Defined Networking (SDN) in Azure Stack HCI allows organizations to centrally manage network services and load balancing in their data center. This solution also offers a Software Load Balancer (SLB) to distribute network traffic across virtual network resources.
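As a rough illustration of what distributing traffic across virtual network resources can look like, the Python sketch below maps a network flow to one of several backend addresses by hashing the flow’s 5-tuple. This is a generic technique and an assumption on my part; it is not a description of how the SLB in Azure Stack HCI is implemented. The addresses and the `pick_backend` function are hypothetical.

```python
import hashlib

# A flow is (source IP, source port, destination IP, destination port, protocol).
Flow = tuple[str, int, str, int, str]


def pick_backend(flow: Flow, backends: list[str]) -> str:
    """Map a flow to a backend so that the same flow always lands on the same one."""
    if not backends:
        raise ValueError("at least one backend is required")
    key = "|".join(str(part) for part in flow).encode()
    digest = hashlib.sha256(key).digest()
    index = int.from_bytes(digest[:8], "big") % len(backends)
    return backends[index]


if __name__ == "__main__":
    # Hypothetical backend addresses behind a single front-end address.
    backends = ["10.0.0.4", "10.0.0.5", "10.0.0.6"]
    flow = ("203.0.113.10", 50123, "198.51.100.1", 443, "tcp")
    print(pick_backend(flow, backends))
```

Hashing the whole 5-tuple keeps all packets of one connection on the same backend while spreading different connections across the pool.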

Using a Software Load Balancer for Software-Defined Networking can help to improve availability and scalability for your workloads. However, it does add another layer of complexity and requires additional resources for Network Controller, the server role used to manage your virtual network infrastructure.

Using Software-Defined Networking with Azure Stack HCI also comes with some limitations. First, multitenancy for virtual local area networks (VLANs) isn’t supported by the Network Controller feature. Moreover, as the accompanying diagram shows, the Software Load Balancer for SDN is bound to one cluster, so there is no option to load balance applications across different clusters.

Application-dependent load balancing options

Some applications come with an integrated load balancing mechanism. A good example is Kubernetes: the container orchestration tool offers load distribution, the most basic type of load balancing, out of the box.

When load balancing is built into an application, you should benefit from a combination of reliability and performance optimization. Of course, the caveat here is that not every application has an integrated load balancer mechanism.
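For the Kubernetes example, the built-in load distribution is typically expressed as a Service object that spreads traffic across all Pods matching a label selector. The Python sketch below builds such a manifest as a dictionary and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. The service name, label, and port numbers are hypothetical placeholders.

```python
import json


def build_service(name: str, app_label: str, port: int, target_port: int,
                  service_type: str = "ClusterIP") -> dict:
    """Build a Kubernetes Service manifest that targets Pods labeled app=<app_label>."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": service_type,
            "selector": {"app": app_label},
            "ports": [
                {"protocol": "TCP", "port": port, "targetPort": target_port},
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical values; adjust them to match your own Deployment's labels and ports.
    manifest = build_service("web", "web", port=80, target_port=8080)
    print(json.dumps(manifest, indent=2))
```

Passing `service_type="LoadBalancer"` asks the cluster to expose the Service externally, which only works if the environment provides a load balancer implementation for Kubernetes.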

Personally, I prefer to deploy cluster-independent load balancing solutions. Here are the main benefits of that option:

- You can more easily adapt to changes regarding (geo)redundancy requirements.

- You can reduce fabric complexity.

- Application owners can adjust load balancing depending on workload requirements.

- You can leverage other technologies and cloud services to make application owners less reliant on specific environments.

- You can add your applications to another cluster when you run out of resources on your original Azure Stack HCI cluster.

Conclusion

Now that you understand the different ways to implement Azure Stack HCI load balancing described in this article, you should be able to find the solution that best fits the needs of your organization. And even though Azure Stack HCI is a rather new cloud-integrated product, it’s just another hypervisor, and using it shouldn’t result in more complexity for your environment.

Lastly, you should also aim to keep your applications as independent as possible from fabric, location, or cloud environments. That way, you’ll avoid the risk of being locked into a certain technology or ecosystem.