An Introduction to Parallel PowerShell Processing

Because we use PowerShell to manage things in the enterprise, we want to be as efficient as possible. Looking for ways to eke out more performance is important, especially as our tasks scale. What you do for 10 Active Directory user accounts might be different from what you do for 10,000 accounts. And before I get too far into this, understand that calling native .NET class methods and properties in PowerShell is usually faster than using their cmdlet counterparts.

If you want true performance, you could go a step further and write your own C# application. If you have the skills to work with .NET directly or to write that application, you probably aren’t reading this article. I am also of the opinion that if your task is that performance sensitive, PowerShell may not be the right tool for the job. There is always going to be a little overhead when using PowerShell cmdlets, but that’s the trade-off we accept for ease of use. My plan is to look at a variety of techniques for doing something in parallel. This article is an extension of PowerShell Management at Scale.

For my scenario, I want to use some Active Directory cmdlets and search for items in parallel. I’m testing from a Windows 8.1 domain member desktop. My test network is probably more resource constrained than yours, so your results might vary.

I want to use Get-ADUser to retrieve the user accounts for a list of names.

$names = 'jfrost','adeco','jeff','rgbiv','ashowers','mflowers'

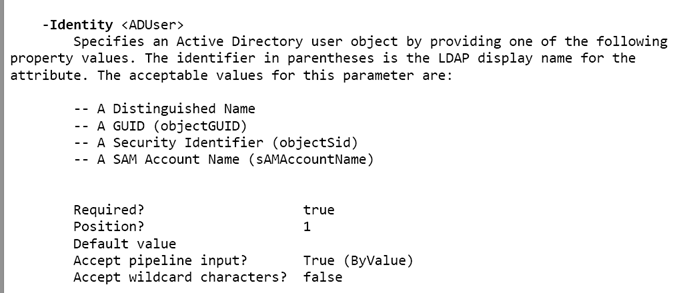

If you look at help for Get-ADUser, you’ll see that the -identity parameter does not accept an array of items.

So a command like this will fail.

Get-ADUser -Identity $names
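If the full help is more than you need, the same facts can be read from the command metadata. This is a minimal sketch: `Get-ParameterSummary` is a hypothetical helper name, not part of the ActiveDirectory module, and it relies only on `Get-Command` and the standard parameter metadata. It is shown against Get-ChildItem so it runs anywhere.

```powershell
# Hypothetical helper: summarize the type and pipeline support of one parameter.
function Get-ParameterSummary {
    param(
        [Parameter(Mandatory)][string]$Command,
        [Parameter(Mandatory)][string]$Parameter
    )
    # ParameterMetadata for the requested parameter
    $meta = (Get-Command -Name $Command).Parameters[$Parameter]
    # One ParameterAttribute exists per parameter set
    $attrs = $meta.Attributes |
        Where-Object { $_ -is [System.Management.Automation.ParameterAttribute] }
    [pscustomobject]@{
        Name              = $meta.Name
        Type              = $meta.ParameterType.FullName
        AcceptsArray      = $meta.ParameterType.IsArray
        ValueFromPipeline = [bool]($attrs | Where-Object ValueFromPipeline)
    }
}

# Works for any command. With the ActiveDirectory module available you could run:
# Get-ParameterSummary -Command Get-ADUser -Parameter Identity
Get-ParameterSummary -Command Get-ChildItem -Parameter Path
```

For a parameter like -Identity, an AcceptsArray value of False alongside a ValueFromPipeline value of True would match what the help describes.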

We can see that -Identity accepts pipeline input, however, so the names can be piped to Get-ADUser instead. Timing that pipeline with Measure-Command took 311 ms, with the ActiveDirectory module already loaded. Alternatively, I could use a foreach enumeration and loop through each name.

foreach ($name in $names) {

Get-ADUser -identity $name

}

This took 113 ms for me. I know we’re not talking much of a difference, but my set of names is small. Still, you can see that even a simple pipeline involves some overhead.
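Timings this small can swing from one run to the next, so it may be worth averaging a few runs before comparing techniques. The following is a minimal sketch of that idea. `Measure-Average` is a hypothetical helper, not a built-in cmdlet, and the default of five runs is an arbitrary assumption.

```powershell
# Hypothetical helper: run a scriptblock several times with Measure-Command
# and return the average elapsed time in milliseconds.
function Measure-Average {
    param(
        [Parameter(Mandatory)][scriptblock]$ScriptBlock,
        [int]$Count = 5
    )
    $runs = foreach ($i in 1..$Count) {
        (Measure-Command -Expression $ScriptBlock).TotalMilliseconds
    }
    ($runs | Measure-Object -Average).Average
}

# Usage with the sample names (requires the ActiveDirectory module):
# Measure-Average { $names | Get-ADUser }
# Measure-Average { foreach ($name in $names) { Get-ADUser -Identity $name } }
```

The first run of either command may include one-time costs such as connection setup, so the average will not remove every source of noise.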

Let’s up the ante, and test with a list of 1,000 names.

Measure-Command {

$a=@()

$a+= $list | Get-ADUser

}

This took 4.53 seconds using the pipeline.

Measure-Command {

$a=@()

foreach ($name in $list) {

$a+= Get-ADUser -identity $name

}

}

This took a tad longer at 5.34 seconds, but that’s not too far off from our previous technique. Now that we’ve tried using the cmdlet a few ways, let’s see about wrapping it up in something like a workflow.

Workflow DemoAD {

Param([string[]]$Name)

foreach -parallel ($user in $name) {

Get-ADuser -Identity $user

}

}

The -parallel parameter, valid only in a workflow, will throttle at 32 items at a time by default. Let’s invoke this locally and measure how long it takes to complete.

measure-command { $x = demoad $list}

Workflows also incur an overhead price. This took almost seven minutes to complete! In this situation, a workflow is probably not a good idea.

But, I was running the workflow locally, and it still had to make multiple network connections to the domain controller. Perhaps I’ll get better results invoking the workflow on the domain controller.

measure-command { $y = demoad $list -PSComputerName chi-dc04}

That’s better. That took 1 minute 50 seconds. My guess is that running on the domain controller knocks out a lot of network overhead. Since running this remotely appears to be an improvement, let’s try with remoting.

I already know that using a PSSession will improve performance, so let’s jump right to that.

$sess = New-pssession -ComputerName chi-dc04

There are a few ways we could run this in parallel.

Measure-Command {

$z = @()

foreach ($name in $list) {

$z += Invoke-Command { Get-ADUser -Identity $using:name } -Session $sess -HideComputerName

}

}

This wasn’t too bad: the process took 24 seconds. Remember, though, that each result is deserialized on its way back across the wire, and that this is essentially still getting the user accounts sequentially. Since I started out with good results piping the list of names to Get-ADUser, let’s see if running it in the PSSession is any better.

Measure-Command {

$z = Invoke-Command -ScriptBlock {

param([string[]]$names)

$names | Get-ADUser

} -Session $sess -HideComputerName -ArgumentList @(,$list)

}

This completed all 1,001 names in 4.42 seconds. As a side note, you may be wondering why I didn’t simply pipe the list to Get-ADUser inside the scriptblock with a $using: reference, like this.

$using:list | Get-ADUser

It appears that PowerShell doesn’t like doing that unless the variable is being used as an explicit parameter value. I had to resort to a parameterized scriptblock and pass $list as a parameter value. Due to another quirk with Invoke-Command, I had to wrap the -ArgumentList value in an outer array, even though it is the only argument.

When I tried using the following line of code:

-ArgumentList @($list)

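Only the first name from $list reached the $names parameter. Invoke-Command treats each element of -ArgumentList as a separate positional argument, so without the outer array the first name binds to $names and the rest are left over.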
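The difference is easy to reproduce without a remote session, because Invoke-Command also accepts -ArgumentList when it runs a scriptblock locally. Here is a minimal sketch, using a shortened copy of the sample names and a scriptblock (the `$countNames` variable is just an illustration) that counts what its parameter received.

```powershell
$list = 'jfrost', 'adeco', 'jeff'

# Reports how many names were bound to the $names parameter
$countNames = { param([string[]]$names) $names.Count }

# @($list) is still the three-element list, so each name is a separate argument
$flat = Invoke-Command -ScriptBlock $countNames -ArgumentList @($list)

# @(,$list) is a one-element array whose only element is the whole list
$wrapped = Invoke-Command -ScriptBlock $countNames -ArgumentList @(,$list)

"flat: $flat, wrapped: $wrapped"
# flat: 1, wrapped: 3
```

The leading comma is what does the work here: it builds a one-element array that contains the list, so the list arrives as a single argument.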

What did we learn? In terms of doing something in parallel, just about all of the techniques were no better than using the pipeline and letting Get-ADUser do its thing. That’s not to say every cmdlet will give the same result. Active Directory is probably optimized for finding single user accounts by identity. Filtering might be a different story. Next time, we’ll look at Get-ADComputer and see what is involved in searching multiple locations in parallel. More than likely, if we get good results there, we can apply the same techniques to Get-ADUser.