Hashing it Out in PowerShell: Using Get-FileHash

PowerShell 4.0 introduced a new cmdlet, Get-FileHash, primarily for use with Desired State Configuration (DSC). In a pull server configuration, you need to provide file hashes so that servers can recognize changes. As far as I am concerned, that is the primary purpose of a file hash: verifying file integrity. Windows supports several different hashing algorithms, which you should not confuse with encryption.

All a hashing algorithm does is calculate a hash value, also usually referred to as a checksum. If the file changes in any way, even with the addition or removal of a single character, then the next time the hash is calculated it will be different.
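
To make that concrete, here is a minimal sketch that hashes a throwaway file, makes a tiny change, and hashes it again. The temp file name is something I made up for illustration; nothing here touches your real data.

```powershell
# Create a throwaway file in the temp folder (hypothetical name, for illustration only)
$demo = Join-Path ([System.IO.Path]::GetTempPath()) "hashdemo-$([guid]::NewGuid()).txt"
Set-Content -Path $demo -Value 'The quick brown fox'

# Hash the original contents with the default algorithm
$before = Get-FileHash -Path $demo

# Append one short line containing a single character, then hash again
Add-Content -Path $demo -Value '!'
$after = Get-FileHash -Path $demo

# Show both hashes side by side and whether they differ
[pscustomobject]@{
    Before  = $before.Hash
    After   = $after.Hash
    Changed = $before.Hash -ne $after.Hash
}

# Clean up the throwaway file
Remove-Item -Path $demo
```

The two hash strings should bear no obvious resemblance to each other, even though the edit was tiny.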

The hash can’t tell you what changed, only that the current version of the file is different than the original based on the hash. Let’s look at some ways of using file hashes in PowerShell, outside of DSC.

To create a hash, all you need is a file.

get-filehash C:\work\x.zip

The default hashing algorithm is SHA256, but you can use any of these:

- SHA1

- SHA256

- SHA384

- SHA512

- MACTripleDES

- MD5

- RIPEMD160

I’m not going to explain each algorithm, as I don’t think it really matters. All we are doing is calculating a file hash, and as long as you use the same algorithm to compare two files, I don’t think it makes a difference. For the most part, all of these perform reasonably well, especially on small files. Once you start getting into gigabyte-size files, you might notice a difference. In fact, I put together a simple performance testing script.

#requires -version 4.0

#compare hashing performance

[cmdletbinding()]

Param(

[Parameter(Position=0,ValueFromPipeline,ValueFromPipelineByPropertyName)]

[ValidateScript({Test-Path $_})]

[Alias("PSPath")]

[string]$Path = 'C:\scripts\worksample.xml')

Process {

Write-verbose "Testing hashing with $(Convert-Path $Path)"

$filesize = (Get-item $path).Length

$algorithms = "SHA1","SHA256","SHA384","SHA512","MACTripleDES","MD5","RIPEMD160"

foreach ($item in $algorithms) {

[pscustomobject]@{

Algorithm = $item

HashTime = Measure-Command { Get-FileHash -Path $Path -Algorithm $item}

FileSize = $filesize

}

} #foreach

} #process

My script takes a file as input and measures how long it takes to generate a hash using each algorithm.

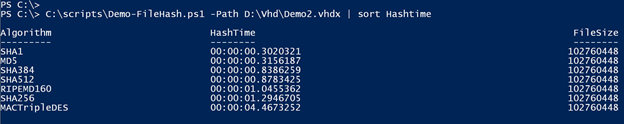

C:\scripts\Demo-FileHash.ps1 -Path D:\Vhd\Demo2.vhdx | sort Hashtime

Even on smaller files you will most likely see hashing performance like this. The default SHA256 definitely requires a bit of time to calculate. Personally, I see no reason not to use MD5 for file hashes. It is fast and ubiquitous.

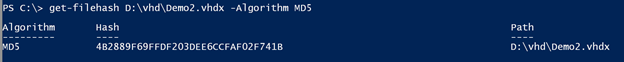

get-filehash D:\vhd\Demo2.vhdx -Algorithm MD5

If you want to use this as a default, add it to $PSDefaultParameterValues.

$PSDefaultParameterValues.add("Get-FileHash:Algorithm","MD5")

You would insert this command into your PowerShell profile script. Now Get-FileHash will use this algorithm by default.

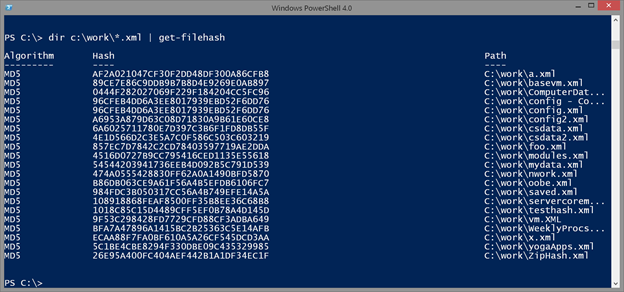

dir c:\work\*.xml | get-filehash
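
One thing to watch for: the Add() method throws an error if the key already exists, which can happen if your profile gets dot-sourced a second time. A small sketch of an alternative, assuming you are happy to overwrite any earlier setting, is to assign through the indexer instead. The temp file is only there so the example runs on its own.

```powershell
# Indexer assignment adds the key or overwrites it, so it is safe to run more than once
$PSDefaultParameterValues['Get-FileHash:Algorithm'] = 'MD5'
$PSDefaultParameterValues['Get-FileHash:Algorithm'] = 'MD5'

# Use an empty temp file to see which algorithm is picked up
$tmp = [System.IO.Path]::GetTempFileName()

# No -Algorithm specified, so the default parameter value applies
$defaultAlgo = (Get-FileHash -Path $tmp).Algorithm

# An explicit -Algorithm still wins over the default
$explicitAlgo = (Get-FileHash -Path $tmp -Algorithm SHA256).Algorithm

[pscustomobject]@{ Default = $defaultAlgo; Explicit = $explicitAlgo }

Remove-Item -Path $tmp
```

If you later want the built-in default back, $PSDefaultParameterValues.Remove("Get-FileHash:Algorithm") undoes the setting.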

Next, let’s see how you might use file hashes. First, I want to calculate file hashes for a group of files and export the results to XML.

dir c:\scripts\*.zip | Get-FileHash -Algorithm MD5 | Export-Clixml -Path C:\work\ZipHash.xml

I prefer to keep hash information in a separate directory on the off chance that a bad actor might try to fudge the original file hashes. At some later date, I import the XML file to access the original file hashes.

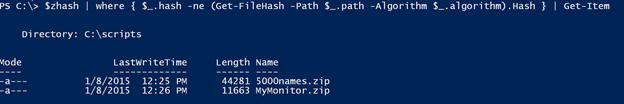

$zHash = Import-Clixml -Path c:\work\ziphash.xml

At some point after the original file hashes were generated, some of the zip files change. I can use the information from the XML file to compare the stored file hash against a new version, making sure to use the same algorithm. Any files that don’t match are piped to Get-Item at the end of the pipeline.

$zhash | where { $_.hash -ne (Get-FileHash -Path $_.path -Algorithm $_.algorithm).Hash } | Get-Item

If the file still exists, it will be displayed.

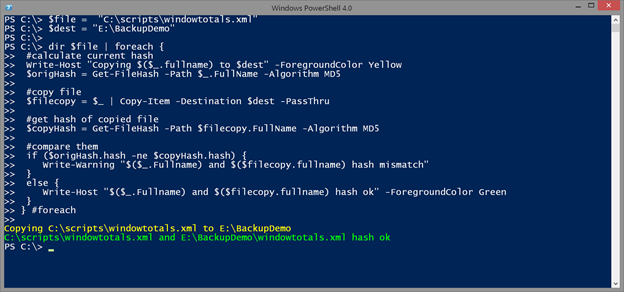
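
The one-liner lumps deleted files and modified files together, and a deleted file surfaces as an error. If you want a tidier report, something along these lines might do. It is only a sketch: the sandbox folder, the file names, and the Status labels are all my own invention for the demo.

```powershell
# Build a small sandbox so the sketch is self-contained (paths are illustrative)
$root = Join-Path ([System.IO.Path]::GetTempPath()) "hashbaseline-$([guid]::NewGuid())"
New-Item -Path $root -ItemType Directory | Out-Null
'one','two','three' | ForEach-Object { Set-Content -Path (Join-Path $root "$_.txt") -Value $_ }

# Save a baseline of hashes to XML
$baseline = Join-Path ([System.IO.Path]::GetTempPath()) "baseline-$([guid]::NewGuid()).xml"
Get-ChildItem -Path $root -Filter *.txt | Get-FileHash -Algorithm MD5 | Export-Clixml -Path $baseline

# Simulate drift: change one file and delete another
Add-Content -Path (Join-Path $root 'two.txt') -Value 'edited'
Remove-Item -Path (Join-Path $root 'three.txt')

# Re-check every baseline entry and label the outcome
$report = Import-Clixml -Path $baseline | ForEach-Object {
    if (-not (Test-Path -LiteralPath $_.Path)) {
        $status = 'Missing'
    }
    elseif ((Get-FileHash -LiteralPath $_.Path -Algorithm $_.Algorithm).Hash -ne $_.Hash) {
        $status = 'Changed'
    }
    else {
        $status = 'OK'
    }
    [pscustomobject]@{ Status = $status; Path = $_.Path }
}
$report

# Clean up the sandbox
Remove-Item -Path $root -Recurse
Remove-Item -Path $baseline
```

Because the stored objects carry their own Algorithm property, the comparison reuses whatever algorithm the baseline was created with.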

Next, let’s see how we might use this cmdlet with copying files. If the file hash between the original file and the copy is different, then something happened during the copy process and the file is most likely corrupt. Here’s how you might copy a single file.

$file = "C:\scripts\windowtotals.xml"

$dest = "E:\BackupDemo"

dir $file | foreach {

#calculate current hash

Write-Host "Copying $($_.fullname) to $dest" -ForegroundColor Yellow

$origHash = Get-FileHash -Path $_.FullName -Algorithm MD5

#copy file

$filecopy = $_ | Copy-Item -Destination $dest -PassThru

#get hash of copied file

$copyHash = Get-FileHash -Path $filecopy.FullName -Algorithm MD5

#compare them

if ($origHash.hash -ne $copyHash.hash) {

Write-Warning "$($_.Fullname) and $($filecopy.fullname) hash mismatch"

}

else {

Write-Host "$($_.Fullname) and $($filecopy.fullname) hash ok" -ForegroundColor Green

}

} #foreach

The only reason I’m using Write-Host is to make it clear what I’m doing and if there is a problem copying the file.

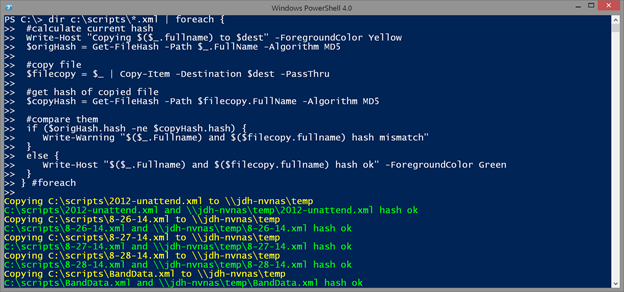

This same technique would work for multiple files as well.

$dest = "\\jdh-nvnas\temp"

dir c:\scripts\*.xml | foreach {

The only thing I changed was the destination and the files to be copied. I used my NAS with the hope that there might be a network hiccup and cause a problem, but the copy still worked.

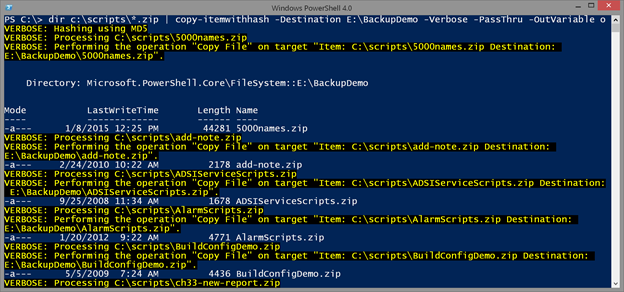
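
If you would rather get objects back than colored text, the same copy-then-compare steps can be wrapped in a small function. This is a rough sketch and not the proxy function that follows; Copy-FileVerified and its parameter names are hypothetical, and the temp folders exist only so the example runs by itself.

```powershell
function Copy-FileVerified {
    # Hypothetical helper: copies each file and reports whether source and copy hashes agree
    [CmdletBinding()]
    param(
        [Parameter(Mandatory, ValueFromPipeline)]
        [System.IO.FileInfo]$File,
        [Parameter(Mandatory)]
        [string]$Destination,
        [string]$Algorithm = 'MD5'
    )
    process {
        # Hash the source, copy it, then hash the copy
        $source = Get-FileHash -LiteralPath $File.FullName -Algorithm $Algorithm
        $copy   = Copy-Item -LiteralPath $File.FullName -Destination $Destination -PassThru
        $target = Get-FileHash -LiteralPath $copy.FullName -Algorithm $Algorithm
        [pscustomobject]@{
            Source = $File.FullName
            Copy   = $copy.FullName
            Match  = $source.Hash -eq $target.Hash
        }
    }
}

# Self-contained demo using temp folders (names are illustrative)
$src = Join-Path ([System.IO.Path]::GetTempPath()) "src-$([guid]::NewGuid())"
$dst = Join-Path ([System.IO.Path]::GetTempPath()) "dst-$([guid]::NewGuid())"
New-Item -Path $src, $dst -ItemType Directory | Out-Null
1..3 | ForEach-Object { Set-Content -Path (Join-Path $src "file$_.xml") -Value "<n>$_</n>" }

# Copy everything, keep the results, and list only the failures (if any)
$results = Get-ChildItem -Path $src -Filter *.xml | Copy-FileVerified -Destination $dst
$results | Where-Object { -not $_.Match }

Remove-Item -Path $src, $dst -Recurse
```

Returning objects means you can filter, export, or retry the mismatches later instead of reading them off the screen.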

The last thing I thought I would try is to create a proxy function for Copy-Item. My version is essentially Copy-Item, but it also calculates a file hash for each file before it is copied and after. If the file hashes don’t match, PowerShell will throw an exception.

#requires -version 4.0

Function Copy-ItemWithHash {

<#

.Synopsis

Copy file with hash

.Description

This is a proxy function to Copy-Item that will include hashes for the original file and copy. New properties will be added to the copied file, OriginalHash and CopyHash. The default hashing algorithm is MD5.

.Notes

Last Updated: 1/8/2015

.Example

PS C:\> dir *.zip | copy-itemwithhash -Destination E:\BackupDemo -Verbose -PassThru -ov o

VERBOSE: Processing C:\Scripts\5000names.zip

VERBOSE: Performing the operation "Copy File" on target "Item: C:\Scripts\5000names.zip Destination: E:\BackupDemo\5000names.zip".

Copy-ItemwithHash : File hash mismatch between C:\Scripts\5000names.zip and E:\BackupDemo\5000names.zip

At line:1 char:13

+ dir *.zip | Copy-ItemwithHash -Destination E:\BackupDemo -Verbose -PassThru -ov o

+ ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

+ CategoryInfo : InvalidResult: (C:\Scripts\5000names.zip:String) [Write-Error], Hash mismatch

+ FullyQualifiedErrorId : Microsoft.PowerShell.Commands.WriteErrorException,Copy-ItemWithHash

VERBOSE: Processing C:\Scripts\add-note.zip

VERBOSE: Performing the operation "Copy File" on target "Item: C:\Scripts\add-note.zip Destination: E:\BackupDemo\add-note.zip".

Directory: E:\BackupDemo

Mode LastWriteTime Length Name

---- ------------- ------ ----

-a--- 2/24/2010 10:22 AM 2178 add-note.zip

VERBOSE: Processing C:\Scripts\ADSIServiceScripts.zip

VERBOSE: Performing the operation "Copy File" on target "Item: C:\Scripts\ADSIServiceScripts.zip Destination: E:\BackupDemo\ADSIServiceScripts.zip".

Copy-ItemwithHash : File hash mismatch between C:\Scripts\ADSIServiceScripts.zip and E:\BackupDemo\ADSIServiceScripts.zip

At line:1 char:13

+ dir *.zip | Copy-ItemwithHash -Destination E:\BackupDemo -Verbose -PassThru -ov o

+ ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

+ CategoryInfo : InvalidResult: (C:\Scripts\ADSIServiceScripts.zip:String) [Write-Error], Hash mismatch

+ FullyQualifiedErrorId : Microsoft.PowerShell.Commands.WriteErrorException,Copy-ItemWithHash

...

PS C:\> $o | where {$_.originalhash -ne $_.copyhash}

Directory: E:\BackupDemo

Mode LastWriteTime Length Name

---- ------------- ------ ----

-a--- 1/2/2012 4:22 PM 3492 ch33-new-report.zip

-a--- 6/2/2010 9:55 AM 1211 CreateNames.zip

-a--- 8/5/2008 1:08 PM 124883 Demo-Database.zip

-a--- 5/7/2009 10:04 AM 11402 Display-LocalGroupMember.zip

-a--- 1/20/2010 1:22 PM 2347 DomainControllerFunctions.zip

-a--- 5/7/2010 4:27 PM 3398 Get-Certificate-v2.zip

...

The first command attempts to copy files but there are hash errors. The second command uses saved output to identify files that failed.

.Link

Copy-Item

Get-Filehash

#>

[CmdletBinding(DefaultParameterSetName='Path', SupportsShouldProcess=$true, ConfirmImpact='Medium', SupportsTransactions=$true)]

param(

[Parameter(ParameterSetName='Path', Mandatory=$true, Position=0, ValueFromPipeline=$true, ValueFromPipelineByPropertyName=$true)]

[string[]]$Path,

[Parameter(ParameterSetName='LiteralPath', Mandatory=$true, ValueFromPipelineByPropertyName=$true)]

[Alias('PSPath')]

[string[]]$LiteralPath,

[Parameter(Position=1, ValueFromPipelineByPropertyName=$true)]

[string]$Destination,

[switch]$Container,

[switch]$Force,

[string]$Filter,

[string[]]$Include,

[string[]]$Exclude,

[switch]$Recurse,

[switch]$PassThru = $True,

[Parameter(ValueFromPipelineByPropertyName=$true)]

[pscredential][System.Management.Automation.CredentialAttribute()]$Credential,

[ValidateSet("SHA1","SHA256","SHA384","SHA512","MACTripleDES","MD5","RIPEMD160")]

[string]$Algorithm = "MD5"

)

begin {

try {

$outBuffer = $null

if ($PSBoundParameters.TryGetValue('OutBuffer', [ref]$outBuffer))

{

$PSBoundParameters['OutBuffer'] = 1

}

#remove added parameter

$PSBoundParameters.Remove("Algorithm") | Out-Null

#define a scope specific variable

$script:hash = $Algorithm

Write-Verbose "Hashing using $script:hash"

$wrappedCmd = $ExecutionContext.InvokeCommand.GetCommand('Copy-Item', [System.Management.Automation.CommandTypes]::Cmdlet)

$scriptCmd = {

&$wrappedCmd @PSBoundParameters | foreach {

$_ | Add-member -MemberType NoteProperty -Name "OriginalHash" -value $pv.originalhash

$_ | Add-member -MemberType ScriptProperty -Name "CopyHash" -value {

#this is the correct value

($this | get-filehash -algorithm $script:hash).hash

<#introduce a random failure for demonstration purposes

$h = ($this | Get-FileHash -Algorithm $script:hash).hash

$r = Get-Random -Minimum 0 -Maximum 2

$h.substring($r)

#######################################################

#>

} #CopyHash value

Try {

$file = $_

if ($file.originalHash -eq $file.copyhash) {

$file

}

else {

Throw

}

} #try

Catch {

Write-Error -RecommendedAction "Repeat File Copy" -Message "File hash mismatch between $($pv.fullname) and $($file.fullname)" -TargetObject $pv.fullname -Category "InvalidResult" -CategoryActivity "File Copy" -CategoryReason "Hash mismatch"

}

} #foreach

} #scriptcmd

$steppablePipeline = $scriptCmd.GetSteppablePipeline($myInvocation.CommandOrigin)

$steppablePipeline.Begin($PSCmdlet)

} catch {

throw

}

}

process {

try {

write-Verbose "Processing $_"

#get the item and add a property for the hash

$_ | Get-item | Add-member -MemberType ScriptProperty -Name "OriginalHash" -value {($this | Get-FileHash -Algorithm $script:hash).hash} -PassThru -PipelineVariable pv |

foreach {

$steppablePipeline.Process($psitem)

}

} catch {

throw

}

}

end {

try {

$steppablePipeline.End()

} catch {

throw

}

}

} #end function Copy-ItemWithHash

#define an optional alias

Set-Alias -name ch -value Copy-ItemWithHash

This function includes the same algorithm parameter as Get-FileHash, although I set my default to MD5 for performance. I haven’t tested this function under all situations, but it seems to work for simple file copies.

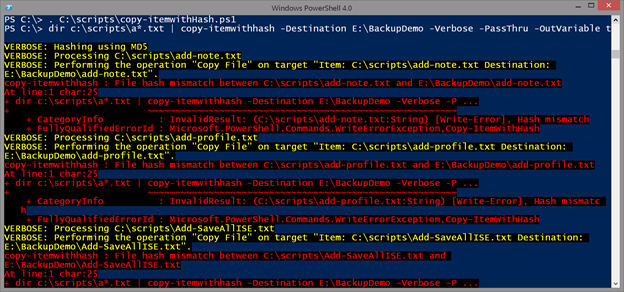

dir c:\scripts\*.zip | copy-itemwithhash -Destination E:\BackupDemo -Verbose -PassThru -OutVariable o

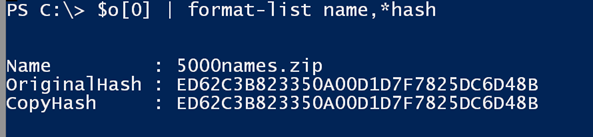

If you use -PassThru and save the results, as I’m doing with OutVariable, the resulting object will include custom properties showing the hashes of the original file and the copy.

$o[0] | format-list name,*hash
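
The function attaches those hash properties with Add-Member, using both NoteProperty and ScriptProperty members. In case the difference is unfamiliar, here is a small sketch of how the two behave. The property names SnapshotHash and LiveHash are mine and do not appear in the function.

```powershell
# Work on a temp file so the example is self-contained
$tmp = [System.IO.Path]::GetTempFileName()
Set-Content -Path $tmp -Value 'first'

$item = Get-Item -LiteralPath $tmp

# NoteProperty: the hash is computed once, right now, and stored as a fixed value
$item | Add-Member -MemberType NoteProperty -Name SnapshotHash -Value (Get-FileHash -LiteralPath $tmp -Algorithm MD5).Hash

# ScriptProperty: the script block runs every time the property is read
$item | Add-Member -MemberType ScriptProperty -Name LiveHash -Value {
    (Get-FileHash -LiteralPath $this.FullName -Algorithm MD5).Hash
}

# Before any edit the two properties agree
$sameBefore = $item.SnapshotHash -eq $item.LiveHash

# Change the file; only the ScriptProperty reflects the new contents
Set-Content -Path $tmp -Value 'second'
$sameAfter = $item.SnapshotHash -eq $item.LiveHash

[pscustomobject]@{ SameBeforeEdit = $sameBefore; SameAfterEdit = $sameAfter }

Remove-Item -Path $tmp
```

A ScriptProperty is handy when you want the current hash on demand, while a NoteProperty preserves the value as it was at a point in time.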

I included code in the function that you can enable if you want to artificially introduce hashing errors for testing purposes. Comment out this line:

($this | get-filehash -algorithm $script:hash).hash

And uncomment this:

$h = ($this | Get-FileHash -Algorithm $script:hash).hash

$r = Get-Random -Minimum 0 -Maximum 2

$h.substring($r)

Now I have some errors. Hopefully you will never see that many when copying files and comparing file hashes. But if you did, it would be a red flag that you have trouble in River City.

Be sure to take a few minutes to read the full help and examples for Get-FileHash, and if you are using it in your scripts or tasks, I’d love to read about it in the comments.