How to Copy Files with PowerShell Remoting

Copying files between computers has long been a common system administration task. In the Windows world this has usually been a no-brainer. The underlying protocols are mature and simply work. And while SMB 3.0 is a valuable improvement, I thought it might be interesting to copy files through a PowerShell remoting session.

With PowerShell remoting you have a secure, encrypted connection between two computers. It can be further protected by using SSL. Personally, I find the big benefit is that communication is done through a single port, which makes the entire process very firewall friendly. So why not use this single port connection to copy files?

Using PowerShell Remoting to Copy Files

Let’s walk through the process, and maybe you’ll learn a new thing or two about PowerShell along the way. First, let’s consider a simple text file.

$file = "C:\work\ComputerData.xml"

$content = Get-Content -Path $file

The $content variable holds the content of Computerdata.xml. We can use this variable with Invoke-Command and pass it to a remote computer.

Invoke-command -scriptblock {

Param($Content,$Path)

$content | out-file -FilePath $path -Encoding ascii

} -ArgumentList @($content,"c:\files\Computerdata.xml") -ComputerName jh-win81-ent

I have parameterized the scriptblock to accept values for file content and a file name. Values are passed with the –ArgumentList parameter. This takes the value of $content and sends it to the remote computer where it is saved to a new file. All of this is accomplished over a PSSession.
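If you want to see how the values in –ArgumentList line up with the Param() block without needing a second machine, here is a minimal local sketch. It relies on the fact that Invoke-Command without -ComputerName runs the scriptblock on the local computer. The sample lines and the temp file name are hypothetical stand-ins, not part of my original example.

```powershell
# Values in -ArgumentList bind to the Param() block by position:
# the first value becomes $Content, the second becomes $Path.
$sb = {
    Param($Content, $Path)
    $Content | Out-File -FilePath $Path -Encoding ascii
    # Return how many lines were received
    @($Content).Count
}

# Hypothetical stand-ins for the file content and the target path
$lines  = 'first line', 'second line'
$target = Join-Path -Path ([System.IO.Path]::GetTempPath()) -ChildPath 'argdemo.txt'

# Without -ComputerName the scriptblock runs locally, so no remoting is required.
# $lines stays together as the first argument; $target is the second.
$count = Invoke-Command -ScriptBlock $sb -ArgumentList @($lines, $target)
```

The array of lines travels as a single argument, which is the same reason @($content,"c:\files\Computerdata.xml") works in the remote version.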

If you are using PowerShell 3.0 or later, you can simplify this a bit and take advantage of $using.

Invoke-command -scriptblock {

Param($path)

$using:content | out-file -FilePath $path -Encoding ascii

} -ArgumentList "c:\files\Computerdata2.xml" -ComputerName jh-win81-ent

Now I can reference the variable $content, which exists only on my computer, from within the remote PowerShell session. I could have even defined the path as a local variable and passed that with $using as well. This technique works fine with text files, but it will fail to properly copy anything binary, such as a zip or exe file. For that, we need to turn to the .NET Framework and use some methods from System.IO.File.

$file = "c:\scripts\adsvw.exe"

$content = [system.io.file]::ReadAllBytes($file)

$out = "C:\files\adsvw.exe"

Invoke-command -scriptblock {

Param($path)

[system.IO.file]::WriteAllBytes($path,$using:content)

Get-Item $path

} -ArgumentList $out -ComputerName jh-win81-ent

In this example, I read the content of the exe file as an array of bytes. In the remote session, I write all of those bytes to the specified file. The scriptblock also gets the new file and writes it to the pipeline, so I see its directory listing.
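To convince yourself that the byte approach is lossless while the text approach is not, you can compare file hashes locally. This is only a sketch: the file names in the temp folder are hypothetical, and Get-FileHash assumes PowerShell 4.0 or later.

```powershell
# Build a small binary sample (every possible byte value) in the temp folder.
$temp     = [System.IO.Path]::GetTempPath()
$source   = Join-Path -Path $temp -ChildPath 'bytes-source.bin'
$byteCopy = Join-Path -Path $temp -ChildPath 'bytes-copy.bin'
$textCopy = Join-Path -Path $temp -ChildPath 'text-copy.bin'
[System.IO.File]::WriteAllBytes($source, [byte[]](0..255))

# Byte approach: read and write raw bytes, as the remote scriptblock does.
$content = [System.IO.File]::ReadAllBytes($source)
[System.IO.File]::WriteAllBytes($byteCopy, $content)

# Text approach: Get-Content and Out-File treat the data as lines of text.
Get-Content -Path $source | Out-File -FilePath $textCopy -Encoding ascii

# Compare hashes against the original file.
$sourceHash = (Get-FileHash -Path $source).Hash
$bytesMatch = $sourceHash -eq (Get-FileHash -Path $byteCopy).Hash
$textMatch  = $sourceHash -eq (Get-FileHash -Path $textCopy).Hash
```

Here $bytesMatch should be True and $textMatch should be False, because ASCII text output cannot represent every byte value.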

But you know, there’s no reason to have two separate techniques, because reading and writing bytes works for text files just as well. Now that I have the foundation of a command, it is time to turn this into a more feature-rich tool. Allow me to introduce Copy-FileToRemote.

Function Copy-FileToRemote {

<#

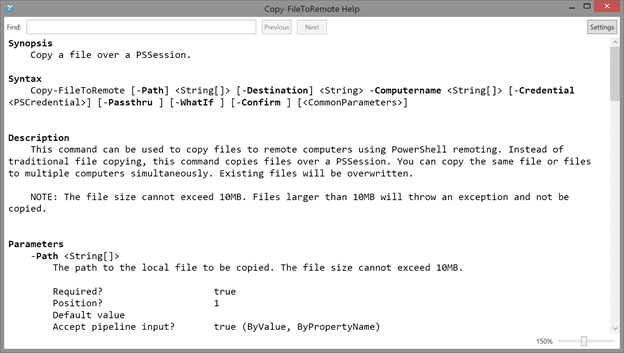

.Synopsis

Copy a file over a PSSession.

.Description

This command can be used to copy files to remote computers using PowerShell remoting. Instead of traditional file copying, this command copies files over a PSSession. You can copy the same file or files to multiple computers simultaneously. Existing files will be overwritten.

NOTE: The file size cannot exceed 10MB. Files larger than 10MB will throw an exception and not be copied.

.Parameter Path

The path to the local file to be copied. The file size cannot exceed 10MB.

.Parameter Destination

The folder path on the remote computer. The path must already exist.

.Parameter Computername

The name of remote computer. It must have PowerShell remoting enabled.

.Parameter Credential

Credentials to use for the remote connection.

.Parameter Passthru

By default this command does not write anything to the pipeline unless you use -passthru.

.Example

PS C:\> dir C:\data\mydata.xml | copy-filetoremote -destination c:\files -Computername SERVER01 -passthru

Directory: C:\files

Mode LastWriteTime Length Name PSComputerName

---- ------------- ------ ---- --------------

-a--- 10/17/2014 7:51 AM 3126008 mydata.xml SERVER01

Copy the local file C:\data\mydata.xml to C:\Files\mydata.xml on SERVER01.

.Example

PS C:\> dir c:\data\*.* | Copy-FileToRemote -destination C:\Data -computername (Get-Content c:\work\computers.txt) -passthru

Copy all files from C:\Data locally to the directory C:\Data on all of the computers listed in the text file computers.txt. Results will be written to the pipeline.

.Notes

Last Updated: October 17,2014

Version : 1.0

Learn more:

PowerShell in Depth: An Administrator's Guide (http://www.manning.com/jones6/)

PowerShell Deep Dives (http://manning.com/hicks/)

Learn PowerShell in a Month of Lunches (http://manning.com/jones3/)

Learn PowerShell Toolmaking in a Month of Lunches (http://manning.com/jones4/)

****************************************************************

* DO NOT USE IN A PRODUCTION ENVIRONMENT UNTIL YOU HAVE TESTED *

* THOROUGHLY IN A LAB ENVIRONMENT. USE AT YOUR OWN RISK. IF *

* YOU DO NOT UNDERSTAND WHAT THIS SCRIPT DOES OR HOW IT WORKS, *

* DO NOT USE IT OUTSIDE OF A SECURE, TEST SETTING. *

****************************************************************

.Link

Copy-Item

New-PSSession

#>

[CmdletBinding(DefaultParameterSetName='Path', SupportsShouldProcess=$true)]

param(

[Parameter(ParameterSetName='Path', Mandatory=$true, Position=0, ValueFromPipeline=$true, ValueFromPipelineByPropertyName=$true)]

[Alias('PSPath')]

[string[]]$Path,

[Parameter(Position=1, Mandatory=$True,

HelpMessage = "Enter the remote folder path",ValueFromPipelineByPropertyName=$true)]

[string]$Destination,

[Parameter(Mandatory=$True,HelpMessage="Enter the name of a remote computer")]

[string[]]$Computername,

[pscredential][System.Management.Automation.CredentialAttribute()]$Credential=[pscredential]::Empty,

[Switch]$Passthru

)

Begin {

Write-Verbose -Message "Starting $($MyInvocation.Mycommand)"

Write-Verbose "Bound parameters"

Write-Verbose ($PSBoundParameters | Out-String)

Write-Verbose "WhatifPreference = $WhatIfPreference"

#create PSSession to remote computer

Write-Verbose "Creating PSSessions"

$myRemoteSessions = New-PSSession -ComputerName $Computername -Credential $credential

#validate destination

Write-Verbose "Validating destination path $destination on remote computers"

foreach ($sess in $myRemoteSessions) {

if (Invoke-Command {-not (Test-Path $using:destination)} -session $sess) {

Write-Warning "Failed to verify $destination on $($sess.ComputerName)"

#always remove the session

$sess | Remove-PSSession -WhatIf:$False

}

}

#remove closed sessions from variable

$myRemoteSessions = $myRemoteSessions | where {$_.state -eq 'Opened'}

Write-Verbose ($myRemoteSessions | Out-String)

} #begin

Process {

foreach ($item in $path) {

#get the filesystem path for the item. Necessary if piping in a DIR command

$itemPath = $item | Convert-Path

#get the file contents in bytes

$content = [System.IO.File]::ReadAllBytes($itempath)

#get the name of the file from the incoming file

$filename = Split-Path -Path $itemPath -Leaf

#construct the destination file name for the remote computer

$destinationPath = Join-path -Path $Destination -ChildPath $filename

Write-Verbose "Copying $itempath to $DestinationPath"

#run the command remotely

#define a scriptblock to run remotely

$sb = {

[cmdletbinding(SupportsShouldProcess=$True)]

Param([bool]$Passthru,[bool]$WhatifPreference)

#test if path exists

if (-Not (Test-Path -Path $using:Destination)) {

#this should never be reached since we are testing in the begin block

#but just in case...

Write-Warning "[$env:computername] Can't find path $using:Destination"

#bail out

Return

}

#values for WhatIf

$target = "[$env:computername] $using:DestinationPath"

$action = 'Copy Remote File'

if ($PSCmdlet.ShouldProcess($target,$action)) {

#create the new file

[System.IO.File]::WriteAllBytes($using:DestinationPath,$using:content)

If ($passthru) {

#display the result if -Passthru

Get-Item $using:DestinationPath

}

} #if should process

} #end scriptblock

Try {

Invoke-Command -scriptblock $sb -ArgumentList @($Passthru,$WhatIfPreference) -Session $myRemoteSessions -ErrorAction Stop

}

Catch {

Write-Warning "Command failed. $($_.Exception.Message)"

}

}

} #process

End {

#remove PSSession

Write-Verbose "Removing PSSessions"

if ($myRemoteSessions) {

#always remove sessions regardless of Whatif

$myRemoteSessions | Remove-PSSession -WhatIf:$False

}

Write-Verbose -Message "Ending $($MyInvocation.Mycommand)"

} #end

} #end function

Before I explain how this works, let me mention a few caveats. First, due to restrictions with PowerShell remoting, you can’t copy any single file larger than 10MB. If you try, this command will throw an exception and the file won’t be copied. The other potential gotcha involves the PowerShell v5 preview. As I’m writing this, there is a known issue with $using if one computer is running v5 and the other is not. If both computers are running the v5 preview, there is no problem and my function works just fine. But if you are running PowerShell 4 and try to copy files to a computer running the v5 preview, you will get an error. I’m trusting that eventually this will get sorted out.
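One way to avoid the exception is to check the file size before trying to send anything. The helper below is a hypothetical sketch and is not part of Copy-FileToRemote; the name Test-FileSizeLimit and its parameters are my own invention for illustration, and the 10MB default simply mirrors the limit described above.

```powershell
# Hypothetical helper: returns $true when a file is small enough to send.
function Test-FileSizeLimit {
    param(
        [Parameter(Mandatory = $true)]
        [string]$Path,

        # Assumed limit; adjust it if your remoting endpoint is configured differently
        [long]$MaximumBytes = 10MB
    )

    $file = Get-Item -LiteralPath $Path -ErrorAction Stop

    if ($file.Length -gt $MaximumBytes) {
        Write-Warning "$($file.Name) is $($file.Length) bytes, which exceeds the limit of $MaximumBytes bytes."
        return $false
    }

    return $true
}

# Possible usage: only pass along files that fit under the limit
# dir c:\work\*.zip | Where-Object { Test-FileSizeLimit -Path $_.FullName }
```

Filtering up front means oversized files are skipped with a warning instead of failing partway through a batch.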

The function includes comment-based help.

The syntax is simple enough. Specify a local file and a folder on a remote computer. The folder must already exist. My command will copy the file to the remote folder over a PSSession. This version doesn’t handle anything like recursive copying. It simply allows you to copy C:\MyFiles\Data.xml to C:\Some\Remote\Path\Data.xml on another computer. Actually, you can copy the same file or sets of files to multiple computers simultaneously because everything is done with Invoke-Command over a PSSession! Remoting also supports alternate credentials so I threw that in as well.

I assumed you would want to run a command like this:

Dir c:\files\*.xml | copy-filetoremote -destination c:\XML -computer $MyServers

A command like this would copy all XML files from the local C:\Files folder to the folder C:\XML on all the computers in the $MyServers variable.

My function uses Begin, Process, and End script blocks. Code in the Begin script block runs once before any pipeline input is processed. In this section of the script, I create the PSSessions to the specified computers.

$myRemoteSessions = New-PSSession -ComputerName $Computername -Credential $credential

Next, I verify that the target folder exists on each computer. If it doesn’t, then I remove that session, which in essence closes the connection.

foreach ($sess in $myRemoteSessions) {

if (Invoke-Command {-not (Test-Path $using:destination)} -session $sess) {

Write-Warning "Failed to verify $destination on $($sess.ComputerName)"

#always remove the session

$sess | Remove-PSSession -WhatIf:$False

}

}

The variable $myRemoteSessions includes all of the sessions, but now some of them will be closed. The easiest way I found to remove them is to filter them out and re-define the variable.

$myRemoteSessions = $myRemoteSessions | where {$_.state -eq 'Opened'}

In the End script block, after all the files have been copied, these sessions are removed.

if ($myRemoteSessions) {

#always remove sessions regardless of Whatif

$myRemoteSessions | Remove-PSSession -WhatIf:$False

}

The Process script block includes code to handle each file. Because the path might be piped in, typed directly, or use a relative reference, I need to make sure I get a clean filesystem path, which is where Convert-Path comes into play.

foreach ($item in $path) {

$itemPath = $item | Convert-Path

…
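If you haven’t used Convert-Path much, this small local sketch shows what it does with two of those input styles. The sample file in the temp folder is a hypothetical stand-in.

```powershell
# Create a sample file in the temp folder to experiment with.
$temp   = [System.IO.Path]::GetTempPath()
$sample = Join-Path -Path $temp -ChildPath 'convert-demo.txt'
Set-Content -Path $sample -Value 'demo'

# 1. A provider-qualified path, which is what the PSPath alias picks up from DIR.
#    It looks like Microsoft.PowerShell.Core\FileSystem::C:\...
$psPath     = (Get-Item -Path $sample).PSPath
$fromPSPath = $psPath | Convert-Path

# 2. A relative path, resolved against the current location.
Push-Location -Path $temp
$fromRelative = '.\convert-demo.txt' | Convert-Path
Pop-Location

# Both inputs should resolve to the same plain filesystem path.
$expected = (Get-Item -Path $sample).FullName
```

Both $fromPSPath and $fromRelative should match $expected, which is the kind of path that [System.IO.File]::ReadAllBytes() can use.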

Next I define some local variables and split up the file path to get the file name and construct the destination name.

#get the file contents in bytes

$content = [System.IO.File]::ReadAllBytes($itempath)

#get the name of the file from the incoming file

$filename = Split-Path -Path $itemPath -Leaf

#construct the destination file name for the remote computer

$destinationPath = Join-path -Path $Destination -ChildPath $filename

These variables are used within my scriptblock, which is executed using Invoke-Command.

Invoke-Command -scriptblock $sb -ArgumentList @($Passthru,$WhatIfPreference) -Session $myRemoteSessions -ErrorAction Stop

Because I wanted to support –WhatIf and –Passthru, I had to find a way to pass those settings to the remote script block.

$sb = {

[cmdletbinding(SupportsShouldProcess=$True)]

Param([bool]$Passthru,[bool]$WhatifPreference)

…

The –ArgumentList parameter passes the local values to the remote computer.

Calling a .NET static method has no concept of –WhatIf. But because I’ve set up SupportsShouldProcess, I can define my own WhatIf handling.

$target = "[$env:computername] $using:DestinationPath"

$action = 'Copy Remote File'

if ($PSCmdlet.ShouldProcess($target,$action)) {

#create the new file

[System.IO.File]::WriteAllBytes($using:DestinationPath,$using:content)

If ($passthru) {

#display the result if -Passthru

Get-Item $using:DestinationPath

}

} #if should process
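The same ShouldProcess pattern can be tried locally, with no remoting involved. In this sketch the function name Write-DemoFile, its parameters, and the temp file are all hypothetical; only the ShouldProcess guard around WriteAllBytes() mirrors what my scriptblock does.

```powershell
# Hypothetical demo function wrapping a .NET static method with WhatIf support.
function Write-DemoFile {
    [CmdletBinding(SupportsShouldProcess = $true)]
    param(
        [Parameter(Mandatory = $true)]
        [string]$Path,

        [byte[]]$Content = [byte[]](1, 2, 3)
    )

    $target = "[$env:COMPUTERNAME] $Path"
    $action = 'Write Demo File'

    # WriteAllBytes() knows nothing about -WhatIf, so guard it explicitly.
    if ($PSCmdlet.ShouldProcess($target, $action)) {
        [System.IO.File]::WriteAllBytes($Path, $Content)
    }
}

$demo = Join-Path -Path ([System.IO.Path]::GetTempPath()) -ChildPath 'whatif-demo.bin'
if (Test-Path -Path $demo) { Remove-Item -Path $demo }

# With -WhatIf only a "What if:" message is shown and no file is created.
Write-DemoFile -Path $demo -WhatIf
$existsAfterWhatIf = Test-Path -Path $demo

# Without -WhatIf the file is written.
Write-DemoFile -Path $demo
$existsAfterRun = Test-Path -Path $demo
```

After this runs, $existsAfterWhatIf should be False and $existsAfterRun should be True.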

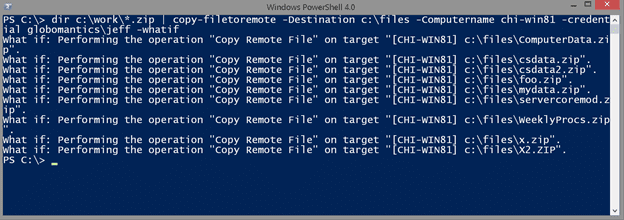

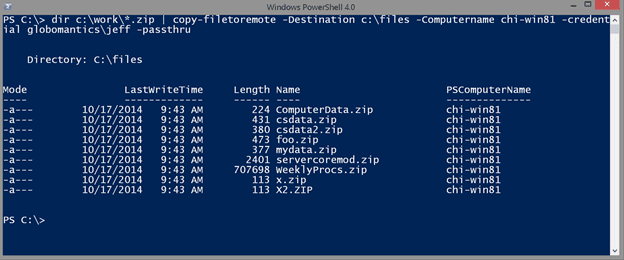

Now, I can run a command like this:

dir c:\work\*.zip | copy-filetoremote -Destination c:\files -Computername chi-win81 -credential globomantics\jeff -whatif

If that looks good, I can rerun the command without –Whatif. Note that I won’t see any results unless I use –Passthru.

I could just as easily have copied the files to 10 computers.

What do you think? Is something like this useful? What features do you think a future version should include? As with any PowerShell scripts you find on the Internet, please be sure to review and test in a non-production environment.
