Windows Server 2012: SMB 3.0 and the Scale-Out File Server

In this article, we will explain the building blocks of the Windows Server 2012 and Windows Server 2012 R2 SMB 3.0 Scale-Out File Server. You should read our previous article, “Windows Server 2012 SMB 3.0 File Shares: An Overview,” to understand the role of SMB 3.0 in storing application data, such as SQL Server database files and Hyper-V virtual machines.

What Is a Scale-Out File Server (SOFS)?

It is clear that while Microsoft remains committed to the ongoing development of and support for traditional block storage, it sees SMB 3.0 as a way to reduce the expense and increase the performance of one of the biggest cost centers in the data center. Once legacy concerns about SMB 3.0 performance have been put to rest, the question of high availability comes up: you don’t want a single file server to be a single point of failure when your entire business relies on it. The traditional active/passive file server will not suffice, because its failover process is too slow.

In Windows Server 2012, Microsoft gave us a new storage architecture called the Scale-Out File Server (SOFS), which is (deep breath required) a scalable and continuously available storage platform with transparent failover. Users of block storage will wonder what this new alien concept is. Actually, it’s not that different from a SAN.

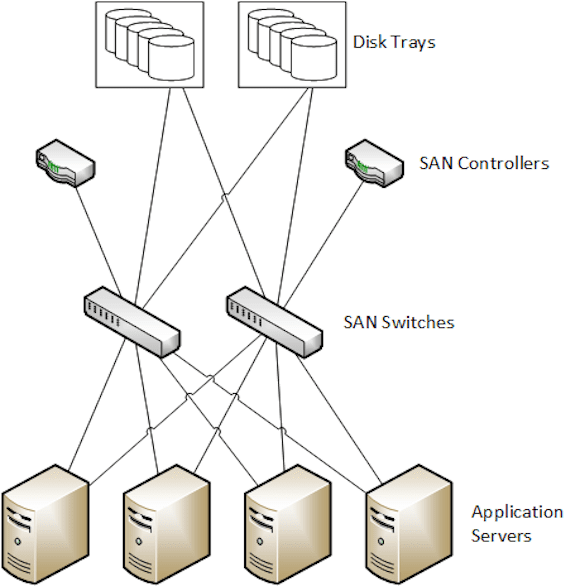

In the case of a SAN, there are four major components or layers:

- Disk trays

- Switches

- Controllers

- Application servers

SAN: Disk Trays

The disks of the SAN are inserted into one or more disk trays. Disks are aggregated into pools or groups. Logical units (LUNs) or virtual disks are created from these disk pools, and RAID is optionally configured to provide disk fault tolerance for each LUN. The SAN manufacturer usually requires you to use disks running special firmware that they have created. While this can provide higher quality, it substantially increases the price of these failure-prone devices over their original cost. SANs can offer tiered storage, where slices of data (subsets of files) are automatically promoted from cheaper, slower storage to expensive, faster storage, and vice versa, depending on demand. This gives a great balance between performance and capacity. The disk tray can be seen as a single point of failure – this is why some SAN solutions also offer a form of RAID across disk trays.
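Demand-based tiering can be illustrated with a minimal sketch. It assumes a deliberately simple policy: the volume is divided into fixed-size slices, each slice's I/O count is measured over a window, and the hottest slices are kept on the fast tier while everything else is demoted. Real SAN and Storage Spaces tiering engines use their own vendor-specific heuristics and schedules; `Slice` and `retier` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Slice:
    """A fixed-size chunk of a volume (hypothetical model)."""
    slice_id: int
    accesses: int      # I/O count seen during the last measurement window
    tier: str = "hdd"  # "ssd" (fast, expensive) or "hdd" (slow, cheap)


def retier(slices, fast_tier_capacity):
    """Keep the hottest slices on the fast tier and demote the rest.

    Assumed policy: rank every slice by access count and promote the top
    `fast_tier_capacity` slices; real tiering engines use their own heuristics.
    """
    ranked = sorted(slices, key=lambda s: s.accesses, reverse=True)
    for rank, s in enumerate(ranked):
        s.tier = "ssd" if rank < fast_tier_capacity else "hdd"
    return slices


if __name__ == "__main__":
    volume = [Slice(0, 5), Slice(1, 900), Slice(2, 40), Slice(3, 1200)]
    retier(volume, fast_tier_capacity=2)
    print([(s.slice_id, s.tier) for s in volume])
    # [(0, 'hdd'), (1, 'ssd'), (2, 'hdd'), (3, 'ssd')]
```

Running `retier` again after the next measurement window moves slices in both directions, which is the "and vice versa" part of the behavior described above.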

SAN: Switches

The entire SAN is connected together by two or more switches, such as Fibre Channel switches. Ideally, the switches are redundant (for network path fault tolerance) and dedicated (for performance). The switches connect the entire SAN solution together, forming the storage area network.

SAN: Controller

The controller is an appliance that is actually a very specialized form of server. It is responsible for storage orchestration, mapping logical units to servers, and management, and it provides caching. The controller is also a very expensive part of the SAN. Basic SAN models are limited to two controllers, while higher-end storage systems can expand beyond two.
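The controller's job of mapping logical units to servers (often called LUN masking) can be pictured as a lookup table from server identifiers to the LUNs each server is allowed to see. The sketch below is a hypothetical model, not any vendor's interface; `LunMaskingTable` and the host names are illustrative only.

```python
class LunMaskingTable:
    """Hypothetical model of a controller's LUN-to-server mapping."""

    def __init__(self):
        # initiator identifier (e.g. a WWPN or iSCSI IQN) -> set of visible LUNs
        self._visible = {}

    def map_lun(self, initiator, lun):
        self._visible.setdefault(initiator, set()).add(lun)

    def unmap_lun(self, initiator, lun):
        self._visible.get(initiator, set()).discard(lun)

    def luns_for(self, initiator):
        """LUNs presented to this initiator; unknown servers see nothing."""
        return sorted(self._visible.get(initiator, set()))


if __name__ == "__main__":
    table = LunMaskingTable()
    table.map_lun("host-a", 0)
    table.map_lun("host-a", 1)
    table.map_lun("host-b", 1)  # LUN 1 is shared, e.g. for a cluster
    print(table.luns_for("host-a"))  # [0, 1]
    print(table.luns_for("host-c"))  # []
```

Defaulting to "no LUNs visible" for an unknown server is the safe design choice: a newly attached host cannot accidentally write to storage that belongs to another system.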

SAN: Application Servers

The application servers will have at least one connection to each switch, and typically these are dedicated connections, such as a network card for iSCSI or a host bus adapter for SAS or Fibre Channel. Multipath IO (MPIO) is installed and configured on the application servers; this software is normally provided by the SAN manufacturer. The role of MPIO is to aggregate the servers’ connections to the switches and to provide transparent redundancy. The application servers access data that is stored in LUNs on the disk tray via the SAN controllers.
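How MPIO aggregates paths while hiding failures can be sketched with round robin, one of several load-balancing policies an MPIO module may offer. This is a simplified model under that assumption; `MultipathDevice` and the path labels are hypothetical and do not correspond to a real driver API.

```python
import itertools


class MultipathDevice:
    """Round-robin I/O across healthy paths, skipping failed ones.

    A simplified model of one MPIO load-balancing policy; path names
    are hypothetical labels, not real device identifiers.
    """

    def __init__(self, paths):
        self._paths = list(paths)
        self._healthy = {p: True for p in self._paths}
        self._cycle = itertools.cycle(self._paths)

    def mark_failed(self, path):
        self._healthy[path] = False

    def mark_restored(self, path):
        self._healthy[path] = True

    def next_path(self):
        # Look at each path at most once per call so a total outage is detected.
        for _ in range(len(self._paths)):
            path = next(self._cycle)
            if self._healthy[path]:
                return path
        raise OSError("all paths to the LUN have failed")


if __name__ == "__main__":
    dev = MultipathDevice(["hba1-switch-a", "hba2-switch-b"])
    print([dev.next_path() for _ in range(4)])  # alternates between both paths
    dev.mark_failed("hba1-switch-a")
    print([dev.next_path() for _ in range(3)])  # only hba2-switch-b is used
```

The application issuing I/O never sees which path was chosen, which is what makes the redundancy transparent.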

Summarizing the SAN

The SAN has become the de facto standard for bulk storage in the medium-to-large enterprise. Solutions provide impressive scalability, relatively centralized (per SAN footprint) LUN management, great redundancy, and easier backup. But is this the right solution? The cost per gigabyte of SAN storage is extremely high. The amount of data that we are generating and being forced to retain is escalating. And we do have to ask two very serious questions:

- Do we need to use a SAN?

- If some SAN functionality is required (such as LUN-to-LUN replication), do we need to store all, most, or just some of our data on this expensive storage?

The Scale-Out File Server

Before Windows Server 2012, deploying systems with resilient and scalable storage meant deploying a SAN. Microsoft realized that this was becoming an issue for small and large businesses, as well as for hosting companies, due to the price per GB and the vendor lock-in that this solution entails. Microsoft included multiple features in WS2012 that, when used together, give us software-defined storage that is more economical, more scalable, more flexible, and easier to deploy than a traditional SAN.

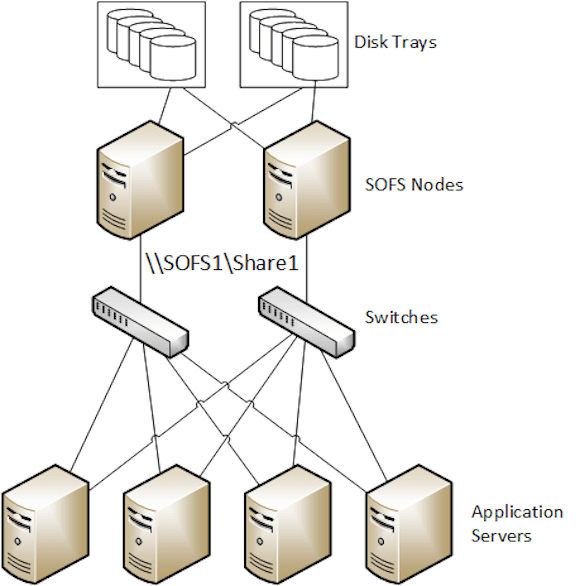

One example of this is the Scale-Out File Server (SOFS). This new architecture is different from a SAN, but it won’t be alien to storage engineers, because most of the roles are still there in a different form:

- JBOD trays

- Clustered servers + Scale-Out File Server clustered role

- Switches

- Application servers

JBOD Trays

The most commonly explained implementation of a SOFS uses SAS-attached JBOD trays as the shared storage. These are very economical and scalable units of storage that typically have a hardware compatibility list covering SAS controllers and disks from many manufacturers.

Instead of using hardware RAID, Windows Server Storage Spaces is used. A storage pool is created to aggregate disks, and virtual disks with different kinds of fault tolerance can be created from that pool. Virtual disks can be made fault tolerant across multiple JBOD trays. WS2012 R2 adds tiered storage across SSDs and HDDs, and it uses the high IOPS of SSDs to provide a persistent write-back cache that applications such as Hyper-V can use. This means that a JBOD with Storage Spaces can be cheaper than SAN disk trays while offering most of the functionality that we want for enterprise-level performance.
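Making a mirrored virtual disk fault tolerant across JBOD trays comes down to never placing two copies of the same data in the same tray, so losing a whole tray still leaves a copy. The sketch below shows that placement rule plus the basic capacity cost of an n-way mirror. It is a simplified, hypothetical allocator (`Disk`, `place_mirror_extent`, and `usable_capacity_gb` are illustrative names); the real Storage Spaces allocator, its enclosure-awareness rules, and its metadata overhead are more involved.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Disk:
    name: str
    enclosure: str  # which JBOD tray the disk sits in
    free_gb: int


def place_mirror_extent(disks, copies, extent_gb):
    """Pick one disk per mirror copy so that no two copies share a JBOD tray.

    Simplified, hypothetical allocator: prefers disks with the most free space
    and raises ValueError if there are too few trays with room for the extent.
    """
    chosen, used_trays = [], set()
    for disk in sorted(disks, key=lambda d: d.free_gb, reverse=True):
        if disk.enclosure in used_trays or disk.free_gb < extent_gb:
            continue
        chosen.append(disk)
        used_trays.add(disk.enclosure)
        if len(chosen) == copies:
            return chosen
    raise ValueError("not enough JBOD trays with free space for this mirror")


def usable_capacity_gb(raw_gb, copies):
    """An n-way mirror stores every byte n times (metadata overhead ignored)."""
    return raw_gb // copies


if __name__ == "__main__":
    pool = [Disk("d1", "jbod1", 500), Disk("d2", "jbod1", 900),
            Disk("d3", "jbod2", 700), Disk("d4", "jbod3", 300)]
    print([d.name for d in place_mirror_extent(pool, copies=2, extent_gb=1)])  # ['d2', 'd3']
    print([d.name for d in place_mirror_extent(pool, copies=3, extent_gb=1)])  # ['d2', 'd3', 'd4']
    print(usable_capacity_gb(2400, copies=2))  # 1200
```

Note that `d1` is skipped for the three-way mirror even though it has more free space than `d4`, because it sits in the same tray as `d2`.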

SOFS Switches

The application servers will communicate with the storage of the SOFS via two redundant switches. The SOFS is based on WS2012, which means a wide variety of networking is supported:

- 1 GbE

- 10 GbE

- iWARP: 10 Gbps Remote Direct Memory Access (RDMA – low CPU impact & high speed offloaded networking)

- RoCE: 10/40 Gbps RDMA

- InfiniBand: 56 Gbps RDMA

The switches can be dedicated, but it is more likely that they will be used for several roles, including the private networks of a cluster. This especially applies to Windows Server 2012 R2 Hyper-V, where Live Migration can make use of RDMA networking. That means an investment in 10 GbE or faster networking can offer more than fast storage: WS2012 R2 can use RDMA networking for CSV redirected IO and SMB Live Migration.

Any brand of switch can be used in a SOFS implementation. This avoids the vendor lock-in that one associates with a SAN, provides the customer with choice, and makes the price more competitive.

SOFS: Application Servers

The application servers will use SMB 3.0 to access the SOFS through the switches. SMB 3.0 provides:

- SMB Multichannel: A feature that requires no configuration and allows an SMB client to communicate with an SMB server via more than one NIC. This MPIO replacement requires no input from a hardware vendor and provides scalable, automatically fault-tolerant networking straight out of the box.

- SMB Direct: Using RDMA enabled NICs, the SMB client can stream data to/from an SMB server with high speed and with much lower utilization of the processor.
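The way SMB Multichannel spreads traffic across NICs, and falls back when one fails, can be sketched as an interface-selection step. This sketch assumes a simplified preference order (RDMA-capable NICs first, then RSS-capable NICs, then faster link speed) and uses every healthy NIC in the best class; it approximates the behavior described above rather than reproducing Windows' actual logic. `Nic` and `select_interfaces` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Nic:
    name: str
    speed_gbps: int
    rdma: bool = False
    rss: bool = False
    up: bool = True


def _capability(nic):
    # Assumed ranking: RDMA beats RSS beats plain NICs; then faster links win.
    return (nic.rdma, nic.rss, nic.speed_gbps)


def select_interfaces(nics):
    """Return every healthy NIC in the best capability class.

    SMB traffic would be spread across all returned NICs; if one goes down,
    calling this again yields the survivors (or falls back to a lesser class).
    """
    healthy = [n for n in nics if n.up]
    if not healthy:
        return []
    best = max(_capability(n) for n in healthy)
    return [n for n in healthy if _capability(n) == best]


if __name__ == "__main__":
    nics = [Nic("mgmt", 1),
            Nic("smb1", 10, rdma=True, rss=True),
            Nic("smb2", 10, rdma=True, rss=True)]
    print([n.name for n in select_interfaces(nics)])  # ['smb1', 'smb2']
    degraded = [nics[0], Nic("smb1", 10, rdma=True, rss=True, up=False), nics[2]]
    print([n.name for n in select_interfaces(degraded)])  # ['smb2']
```

Because selection is recomputed from whatever NICs are healthy, no vendor-supplied multipathing software is needed for either aggregation or failover.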

SMB 3.0 is supported by WS2012 (and later) Hyper-V, IIS, and SQL Server. The simplicity and speed of SMB 3.0, combined with the bandwidth of supported NICs and the potential of RDMA, make SMB 3.0 a much better alternative to the iSCSI or Fibre Channel storage protocols.

SOFS: Clustered File Servers

Application servers communicate with between two and eight file servers that are clustered together. The file servers play the role of a SAN controller – a very economical SAN controller, to be precise.

Each of these file servers is connected to the same shared JBOD trays at the back end. Virtual disks are created from the storage pool in the JBOD trays. These appear as available storage in the file server cluster and are converted into active/active Cluster Shared Volumes (CSVs).

A special cluster role, known as the file server for application data, is deployed on the cluster. This active/active role creates a computer account in Active Directory; this is the “computer” that will share storage with the application servers. Active/active shares are then created on the cluster, and their permissions are configured to allow full control to the application servers and administrators. Now you have active/active storage that also handles transparent failover if one of the clustered file servers fails. All that remains is to use the UNC paths of the shares (via the single SOFS computer account) on the application servers to deploy files on the SOFS.
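From the application server's point of view, everything goes through one name: the UNC path names the SOFS computer account and the share, while the cluster nodes behind that name are interchangeable. The sketch below parses a UNC path with Python's standard `pathlib.PureWindowsPath` and then models failover under a simplifying assumption: the single SOFS name maps to several cluster nodes, and the client moves on to another node when the one it tries is down. The names `sofs1`, `vms`, `node1` to `node3`, and the helpers `split_unc` and `pick_node` are hypothetical; real SMB 3.0 transparent failover involves additional mechanisms not modeled here.

```python
from pathlib import PureWindowsPath


def split_unc(path):
    r"""Split a UNC path such as \\server\share\dir\file into its parts."""
    p = PureWindowsPath(path)
    if not p.drive.startswith("\\\\"):
        raise ValueError(f"not a UNC path: {path}")
    server, share = p.drive.lstrip("\\").split("\\", 1)
    return server, share, str(p.relative_to(p.anchor))


def pick_node(nodes, is_up):
    """Return the first reachable node behind the single SOFS name.

    Simplified assumption: the SOFS name maps to several cluster nodes and a
    client simply moves on to another node when the one it tried is down.
    """
    for node in nodes:
        if is_up(node):
            return node
    raise ConnectionError("no SOFS node is reachable")


if __name__ == "__main__":
    print(split_unc(r"\\sofs1\vms\vm01\disk0.vhdx"))
    # ('sofs1', 'vms', 'vm01\\disk0.vhdx')
    down = {"node1"}
    print(pick_node(["node1", "node2", "node3"], lambda n: n not in down))  # node2
```

The point of the design is that the path stored in an application's configuration (for example, a virtual machine's disk location) never has to change when a node fails, because it refers to the SOFS name rather than to any one file server.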

Summarizing the SOFS

It doesn’t take long to figure out that:

- SANs have expensive disk trays and vendor-locked disks, but a SOFS can use relatively inexpensive JBODs and disks that offer the fault tolerance and performance features of their wealthier cousin.

- A SAN has switches, and so does a SOFS, but we can use the SOFS switches for more than just storage.

- SAN controllers are expensive devices, but you can use any server as a cluster node in a SOFS. And by the way, the RAM will be used as a cache!

- Application servers will use legacy block storage protocols to connect to a SAN, but SOFS clients use the faster SMB 3.0 that provides the aggregation and fault tolerance of MPIO but without the complexity.

- If engineered correctly, neither a SAN nor a SOFS implementation has a single point of failure.

Perhaps you’re wondering: If I can deploy a software-defined SOFS (file shares) that performs better than a SAN and is much more economical, why should I continue to pay for overpriced legacy storage platforms? With the SOFS, Microsoft has given you a choice that you have never had before.

SOFS FAQs

Can I use servers with DAS and replicate/stripe that data to create a SOFS?

No; the SOFS is a cluster and must have shared storage. In the most commonly presented example, the SOFS uses shared JBODs with Storage Spaces.

Must I use a JBOD?

No. The SOFS can use any shared storage that WS2012 or WS2012 R2 Failover Clustering supports. This includes JBOD, PCI RAID, SAS, iSCSI, and Fibre Channel storage. Microsoft argues that putting SMB 3.0 in front of any storage offers better performance and easier operations. It also reduces the number of ports required in a SAN (only the SOFS nodes need to connect to the SAN switches). Using a legacy SAN as the shared storage of a SOFS offers features such as Offloaded Data Transfer (ODX), TRIM, and UNMAP.

Can I deploy Storage Spaces on my SAN?

No. Storage Spaces is intended to be used with JBOD disk trays that have no hardware RAID.

Does SOFS have the ability to replicate virtual disks?

No. You would instead use Hyper-V Replica in the case of virtual machines, or one of SQL Server’s replication methods. These are hardware agnostic and very flexible.
