Designing a Basic Scale-Out File Server (SOFS)

We’re continuing our look at building a basic scale-out file server (SOFS). In the first post, I showed you how to create a scale-out file server. In this post, I’ll show you what goes into designing a basic Windows Server 2012 Scale-Out File Server, which offers scalable and continuously available SMB 3.0 access to storage for workloads such as Hyper-V, IIS, and SQL Server.

(If you need to do some catching up, be sure to check out our previous articles on Windows Server 2012: SMB 3.0 and the Scale-Out File Server.)

Scale-Out File Server: The Concept

A Scale-Out File Server (SOFS) is a Windows Server cluster with some form of shared storage. The cluster provides a single access point for applications to connect to the shared storage via SMB 3.0. This has two benefits:

- Software-defined storage: Provisioning new storage for an application is a matter of creating file shares and setting the permissions. This simple abstraction provides a common administrative model for all forms of physical storage in the data center, and it’s a model that every Windows administrator should already understand.

- SMB 3.0: This is Microsoft’s datacenter and enterprise-level storage protocol. Its SMB Multichannel feature is simpler than MPIO, and its SMB Direct feature provides better performance than block storage alternatives such as iSCSI and Fibre Channel. (For more on the topic, check out our previous article: Windows Server 2012 SMB 3.0 File Shares: An Overview.)

SOFS High-Level Architecture

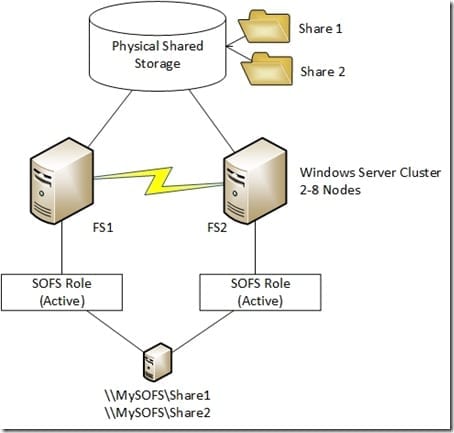

A common misconception is that a SOFS is something like an HP P4000 storage appliance, which is a server with direct attached storage (DAS) that cooperates with other appliances to create a single, striped, logical storage entity. That is not what a SOFS does. A SOFS is a traditional Windows Server cluster running a special SOFS role that is active/active across all nodes in the cluster. This role shares out the cluster’s common physical storage to other applications, as shown in the figure.

All data that is shared by the SOFS is stored on some kind of shared storage. Between two and eight clustered servers are connected to this common storage. A special role, called File Server for Application Data, is activated on the cluster. This role becomes active on all nodes in the cluster and synchronizes its settings through the cluster’s internal database. The role creates a computer account in Active Directory and registers in DNS using the IP addresses of the cluster nodes. Shares are created on the common storage and are shared out through all nodes in the cluster. Any application that wants to store or access data on the SOFS uses a UNC path that points to the SOFS computer account (MySOFS in the example) and the shared folder (\\MySOFS\Share1).
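Because applications only ever see the UNC path, it helps to be clear about how that path breaks down. The following is a minimal sketch using Python’s standard `pathlib.PureWindowsPath`, which only manipulates path strings and never contacts a server. The names MySOFS and Share1 come from the example above; the `VMs` folder and `vm01.vhdx` file are hypothetical, and `sofs_path` and `server_name` are illustrative helpers, not part of any Windows API.

```python
from pathlib import PureWindowsPath

# Names from the example above; PureWindowsPath only manipulates strings
# and never contacts the server.
SOFS_NAME = "MySOFS"
SHARE = "Share1"


def sofs_path(*parts: str) -> PureWindowsPath:
    """Join folder/file names under the SOFS share's UNC root (hypothetical helper)."""
    root = PureWindowsPath(f"\\\\{SOFS_NAME}\\{SHARE}\\")
    return root.joinpath(*parts)


def server_name(path: PureWindowsPath) -> str:
    """Return the server component of a UNC path (the name clients look up in DNS)."""
    # For UNC paths, .drive is "\\server\share"; splitting on a single
    # backslash yields ["", "", "server", "share"].
    return path.drive.split("\\")[2]


vhd = sofs_path("VMs", "vm01.vhdx")  # hypothetical folder and file names
print(vhd)               # \\MySOFS\Share1\VMs\vm01.vhdx
print(vhd.drive)         # \\MySOFS\Share1
print(server_name(vhd))  # MySOFS
```

The server component is the SOFS computer account name; a client resolving it in DNS gets back the node IP addresses that the role registered, which is how every node can serve the same share.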

A Basic Scale-Out File Server.

The Shared Storage

Any storage that is supported by Windows Server 2012 Failover Clustering may be used to create a SOFS. This includes traditional block storage:

- SAS-attached SAN

- iSCSI

- Fibre Channel

- FCoE

- PCI-RAID

Why would you consider using a SOFS to connect applications to your block storage? There are several reasons:

- Simplified access: Once a SAN administrator has configured LUNs and connected the SOFS nodes, you no longer have to do much SAN engineering. You simply create new file shares and grant your application servers (such as Hyper-V hosts) permission to connect to the shared storage.

- Broader application access: Windows Server 2012 R2 introduces some interesting new scenarios when SMB 3.0 storage is used. A share can be used by more than one Hyper-V cluster, which means that we can use Cross-Version Live Migration to quickly move VMs from a WS2012 cluster to a WS2012 R2 cluster with no downtime. We can also run guest clusters whose virtual nodes are running on different Hyper-V clusters.

- Greater scalability: A SAN has a limited number of expensive switch ports. We can connect between two and eight clustered SOFS nodes to the SAN and use them to share the block storage with many application servers, and that number can be very high if we use Remote Direct Memory Access (RDMA) capable networking.

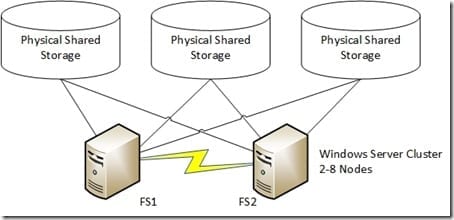

Most SOFS examples use “dumb” just-a-bunch-of-disks (JBOD) trays, with disk fault tolerance and unified management provided by Storage Spaces. Windows Server 2012 R2 adds tiered storage and Write-Back Cache to improve both the read and write performance of the JBOD while retaining its disk scalability. Note that, in a cluster, WS2012 supports only mirrored virtual disks for fault tolerance; WS2012 R2 adds support for parity virtual disks. A single JBOD tray might be considered a single point of failure. You can use three-way mirroring to store the data of each virtual disk in the storage pool on three different JBODs, all of which are connected to the SOFS nodes.
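To see why spreading three-way mirror copies across three JBODs removes the tray as a single point of failure, here is a deliberately simplified model. It is a sketch under stated assumptions: it tracks only which JBOD holds each copy of a slab, the rotation scheme and the names `place_slabs` and `survives_loss` are hypothetical, and it is not how Storage Spaces actually allocates data.

```python
# Simplified, hypothetical model of enclosure-aware three-way mirroring.
# It tracks only which JBOD holds each copy; it is not Storage Spaces' allocator.
JBODS = ["JBOD1", "JBOD2", "JBOD3"]


def place_slabs(num_slabs: int, jbods: list[str], copies: int) -> list[set[str]]:
    """Put each slab's copies on `copies` distinct JBODs, rotating the start."""
    if copies > len(jbods):
        raise ValueError("need at least one JBOD per copy")
    return [
        {jbods[(slab + i) % len(jbods)] for i in range(copies)}
        for slab in range(num_slabs)
    ]


def survives_loss(placement: list[set[str]], failed: str) -> bool:
    """True if every slab still has a copy outside the failed JBOD."""
    return all(holders - {failed} for holders in placement)


three_way = place_slabs(num_slabs=6, jbods=JBODS, copies=3)
# Contrast: mirrored copies on different disks, but all inside JBOD1.
same_tray = [{"JBOD1"} for _ in range(6)]

for jbod in JBODS:
    print(jbod, "lost:",
          "three-way OK" if survives_loss(three_way, jbod) else "three-way FAILS",
          "|", "same-tray OK" if survives_loss(same_tray, jbod) else "same-tray FAILS")
```

In this model, losing any one tray leaves every slab of the three-way layout readable, while a mirror whose copies all sit in one tray is lost with that tray.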

A SOFS with three-way mirroring.

Consult the storage manufacturer for precise guidance. Typically, each host will have two connections to the shared storage. In the case of Storage Spaces, each SOFS node will have two SAS connections to each JBOD tray.
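The cabling rule above multiplies quickly, so it is worth doing the arithmetic before buying HBAs and JBODs. This is a minimal sketch assuming every node is cabled to every JBOD with the typical two links per node/JBOD pair mentioned above; `sas_cabling` is a hypothetical helper, and the real port counts depend on the JBOD and HBA models.

```python
def sas_cabling(nodes: int, jbods: int, links_per_pair: int = 2) -> dict[str, int]:
    """Count SAS connections when every node is cabled to every JBOD.

    links_per_pair defaults to 2, the typical figure given above; the real
    number depends on the JBOD and HBA model, so check the vendor's guidance.
    """
    if nodes < 1 or jbods < 1 or links_per_pair < 1:
        raise ValueError("counts must be positive")
    return {
        "total_sas_cables": nodes * jbods * links_per_pair,
        "sas_ports_per_node": jbods * links_per_pair,
        "host_ports_per_jbod": nodes * links_per_pair,
    }


# Hypothetical layout: a two-node SOFS with three JBODs for three-way mirroring.
print(sas_cabling(nodes=2, jbods=3))
# {'total_sas_cables': 12, 'sas_ports_per_node': 6, 'host_ports_per_jbod': 4}
```

Note that the number of host-facing ports each JBOD needs grows with the node count, which can limit how many SOFS nodes a given tray can serve directly.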

Designing Scale-Out File Servers and Networking

A SOFS is a Windows Server Failover Cluster and has the same basic networking requirements as a cluster. A number of networks are required:

- 1 * management network: This is used to manage the SOFS. Backup traffic will pass through this connection; however, you might add another network if required. This connection might require two NICs in a NIC team.

- 2 * cluster networks: Best practice is to have two private networks for cluster communications and redirected IO traffic (for metadata operations and storage path fault tolerance). Note that Cluster-in-a-Box (CiB) appliances use a single ultra-reliable copper connection for cluster communications. Ordinary servers use less reliable Ethernet and switch connections, hence the need for two networks.

- 2 * client access networks: At least two networks should be used to allow application servers to store and access data on the SOFS. These must be on two different subnets, such as 192.168.1.0/24 and 192.168.2.0/24, for SMB 3.0 Multichannel and Failover Clustering to be compatible. You can constrain SMB 3.0 traffic to these connections. These are the networks that you will target with RDMA technology if you can afford it.
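The different-subnet rule for the client access networks is easy to get wrong when addressing NICs by hand. This minimal sketch uses Python’s standard `ipaddress` module to check it; the NIC names and addresses are hypothetical, and `on_distinct_subnets` is an illustrative helper, not a Windows tool.

```python
import ipaddress


def on_distinct_subnets(interfaces: dict[str, str]) -> bool:
    """True if no two interfaces share a subnet.

    Values are 'address/prefix' strings, e.g. '192.168.1.21/24'.
    """
    subnets = [ipaddress.ip_interface(cidr).network for cidr in interfaces.values()]
    return len(set(subnets)) == len(subnets)


# Hypothetical addresses for the two client access NICs on one SOFS node.
good = {"ClientAccess1": "192.168.1.21/24", "ClientAccess2": "192.168.2.21/24"}
bad = {"ClientAccess1": "192.168.1.21/24", "ClientAccess2": "192.168.1.22/24"}

print(on_distinct_subnets(good))  # True
print(on_distinct_subnets(bad))   # False
```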

At first glance, it looks like you need five or six NICs to network a SOFS node. Using converged networks based on WS2012 and WS2012 R2 Quality of Service (QoS), you can use fewer, higher-capacity NICs and run multiple functions through them. QoS, implemented by the OS Packet Scheduler, will ensure minimum levels of service for each protocol (SMB 3.0, Remote Desktop, cluster communications, backup, and so on). RDMA traffic bypasses the OS networking stack, so its QoS is provided by Data Center Bridging (DCB) in the NICs and switches instead.
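A converged design is easier to reason about once the minimum guarantees are turned into numbers. This sketch assumes weight-based minimum bandwidth, where each traffic class is guaranteed a share of the link proportional to its weight when the link is congested. The traffic classes, the weights, and the single 10 GbE NIC are all hypothetical, and `min_bandwidth` is only arithmetic, not a QoS configuration.

```python
def min_bandwidth(link_gbps: float, weights: dict[str, int]) -> dict[str, float]:
    """Convert relative QoS weights into guaranteed minimum bandwidth.

    Each class is guaranteed link_gbps * weight / sum(weights) when the link
    is congested; bandwidth a class leaves unused is available to the others.
    """
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    return {name: link_gbps * w / total for name, w in weights.items()}


# Hypothetical weights on a single 10 GbE converged NIC.
weights = {"SMB 3.0": 50, "Backup": 20, "Cluster": 10, "Management": 10, "Default": 10}

for name, gbps in min_bandwidth(10.0, weights).items():
    print(f"{name:<10} at least {gbps:.1f} Gbps")
```

Because these are minimums rather than caps, SMB 3.0 can still use the whole link when backup and management traffic are idle.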