Top Storage Pitfalls to Avoid with Windows Failover Clusters

Windows Failover Clusters can provide a very high level of availability for business-critical applications such as SQL Server or Exchange. The fault-tolerant design of a cluster eliminates single points of failure by using multiple servers, multiple network connections, and multiple storage controllers. The trade-off for this high availability is an additional layer of complexity when installing, configuring, and troubleshooting the cluster.

Storage is one area of Windows Failover Clusters that can drastically affect the overall stability of the cluster. Careful planning and design of the storage subsystem helps ensure a smooth cluster installation and makes future troubleshooting easier. This article examines the storage components that need to be considered when designing, installing, and configuring failover clusters, and how to avoid the common pitfalls that affect stability.

Choosing the Right Partition Size

One of the biggest decisions to make when installing a Windows Failover Cluster is the size of the storage partitions. While this might seem like a simple decision, the consequences can directly affect the future availability of the cluster. Most people tend to underestimate their storage needs, which presents several problems. As available free space shrinks, file fragmentation increases, which directly affects performance. In addition, administrators must continually spend time purging, archiving, and moving files to free up room for new files.

Fortunately, most storage subsystems allow storage LUNs (logical unit numbers) to be dynamically expanded. The Windows operating system also allows partitions to be dynamically expanded with tools such as Diskpart. Unfortunately, the procedure to dynamically expand disk drives in a cluster environment is non-trivial and typically requires downtime. Furthermore, if more than 2 TB (terabytes) of disk space is needed on a single partition, the GPT disk format must be used instead of the typical MBR format. To convert a disk from MBR to GPT, all partitions must be deleted before the drive can be converted. Making the correct decision about partition size up front therefore has a direct bearing on the cluster’s availability. Further information can be found in the article on GPT vs MBR Disk Comparison.
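The 2 TB figure follows from how MBR records partition sizes: as 32-bit sector counts. The following is a minimal sketch of that arithmetic, assuming the common 512-byte logical sector size. The function names are illustrative and not part of any Windows tool.

```python
# MBR stores a partition's starting sector and length as 32-bit values,
# so roughly 2**32 sectors is the most a single MBR partition can span.
MBR_MAX_SECTORS = 2**32
TIB = 2**40  # bytes in one binary terabyte


def mbr_limit_bytes(sector_size: int = 512) -> int:
    """Approximate largest partition size (in bytes) that MBR can describe."""
    return MBR_MAX_SECTORS * sector_size


def needs_gpt(partition_bytes: int, sector_size: int = 512) -> bool:
    """Return True when the planned partition is too large for MBR."""
    return partition_bytes > mbr_limit_bytes(sector_size)


if __name__ == "__main__":
    # Hypothetical planned partition sizes, in binary terabytes.
    for planned_tib in (1, 2, 3):
        verdict = "GPT required" if needs_gpt(planned_tib * TIB) else "MBR is sufficient"
        print(f"{planned_tib} TB partition: {verdict}")
```

Running a check like this against projected growth, rather than current usage, is one way to decide on GPT before the cluster goes into production and a conversion would mean downtime.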

Compatible & Consistent Storage Drivers

Another big storage pitfall that people stumble across is incompatible or inconsistent storage drivers. As discussed in the Storage Disk Architecture article, there are numerous device and filter drivers that interoperate to perform I/O operations (reads and writes). While Microsoft provides high-level storage drivers such as Storport and MPIO, many third-party vendors provide device-specific drivers for their host bus adapters and storage arrays. All of these drivers must be compatible to work together.

Furthermore, in a cluster environment, it is extremely important that all servers in the cluster run the same version of the storage drivers along with the same firmware version. Fortunately, the Cluster Validation Wizard can be used to inventory device drivers and flag inconsistencies between servers. For more information on using Validate, see the Cluster Validation article, which discusses leveraging the Cluster Validation Wizard for troubleshooting storage problems.
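The kind of consistency check described above can be illustrated with a short sketch. It assumes the driver inventory has already been collected into a dictionary per node. The node names, the vendor driver name, and all version strings are hypothetical, and this is not how the Cluster Validation Wizard itself is implemented.

```python
from collections import defaultdict


def find_driver_mismatches(inventory):
    """Return drivers whose version differs (or is missing) on any node.

    inventory maps node name -> {driver name: version string}.
    The result maps driver name -> {version or None: [nodes]}.
    """
    all_drivers = set()
    for drivers in inventory.values():
        all_drivers.update(drivers)

    nodes_by_version = defaultdict(lambda: defaultdict(list))
    for node, drivers in inventory.items():
        for name in all_drivers:
            # A driver absent from a node is recorded under the key None.
            nodes_by_version[name][drivers.get(name)].append(node)

    return {
        name: dict(versions)
        for name, versions in nodes_by_version.items()
        if len(versions) > 1
    }


# Hypothetical inventory for a two-node cluster.
inventory = {
    "NODE1": {"storport.sys": "6.0.1", "mpio.sys": "6.0.1", "hba_vendor.sys": "2.1.0"},
    "NODE2": {"storport.sys": "6.0.1", "mpio.sys": "6.0.1", "hba_vendor.sys": "2.3.0"},
}

if __name__ == "__main__":
    for driver, versions in find_driver_mismatches(inventory).items():
        print(f"Inconsistent driver {driver}: {versions}")
```

The same comparison applies to HBA and array firmware versions if they are added to the inventory under their own keys.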

Controller Specific Settings

A final word of caution when configuring storage for clusters concerns array-specific settings. Most storage controllers offer a variety of settings to accommodate servers running different operating systems. With Windows Failover Clusters, it is important that storage arrays be configured to support SCSI-3 persistent reservations. Windows Server 2008 and later use persistent reservations to control access to shared disk drives. Just because a storage controller worked with Windows Server 2003 clusters does not mean it will function with Windows Server 2008. Once again, the Cluster Validation Wizard can be used to check that your storage controllers will function with failover clusters.

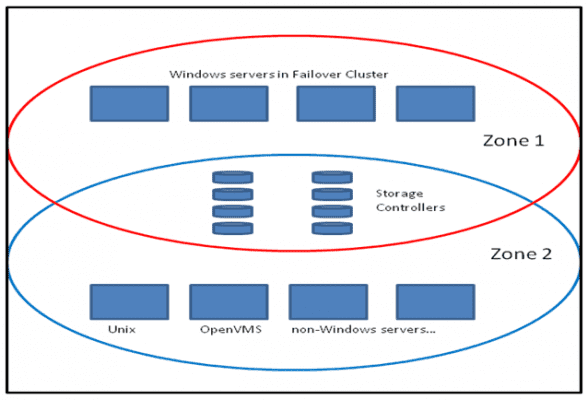

Additional storage controller and switch settings that should be configured include LUN masking and SAN zoning. In a clustered environment, LUNs should be configured so that only the servers in the cluster have shared access to them. This is called LUN masking. The cluster disk driver controls which server actually owns a particular shared drive. It is also recommended to establish SAN zoning to isolate the cluster I/O traffic so that it includes only the cluster servers and storage controllers, as shown in figure 1. This helps to reduce I/O latencies on the SAN potentially caused by other operating systems.
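The LUN masking rule can be expressed as a simple audit: every shared LUN should be visible to all cluster nodes and to nothing else. The sketch below assumes the masking configuration has been exported from the array into a plain dictionary. The LUN names and host names are hypothetical, and real arrays usually identify hosts by WWN or initiator name rather than by server name.

```python
def audit_lun_masking(lun_masks, cluster_nodes):
    """Report LUNs whose host list differs from the set of cluster nodes.

    lun_masks maps LUN name -> iterable of hosts allowed to see that LUN.
    Returns LUN name -> {"unexpected_hosts": [...], "missing_nodes": [...]}.
    """
    cluster = set(cluster_nodes)
    findings = {}
    for lun, hosts in lun_masks.items():
        allowed = set(hosts)
        unexpected = sorted(allowed - cluster)  # non-cluster servers with access
        missing = sorted(cluster - allowed)     # cluster nodes without access
        if unexpected or missing:
            findings[lun] = {"unexpected_hosts": unexpected, "missing_nodes": missing}
    return findings


# Hypothetical cluster and masking configuration.
cluster_nodes = ["NODE1", "NODE2"]
lun_masks = {
    "LUN_DATA": ["NODE1", "NODE2"],             # correct
    "LUN_LOGS": ["NODE1", "NODE2", "BACKUP01"], # exposed to a non-cluster host
    "LUN_QUORUM": ["NODE1"],                    # NODE2 cannot see it
}

if __name__ == "__main__":
    for lun, problem in audit_lun_masking(lun_masks, cluster_nodes).items():
        print(lun, problem)
```

A check of this kind complements the Cluster Validation Wizard, which runs from the cluster nodes and so may not reveal that a server outside the cluster can also see a shared LUN.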

Summary

Windows Failover Clusters offer functionality that makes them suitable for mission-critical applications. Special attention needs to be given when configuring storage components to make certain they will meet your needs and function properly in a cluster environment. Partition sizing, driver compatibility and consistency, and storage controller specific settings are among the top gotchas you may encounter. But with a little planning and the help of tools like the Cluster Validation Wizard, you can avoid these pitfalls and implement failover clusters with confidence.