Using The Biggest Virtual Machine in Microsoft Azure’s Cloud

When you hear about some giant server with crazy memory or processors, or a SAN that supports immense amounts of flash storage, you’ll most likely think to yourself, “I’ll never see one of those, let alone have the chance to play with one.”

The great thing about a public cloud like Azure is that you can deploy almost anything it offers, and you only pay for it by the minute while you use it. That gives you a chance to play with the big stuff for a very short amount of time!

I decided to deploy a GS5, which according to Microsoft is the largest virtual machine that you can deploy in any of the “big three” clouds. I’ll explain what I got from this machine, how it performed when stressed, and how much it cost me.

The Microsoft Azure GS5 Virtual Machine

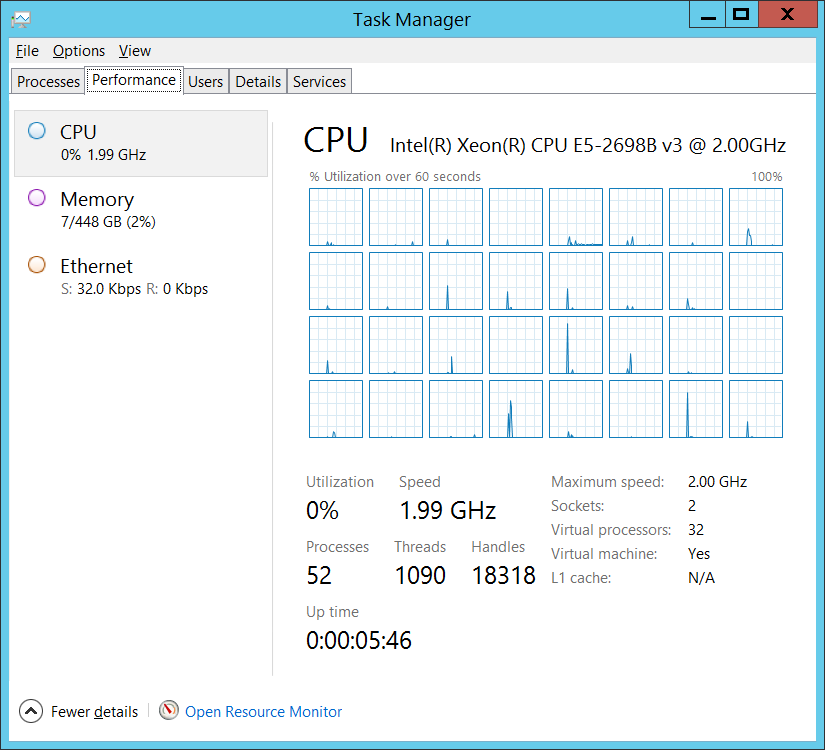

At the moment, the GS5 is the premium virtual machine in Azure. The GS-Series is based on the G-Series machines, which run on hosts with the 2.0 GHz Intel Xeon E5-2698B v3 CPU. The G- and GS-Series virtual machines offer much more RAM per core than any of the other Azure virtual machine specifications. The GS1 starts with two cores and 28 GB RAM, which is four times the memory of the DS2, which has two cores and 7 GB RAM. The workloads that you run in these machines are intended to be extremely memory intensive, possibly using RAM to cache data instead of disk.

The GS-Series gives you the option to deploy the OS and data disks on HDD-based Standard Storage or SSD-based Premium Storage. The temporary disk is based on a host-local SSD. A GS5 can have up to 64 data disks offering up to 80,000 IOPS with a transfer rate of 2,000 MB per second.

So, let’s think about that for a second. This is a machine with:

- 32 Xeon processor cores.

- 448 GB RAM.

- Up to 64 x 1023 GB data disks, running at SSD speeds and transfer rates.
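To put those numbers in perspective, here is a minimal PowerShell sketch that works out the RAM per core and the raw data disk capacity from the figures quoted above. The variable names are purely illustrative.

```powershell
# Figures quoted in the article; variable names are illustrative only.
$cores      = 32
$ramGB      = 448
$diskCount  = 64
$diskSizeGB = 1023

# RAM per core for the GS5, and for the DS2 (two cores, 7 GB RAM) as a comparison.
$ramPerCoreGB    = $ramGB / $cores
$ds2RamPerCoreGB = 7 / 2

# Raw capacity if all 64 data disks are attached at the maximum size.
$totalCapacityGB = $diskCount * $diskSizeGB
$totalCapacityTB = [math]::Round($totalCapacityGB / 1024, 1)

Write-Host "GS5 RAM per core: $ramPerCoreGB GB (DS2: $ds2RamPerCoreGB GB)"
Write-Host "Raw data disk capacity: $totalCapacityGB GB (about $totalCapacityTB TB)"
```

That works out to 14 GB of RAM per core, against 3.5 GB for the DS2, and roughly 64 TB of raw data disk capacity.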

How often would you ever get to fire that machine up, and what would it cost you? I decided to deploy such a machine in Azure on my spare MSDN Premium subscription, which includes a nice Azure credit benefit. Note that Windows virtual machines are charged at Linux rates for MSDN customers because the Windows license is covered by MSDN benefits, terms, and conditions.

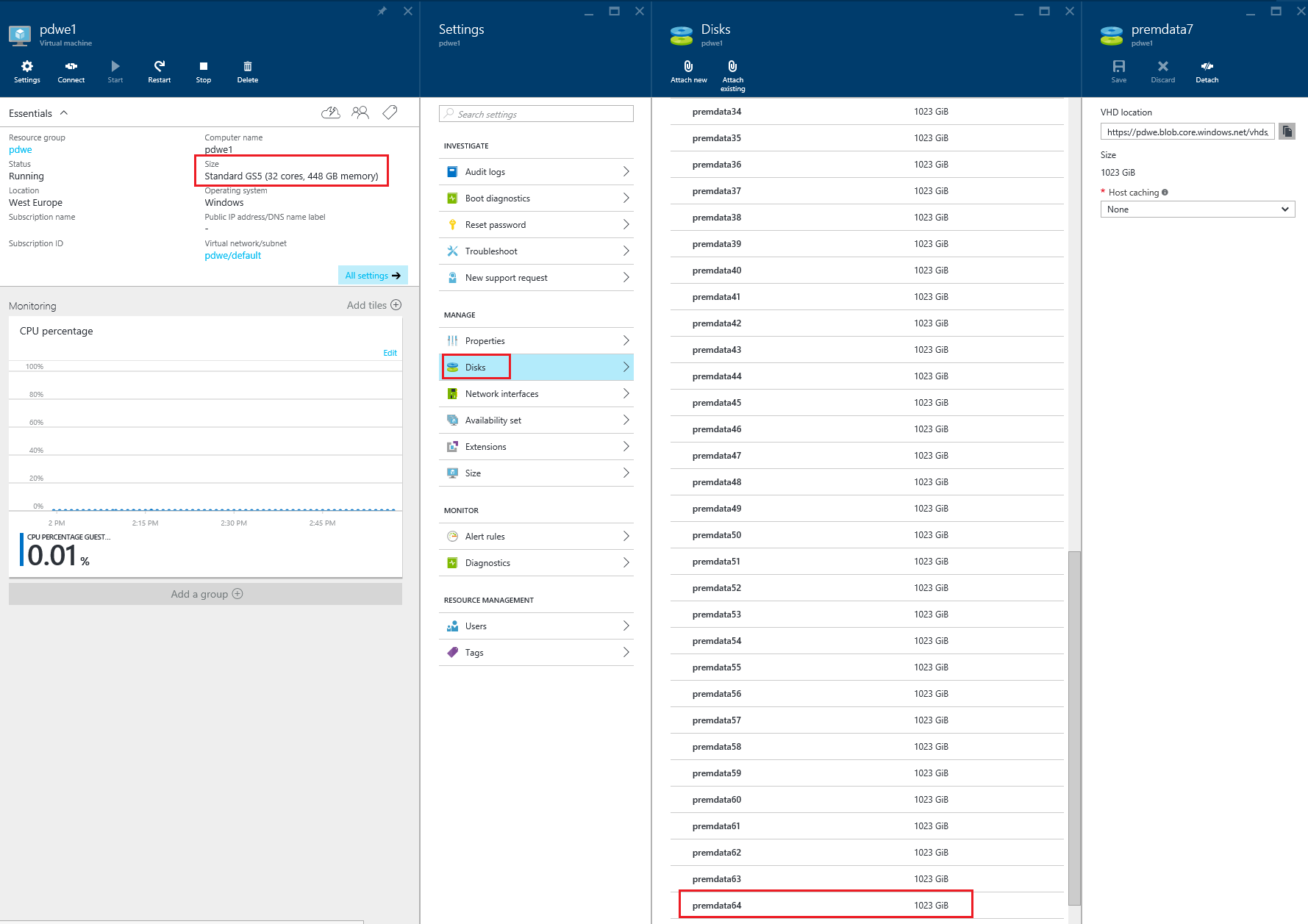

To speed up deployment and clean up, I deployed a V2 virtual machine using Azure Resource Manager, containing all of the dependent resources in a single resource group. Next, I used Azure Resource Manager and PowerShell to create and assign 64 x 1023 GB P30 data disks, with caching disabled, to the virtual machine.

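    # Fetch the VM object, then attach 64 empty 1023 GB data disks (LUNs 0 to 63).
    $vm = Get-AzureRmVM -Name pdwe1 -ResourceGroupName pdwe

    for ($i = 1; $i -le 64; $i++)
    {
        Write-Host "Disk $i"
        $diskname = "premdata$i"
        $lun = $i - 1   # LUNs are zero-based

        # The cmdlet returns the updated VM object; capturing it keeps the console clean.
        $vm = Add-AzureRmVMDataDisk -VM $vm -Name $diskname -VhdUri "https://pdwe.blob.core.windows.net/vhds/$diskname.vhd" -Lun $lun -Caching None -DiskSizeInGB 1023 -CreateOption Empty
    }

    # Push the new disk configuration to Azure in a single update.
    Update-AzureRmVM -ResourceGroupName "pdwe" -VM $vm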

The following screenshot shows the configuration of the resulting machine.

Processor and RAM

I logged into the machine and did what every nerd does when faced with a big machine: I launched Task Manager and took a look around.

Windows Server was using just 7 GB of the 448 GB of RAM, and I could see all 32 of the logical processors, just waiting for me to provide a workload.

Storage Configuration and Performance

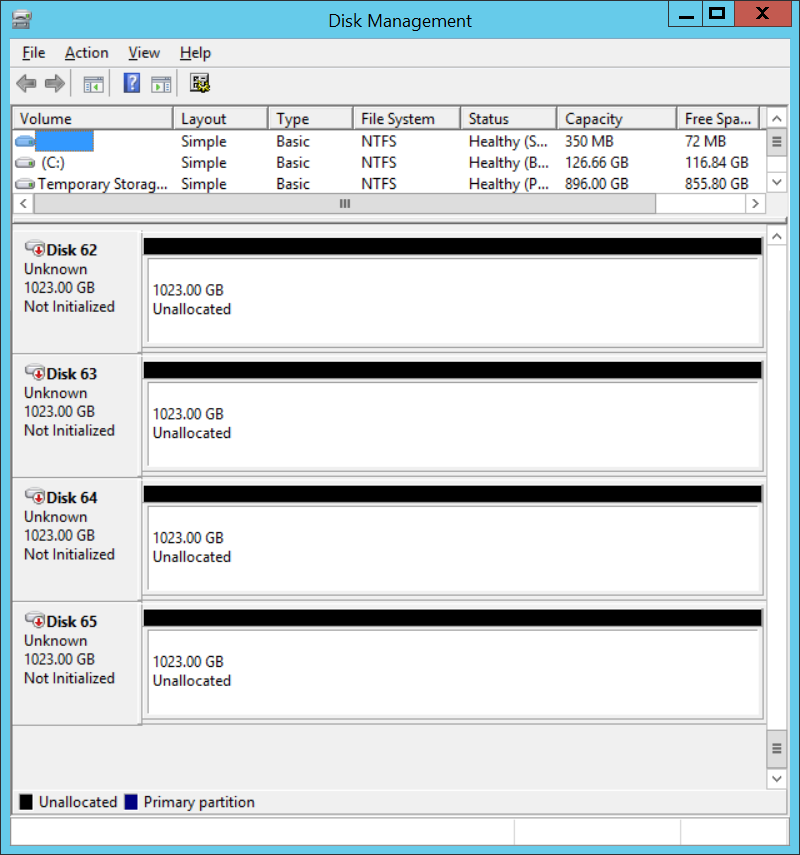

In Disk Management, I could see the OS disk and the 64 data disks:

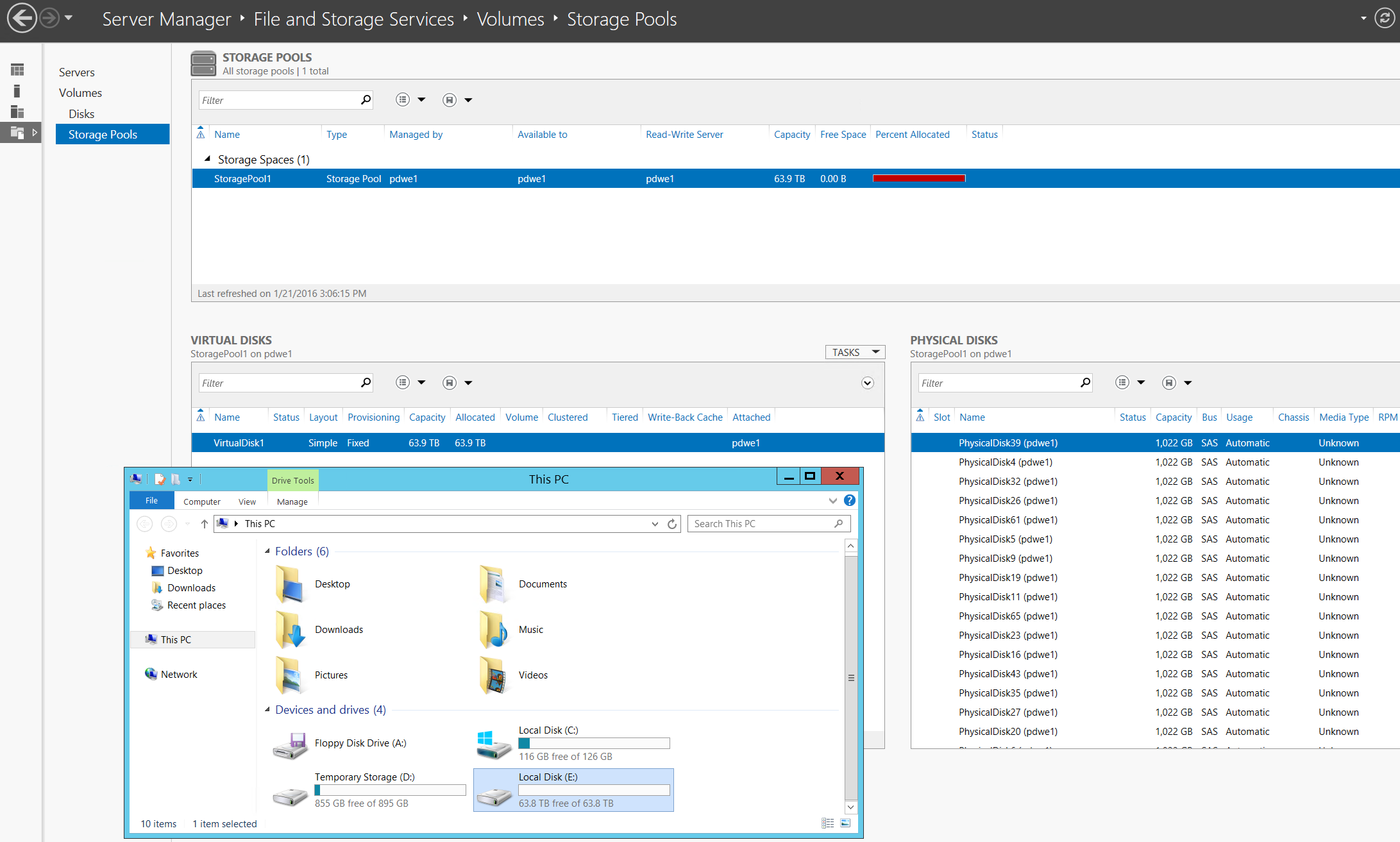

I used the PowerShell code that I previously shared to deploy a single LUN, based on:

- A storage pool made up of all 64 Premium Storage data disks.

- A virtual disk with a 64 KB interleave.

- NTFS formatted with an allocation unit size of 64 KB.
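For readers who have not seen that script, the following is a rough sketch of how such a LUN could be built with the Storage Spaces cmdlets in Windows Server. It is not the script referred to above. The friendly names and volume label are hypothetical, and the simple (striped) layout with one column per disk is an assumption, because the article only states the interleave and the allocation unit size.

```powershell
# Sketch only. "DataPool", "DataDisk", and "Data" are hypothetical names.
# Collect every disk that is eligible for pooling. This assumes that the
# 64 empty data disks are the only poolable disks in the machine.
$disks = Get-PhysicalDisk -CanPool $true

# The Storage Spaces subsystem has a different friendly name on different
# Windows Server versions, so look it up instead of hard-coding it.
$subsystem = Get-StorageSubSystem -FriendlyName "*Storage*" | Select-Object -First 1

New-StoragePool -FriendlyName "DataPool" `
    -StorageSubSystemFriendlyName $subsystem.FriendlyName `
    -PhysicalDisks $disks

# Assumption: simple layout (striping, no resiliency), one column per disk,
# with the 64 KB interleave mentioned in the article.
New-VirtualDisk -StoragePoolFriendlyName "DataPool" -FriendlyName "DataDisk" `
    -ResiliencySettingName Simple -NumberOfColumns $disks.Count `
    -Interleave 65536 -UseMaximumSize

# Initialize the new disk, create one partition, and format it as NTFS
# with a 64 KB allocation unit size.
Get-VirtualDisk -FriendlyName "DataDisk" | Get-Disk |
    Initialize-Disk -PartitionStyle GPT -PassThru |
    New-Partition -AssignDriveLetter -UseMaximumSize |
    Format-Volume -FileSystem NTFS -AllocationUnitSize 65536 `
        -NewFileSystemLabel "Data" -Confirm:$false
```

Using one column per disk means every stripe touches all 64 disks, which is what lets a single volume draw on the combined IOPS of the pool.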

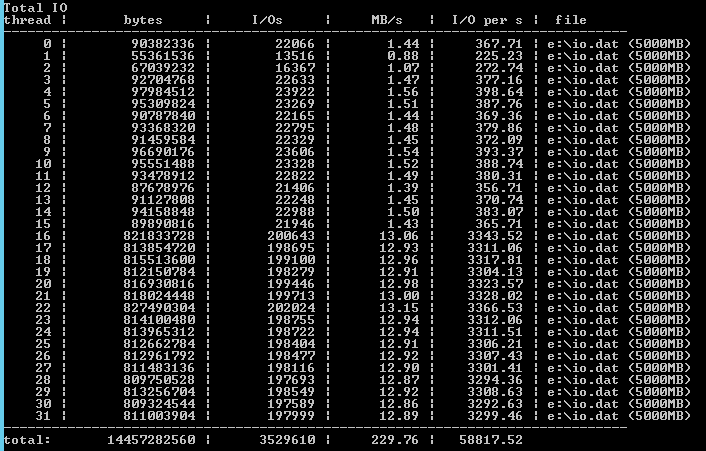

Just how would this storage perform? In theory, the GS5 can support up to 80,000 IOPS without caching. The machine had 64 P30 data disks, and each of those disks can offer up to 5,000 IOPS. That adds up to 320,000 IOPS across the disks, so the limit of the virtual machine, not the storage, is the ceiling. I installed Microsoft’s free tool, DiskSpd, and started to run my tests:

- Start stressing the disks with 4K reads, with eight overlapping IOs on two threads.

- Increase the load by reconfiguring the tests.
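As a rough illustration, the first of those tests might look something like the DiskSpd command below. The tool path, drive letter, and file name are hypothetical. The random access pattern and the 60-second duration are assumptions, because the article does not state them, and "eight overlapping IOs on two threads" is read here as eight outstanding I/Os per thread.

```powershell
# Hypothetical locations; adjust to where DiskSpd and the data volume live.
$diskspd  = "C:\Tools\diskspd.exe"
$testFile = "F:\iotest.dat"

# -c5000M  create a 5,000 MB test file if it does not exist
# -b4K     4 KB block size
# -t2      two threads per target file
# -o8      eight outstanding I/Os per thread
# -w0      0 percent writes, so the test is read-only
# -r       random I/O (assumption)
# -d60     run for 60 seconds (assumption)
# -Sh      disable software caching and hardware write caching
# -L       collect latency statistics
& $diskspd -c5000M -b4K -t2 -o8 -w0 -r -d60 -Sh -L $testFile
```

Increasing the load is then a matter of raising the `-t` and `-o` values and rerunning the test. Older DiskSpd builds use `-h` in place of `-Sh`.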

The only problem was that:

- The virtual machine was costing around €8.4246 per hour.

- Each of the 64 data disks was costing quite a bit of money.

- I had roughly €136 of credit remaining.

I had a limited amount of time to reconfigure the tests to hit a peak. I managed to hit 58,817 IOPS before I ran out of time and money. It’s not 80,000 IOPS, but it’s more than I’d hit before. I did observe some spikes to 77,000 IOPS, so I probably wasn’t far from the optimal test configuration before Azure shut the subscription down due to lack of credit.

Note that I performed these tests with caching disabled on each data disk. I could have boosted performance, perhaps by around 25 percent if my previous tests are indicative, by enabling the cache.

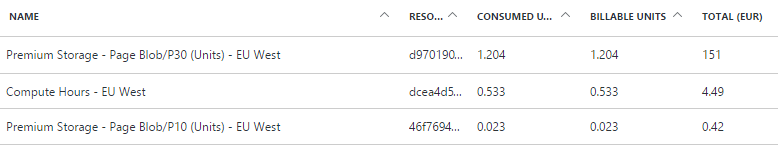

How Much Did This Test Cost?

I normally deploy Azure resources in either the East US 2 or North Europe regions. I deployed this machine in West Europe (Amsterdam) because the GS5 spec was available there, and I could also isolate the billing to see how much the test would cost.

The machine wasn’t running for very long, so it ‘only’ cost me €4.49 (€9.3607 per hour) to run it. However, the Premium Storage capacity cost me much, much more. The machine was deployed in the evening, and that’s when I ran my first tests. I planned to run more tests the following day, but Azure shut down the subscription due to lack of credit remaining. Overnight, the storage consumed €151 of credit! Note that the test file in the data LUN was just 5,000 MB.
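A quick back-of-the-envelope check on those figures, as a small PowerShell sketch. It uses only the numbers quoted in this section and assumes that the quoted hourly rate applied to the whole compute charge. The variable names are illustrative.

```powershell
# Figures quoted in this section, in euro.
$computeCost = 4.49     # total charge for the virtual machine
$hourlyRate  = 9.3607   # quoted hourly rate
$storageCost = 151      # Premium Storage charge overnight
$diskCount   = 64

# Billed run time implied by the compute charge.
$runMinutes = [math]::Round(($computeCost / $hourlyRate) * 60)

# Storage charge per disk, and how it compares with the compute charge.
$costPerDisk = [math]::Round($storageCost / $diskCount, 2)
$ratio       = [math]::Round($storageCost / $computeCost, 1)

Write-Host "Implied run time: about $runMinutes minutes"
Write-Host "Storage cost per disk: about EUR $costPerDisk"
Write-Host "Storage cost was about $ratio times the compute cost"
```

On those assumptions, the machine was billed for roughly 29 minutes, each disk cost about €2.36 overnight, and storage cost more than 33 times as much as compute.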

Final Thoughts on Azure’s GS5 Virtual Machine

I was lucky enough to have some spare credit around that allowed me to run these tests in Azure. With it, I ran the biggest machine in Microsoft Azure’s cloud, and, yes, it was big. I wish I had more time to stress the disks, RAM, and CPU, but playtime was ended by Azure before I was ready.

This leads me to another point. All too often, newcomers to the cloud assume that service designs, and therefore machine designs, should be the same in the cloud as they would be on-premises. Public clouds are designed and priced to suit smaller machines. Services should be designed to scale out, ideally on the fly, based on demand using small machines. This keeps costs down because you only run as many small machines as you need, and you take advantage of the per-minute billing that Azure offers.

Crazy-big machines should be a rarity in the cloud, for genuinely crazy-big workloads that justify the costs. As you can see above, those costs can be high. But for nerds like me, accruing those costs can be fun — while it lasts.