My PowerShell Troubleshooting Toolkit Expanded

Update: January 28, 2015 – 11:00 AM MT – It has come to our attention that the original CSV file in this article unintentionally contained a malware payload. If you have already downloaded the original CSV file, you should delete it immediately. We have replaced the original CSV file with a new CSV file that is safe to download. As a gentle reminder, please test and scan downloads in a non-production environment before using.

Sincerely, the Petri IT Knowledgebase editorial staff.

Original post below.

A few months ago I posted a few articles on how to use PowerShell to build a troubleshooting toolkit. If you’re like me, then you’re often called upon to troubleshoot a computer problem. The call may come as part of your official job, or you may enjoy the privilege of being the resident expert for your family or neighborhood, which is certainly true in my case. Since writing this article series, I’ve revised my PowerShell tools, so let’s take a look.

Originally, I showed you a few different ways to build a toolkit, and I’m not going to rehash them in this article. Personally, I think it is better to separate the download tool from the list of files: maintaining the list of tools separately is much easier than hard coding it in the script. I use a CSV file for this, and this is the most current version of the CSV file that I use. You should save a copy locally. Feel free to add your own tools to the list.
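As a rough sketch of what such a list looks like, the snippet below builds a small CSV in memory and converts it to objects. The product and uri column names match the parameters of the function shown later in this article. The example.com links and tool names are placeholders I made up for illustration, not real downloads.

```powershell
# A tiny stand-in for tools.csv. The rows are hypothetical placeholders.
$csv = @'
product,uri
"Sample Tool x64",http://example.com/tools/sampletool64.exe
SampleZip,http://example.com/tools/samplezip.zip
'@

# ConvertFrom-Csv turns each row into an object with product and uri properties.
# With a file on disk you would use Import-Csv -Path instead.
$tools = $csv | ConvertFrom-Csv

# Adding your own tool is just one more row (again, a made-up entry)
$tools += [pscustomobject]@{ product = 'My Tool'; uri = 'http://example.com/tools/mytool.msi' }

$tools | Format-Table -AutoSize
```

Note that a product name containing spaces is quoted in the CSV text, the same way the sample rows in the workflow help do it.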

Next, we need a PowerShell tool to process the list. I have made a few revisions to my original Get-MyTool function. Here’s version 2.0 of that function.

#requires -version 3.0

#create a USB tool drive

Function Get-MyTool {

<#

.Synopsis

Download support and scanning tools from the Internet.

.Description

This command will download a set of troubleshooting and diagnostic tools from the Internet. The path will typically be a USB thumbdrive. If you use the -Sysinternals parameter, then all of the SysInternals utilities will also be downloaded to a subfolder called Sysinternals under the given path.

.Parameter URI

The direct HTTP or HTTPS download link. The link must point to a file.

.Parameter Product

The name or description of the tool to be downloaded.

.Parameter NoClobber

Do not overwrite existing files.

.Parameter Sysinternals

Download the Sysinternals tools and place in a subfolder under the given path.

.Parameter Path

This is the location for downloaded files. It can be a local folder or a USB thumbdrive.

.Example

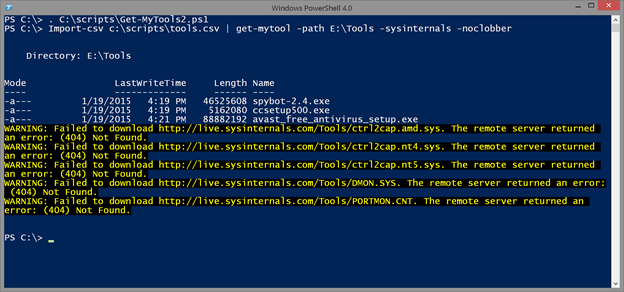

PS C:\> Import-csv c:\scripts\tools.csv | get-mytool -path E:\Tools -sysinternals

Import a CSV of tool data and pipe to this command. This will download all tools from the web and the Sysinternals utilities. Save to E:\Tools.

.Example

PS C:\> get-mytool -path e:\tools -product Defraggler -uri http://files.snapfiles.com/localdl936/dfsetup217.zip -noclobber

Download Defraggler from the given location and save to E:\Tools. Skip the download if the zip file already exists.

.Notes

Last Updated: January 19, 2015

Version : 2.0

Learn more about PowerShell:

Essential PowerShell Learning Resources

****************************************************************

* DO NOT USE IN A PRODUCTION ENVIRONMENT UNTIL YOU HAVE TESTED *

* THOROUGHLY IN A LAB ENVIRONMENT. USE AT YOUR OWN RISK. IF *

* YOU DO NOT UNDERSTAND WHAT THIS SCRIPT DOES OR HOW IT WORKS, *

* DO NOT USE IT OUTSIDE OF A SECURE, TEST SETTING. *

****************************************************************

.Link

Invoke-WebRequest

#>

[cmdletbinding(SupportsShouldProcess=$True)]

Param(

[Parameter(Position=0,Mandatory=$True,HelpMessage="Enter the download path")]

[ValidateScript({Test-Path $_})]

[string]$Path,

[Parameter(Mandatory=$True,HelpMessage="Enter the tool's direct download URI",

ValueFromPipelineByPropertyName=$True)]

[ValidateNotNullorEmpty()]

[string]$URI,

[Parameter(Mandatory=$True,HelpMessage="Enter the name or tool description",

ValueFromPipelineByPropertyName=$True)]

[ValidateNotNullorEmpty()]

[string]$Product,

[switch]$Sysinternals,

[switch]$NoClobber

)

Begin {

Write-Verbose "Starting $($myinvocation.MyCommand)"

#hashtable of parameters to splat to Write-Progress

$progParam = @{

Activity = "$($myinvocation.MyCommand)"

Status = $Null

CurrentOperation = $Null

PercentComplete = 0

}

#a private function to handle the download

Function _download {

[cmdletbinding(SupportsShouldProcess=$True)]

Param(

[string]$Uri,

[string]$Path,

[switch]$NoClobber

)

$out = Join-Path -path $path -child (split-path $uri -Leaf)

#check if file exists if using NoClobber

if ($NoClobber -AND (Test-Path -Path $Out)) {

Write-Verbose "Skipping $out because it already exists"

#bail out

Return

}

Write-Verbose "Downloading $uri to $out"

#hash table of parameters to splat to Invoke-Webrequest

$paramHash = @{

UseBasicParsing = $True

Uri = $uri

OutFile = $out

DisableKeepAlive = $True

ErrorAction = "Stop"

}

if ($PSCmdlet.ShouldProcess($uri)) {

Try {

Invoke-WebRequest @paramHash

Get-Item -Path $out

}

Catch {

Write-Warning "Failed to download $uri. $($_.exception.message)"

}

} #should process

} #end download function

} #begin

Process {

Write-Verbose "Downloading $product"

$progParam.status = $Product

$progParam.currentOperation = $uri

Write-Progress @progParam

_download -Uri $uri -Path $path -NoClobber:$NoClobber

} #process

End {

if ($Sysinternals) {

#test if subfolder exists and create it if missing

$sub = Join-Path -Path $path -ChildPath Sysinternals

if (-Not (Test-Path -Path $sub)) {

mkdir $sub | Out-Null

}

#get the page

$sysint = Invoke-WebRequest "http://live.sysinternals.com/Tools" -DisableKeepAlive -UseBasicParsing

#get the links

$links = $sysint.links | Select -Skip 1

#reset counter

$i=0

foreach ($item in $links) {

#download files to subfolder

$uri = "http://live.sysinternals.com$($item.href)"

$i++

$percent = ($i/$links.count) * 100

Write-Verbose "Downloading $uri"

$progParam.status ="SysInternals"

$progParam.currentOperation = $item.innerText

$progParam.PercentComplete = $percent

Write-Progress @progParam

_download -Uri $uri -Path $sub -NoClobber:$NoClobber

} #foreach

} #if SysInternals

Write-verbose "Finished $($myinvocation.MyCommand)"

} #end

} #end function

The primary change is the addition of a NoClobber parameter. It works like the NoClobber parameter of Out-File, which essentially means, “don’t overwrite any existing files.”

If I’ve already downloaded a file from the CSV file, then there’s no need to download it again. Here’s how the check is handled in the private download function:

#check if file exists if using NoClobber

if ($NoClobber -AND (Test-Path -Path $Out)) {

Write-Verbose "Skipping $out because it already exists"

#bail out

Return

}
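To see that check in isolation, here is a minimal sketch that works out the target file name the same way the function does and reports whether a download would be skipped. Test-SkipDownload is a hypothetical helper name of my own, and the URIs and scratch folder are placeholders. Nothing is actually downloaded.

```powershell
# Hypothetical helper: returns $True when the download would be skipped
Function Test-SkipDownload {
    Param(
        [string]$Uri,
        [string]$Path,
        [switch]$NoClobber
    )
    # same naming rule as _download: the last part of the URI becomes the file name
    $out = Join-Path -Path $Path -ChildPath (Split-Path -Path $Uri -Leaf)
    # skip only when -NoClobber is used and the file is already there
    [bool]($NoClobber -and (Test-Path -Path $out))
}

# set up a scratch folder with one "already downloaded" file
$folder = Join-Path -Path ([System.IO.Path]::GetTempPath()) -ChildPath "toolkit-demo"
New-Item -ItemType Directory -Path $folder -Force | Out-Null
New-Item -ItemType File -Path (Join-Path -Path $folder -ChildPath "existing.zip") -Force | Out-Null

Test-SkipDownload -Uri "http://example.com/files/existing.zip" -Path $folder -NoClobber
Test-SkipDownload -Uri "http://example.com/files/existing.zip" -Path $folder
Test-SkipDownload -Uri "http://example.com/files/missing.zip" -Path $folder -NoClobber
```

Only the first call should report that the file would be skipped. Without -NoClobber the existing file is overwritten, and a missing file is always downloaded.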

Once the CSV file is revised, I can run the following command to only download the files that don’t already exist in the destination path:

Import-csv c:\scripts\tools.csv | get-mytool -path E:\Tools -sysinternals -noclobber
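The pipeline in that command works because the CSV column names match the function’s URI and Product parameters, which accept pipeline input by property name. Here is a small sketch of that binding using a hypothetical stand-in function, Show-ToolRow, and made-up rows, so nothing is downloaded.

```powershell
# Hypothetical stand-in with the same pipeline-bound parameters as Get-MyTool
Function Show-ToolRow {
    [cmdletbinding()]
    Param(
        [Parameter(Mandatory=$True,ValueFromPipelineByPropertyName=$True)]
        [string]$URI,
        [Parameter(Mandatory=$True,ValueFromPipelineByPropertyName=$True)]
        [string]$Product
    )
    Process {
        # runs once per CSV row, just like the Process block in Get-MyTool
        "$Product <- $URI"
    }
}

# placeholder rows standing in for Import-Csv c:\scripts\tools.csv
$rows = @'
product,uri
ToolA,http://example.com/a.exe
ToolB,http://example.com/b.zip
'@ | ConvertFrom-Csv

$result = $rows | Show-ToolRow
$result
```

If a column were named something else, such as url, the mandatory URI parameter would not bind and PowerShell would raise a binding error for each row.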

Or, you can run the following command to download tools directly:

get-mytool -path e:\tools -product Defraggler -uri http://files.snapfiles.com/localdl936/dfsetup217.zip

Of course, I’ve also updated the function’s comment-based help.

Unfortunately, the Get-MyTool function can only process a single download at a time. That’s where I like using a workflow so that I can take advantage of parallelism. I added the same NoClobber feature to the workflow script, and I also added a parameter where you can specify the CSV file path. Here’s my updated workflow.

#requires -version 4.0

#create a USB tool drive using a PowerShell Workflow

Workflow Get-MyToolsWF {

<#

.Synopsis

Download tools from the Internet.

.Description

This PowerShell workflow will download a set of troubleshooting and diagnostic tools from the Internet. The path will typically be a USB thumbdrive. If you use the -Sysinternals parameter, then all of the SysInternals utilities will also be downloaded to a subfolder called Sysinternals under the given path.

The workflow uses a parameter to point to a CSV file with download information. The default is a file called tools.csv in the current directory. The CSV should look like this:

product,uri

HouseCallx64,http://go.trendmicro.com/housecall8/HousecallLauncher64.exe

HouseCallx32,http://go.trendmicro.com/housecall8/HousecallLauncher.exe

"RootKit Buster x32",http://files.trendmicro.com/products/rootkitbuster/RootkitBusterV5.0-1180.exe

The product should be a name or description of the tool. The URI is a direct download link. The link must end in the executable file name (or zip or msi). The file will be downloaded and saved locally using the last part of the URI.

Use -Noclobber to skip any existing files. You can limit the number of concurrent downloads with the ThrottleLimit parameter which has a default value of 5.

.Example

PS C:\> Get-MyToolsWF -path G:\ -sysinternals

Download all tools from the web and the Sysinternals utilities. Save to drive G:\.

.Notes

Last Updated: January 19, 2015

Version : 2.0

Learn more about PowerShell:

Essential PowerShell Learning Resources

****************************************************************

* DO NOT USE IN A PRODUCTION ENVIRONMENT UNTIL YOU HAVE TESTED *

* THOROUGHLY IN A LAB ENVIRONMENT. USE AT YOUR OWN RISK. IF *

* YOU DO NOT UNDERSTAND WHAT THIS SCRIPT DOES OR HOW IT WORKS, *

* DO NOT USE IT OUTSIDE OF A SECURE, TEST SETTING. *

****************************************************************

.Link

Invoke-WebRequest

#>

[cmdletbinding()]

Param(

[Parameter(Position=0,Mandatory=$True,HelpMessage="Enter the download path")]

[ValidateScript({Test-Path $_})]

[string]$Path,

[string]$CSVPath = ".\tools.csv",

[switch]$Sysinternals,

[int]$ThrottleLimit=5,

[switch]$NoClobber

)

Write-Verbose -Message "$(Get-Date) Starting $($workflowcommandname)"

Function _download {

[cmdletbinding()]

param([string]$Uri,[string]$Path,[switch]$NoClobber)

$out = Join-path -path $path -child (split-path $uri -Leaf)

#check if file exists if using NoClobber

if ($NoClobber -AND (Test-Path -Path $Out)) {

Write-Verbose "Skipping $out because it already exists"

#bail out

Return

}

Write-Verbose -Message "Downloading $uri to $out"

#hash table of parameters to splat to Invoke-Webrequest

$paramHash = @{

UseBasicParsing = $True

Uri = $uri

OutFile = $out

DisableKeepAlive = $True

ErrorAction = "Stop"

}

Try {

Invoke-WebRequest @paramHash

Get-Item -Path $out

}

Catch {

Write-Warning -Message "Failed to download $uri. $($_.exception.message)"

}

} #end function

Sequence {

#Import download data from the CSV file

Try {

$workflow:download = Import-CSV -Path $CSVPath -ErrorAction Stop

}

Catch {

Throw $_

}

} #end sequence

Sequence {

foreach -parallel -throttle $ThrottleLimit ($item in $download) {

Write-Verbose -message "Downloading $($item.product)"

_download -Uri $item.uri -Path $path -NoClobber:$NoClobber

} #foreach item

} #end sequence

Sequence {

#region SysInternals

if ($Sysinternals) {

#test if subfolder exists and create it if missing

$subfolder = Join-Path -Path $path -ChildPath Sysinternals

if (-Not (Test-Path -Path $subfolder)) {

New-item -ItemType Directory -Path $subfolder

}

#get the page

$sysint = Invoke-WebRequest "http://live.sysinternals.com/Tools" -DisableKeepAlive -UseBasicParsing

#get the links

$links = $sysint.links | Select -Skip 1

foreach -parallel -throttle $ThrottleLimit ($item in $links) {

#download files to subfolder

$uri = "http://live.sysinternals.com$($item.href)"

Write-Verbose -message "Downloading $uri"

_download -Uri $uri -Path $subfolder -NoClobber:$NoClobber

} #foreach

} #if SysInternals

} #end sequence

Write-verbose -message "$(Get-Date) Finished $($workflowcommandname)"

} #end workflow

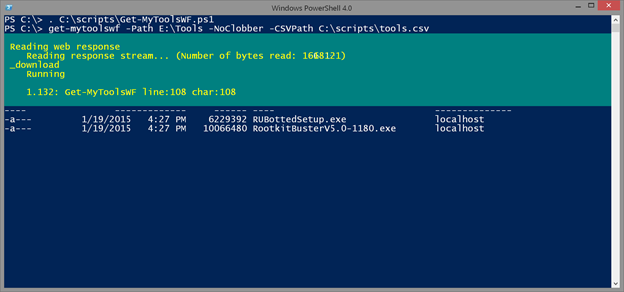

Here’s how you might use it:

get-mytoolswf -Path E:\Tools -NoClobber -CSVPath C:\scripts\tools.csv

This runs much faster because it downloads up to five files in parallel by default, although you can change that with the ThrottleLimit parameter. In terms of overall performance, I believe the workflow is the best choice. I can’t think of much that I need to add to the function or workflow in terms of features. Now the only thing I need to keep up to date is the CSV. In a future article, I’ll suggest one way to do just that.