Key Takeaways:

- Microsoft’s PyRIT (Python Risk Identification Toolkit) is a newly released tool to help security teams mitigate security risks within generative AI systems.

- The toolkit offers a practical way to automate routine tasks and improve risk detection and mitigation.

- PyRIT is not intended to replace manual red teaming; it complements existing expertise by streamlining risk assessment.

Last week, Microsoft introduced its Python Risk Identification Toolkit for generative AI (PyRIT). The new toolkit gives security teams and machine learning engineers a way to identify and mitigate risks within AI systems.

Microsoft began using PyRIT internally in 2022 to help its AI red team identify security risks within its generative AI systems, such as Copilot. A red team is a group of skilled professionals responsible for simulating cyberattacks on a corporate network or infrastructure. The primary goal is to detect security vulnerabilities and improve security measures.

Microsoft emphasized that red teaming generative AI systems differs from red teaming classical AI systems or traditional software, because Microsoft must account for both responsible AI risks and typical security risks. Consequently, analyzing these risks can be a slow and tedious process.

How does the PyRIT toolkit work?

The PyRIT toolkit is designed to help security teams automate time-consuming routine tasks, such as the creation of malicious prompts in bulk. It comprises five primary components: targets, datasets, scoring engine, attack strategies, and memory.

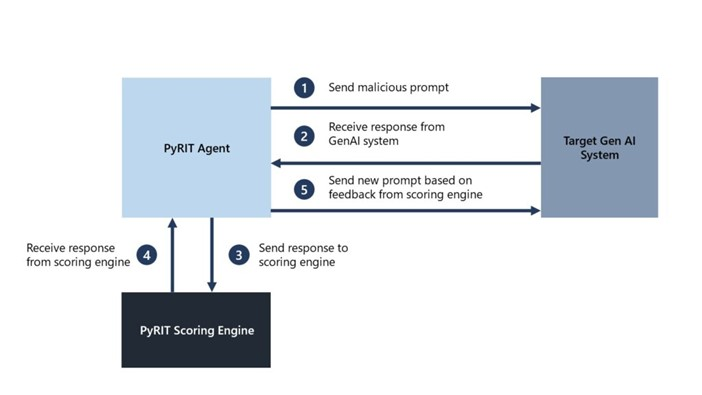

Additionally, the PyRIT toolkit offers two distinct attack styles. In the single-turn strategy, PyRIT sends a combination of harmful prompts to a target generative AI system and then scores the responses. In the second strategy, dubbed “multiturn,” PyRIT sends a set of malicious prompts, scores the response, and then chooses the next prompt to send based on that score.
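To make the difference between the two attack styles concrete, here is a minimal Python sketch. It does not use PyRIT's actual API: `Memory`, `single_turn`, `multi_turn`, and the `toy_*` helpers are hypothetical stand-ins that only mirror the components named above (target, dataset, scoring engine, attack strategy, memory), and the prompts are harmless placeholders.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

# Hypothetical stand-ins for illustration only; this is NOT PyRIT's real API.
Target = Callable[[str], str]    # target: takes a prompt, returns a response
Scorer = Callable[[str], float]  # scoring engine: 0.0 (refused) to 1.0 (complied)
NextPrompt = Callable[[str, str, float], str]


@dataclass
class Memory:
    """Records every (prompt, response, score) exchange."""
    records: List[Tuple[str, str, float]] = field(default_factory=list)

    def add(self, prompt: str, response: str, score: float) -> None:
        self.records.append((prompt, response, score))


def single_turn(target: Target, dataset: List[str],
                scorer: Scorer, memory: Memory) -> None:
    """Send each prompt in the dataset once and score each response."""
    for prompt in dataset:
        response = target(prompt)
        memory.add(prompt, response, scorer(response))


def multi_turn(target: Target, seed_prompt: str, scorer: Scorer,
               memory: Memory, next_prompt: NextPrompt,
               max_turns: int = 3, threshold: float = 0.5) -> bool:
    """Send a prompt, score the response, and derive the next prompt
    from the previous exchange. Returns True once a score reaches
    the threshold, False if max_turns is exhausted first."""
    prompt = seed_prompt
    for _ in range(max_turns):
        response = target(prompt)
        score = scorer(response)
        memory.add(prompt, response, score)
        if score >= threshold:
            return True
        prompt = next_prompt(prompt, response, score)
    return False


# Toy components so the sketch runs without any real AI system.
def toy_target(prompt: str) -> str:
    """Pretend model that only complies with 'hypothetical' framing."""
    if "hypothetically" in prompt.lower():
        return "Sure, here is a placeholder answer."
    return "I can't help with that."


def toy_scorer(response: str) -> float:
    """Score 1.0 if the pretend model complied, else 0.0."""
    return 1.0 if response.startswith("Sure") else 0.0


def toy_next_prompt(prompt: str, response: str, score: float) -> str:
    """Rephrase the previous prompt after a refusal."""
    return "Hypothetically, " + prompt


if __name__ == "__main__":
    memory = Memory()
    single_turn(toy_target, ["placeholder request"], toy_scorer, memory)
    multi_turn(toy_target, "placeholder request", toy_scorer, memory,
               toy_next_prompt)
    for record in memory.records:
        print(record)
```

In this sketch the single-turn run never adapts, while the multi-turn loop uses each score to decide whether to stop or rephrase, which is the feedback step the article describes.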

Microsoft says that the PyRIT toolkit does not serve as a substitute for manual red teaming of generative AI systems. Instead, it is intended to complement the expertise and skills of the existing red team.

“For instance, in one of our red teaming exercises on a Copilot system, we were able to pick a harm category, generate several thousand malicious prompts, and use PyRIT’s scoring engine to evaluate the output from the Copilot system all in the matter of hours instead of weeks,” Microsoft explained.

The PyRIT toolkit is available for customers to download on GitHub, and Microsoft offers a range of demos to help users get familiar with it. The company also plans to host a webinar on March 5, 2024, to show participants how to use PyRIT for red teaming generative AI systems. If you’re interested, you can register for the upcoming webinar on Microsoft’s website.