Key takeaways:

- A 38TB Azure storage account containing private data was accidentally exposed by a Microsoft employee through a misconfigured link in a GitHub repository.

- The breach exposed sensitive information, including passwords, secret keys, and internal messages, emphasizing the critical need for robust data security measures.

- Microsoft advised organizations to enhance monitoring of Shared Access Signature (SAS) tokens and follow best practices to minimize the risk of unauthorized access.

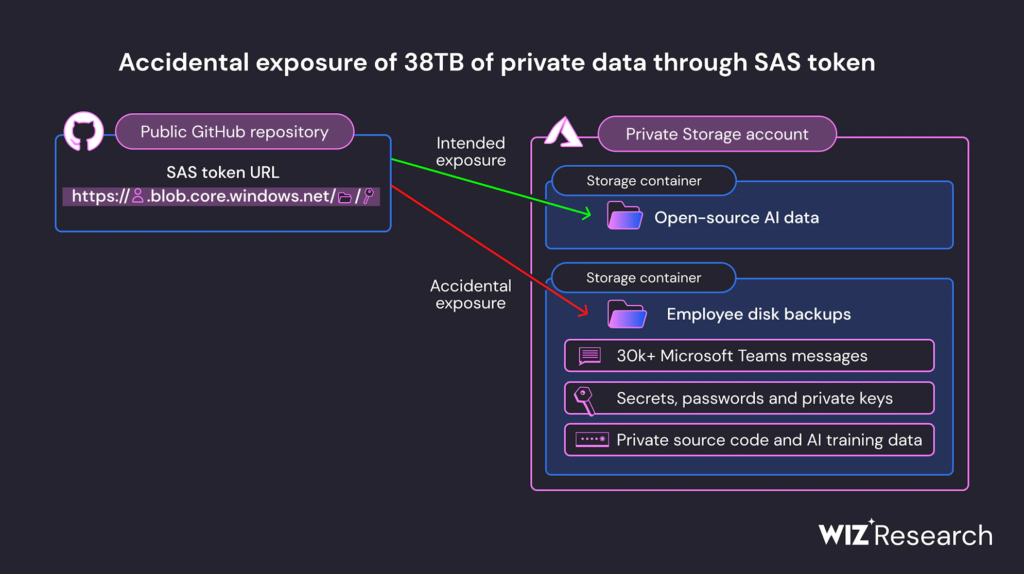

Cybersecurity researchers have uncovered 38TB of private data inadvertently exposed by a Microsoft employee. The leak, traced to a misconfigured link in a GitHub repository belonging to Microsoft's AI research team, included sensitive information such as passwords, secret keys, and internal messages.

In a report published this week, Wiz researchers explained that they found the exposure while scanning the internet for misconfigured storage containers. A GitHub repository belonging to Microsoft's AI research team contained a URL with an Account SAS token that granted access to the entire Azure storage account rather than only the files meant to be shared. A Shared Access Signature (SAS) token is a signed set of URL query parameters that grants specific access to Azure Storage resources until it expires.
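To make the definition concrete, here is a minimal Python sketch of how an Account SAS token is assembled: the allowed permissions, services, resource types and validity window are joined into a "string-to-sign," signed with HMAC-SHA256 using the storage account key, and appended to a URL as query parameters. The field layout is modeled on Microsoft's documented Account SAS format for service version 2020-12-06 as best we recall and may not match the current specification exactly; the function name `build_account_sas` and all example values are hypothetical. Real code should use the Azure SDK (for example, `generate_account_sas` in the `azure-storage-blob` package) instead of hand-rolled signing.

```python
"""Simplified model of how an Azure Account SAS token is built (illustrative).

Assumption: the string-to-sign layout follows Microsoft's documented Account
SAS format for service version 2020-12-06 and later, as best we recall.
build_account_sas is a hypothetical helper, not an Azure SDK function.
"""
import base64
import hashlib
import hmac
from urllib.parse import urlencode


def build_account_sas(account_name: str, account_key_b64: str, permissions: str,
                      services: str, resource_types: str, start: str, expiry: str,
                      version: str = "2020-12-06", protocol: str = "https") -> str:
    """Return an Account SAS query string signed with the account key."""
    # Every field the storage service later recomputes, each followed by "\n".
    string_to_sign = "\n".join([
        account_name,
        permissions,     # sp: e.g. "r" (read only) vs "rwdl" (read/write/delete/list)
        services,        # ss: b=blob, f=file, q=queue, t=table
        resource_types,  # srt: s=service, c=container, o=object
        start,           # st: start of the validity window
        expiry,          # se: end of the validity window
        "",              # sip: optional allowed IP range, empty here
        protocol,        # spr: allowed protocol(s)
        version,         # sv: storage service version
        "",              # ses: optional encryption scope, empty here
    ]) + "\n"
    key = base64.b64decode(account_key_b64)
    digest = hmac.new(key, string_to_sign.encode("utf-8"), hashlib.sha256).digest()
    signature = base64.b64encode(digest).decode("ascii")
    # The token is only query parameters: whoever holds it gets the access it encodes.
    return urlencode({
        "sv": version, "ss": services, "srt": resource_types, "sp": permissions,
        "st": start, "se": expiry, "spr": protocol, "sig": signature,
    })


if __name__ == "__main__":
    # A fake key and made-up account name, for demonstration only.
    demo_key = base64.b64encode(b"demo-key-not-real").decode("ascii")
    token = build_account_sas("exampleaccount", demo_key, "r", "b", "o",
                              "2023-06-22T00:00:00Z", "2023-06-22T01:00:00Z")
    print("https://exampleaccount.blob.core.windows.net/?" + token)
```

Because the signature is derived from the account key rather than recorded anywhere, Azure keeps no list of issued Account SAS tokens to review, which is one reason they are hard to track; invalidating one before it expires generally means rotating the key that signed it.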

According to Wiz, the misconfiguration provided access to full backups of two Microsoft employees' workstations. Those backups contained sensitive data such as secret keys, passwords to Microsoft services, and over 30,000 internal Microsoft Teams messages. Wiz notified the Microsoft Security Response Center of the exposure on June 22, and Microsoft revoked the over-permissioned Account SAS token on June 24.

Microsoft details SAS security recommendations to prevent abuse

Microsoft's Security Response Center said there is no evidence that customer data was exposed. However, Wiz researchers found that the over-permissioned Account SAS token granted "full control" permissions rather than read-only access, meaning an attacker could have deleted or overwritten existing files, not just read them.

“Due to a lack of monitoring and governance, SAS tokens pose a security risk, and their usage should be as limited as possible. These tokens are very hard to track, as Microsoft does not provide a centralized way to manage them within the Azure portal. In addition, these tokens can be configured to last effectively forever, with no upper limit on their expiry time. Therefore, using Account SAS tokens for external sharing is unsafe and should be avoided,” Wiz researchers wrote.

Microsoft has expanded GitHub's secret scanning service to detect any SAS token with overly permissive privileges. The company also detailed several SAS best practices to reduce the risk of unauthorized access, including keeping SAS tokens narrowly scoped and short-lived, handling them carefully, and granting only the permissions an application requires. It also recommended designing a revocation plan and applying the principle of least privilege.
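As a rough illustration of the monitoring and least-privilege advice, the hypothetical helper below inspects a SAS URL's query string and flags three warning signs raised in this incident: an Account SAS (recognizable by its `srt` parameter), permissions beyond read and list, and an expiry further out than a chosen maximum. The function name `audit_sas_url`, the read/list allowlist and the seven-day threshold are illustrative assumptions, not Microsoft guidance, and this is not GitHub's or Microsoft's actual detection logic.

```python
"""Hypothetical audit helper for Azure Storage SAS URLs (illustrative only).

Assumptions: Azure SAS tokens carry the query parameters sig, srt, sp and se;
the read/list allowlist and the 7-day maximum lifetime are example policy
choices, not Microsoft guidance.
"""
from datetime import datetime, timedelta, timezone
from typing import List, Optional
from urllib.parse import parse_qs, urlparse

READ_ONLY_PERMISSIONS = {"r", "l"}  # read and list


def audit_sas_url(url: str,
                  max_lifetime: timedelta = timedelta(days=7),
                  now: Optional[datetime] = None) -> List[str]:
    """Return human-readable warnings for a SAS URL; an empty list means none."""
    # parse_qs percent-decodes values; keep the first value of each parameter.
    params = {k: v[0] for k, v in parse_qs(urlparse(url).query).items()}
    if "sig" not in params:
        return ["no SAS signature (sig) found"]

    findings = []
    # srt (signed resource types) only appears on Account SAS tokens.
    if "srt" in params:
        findings.append("Account SAS: scoped to a whole storage account")

    # Any permission letter other than read/list can add, change or delete data.
    extra = sorted(set(params.get("sp", "")) - READ_ONLY_PERMISSIONS)
    if extra:
        findings.append("permissions beyond read/list: " + "".join(extra))

    expiry_raw = params.get("se")
    if expiry_raw is None:
        # A service SAS may take its expiry from a stored access policy instead.
        findings.append("no expiry (se) in URL")
    else:
        # fromisoformat() before Python 3.11 does not accept a trailing "Z".
        expiry = datetime.fromisoformat(expiry_raw.replace("Z", "+00:00"))
        if expiry.tzinfo is None:  # date-only values such as "2099-01-01"
            expiry = expiry.replace(tzinfo=timezone.utc)
        now = now or datetime.now(timezone.utc)
        if expiry - now > max_lifetime:
            findings.append(f"expires {expiry.date()}, more than "
                            f"{max_lifetime.days} days from now")
    return findings


if __name__ == "__main__":
    # Made-up account name and fake signature, for demonstration only.
    broad = ("https://exampleaccount.blob.core.windows.net/?sv=2020-08-04"
             "&ss=b&srt=sco&sp=rwdl&se=2099-01-01T00:00:00Z&sig=fake")
    for warning in audit_sas_url(broad):
        print(warning)
```

Checking the expiry against a fixed maximum reflects the short-lived-token advice, while the permission and `srt` checks approximate least privilege; a production scanner would also need to handle malformed dates and the full set of Azure SAS variants.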