Microsoft Leads the Way in Responsible AI Integration and ESG Compliance

Artificial intelligence (AI) has rapidly evolved from a sci-fi fantasy to a part of the everyday vernacular for individuals and businesses alike. This blog post examines how AI applies to all aspects of ESG compliance and how companies like Microsoft show what a methodical approach to integrating responsible AI looks like.

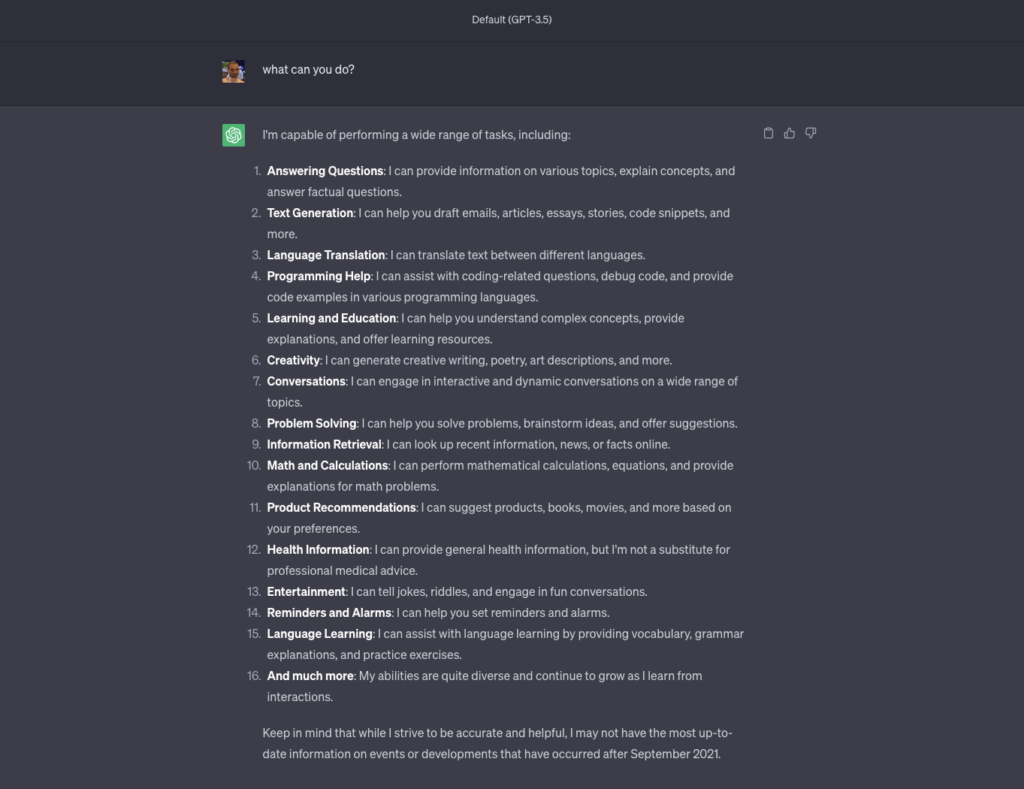

Generative AI in particular has gained a lot of traction in the last year alone, most notably ChatGPT, developed by Microsoft-backed OpenAI. Persuasively human-like conversations are now commonplace, and AI-generated text and images can be hard to differentiate from genuine human work.

Behind the scenes, the integration of AI, a technology known for its deep learning capabilities, has seen businesses thrive and achieve productivity improvements. AI products, at a glance, can aggregate large datasets, analyze metrics, generate swathes of assets, and ease administrative workloads for businesses across all sectors. However, the advancement of this technology has led to rumblings of widespread disruption and to questions about the principles of responsible AI adoption. In the main, the concerns center on trust in AI: ethics, transparency and controlled input.

Potential barriers of AI

It was only recently that the EU agreed on new laws and top-level goals for AI regulation, citing human protection and reassurance as its top priorities. However, even with regulation still largely absent, AI capabilities continue to expand at a rapid pace. Even OpenAI, the company behind ChatGPT, the de facto face of generative AI, has been outspoken about the need for regulation.

Organizations across sectors are walking a tightrope trying to supervise AI’s influence within their firms, at a time when they’re under greater scrutiny to meet Environmental, Social, and Governance (ESG) goals. Rightfully so, as business leaders now recognize the need to prioritize people and the planet ahead of profits, as evidenced in tech eCommerce retailer MPB’s Impact Report. Ongoing development and responsible deployment of AI systems, therefore, remain crucial.

More broadly speaking, organizations are facing increased pressure to adopt new strategies and processes that help them meet (and exceed) their ESG targets. Companies are harnessing the power of AI by recognizing its limitations and using them as motivators to spearhead responsible AI principles. This raises a question: can a responsible AI strategy be an asset to companies and workflows?

As ethical conversations around AI have gotten progressively louder, seemingly parallel to how AI has evolved, it’s up to leaders and policymakers to pioneer its use in business.

The surge of AI predictive models

Upon its launch, ChatGPT demonstrated a level of language manipulation and proficiency that many had not seen up to that point. Back then and still to this day, numerous business leaders wax lyrical about ChatGPT’s ability to deliver passable content at scale. Approximately a year after its launch, the underlying generative AI technology is advancing rapidly, with other tools, programs and solutions entering a market poised to reach a $207 billion valuation by 2030.

Nowadays, AI is firmly ingrained in a plethora of critical business functions like accounting, content creation, document generation, and metric analysis, among others. Breakthroughs in data accuracy and deep learning refinement have paved the way for this. Now, many organizations are entrusting AI and machine learning (ML) algorithms with high-stakes roles, freeing their teams from manual, arduous administrative duties so they can focus on higher-priority tasks. As such, AI is now seen as a valuable productivity-enhancing asset that augments teams.

However, the conversations around its regulation and supervision have only grown in intensity. Data scientists predict that AI, if given continued investment and innovation, risks significant disruption of jobs, supply chains, and markets. Companies with prominent influence in these sectors must manage these changes responsibly, in line with their ESG commitments.

Companies are being held accountable for reporting and disclosing their ESG risks. The EU, for example, is tightening its regulations through the CSRD (Corporate Sustainability Reporting Directive), which will be phased in from 2024. Essentially, this change signals new requirements for companies operating in its member states to report activity in line with its ESG framework.

A typical report, as an example, will require companies to be more forthcoming with social and governance measures that AI directly impacts, such as employee rights. The U.S. is seemingly not far behind, with the SEC (Securities and Exchange Commission) proposing rules that make sustainability reporting obligatory.

Considering the purpose of ESG reporting, coupled with evolving ethical concerns about AI, it’s easy to spot a connection between the two issues. Greater autonomy necessitates greater responsibility. As AI is entrusted to perform more roles in business, its programming priorities influence ESG outcomes, for better or worse. Leaders must consciously establish ethics and policies within AI systems and prepare to navigate the consequences of rapid deployment.

Responsible AI

Let’s look at how organizations should implement a responsible AI strategy.

Environmental: AI’s energy and materials footprint

On the one hand, AI has the power to inform environmental research at scale, such as identifying climate patterns, optimizing energy consumption based on usage patterns, and accurately predicting when equipment is likely to fail.

On the other hand, training complex AI models requires substantial computing resources, energy, and human supervision. Companies must take care to develop solutions that don’t come at an unnecessary environmental expense. Microsoft itself has been forthcoming about its pledge to be carbon-negative and entirely waste-free by 2030, while also committing to build a ‘Planetary Computer’ by 2025.

AI also enables the optimization of various entry points throughout any supply chain. AI technology can be deployed to help suppliers and buyers curb emissions, which, in time, should prove invaluable as companies try to reach net zero targets.

Responsible AI minimizes the need for unnecessary manual and labor-intensive work, instead delegating it to intelligent, bespoke programs that work within the confines of their intended datasets. If companies can leverage technology to automate processes that eat up hours of valuable employee time, without any worry that the technology will stray from its intended purpose, this will have a profound social impact.

Fundamentally, leaders cannot ignore calls for stringent AI regulation, nor AI’s potential to automate certain jobs out of existence entirely. Workers’ concerns need to be addressed transparently, and companies that need to leverage AI solutions at scale must manage transitions responsibly.

In turn, the proliferation of end-to-end AI can open the door to new types of roles for affected workers, so their expectations need to be met. Microsoft categorizes responsible AI as going hand in hand with other ESG priorities around ‘ethics and integrity’, with its most recent Sustainability Report demonstrating clear progress in this field.

Unconscious gender biases in algorithms need to be managed carefully so that unfairness and misinformation are not perpetuated. Responsible AI best practices will require protecting workers from displacement and ensuring equal access to opportunities in a market that’s been affected, rather than one that can no longer accommodate their valuable experience and skills.

Governance: New rules for new tech

AI technology has garnered controversy in recent months over copyright violations, which poses corporate governance challenges. Any business that adopts AI must consider the potential legal, privacy, and regulatory consequences, including industry fines, of using AI content improperly or neglecting to disclose its intended use. In highly regulated industries like banking and finance, some firms may find that it’s more prudent to restrict access to generative AI technology for their workers.

Recent high-profile cases have shown generative AI’s limitations in full force. Alphabet, for example, suffered a $100 billion hit to its market value when Google’s generative AI tool, Bard, produced inaccuracies during a demonstration. This ultimately resulted in a tightening of controls over its responses.

In the near future, responsible governance of AI will likely see organizations establish their own ethics and social teams to ensure the technology’s inclusiveness and reliability. External oversight and independent reviews are going to prove invaluable as this technology grows. Microsoft has pledged responsible innovation in data governance to accelerate continued AI development at the company while ensuring transparency across the board.