Understanding Character Encoding in PowerShell

Working with strings is core to many operations in PowerShell, and with strings come the many encodings used to store them. Learning to understand and manipulate those encodings makes it much easier to work with documents in different languages and formats. Read on to learn how to work effectively with character encodings in PowerShell.

What are Character Encodings?

First, it's important to understand what character encodings are. A variety of character-encoding standards are used around the world. Each one is a method of representing individual characters and their traits as bytes within a file, which allows those characters to be rendered correctly by different operating systems. Internally, PowerShell uses Unicode exclusively for character and string manipulation. Windows itself supports both Unicode and traditional character sets such as Windows code pages. Code pages, an older standard, use 8-bit values or combinations of 8-bit values to represent the characters used in a specific language or geographical region.
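
As a small illustration, the sketch below (assuming PowerShell 7, although it should also run in Windows PowerShell 5.1) takes one string and asks several .NET encodings how many bytes they would need to store it. The in-memory string never changes; only its encoded byte form does.

```powershell
# "café" built from a code point so the script file's own encoding does not matter
$text = "caf$([char]0x00E9)"

# In memory, a PowerShell string is a .NET System.String made of UTF-16 code units
$text.GetType().FullName   # System.String
$text.Length               # 4 UTF-16 code units

# The same four characters need a different number of bytes in each encoding
[System.Text.Encoding]::UTF8.GetByteCount($text)      # 5  (é takes 2 bytes)
[System.Text.Encoding]::Unicode.GetByteCount($text)   # 8  (2 bytes per character)
[System.Text.Encoding]::UTF32.GetByteCount($text)     # 16 (4 bytes per character)
```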

What is the BOM?

There is one other oddity to be aware of with character encodings: the BOM (byte order mark). The BOM is a Unicode signature placed at the start of a file or text stream that indicates which Unicode encoding the text uses and, for UTF-16 and UTF-32, which byte order. The complication is that it is not used consistently across operating systems, commands, and PowerShell versions!
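
To see what a BOM actually looks like, the following sketch prints the preamble (the .NET name for the BOM) that several built-in .NET encoding objects would write. The labels are descriptive names chosen for this example only.

```powershell
# A few built-in .NET encodings; each reports its BOM through GetPreamble()
$encodings = [ordered]@{
    'UTF-8 with BOM'    = [System.Text.Encoding]::UTF8
    'UTF-8 without BOM' = [System.Text.UTF8Encoding]::new($false)
    'UTF-16 LE'         = [System.Text.Encoding]::Unicode
    'UTF-16 BE'         = [System.Text.Encoding]::BigEndianUnicode
    'UTF-32 LE'         = [System.Text.Encoding]::UTF32
}

foreach ($entry in $encodings.GetEnumerator()) {
    # Render the preamble bytes as hex, for example "EF BB BF"
    $hex = ($entry.Value.GetPreamble() | ForEach-Object { $_.ToString('X2') }) -join ' '
    if (-not $hex) { $hex = '(none)' }
    '{0,-18} {1}' -f $entry.Key, $hex
}
```

The UTF-16 and UTF-32 preambles differ only in byte order (FF FE versus FE FF), which is exactly what a reader uses to tell them apart.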

- Windows PowerShell always writes a BOM for Unicode encodings, except UTF7.
- PowerShell Core and PowerShell 7 default to utf8NoBOM for all text output.
- Non-Windows systems generally do not support the BOM.

As you can tell, BOM support is inconsistent. For the best overall compatibility, especially if you exclusively use newer versions of PowerShell, always use utf8NoBOM and you will avoid cross-platform issues.
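
Assuming PowerShell 6 or later (the utf8BOM and utf8NoBOM values do not exist in Windows PowerShell), the sketch below writes the same text with and without a BOM and checks the first three bytes of each file. Test-Utf8Bom is a hypothetical helper defined here for illustration, not a built-in cmdlet, and the temporary file names are arbitrary.

```powershell
# Assumes PowerShell 6+; utf8BOM and utf8NoBOM are not valid -Encoding values in Windows PowerShell
$tempDir    = [System.IO.Path]::GetTempPath()
$withBom    = Join-Path $tempDir 'bom-demo-with.txt'
$withoutBom = Join-Path $tempDir 'bom-demo-without.txt'

$text = "h$([char]0x00E9)llo"   # "héllo"
Set-Content -Path $withBom    -Value $text -Encoding utf8BOM   -NoNewline
Set-Content -Path $withoutBom -Value $text -Encoding utf8NoBOM -NoNewline

# Hypothetical helper: returns $true when a file starts with the UTF-8 BOM (EF BB BF)
function Test-Utf8Bom {
    param([Parameter(Mandatory)][string]$Path)
    $bytes = [System.IO.File]::ReadAllBytes($Path)
    return ($bytes.Length -ge 3 -and
            $bytes[0] -eq 0xEF -and $bytes[1] -eq 0xBB -and $bytes[2] -eq 0xBF)
}

Test-Utf8Bom -Path $withBom      # True
Test-Utf8Bom -Path $withoutBom   # False
```

The BOM costs only three bytes, but tools that do not expect it may treat those bytes as part of the first line, which is why utf8NoBOM is the safer default.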

Character Encoding in PowerShell

PowerShell Core and PowerShell 7 support the following values for the -Encoding parameter:

- ascii: Uses the encoding for the ASCII (7-bit) character set.
- bigendianunicode: Encodes in UTF-16 format using the big-endian byte order.
- oem: Uses the default encoding for MS-DOS and console programs.
- unicode: Encodes in UTF-16 format using the little-endian byte order.
- utf7: Encodes in UTF-7 format.
- utf8: Encodes in UTF-8 format (no BOM).
- utf8BOM: Encodes in UTF-8 format with Byte Order Mark (BOM).
- utf8NoBOM: Encodes in UTF-8 format without Byte Order Mark (BOM).
- utf32: Encodes in UTF-32 format.
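
A quick way to see how these values differ is to write a single character with each one and dump the raw bytes. This is a sketch that assumes PowerShell 7 and uses temporary file names invented for the example.

```powershell
# Assumes PowerShell 7; writes the letter "A" with several -Encoding values and shows the raw bytes
$dir = [System.IO.Path]::GetTempPath()

foreach ($enc in 'ascii', 'utf8', 'utf8BOM', 'unicode', 'bigendianunicode', 'utf32') {
    $path = Join-Path $dir "encoding-demo-$enc.txt"
    # -NoNewline keeps the line terminator out of the byte dump
    Set-Content -Path $path -Value 'A' -Encoding $enc -NoNewline
    $hex = ([System.IO.File]::ReadAllBytes($path) | ForEach-Object { $_.ToString('X2') }) -join ' '
    '{0,-17} {1}' -f $enc, $hex
}
```

Expect ascii and utf8 to produce just 41, while utf8BOM, unicode, and bigendianunicode put their BOM (EF BB BF, FF FE, and FE FF) in front of the character. The two UTF-16 variants also show the byte order difference directly: 41 00 versus 00 41.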

You may notice a few differences from Windows PowerShell's encoding support. The following values are no longer in the list of allowed encodings:

- BigEndianUTF32: Uses the UTF-32 encoding with big-endian byte order.
- Byte: Encoded characters as a byte sequence. This has been replaced by the -AsByteStream parameter on Get-Content and Set-Content.
- Default: Corresponded to the system's active code page, typically ANSI.
- String: Same as Unicode.
- Unknown: Same as Unicode.
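
For the Byte value in particular, the sketch below shows its PowerShell 6+ replacement, -AsByteStream, on both Set-Content and Get-Content. It assumes PowerShell 6 or later; the Windows PowerShell equivalent appears only as a comment, and the file name is arbitrary.

```powershell
# Assumes PowerShell 6 or later, where -AsByteStream replaces -Encoding Byte
$path = Join-Path ([System.IO.Path]::GetTempPath()) 'bytestream-demo.txt'

# Windows PowerShell: Set-Content -Path $path -Value $bytes -Encoding Byte
# PowerShell 6+:      the same write uses -AsByteStream instead
[byte[]]$bytes = 0x48, 0x69          # the ASCII codes for "H" and "i"
Set-Content -Path $path -Value $bytes -AsByteStream

# Reading as a byte stream returns the raw values; reading as text decodes them
$readBytes = @(Get-Content -Path $path -AsByteStream)
$readText  = Get-Content -Path $path -Raw

$readBytes   # 72, 105
$readText    # Hi
```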

Starting with PowerShell Core 6.2, you can also pass either the numeric ID or the string name of any registered code page, such as 1251 or windows-1251.
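
As a sketch of this (assuming PowerShell 6.2 or later), the snippet below writes the Russian word "Привет" using the numeric code page ID and reads it back using the string name of the same code page. In windows-1251 each Cyrillic letter takes a single byte.

```powershell
# Assumes PowerShell 6.2+; "Привет" is built from code points to avoid script-encoding issues
$text = -join ([char[]](0x041F, 0x0440, 0x0438, 0x0432, 0x0435, 0x0442))
$path = Join-Path ([System.IO.Path]::GetTempPath()) 'codepage-demo.txt'

# Write using the numeric code page ID...
Set-Content -Path $path -Value $text -Encoding 1251 -NoNewline

# ...and read back using the registered name of the same code page
$roundTrip = Get-Content -Path $path -Encoding 'windows-1251' -Raw

$bytes = [System.IO.File]::ReadAllBytes($path)
$bytes.Length   # 6: one byte per Cyrillic letter
$roundTrip      # Привет
```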

Character Encoding in Practice

Not all commands support character encodings, and those that do may use different defaults. The following commands support the -Encoding parameter:

- Microsoft.PowerShell.Management
  - Add-Content
  - Get-Content
  - Set-Content
- Microsoft.PowerShell.Utility
  - Export-Clixml
  - Export-Csv
  - Export-PSSession
  - Format-Hex
  - Import-Csv
  - Out-File
  - Select-String
  - Send-MailMessage
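
Which commands expose -Encoding can differ between PowerShell versions, so rather than relying on a fixed list you can ask PowerShell itself. The sketch below assumes only that the two modules are available, as they are in a default installation.

```powershell
# Make sure both modules are loaded so Get-Command can inspect their parameters
Import-Module -Name Microsoft.PowerShell.Management, Microsoft.PowerShell.Utility

# List every command in those modules that has an -Encoding parameter
$encodingCommands = Get-Command -Module Microsoft.PowerShell.Management, Microsoft.PowerShell.Utility -ParameterName Encoding |
    Sort-Object -Property Name |
    Select-Object -ExpandProperty Name

$encodingCommands
```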

Let's demonstrate how this works with an example where we need to import an incoming CSV file. The example shows what happens when you import a file using the wrong encoding. Since the default for recent versions of PowerShell is utf8NoBOM, most characters import correctly without specifying an encoding.

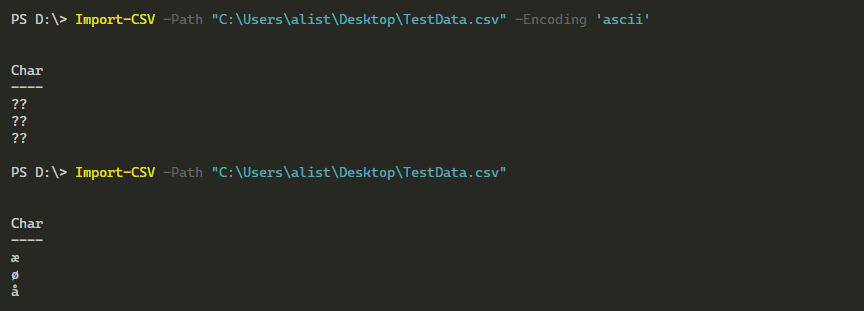

```powershell
# Import the file and force the ascii encoding
Import-Csv -Path "C:\Users\alist\Desktop\TestData.csv" -Encoding 'ascii'

# Import the file using the default encoding of utf8NoBOM
Import-Csv -Path "C:\Users\alist\Desktop\TestData.csv"
```

Because the non-ASCII characters in the file cannot be decoded as ASCII, the output of the first command is incorrect. With the default UTF-8 encoding, the output displays correctly. This also works the same way on Windows, Linux, and macOS, since the default no longer uses a BOM.
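
To reproduce the effect without the original TestData.csv, the sketch below writes its own small CSV with two non-ASCII values and imports it both ways. It assumes PowerShell 7; the file name and column values are invented for the example.

```powershell
# Assumes PowerShell 7, where Import-Csv defaults to utf8NoBOM
$csvPath = Join-Path ([System.IO.Path]::GetTempPath()) 'encoding-demo.csv'

# Build non-ASCII values from code points so the script's own encoding does not matter
$name = "Ren$([char]0x00E9)e"        # Renée
$city = "Z$([char]0x00FC)rich"       # Zürich
Set-Content -Path $csvPath -Encoding utf8NoBOM -Value @('Name,City', "$name,$city")

# Wrong: bytes outside the 7-bit ASCII range cannot be decoded and are replaced
$wrong = Import-Csv -Path $csvPath -Encoding ascii

# Right: the default encoding matches how the file was written
$right = Import-Csv -Path $csvPath

$wrong.Name   # garbled
$right.Name   # Renée
```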

Changing the Default PowerShell Character Encoding

There are two main variables that influence default encoding behavior. The first, $PSDefaultParameterValues, sets a default value for the -Encoding parameter so that every command that has one uses that value, instead of relying on the user to pass -Encoding on every call.

```powershell
# Use UTF-8 for every command that has an -Encoding parameter
$PSDefaultParameterValues['*:Encoding'] = 'utf8'
```
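
The * in the key applies the default to every command. As a sketch, the same mechanism can target a single cmdlet with the CommandName:ParameterName key form. Below, Out-File is pointed at utf8BOM (assuming PowerShell 6 or later for that value), and the first bytes of the output confirm that the BOM was written even though -Encoding was never passed.

```powershell
# Scope the default to Out-File only, using the 'CommandName:ParameterName' key form
$PSDefaultParameterValues['Out-File:Encoding'] = 'utf8BOM'
try {
    $path = Join-Path ([System.IO.Path]::GetTempPath()) 'default-encoding-demo.txt'
    'hello' | Out-File -FilePath $path     # no -Encoding argument here

    # The first three bytes should be the UTF-8 BOM: EF BB BF
    $bytes = [System.IO.File]::ReadAllBytes($path)
    '{0:X2} {1:X2} {2:X2}' -f $bytes[0], $bytes[1], $bytes[2]
}
finally {
    # Remove the entry so later commands go back to the normal default
    $PSDefaultParameterValues.Remove('Out-File:Encoding')
}
```

Scoping the key to one cmdlet is a narrower alternative to *:Encoding when only a single command needs a non-default encoding.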

The second variable you can modify is $OutputEncoding, but it only affects the encoding PowerShell uses when sending text to external programs. It does not affect the built-in PowerShell cmdlets, redirection operators, or file output commands.
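
To illustrate that limited scope, the sketch below (assuming PowerShell 7 and no *:Encoding entry in $PSDefaultParameterValues) temporarily sets $OutputEncoding to ASCII, writes a file with Set-Content, and checks its size. The file is still UTF-8, which shows that $OutputEncoding did not apply to it.

```powershell
# Assumes PowerShell 7, where Set-Content defaults to utf8NoBOM
$savedOutputEncoding = $OutputEncoding
try {
    # $OutputEncoding governs text piped to native programs, not cmdlet file output
    $OutputEncoding = [System.Text.Encoding]::ASCII

    $path = Join-Path ([System.IO.Path]::GetTempPath()) 'outputencoding-demo.txt'
    "caf$([char]0x00E9)" | Set-Content -Path $path -NoNewline

    # Still 5 bytes: Set-Content wrote UTF-8 (é = 2 bytes) and ignored $OutputEncoding
    $fileLength = (Get-Item -Path $path).Length
    $fileLength
}
finally {
    # Restore the previous value so the rest of the session is unaffected
    $OutputEncoding = $savedOutputEncoding
}
```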

Conclusion

As you can see, working with character encodings in PowerShell is straightforward, but you do need to understand the differences between PowerShell versions and how cross-platform support is handled. Thankfully, newer PowerShell versions continue to simplify encoding support and make it more uniform.