Using System Center 2012 SP1 Orchestrator for Log and Disk Maintenance

Now that you’ve started to understand System Center 2012 SP1 – Orchestrator and its power, in this post I’m going to discuss disk maintenance with Orchestrator, and I’ll demonstrate why Orchestrator is like the IT assistant you never had.

One of the jobs that drives me nuts is the maintenance of logs and data on my hosts. Take Exchange, for example: in my production environment it is more than happy to generate over 1.2 GB of logs a day per server just for users connecting over HTTPS. As you can appreciate, it doesn’t take too many days to start starving the server of storage, and deleting these logs isn’t an option, so they must be archived.

Once a week, this process is executed manually by me or a member of my team. As these are live systems, we need to keep an eye on the servers while the logs, 10 GB on average, are archived away, which is like watching paint dry.

Due to the size of this solution, I am going to break the post into two parts, so let’s get this going.

System Center 2012 SP1 – Orchestrator and Disk Maintenance

With my trusted friend Orchestrator, I have taken the opportunity to delegate this task to its ever-capable hands. To save time, Orchestrator is willing to help by running this process for me on a nightly basis, reducing the impact even further. In addition, the list of servers that require maintenance never appears to shrink, so instead of updating the runbook for each new server, I have chosen to create a central list to work from. Not every file needs to be archived, so I have also added the ability to simply purge files from the system.

Configuration Database

Let’s begin with the central list. For this I have decided to use SQL Server, with a database specifically for my MIS activities.

- In the SQL Management Studio, expand the Object Explorer, and select the Databases Node.

- Right-click and select the option New Database, to launch the New Database dialog.

- In the Database name field, type the name for your MIS Database, e.g. ITServices.

- For the Owner, I am going to set this to the Orchestrator Service account, as it will be the Primary user of this database, at least for now. E.g. [DigiNerve\!or]

- Click OK to create our database.

Storage Maintenance Plan

I plan to use this database for a number of different projects and tasks, including logging my Runbook activity status, and of course the list of disks that I need to maintain. Let’s begin first by creating the table for our disk maintenance work.

- In the SQL Management Studio, expand the Object Explorer, Databases, ITServices, and select the Tables Node.

- Right-click and select the option New Table, to present the table designer.

- Populate the table design with the following information:

| Column Name | Data Type | Allow Nulls |
| --- | --- | --- |
| SourcePath | nvarchar(1024) | No |
| SourceMask | nvarchar(50) | No |
| Action | nvarchar(50) | No |
| Age | numeric(18,0) | Yes |
| TargetPath | nvarchar(1024) | Yes |

- After you have defined your table design, from the toolbar click Save to commit the table.

- You will be prompted for a table name; for example, ITStorageMaintenancePlan.
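For anyone who would rather see the table design as a script, here is a minimal sketch of the same columns. It uses Python’s built-in `sqlite3` module with an in-memory database purely as a stand-in for SQL Server (that substitution is my assumption for illustration; the steps above use the table designer), and the helper name `create_plan_table` is hypothetical.

```python
import sqlite3

# Assumption: an in-memory SQLite database stands in for the ITServices
# database on SQL Server. The column names, types, and nullability mirror
# the table design above.
SCHEMA = """
CREATE TABLE ITStorageMaintenancePlan (
    SourcePath nvarchar(1024) NOT NULL,
    SourceMask nvarchar(50)   NOT NULL,
    Action     nvarchar(50)   NOT NULL,
    Age        numeric(18,0),
    TargetPath nvarchar(1024)
)
"""


def create_plan_table(conn):
    """Create the plan table and return its column names in order."""
    conn.execute(SCHEMA)
    info = conn.execute("PRAGMA table_info(ITStorageMaintenancePlan)")
    return [row[1] for row in info]  # row[1] is the column name


conn = sqlite3.connect(":memory:")
columns = create_plan_table(conn)
print(columns)
```

The three columns marked NOT NULL correspond to the rows with Allow Nulls set to No, so a row without a SourcePath, SourceMask, or Action is rejected by the database itself.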

Finally, let’s seed the table with some initial pruning and archive work which we will have Orchestrator process for us.

- In the SQL Management Studio, expand the Object Explorer, Databases, ITServices and select the Tables Node.

- Right-click and select Refresh, which should add our new ITStorageMaintenancePlan to the view.

- Select our new table dbo.ITStorageMaintenancePlan. Right-click it and select the option Edit Top 200 Rows.

- The table editor will now be presented, ready for us to add some sample data.

- Populate the table with some suitable sample paths; for example, I am using the following for my Exchange Server.

| SourcePath | SourceMask | Action | Age | TargetPath |
| --- | --- | --- | --- | --- |
| \\pdc-ex-svr01\c$\inetpub\logs\LogFiles\W3SVC1\ | *.log | Archive | 10 | \\pdc-fs-smb01\archives |
| \\pdc-ex-svr01\C$\Windows\ | *.evtx | Purge | 5 | |
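Since these rows will drive everything Orchestrator does later, it can help to picture them as plain records with a small sanity check. The sketch below is illustrative only: the `PlanRow` class and `check_row` helper are hypothetical names, and the rule that an Archive row needs a TargetPath is my assumption (the table itself allows TargetPath to be empty, as the Purge row shows).

```python
from dataclasses import dataclass
from typing import Optional

KNOWN_ACTIONS = {"Archive", "Purge"}  # the two actions used in the sample rows


@dataclass
class PlanRow:
    source_path: str
    source_mask: str
    action: str
    age: Optional[int] = None
    target_path: Optional[str] = None


# The two sample rows from the table above.
SAMPLE_ROWS = [
    PlanRow("\\\\pdc-ex-svr01\\c$\\inetpub\\logs\\LogFiles\\W3SVC1\\",
            "*.log", "Archive", 10, "\\\\pdc-fs-smb01\\archives"),
    PlanRow("\\\\pdc-ex-svr01\\C$\\Windows\\", "*.evtx", "Purge", 5),
]


def check_row(row):
    """Return a list of problems found in one plan row (empty if none)."""
    problems = []
    if row.action not in KNOWN_ACTIONS:
        problems.append("unknown action: " + row.action)
    # Assumption: an Archive row is only usable when it names a target.
    if row.action == "Archive" and not row.target_path:
        problems.append("Archive needs a TargetPath")
    return problems


for row in SAMPLE_ROWS:
    print(row.action, row.source_mask, check_row(row))
```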

Orchestrator Runbooks

With our database now ready, we can proceed to use Orchestrator to create a set of four runbooks for our project. Of course we could achieve all that we need in a single runbook, but segregating the runbooks into smaller jobs ensures that we can unit test the solution in bite-size chunks.

Let’s begin by creating a new folder for our solution. In my case, I am calling this 2. Storage Maintenance.

Archive Files Runbook

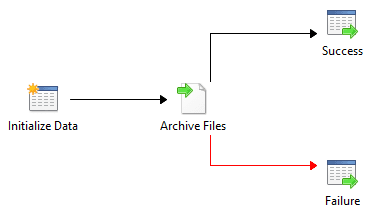

The first runbook I create will be called 2.2 Archive Files. This runbook will accept the details of the archive job, including the Source, Target, and Age of the files for archival.

On the canvas, I place and connect the following activities:

- I add an Initialize Data activity to accept the parameters for the job.

- Next, I use a Move Files activity to do the actual archival, which I rename to Archive Files.

- And finally, I add two Return Data activities:

  - First, for a successful execution, which I rename Success.

  - Second, for a failed execution, which I also rename, this time Failure.

Starting with the Initialize Data activity, I configure the following properties:

- Details page (add four parameters as follows)

  - Name: SourcePath, Type: String

  - Name: SourceMask, Type: String

  - Name: Age, Type: Integer

  - Name: TargetPath, Type: String

Next, on my Archive Files activity I define the following settings:

- Details page

  - Source File: {SourcePath from “Initialize Data”}{SourceMask from “Initialize Data”}

  - Destination Folder: {TargetPath from “Initialize Data”}

- Advanced

  - Filter File Age: Is more than

  - Filter days: {Age from “Initialize Data”}

  - Set modified date: Same as Original
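To make the effect of these settings concrete, here is a rough Python sketch of the same idea: move files that match the mask and are older than the given number of days, leaving their modified date untouched. This is not how the Move File activity is implemented; `archive_files` and `demo` are hypothetical names, and creating the destination folder when it is missing is a choice made for this sketch only.

```python
import os
import shutil
import tempfile
import time
from pathlib import Path

SECONDS_PER_DAY = 86400


def archive_files(source_path, source_mask, age_days, target_path, now=None):
    """Move files matching source_mask that are older than age_days.

    Returns the names of the files that were moved.
    """
    now = time.time() if now is None else now
    cutoff = now - age_days * SECONDS_PER_DAY
    target = Path(target_path)
    target.mkdir(parents=True, exist_ok=True)  # sketch-only convenience
    moved = []
    for item in sorted(Path(source_path).glob(source_mask)):
        # "Is more than" N days: last modified before the cutoff.
        if item.is_file() and item.stat().st_mtime < cutoff:
            # shutil.move keeps the original modified date.
            shutil.move(str(item), str(target / item.name))
            moved.append(item.name)
    return moved


def demo():
    """Archive one 11-day-old log and leave a fresh one behind."""
    with tempfile.TemporaryDirectory() as root:
        source = Path(root) / "logs"
        source.mkdir()
        old, new = source / "old.log", source / "new.log"
        old.write_text("old")
        new.write_text("new")
        stale = time.time() - 11 * SECONDS_PER_DAY
        os.utime(old, (stale, stale))  # back-date the old log
        return archive_files(source, "*.log", 10, Path(root) / "archive")


print(demo())
```

The four arguments line up with the four parameters defined on the Initialize Data activity, which is the same hand-off the runbook performs through its published data.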

Finally, select the Pipeline/Link from the Archive Files activity to the Failure activity, and set its properties.

- Include page

  - Click on the entry Success and, from the Results dialog, select the options Warning and Failed and clear the option “Success.” Then click OK.

- Options page

  - Set the color to Red.
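In other words, the two links out of Archive Files act as a simple branch on the activity’s result. A tiny sketch of that routing follows, assuming the link to Success keeps its default filter of success only; the function name `next_activity` and the lowercase result strings are illustrative, not Orchestrator identifiers.

```python
# Results that the red link to the Failure activity is configured to include.
FAILURE_RESULTS = {"warning", "failed"}


def next_activity(result):
    """Return the name of the Return Data activity that runs next."""
    result = result.lower()
    if result in FAILURE_RESULTS:
        return "Failure"
    if result == "success":
        return "Success"
    raise ValueError("unexpected result: " + result)


for outcome in ("success", "warning", "failed"):
    print(outcome, "->", next_activity(outcome))
```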

At this point you are well on the way: our database is in place, and the first of our runbooks for file processing is also in place and ready for use.

In the next post, we will continue creating the runbooks, and we will even see how easy it is to leverage PowerShell within the runbook.