Nvidia Announces New Hardware and Services for Enterprise AI at Computex

Nvidia announced several new AI products at the Computex annual trade show in Taipei, Taiwan. The biggest announcements made by the chip maker include the Nvidia DGX GH200, a new supercomputer designed for enterprise AI, an accelerated networking platform for hyperscale generative AI, as well as a new modular server architecture optimized for AI workloads.

As Google and Microsoft both filled their recent annual developer conferences with various generative AI announcements, Nvidia is positioning itself as a leading provider of hardware, software, and services to power this new AI revolution.

“We’re now at the tipping point of a new computing era with accelerated computing and AI that’s been embraced by almost every computing and cloud company in the world,” said Nvidia founder and CEO Jensen Huang at the company’s Computex keynote. According to the exec, 40,000 large companies and 15,000 startups are now leveraging Nvidia technologies such as the company’s CUDA parallel computing platform.

Nvidia announces new DGX GH200 AI supercomputer

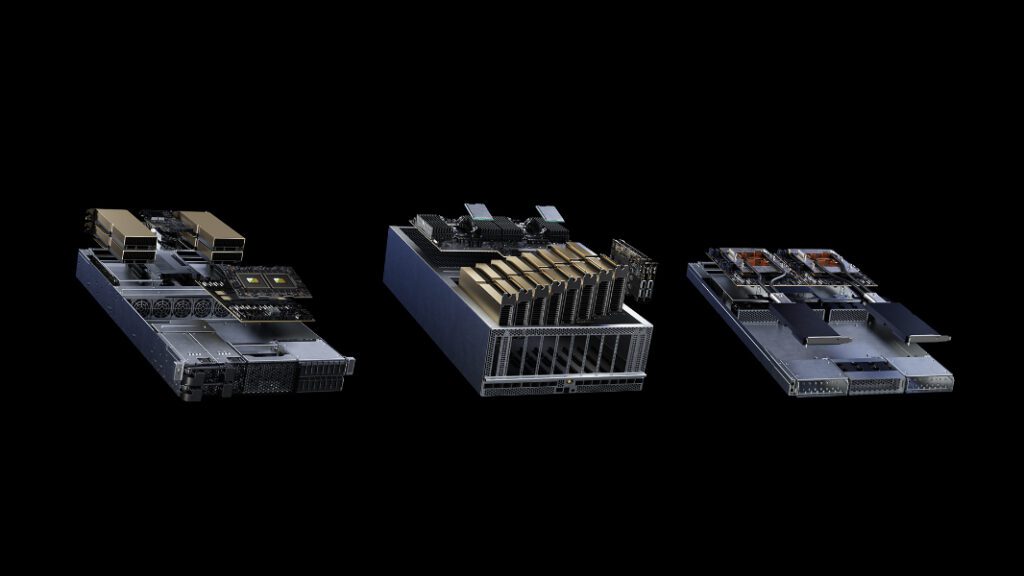

The Nvidia DGX GH200 is a new AI supercomputer designed for generative AI, data processing, and recommender systems. It provides 1 exaflop of computing performance and 144 terabytes of shared memory, approximately 500x more memory than the DGX A100 supercomputer Nvidia announced in 2020.

The DGX GH200 is powered by 256 Nvidia GH200 Grace Hopper chips, which combine an Arm-based Nvidia Grace CPU with an Nvidia H100 Tensor Core GPU. All this computing power will help customers such as Google Cloud, Meta, and Microsoft to improve their generative AI workloads, which require a lot of resources.
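As a rough sanity check on these figures, the system totals can be divided across the 256 chips. The sketch below assumes binary units (1 TB = 1,024 GB) and an even split between chips; neither assumption comes from the announcement, and the helper name `per_chip` is purely illustrative.

```python
# Back-of-the-envelope split of the DGX GH200 totals quoted in the article.
# Assumptions (not from the announcement): binary units and an even split.

CHIPS = 256              # GH200 Grace Hopper chips per system
SHARED_MEMORY_TB = 144   # total shared memory, in terabytes
TOTAL_EXAFLOPS = 1       # total compute performance, in exaflops


def per_chip(total, chips=CHIPS):
    """Return an even share of `total` across `chips`."""
    return total / chips


memory_gb_per_chip = per_chip(SHARED_MEMORY_TB * 1024)   # TB -> GB
petaflops_per_chip = per_chip(TOTAL_EXAFLOPS * 1000)     # exaflops -> petaflops

print(f"{memory_gb_per_chip:.0f} GB of memory per chip")
print(f"{petaflops_per_chip:.2f} petaflops per chip")
```

Under those assumptions, each chip accounts for a few hundred gigabytes of memory and a few petaflops of the headline total.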

“Training large AI models is traditionally a resource- and time-intensive task,” said Girish Bablani, CVP of Azure Infrastructure at Microsoft. “The potential for DGX GH200 to work with terabyte-sized datasets would allow developers to conduct advanced research at a larger scale and accelerated speeds.”

Nvidia details new MGX server specification

Nvidia MGX is the name of a new modular server architecture designed to accelerate AI, high-performance computing (HPC), and Nvidia Omniverse workloads. It will allow system makers to quickly create over 100 server designs with a large choice of GPUs, CPUs, data processing units (DPUs), and network adapters.

“With MGX, manufacturers start with a basic system architecture optimized for accelerated computing for their server chassis, and then select their GPU, DPU and CPU. Design variations can address unique workloads, such as HPC, data science, large language models, edge computing, graphics and video, enterprise AI, and design and simulation,” the company explained.

Nvidia said that QCT, ASRock Rack, Asus, Gigabyte, Pegatron, and Super Micro will be among the first system makers to launch new MGX server designs, which will be supported by Nvidia’s full software stack.

Nvidia launches Spectrum-X networking platform

At Computex, Nvidia also announced Spectrum-X, a new accelerated networking platform designed to improve AI workflows running on Ethernet-based AI clouds. Spectrum-X leverages the company’s Spectrum-4 Ethernet switches, which are built specifically for AI networks, as well as Nvidia’s BlueField-3 DPUs.

With Spectrum-X, Nvidia promises 1.7x better AI performance and power efficiency compared to traditional Ethernet fabrics, all while maintaining interoperability with Ethernet-based stacks. “NVIDIA Spectrum-X enables unprecedented scale of 256 200Gb/s ports connected by a single switch, or 16,000 ports in a two-tier leaf-spine topology to support the growth and expansion of AI clouds while maintaining high levels of performance and minimizing network latency,” the company said in the announcement.
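To put the quoted port counts in perspective, the raw port capacity can be summed directly. This is a minimal sketch that simply multiplies port count by port speed; it assumes every port runs at 200Gb/s, treats the 16,000-port figure as edge ports, and says nothing about bisection bandwidth or oversubscription. The function name `aggregate_tbps` is illustrative only.

```python
# Sum of raw port speeds for the configurations quoted by Nvidia.
# Assumption: every port runs at the quoted 200 Gb/s line rate.

PORTS_PER_SWITCH = 256    # ports on a single switch
TWO_TIER_PORTS = 16_000   # ports in a two-tier leaf-spine topology
PORT_SPEED_GBPS = 200     # per-port speed, in gigabits per second


def aggregate_tbps(ports, gbps_per_port=PORT_SPEED_GBPS):
    """Return the summed port capacity in terabits per second."""
    return ports * gbps_per_port / 1000


single_switch_tbps = aggregate_tbps(PORTS_PER_SWITCH)
two_tier_tbps = aggregate_tbps(TWO_TIER_PORTS)

print(f"Single switch: {single_switch_tbps:.1f} Tb/s")
print(f"Two-tier fabric: {two_tier_tbps:.0f} Tb/s")
```

The two-tier number is only the sum of edge port speeds, so it is an upper bound on what hosts can inject into the fabric rather than a measure of delivered throughput.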

At its Build conference last week, Microsoft also detailed its partnership with Nvidia to improve AI workloads on Windows 11 PCs powered by Nvidia’s latest RTX GPUs. Nvidia has already released new drivers that provide big performance improvements for workloads relying on text-to-image models.

Soon, Nvidia will also launch new Max-Q low-power inferencing for AI-only workloads on RTX GPUs. This will allow mobile workstations with Nvidia’s latest GPUs to optimize Tensor Core performance for these workloads while keeping GPU power consumption as low as possible.