Windows Server 2016 Feature: Switch Embedded Teaming

Switch Embedded Teaming (SET) is a new way of deploying converged networking in Windows Server 2016 (WS2016), making it easier to take advantage of fewer and bigger network connections in Hyper-V hosts. In this article, I’ll explain what SET is and discuss the advantages it has over legacy networking. I’ll also review what we were able to do with converged networking in Windows Server 2012 and Windows Server 2012 R2.

A Reminder on Why We Use Converged Networking

I wrote quite a few articles on converged networking soon after I joined the ranks at the Petri IT Knowledgebase. I know that few people have used these Windows Server features, because almost every time I’m in the field, I encounter hosts with gaggles of 1 GbE NICs, and those who have 10 GbE NICs are often using costly blade chassis switching solutions that offer a hardware-based alternative. So here’s a quick reminder of Microsoft’s software-defined converged networking technologies that have been with us since Windows Server 2012.

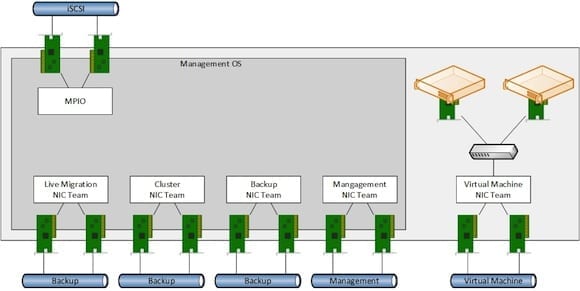

In the days of Windows Server 2008 and Windows Server 2008 R2 Hyper-V, we had large collections of 1 GbE networks, each made up of 1 or 2 teamed physical NICs. Each network was assigned an individual role, such as management, cluster communications, Live Migration, iSCSI, and so on. Such a design is depicted below.

Several problems are associated with this kind of design:

- Complicated: Any time I had a problem with a new host deployment, it was because of NIC configuration issues caused by the sheer number of connections.

- Expensive: People will rail against the cost of 10 GbE or faster switches, but the reality is that if each host requires 12 switch ports, then I’m going to have pretty large capital and operational expenses for top-of-rack switching. If I could do more with fewer and faster switch ports, then I could offset the cost of 10 GbE or faster switching.

- Limiting: Even small-to-medium enterprises (SMEs) are deploying hosts with 128 or 256 GB RAM. The normal host I deploy these days for an SME has at least 128 GB RAM. How long does it take to live migrate 120 GB of virtual machine RAM from one host to another over 1 GbE networking? That has an impact on performance and the time to wait before doing preventative maintenance. If you’re relying on virtualization to provide you with flexibility, then why would you limit your flexibility?
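To put rough numbers on that Live Migration question, here is a back-of-the-envelope sketch. It assumes the link runs at full line rate with no protocol overhead, no compression, and no memory being re-dirtied during the copy, so real migrations will take longer. The function name is purely illustrative.

```python
def transfer_seconds(gigabytes: float, link_gbps: float) -> float:
    """Best-case time to copy `gigabytes` of RAM over a `link_gbps` link.

    Assumes decimal units (1 GB = 8 gigabits) and a perfectly utilized link.
    """
    return gigabytes * 8 / link_gbps


# Compare the 120 GB example from the text across common link speeds.
for gbps in (1, 10, 40):
    seconds = transfer_seconds(120, gbps)
    print(f"{gbps:>2} GbE: {seconds:6.0f} s ({seconds / 60:.1f} min)")
```

Even in this best case, draining one host over 1 GbE takes 16 minutes, versus roughly a minute and a half over 10 GbE.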

All of these problems help illustrate why we use converged networking. With this kind of design, we can deploy fewer physical NICs, connect to fewer switch ports, and get access to faster networking. Using software, we carve up the aggregated bandwidth of those physical NICs into a small number of software-defined networks. The roles that we had before, such as management, backup, storage, cluster communications, Live Migration, and so on, can be spread out across the networks in a coordinated manner. Service levels can be managed using quality-of-service (QoS) rules on the host, in a virtual machine’s guest OS, and on the physical network. In effect, what Microsoft gave us in Windows Server 2012 was the ability to deploy very flexible networking in every Windows Server, something that would often cost enterprise customers an extra $35,000 for every blade chassis that they deployed.

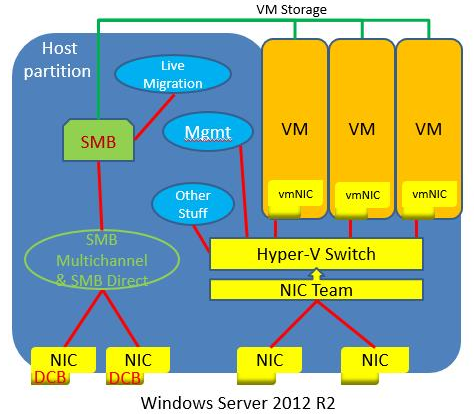

Here is a diagram of a Windows Server 2012 R2 host design with four NICs that takes advantage of converged networking:

- NIC1 and NIC2, both 10 GbE or faster, are not teamed. They will be used for SMB 3.0 and cluster communications traffic, which includes redirected IO.

- NIC1 and NIC2 are rNICs, meaning that they offer RDMA or SMB Direct. Data Center Bridging (DCB) is enabled on the rNICs to offer better service on the switches.

- NIC1 and NIC2 are not teamed — RDMA is incompatible with teaming and further convergence in Windows Server 2012 R2.

- NIC3 and NIC4 are teamed. A virtual switch with QoS configured is connected to the team interface.

- Virtual machines connect to the virtual switch.

- Virtual NICs in the management OS connect to the virtual switch. This is how management and other non-SMB 3.0 communications will reach the physical network.

The above design simplified the host deployment quite a bit. And with Consistent Device Naming (CDN), it’s really easy to automate the above configuration using PowerShell. You can also deploy it at scale using System Center Virtual Machine Manager (SCVMM) during bare metal host deployment.

With that in mind, there’s room for improvement. If you are implementing SMB 3.0 storage with SMB Direct (RDMA), then you have no choice but to use at least four NICs in the host as above. Wouldn’t it be better if we could deploy a single pair of fault-tolerant NICs that lets all network roles be converged? In other words, we want to converge RDMA traffic through the virtual switch, but this isn’t possible in Windows Server 2012 R2.

Secondly, why do we need a NIC team? Physical switches have the concept of multiple uplink ports that work as an aggregated unit. Why can’t the virtual switch do that and further simplify the design?

Converged RDMA and Switch Embedded Teaming

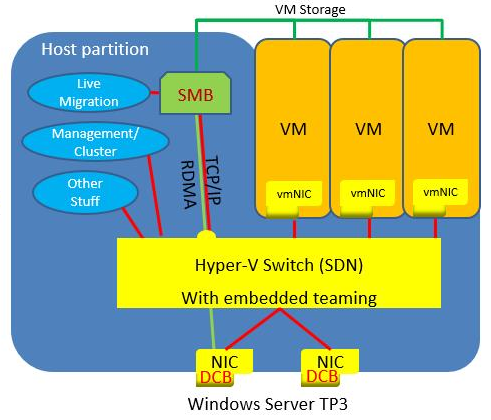

With the release of Windows Server 2016 Technical Preview 3, Microsoft revealed that Windows Server 2016 will support a new, simpler, and more efficient way to do converged networking that’s an evolution of what we could do in Windows Server 2012 R2.

The first improvement is that we will not require a NIC team to converge networks in a Hyper-V host. Instead, as you can see below, the physical NICs in the host will be connected to the virtual switch; this is called Switch Embedded Teaming (SET). The concepts of Windows Server teams are still there:

- Teaming modes: SET only supports Switch Independent (no static or LACP support)

- Load balancing methods: Hyper-V Port or Dynamic (the default is recommended unless you use Packet Direct, which requires Hyper-V Port)

With a SET configuration, networking admins are spared the NIC teaming step; the physical NICs in the host are effectively uplinks for the virtual switch.

A SET configuration also provides a major improvement: RDMA can be converged. This means that we can:

- Deploy two RDMA enabled NICs (rNICs) on the host and enable DCB on them, which is recommended

- Converge the two rNICs using a SET switch

- Connect virtual machines to the SET switch

- Create virtual NICs in the management OS that are connected to the SET switch and assign QoS rules to them

- Enable RDMA on some of the management OS virtual NICs

Some of those virtual NICs might carry SMB 3.0 traffic, which can now leverage SMB Direct. Storage, Live Migration, and redirected I/O communications then benefit from lower latency and less impact on the host CPU and virtual machine services.

Notes on Switch Embedded Teaming

Because we are still in the technical preview stage with WS2016, things are probably going to change. Until then, here are some notes on this feature.

Compatible Networking Features

The following are compatible with SET:

- Datacenter bridging (DCB)

- Hyper-V Network Virtualization. NVGRE and VXLAN are both supported in Windows Server 2016 Technical Preview.

- Receive-side Checksum offloads (IPv4, IPv6, TCP). These are supported if any of the SET team members support them.

- Remote Direct Memory Access (RDMA)

- SDN Quality of Service (QoS)

- Transmit-side Checksum offloads (IPv4, IPv6, TCP). These are supported if all of the SET team members support them.

- Virtual Machine Queues (VMQ)

- Virtual Receive Side Scaling (RSS)
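The two checksum offload entries in the list above follow different rules: receive-side needs any member to support it, while transmit-side needs every member. A minimal sketch of that rule follows; the `Nic` class and `team_offloads` function are hypothetical names used only for illustration, not part of any Windows API.

```python
from dataclasses import dataclass


@dataclass
class Nic:
    name: str
    rx_checksum: bool  # NIC can offload receive-side checksums
    tx_checksum: bool  # NIC can offload transmit-side checksums


def team_offloads(members: list[Nic]) -> dict[str, bool]:
    """Apply the any/all rule from the compatibility list to a SET team."""
    return {
        # Receive side: supported if ANY member supports it.
        "rx_checksum": any(n.rx_checksum for n in members),
        # Transmit side: supported only if ALL members support it.
        "tx_checksum": all(n.tx_checksum for n in members),
    }


# Hypothetical team where only one member supports the offloads.
team = [Nic("NIC1", True, True), Nic("NIC2", False, False)]
print(team_offloads(team))
```

With this hypothetical mixed team, the receive-side offload is available but the transmit-side offload is not.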

Incompatible Networking Features

The following are incompatible with SET:

- 802.1X authentication

- IPsec Task Offload (IPsecTO)

- QoS in host or native OSs

- Receive side coalescing (RSC)

- Receive side scaling (RSS)

- Single root I/O virtualization (SR-IOV)

- TCP Chimney Offload

- Virtual Machine QoS (VM-QoS)

Note that host-side QoS is listed as incompatible, and this makes DCB even more important. I suspect that QoS will be supported at a later date.

SET Switch NICs

You can use up to eight NICs as uplinks in a SET switch. Unlike in teaming, the NICs must be identical. There is no concept of failover or standby NICs; all NICs are active. If one member fails, then traffic will be redirected to the remaining members.
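These membership rules are easy to check before building a switch. The sketch below is a hypothetical pre-flight check, not a Microsoft tool: it treats "identical" as "same model string", which is a simplifying assumption, and the model names in the example are made up.

```python
MAX_SET_MEMBERS = 8  # uplink limit stated in the article


def validate_set_members(models: list[str]) -> list[str]:
    """Return a list of problems with a proposed set of uplink NICs.

    `models` holds one model string per physical NIC. Comparing model
    strings is a simplification of "identical" (an assumption).
    """
    problems = []
    if not models:
        problems.append("a SET switch needs at least one uplink NIC")
    if len(models) > MAX_SET_MEMBERS:
        problems.append(
            f"{len(models)} NICs exceeds the limit of {MAX_SET_MEMBERS}"
        )
    if len(set(models)) > 1:
        problems.append(
            "NICs are not identical: " + ", ".join(sorted(set(models)))
        )
    return problems


# Hypothetical model names, for illustration only.
print(validate_set_members(["VendorA 10G", "VendorA 10G"]))
print(validate_set_members(["VendorA 10G", "VendorB 10G"]))
```

An empty list means the proposed members pass both checks.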

Virtual Machine Queues (VMQs)

You should enable VMQ with SET. Note that VMQ should not be used with 1 GbE networking, so you should use SET with 10 GbE or faster networking. You should configure *RssBaseProcNumber and *MaxRssProcessors to assign cores, not hyperthreads, for processing interrupts for each SET member NIC.
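As a rough illustration of assigning cores rather than hyperthreads, the following sketch splits a host's physical cores into non-overlapping ranges, one per SET member NIC. It rests on assumptions that are not from this article: that with hyperthreading enabled, core N maps to logical processor 2 × N, and that core 0 is left alone for the host's default work. The function name is hypothetical, and the dictionary keys mirror the two settings named above without the leading asterisk.

```python
def plan_vmq(
    logical_procs: int, hyperthreaded: bool, nic_names: list[str]
) -> dict[str, dict[str, int]]:
    """Split physical cores evenly across SET member NICs.

    Assumptions (not from the article): with hyperthreading, core N is
    logical processor 2*N, and core 0 is reserved for the host.
    """
    if not nic_names:
        raise ValueError("at least one NIC is required")
    step = 2 if hyperthreaded else 1   # logical processors per core
    cores = logical_procs // step
    usable = cores - 1                 # skip core 0
    per_nic = usable // len(nic_names)
    if per_nic < 1:
        raise ValueError("not enough cores for the requested NICs")
    plan = {}
    for i, name in enumerate(nic_names):
        first_core = 1 + i * per_nic   # first core owned by this NIC
        plan[name] = {
            "RssBaseProcNumber": first_core * step,
            "MaxRssProcessors": per_nic,
        }
    return plan


# Example: 16 logical processors (8 cores with hyperthreading), two NICs.
print(plan_vmq(16, True, ["NIC1", "NIC2"]))
```

In this example, NIC1 starts at logical processor 2 and NIC2 at logical processor 8, each with three cores, so the two ranges never overlap.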

Live Migration

At this point, Live Migration is not supported in SET. In my opinion, this will change in a later preview release. In fact, Microsoft will need to change this in a later preview release to truly make SET a useful design option.

Switch Embedded Teaming as a Design for Hyper-V Host Deployments

Switch Embedded Teaming has the potential to be a very useful design for Hyper-V host deployment in the future. Right now, SET is theory, but once Live Migration and QoS support are added, SET will become my norm for deploying WS2016 hosts in my lab.