How Would Microsoft Design a Scale-Out File Server?

Other than a bunch of blog posts scattered around the Internet, there is no single official document from Microsoft on how to design a Scale-Out File Server (SOFS) that provides scalable and continuously available storage over SMB 3.0 networking with transparent failover. In part one of this series, I will look at various pieces of information and summarize what I think Microsoft would suggest. Watch out for part two in the coming weeks.

There’s More to SOFS Than Storage Spaces

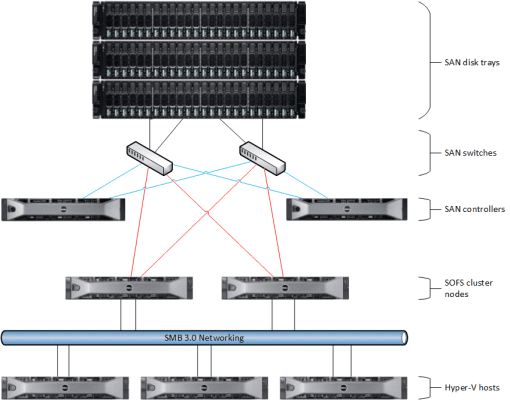

Consider what a SOFS actually is. The architecture combines several Windows components to provide a new way to present shared storage to servers, such as those running Hyper-V virtualization:

- Cluster shared storage: A common misconception is that you must use Storage Spaces on one or more JBOD trays. This is the most affordable storage for a new installation, but as Microsoft says, the cheapest storage you can get is the storage you already own. Any form of shared storage that is supported by Windows Server failover clustering may be used in a SOFS. This includes PCI RAID and SANs that use SAS, Fibre Channel, Fibre Channel over Ethernet (FCoE), and iSCSI networking.

- Clustered file servers: At least two servers, and up to eight (the support limit for SOFS), are directly connected to the shared storage. These servers run Windows failover clustering and, in a JBOD-based design, virtualize the storage using Storage Spaces.

- SMB 3.0 Networking: The clustered file servers share the physical storage using shared folders via the SMB 3.0 protocol to servers, such as Hyper-V hosts.

So you do not need to use Storage Spaces. You can quite happily take an existing SAN, connect between two and four file servers to the SAN, provision a few LUNs (at least one per file server), cluster those file servers, and configure the Scale-Out File Server for application data role in the cluster. That would allow you to create shared folders and store Hyper-V virtual machines on that SAN using SMB 3.0 networking.

How would Microsoft build a SOFS? There are a few clues to be found. A whitepaper called Windows Server 2012 R2 Storage depicts how the Windows build team switched from SANs to a SOFS system based on JBODs with Storage Spaces. With 4 TB drives, each stack of JBODs (four JBODs with 60 x 3.5 inch disk slots each) could offer up to 0.96 petabytes of raw, unconfigured capacity at a fraction of the cost of a SAN.
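The 0.96 petabyte figure is simple arithmetic, and it is worth redoing for your own hardware. Here is a minimal Python sketch of that calculation. The function name is my own, and it assumes every slot holds a 4 TB drive and that 1 PB = 1000 TB (decimal units); it says nothing about usable capacity after mirroring.

```python
def raw_capacity_tb(jbods: int, slots_per_jbod: int, drive_tb: float) -> float:
    """Raw capacity of a JBOD stack in TB, before any resiliency overhead."""
    return jbods * slots_per_jbod * drive_tb


# The stack described in the whitepaper: 4 JBODs x 60 slots x 4 TB drives.
stack_tb = raw_capacity_tb(jbods=4, slots_per_jbod=60, drive_tb=4)
print(f"{stack_tb:.0f} TB raw = {stack_tb / 1000:.2f} PB")  # 960 TB raw = 0.96 PB
```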

Another clue can be found in Microsoft’s announcement that it is participating in the Open Compute Project, where you will find a reference to “JBOD expansion.” That seems to imply Storage Spaces, although Microsoft doesn’t actually talk about how it provides physical storage in Windows Azure. Still, it is clear that although a SOFS doesn’t require Storage Spaces, every time Microsoft talks about a SOFS, that’s what they are implying and that’s what people are perceiving.

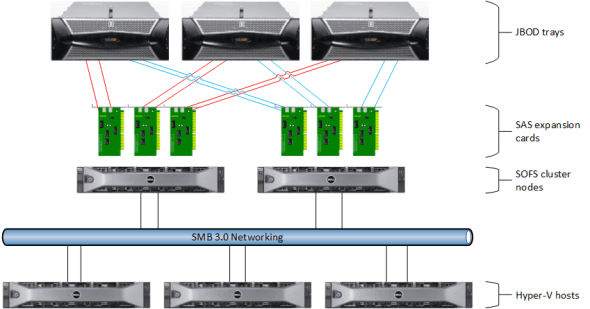

JBOD Architecture

If you are deploying Storage Spaces and expect the deployment to grow over time, then you really should consider using more than one JBOD in the original installation. There are a few considerations:

First, if you have three JBODs, then any virtual disks (spaces) that you create with 2-way mirroring will survive the loss of a single tray. If you have implemented 3-way mirroring, the number of trays required goes up to four.

Second, if you implement 2- or 3-way mirroring with just a single tray, the fault tolerant slabs (interleaves or blocks) of data will not magically move if you add another tray of disks. You need to start out with the fault tolerant architecture if you will require it in the future. Quite honestly, the trays are not that expensive, especially if you are purchasing from an authorized distributor/reseller. Buy three or four trays and spread the disks that you require evenly across the trays. Remember that you will need a minimum number of SSDs per tray if you are implementing tiered virtual disks.

| Disk enclosure slot count | Simple space | 2-way mirror space | 3-way mirror space |
| --- | --- | --- | --- |
| 12 bay | 2 | 4 | 6 |
| 24 bay | 2 | 4 | 6 |
| 60 bay | 4 | 8 | 12 |
| 70 bay | 4 | 8 | 12 |
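If you are sizing several trays at once, the table is easy to turn into a lookup. The following Python sketch does that. It assumes I am reading the table correctly as the minimum number of SSDs per enclosure for tiered virtual disks; the dictionary and function names are purely illustrative.

```python
# Minimum SSDs per tray, keyed by enclosure bay count and resiliency type.
# Values are copied from the table above.
MIN_SSDS_PER_TRAY = {
    12: {"simple": 2, "2-way mirror": 4, "3-way mirror": 6},
    24: {"simple": 2, "2-way mirror": 4, "3-way mirror": 6},
    60: {"simple": 4, "2-way mirror": 8, "3-way mirror": 12},
    70: {"simple": 4, "2-way mirror": 8, "3-way mirror": 12},
}


def min_ssds(bays: int, resiliency: str, trays: int = 1) -> int:
    """Minimum SSD count for a number of identical trays."""
    return MIN_SSDS_PER_TRAY[bays][resiliency] * trays


# Four 60-bay trays hosting 2-way mirrored, tiered virtual disks.
print(min_ssds(60, "2-way mirror", trays=4))  # 32
```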

Third, you should only use identical trays that are certified for the version of Storage Spaces that you are implementing. Notable names include DataOn Storage, RAID Incorporated, Dell, and Intel. Look for that Windows Server 2012 R2 logo if you are implementing Windows Server 2012 R2 – Windows Server 2012 is a different version!

So how do you cable the configuration? The best solution, and one I have seen a number of times from Microsoft, is to directly connect each SOFS clustered file server to each JBOD tray. Each server will have two SAS cables to each tray, and each cable carries 4 x 6 Gbps lanes. That means that you will have 2 x 4 x 6 = 48 Gbps of storage throughput between each clustered file server and each tray!
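The same arithmetic can be written as a small Python helper, which is handy if your trays or HBAs use a different cable count or lane speed. This is only a sketch: the function and parameter names are mine, and the result is the raw line rate between one server and one tray, not the usable payload throughput.

```python
def sas_throughput_gbps(cables_per_tray: int = 2,
                        lanes_per_cable: int = 4,
                        lane_gbps: float = 6) -> float:
    """Raw SAS bandwidth between one file server and one JBOD tray."""
    return cables_per_tray * lanes_per_cable * lane_gbps


print(sas_throughput_gbps())  # 48
```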

Note that the typical 2U JBOD tray that is certified for Storage Spaces accepts connections from two servers. In a Scale-Out File Server, that means that you can have up to two SOFS cluster nodes, as in the above example. The larger JBODs can allow up to four servers to connect to each tray (check with the manufacturer). These are the kinds of trays that Microsoft shows in its examples. In those architectures there are:

- 4 x JBOD trays

- 4 x SOFS cluster nodes

- Each cluster node connects directly to each JBOD as in the above 2-node example

That is the sort of architecture that Microsoft envisioned when they were designing a SAN alternative for large enterprises and hosting companies (public clouds).
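To get a feel for how much cabling that full mesh implies, here is a rough Python sketch that enumerates every server-to-tray cable. It assumes two SAS cables per server per tray, as in the 2-node example; the node and tray names are placeholders of my own.

```python
from itertools import product


def cabling_plan(nodes: int, trays: int, cables_per_link: int = 2):
    """List every (node, tray, cable) combination in a full-mesh SAS design."""
    return [
        (f"node{n}", f"jbod{t}", c)
        for n, t, c in product(range(1, nodes + 1),
                               range(1, trays + 1),
                               range(1, cables_per_link + 1))
    ]


plan = cabling_plan(nodes=4, trays=4)
print(len(plan))       # 32 cables in total
print(len(plan) // 4)  # 8 SAS ports needed on each node (and on each tray)
```

Eight SAS ports per node and per tray is worth checking against the HBA and enclosure specifications before you order anything.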

Why four SOFS servers? It’s not primarily for throughput. It’s believed that two nodes should be more than enough for speed. In reality, having four SOFS nodes gives you:

- Fault tolerance: You still have fault tolerance when performing maintenance operations such as Cluster Aware Updating (CAU) and you can still offer peak performance even if you lose a node.

- Future migration: Despite our pleas, Windows Server Failover Clustering does not offer in-place upgrades. I can envision a day when we will have to remove nodes from a WS2012 R2 SOFS cluster to build a Windows Server vNext (WS2015?) SOFS cluster, followed by a reconnection of the JBODs, Storage Spaces, and CSVs.

Be aware that Storage Spaces does have limits on physical disks in a pool (240, or 4 * 60), total capacity (480 TB in a pool), and pools in a cluster (four).
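These limits interact, so it helps to check a design against all of them together. The Python sketch below does that using only the three numbers quoted above. It assumes the 480 TB limit applies to raw capacity and that disks are spread evenly across pools; both are my assumptions rather than anything Microsoft has stated, and the names are illustrative.

```python
import math

MAX_DISKS_PER_POOL = 240
MAX_TB_PER_POOL = 480
MAX_POOLS_PER_CLUSTER = 4


def pools_needed(disks: int, drive_tb: float) -> int:
    """Fewest pools that respect both the disk-count and capacity limits."""
    by_disk_count = math.ceil(disks / MAX_DISKS_PER_POOL)
    by_capacity = math.ceil(disks * drive_tb / MAX_TB_PER_POOL)
    return max(by_disk_count, by_capacity, 1)


def fits_in_one_cluster(disks: int, drive_tb: float) -> bool:
    """True if the design stays within the pools-per-cluster limit."""
    return pools_needed(disks, drive_tb) <= MAX_POOLS_PER_CLUSTER


# A full stack of 4 x 60-bay JBODs with 4 TB drives.
print(pools_needed(240, 4))         # 2
print(fits_in_one_cluster(240, 4))  # True
```

Under these assumptions, a fully populated stack of 4 TB drives stays within the disk-count limit but has to be split into at least two pools to stay under the capacity limit.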

Here is a question to ask yourself: do you want to build a single SOFS with multiple storage bricks? Are there scenarios where this works? Sure. The Microsoft build team might be doing this because it gives them a single blob of storage capacity, huge chunks of which they reuse on a daily basis.

I don’t think I would use this design in a cloud. There’s just the risk of something going wrong in a single cluster, and that bringing down two or four huge storage footprints. I would want to use the naval approach and seal off points of failure instead. I think that’s what Microsoft would do in a public cloud too; go back to their Open Compute Project submission and you’ll see that Microsoft is proposing a pod approach. Maybe your cloud won’t use a pod per se, but maybe your “pod” could be a rack. The rack could contain 4 x JBODs, 4 x SOFS nodes, 2 x access switches, and a bunch of Hyper-V host capacity. If a SOFS cluster fails completely, you lose “just” 42U of customer business, which is better than losing twice or four times that amount! The damage is contained within the “pod” rather than spread across multiple physical storage footprints.