Implement and Enforce QoS with Data Center Bridging Hardware (DCB)

In this post we will show you how to implement Quality of Service (QoS) bandwidth management rules that are enforced by Data Center Bridging (DCB)-capable hardware.

What Is DCB QoS?

QoS rules that are managed by DCB can be used when you are dealing with networking that does not pass through a Hyper-V virtual switch in the configured operating system. This form of bandwidth management is enforced by DCB-capable hardware; your source/destination NICs and the intermediate switches must support DCB. The benefits of DCB are:

- Offloaded bandwidth management: The physical processor is not used to enforce QoS so performance is better than possible with enforcement by the OS Packet Scheduler.

- Support for Remote Direct Memory Access (RDMA): DCB works at the hardware layer, so it can enforce QoS on protocols that are invisible to the operating system's networking stack, such as RDMA. This means you can reliably converge SMB Direct traffic with other protocols.

You can use DCB to provide QoS for non-virtual switch traffic in:

- Physical NICs in a Hyper-V host that are used only for host networking

- Physical servers that have nothing to do with Hyper-V

Example of QoS Enforced by DCB

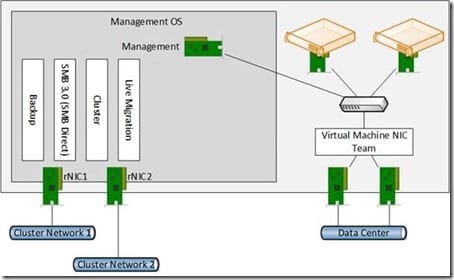

In this design we will be converging several networks on WS2012, as shown below.

- Backup: The backup product communicates with agents on the host over TCP port 10000.

- SMB 3.0: SMB 3.0 will be used as the storage protocol to store virtual machines on a Scale-Out File Server (SOFS). The NICs being used support RDMA so the server will actually be using SMB Direct.

- Cluster: This is the cluster communications network that is responsible for the heartbeat.

- Live Migration: The host is WS2012 Hyper-V so traditional TCP/IP Live Migration is being used.

A possible WS2012 converged networks design.

Two physical RDMA-capable NICs are being used for the host networking. Try not to overthink this! Overthinking is a common mistake for people who are new to this kind of networking. The design is quite simple:

- Two NICs, each on a different VLAN/subnet.

- Each NIC has a single IP address.

- The NICs are not teamed.

This configuration is a requirement for SMB Multichannel communications to a cluster such as a SOFS. Critical services such as cluster heartbeat, live migration, and SMB Multichannel will fail over between the non-teamed NICs automatically if you configure them correctly. For example, enable both NICs for live migration in your host/cluster settings. Also keep in mind that RDMA and NIC teaming are incompatible.
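Before configuring QoS, it can help to confirm that the NICs match this design. The following is a minimal sketch, assuming the two adapters are named rNIC1 and rNIC2 (the example names used later in this post); substitute your own adapter names.

```powershell
# Assumed adapter names; substitute the names used on your host.
$nics = 'rNIC1', 'rNIC2'

# RDMA should be reported as enabled on both adapters.
Get-NetAdapterRdma -Name $nics

# Each adapter should show a single IPv4 address on its own subnet.
Get-NetIPAddress -InterfaceAlias $nics -AddressFamily IPv4

# No output is expected here, because the NICs must not be members of a team.
Get-NetLbfoTeamMember | Where-Object { $nics -contains $_.Name }
```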

Just as with QoS that is enforced by the virtual switch, your total assigned weight should not exceed 100. This makes the algorithm work more effectively and makes your design easier to understand. Weights can then be viewed as percentages. We will assign weights as follows on this host with 10 GbE networking:

- Backup: 20

- SMB Direct: 40

- Cluster: 10

- Live Migration: 30
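To see what these weights mean in practice, the arithmetic can be sketched in a few lines of PowerShell. This is only an illustration: the `$weights` and `$linkGbps` variables are hypothetical names introduced here, and the resulting figures are the minimum share each protocol should receive when the link is congested, not a cap.

```powershell
# Minimum-bandwidth weights from the example design above.
$weights = [ordered]@{
    'Backup'         = 20
    'SMB Direct'     = 40
    'Cluster'        = 10
    'Live Migration' = 30
}

# Assumed link speed per NIC in Gbps (the example host uses 10 GbE).
$linkGbps = 10

# The total assigned weight should not exceed 100.
$total = ($weights.Values | Measure-Object -Sum).Sum
if ($total -gt 100) {
    throw "Total assigned weight is $total; it should not exceed 100."
}

# Translate each weight into a minimum share of the link.
foreach ($name in $weights.Keys) {
    $share = $linkGbps * $weights[$name] / 100
    '{0,-15} weight {1,3} = at least {2} Gbps under contention' -f $name, $weights[$name], $share
}
```

For example, the Backup weight of 20 works out to a minimum of 2 Gbps on a 10 GbE NIC.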

Implementing QoS Enforced By DCB

There are two phases for implementing DCB bandwidth management:

- Configure the switches: Consult with your switch’s manufacturer or networking engineer.

- Create the QoS rules using PowerShell: This step was pretty easy for OS Packet Scheduler bandwidth management. The creation of QoS rules for DCB is not as simple and this is what we will focus on. (Editor’s note: Learn more about Deploying QoS Packet Scheduler in Windows Server 2012.)

In PowerShell, there are four core steps:

- Create QoS rules using New-NetQosPolicy.

- Enable DCB via Server Manager or Install-WindowsFeature.

- Create traffic classes for DCB using New-NetQosTrafficClass.

- Enable DCB on the NICs using Set-NetQosDcbxSetting and Enable-NetAdapterQos.

If you are using RDMA over Converged Ethernet (RoCE) NICs, then you must also enable Priority Flow Control (PFC) using Enable-NetQosFlowControl to prioritize the flow of SMB Direct traffic. Think of this as the bus or car-pooling lane on a busy highway, where SMB Direct gets an easy ride past the other traffic on the NICs' circuitry.

1. Create the QoS Rules

The following will create the four required QoS rules for our example. Note that the IEEE P802.1p priority flag is being used. These priorities will be used to map the QoS rules (or policies) with the DCB traffic classes.

    # Create a rule for the backup protocol sent to TCP port 10000
    New-NetQosPolicy "Backup" -IPDstPort 10000 -Priority 7

    # Create a rule for RDMA/SMB Direct protocol sent to port 445.
    # Note the use of NetDirectPort for RDMA
    New-NetQosPolicy "SMB Direct" -NetDirectPort 445 -Priority 3

    # Create a rule for the cluster heartbeat protocol sent to TCP port 3343
    New-NetQosPolicy "Cluster" -IPDstPort 3343 -Priority 6

    # Create a rule for Live Migration using the built-in filter
    New-NetQosPolicy "Live Migration" -LiveMigration -Priority 5
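Once the rules exist, you may want to confirm that each one was created with the intended priority. A minimal sketch using Get-NetQosPolicy:

```powershell
# List all QoS policies defined on this host, including the four created above.
Get-NetQosPolicy

# Inspect a single policy by name.
Get-NetQosPolicy -Name 'SMB Direct'
```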

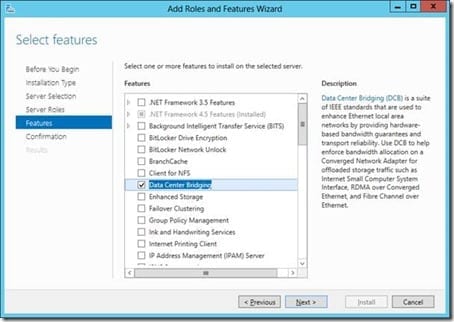

2. Enable DCB via Server Manager

You can enable the DCB feature in Server Manager:

Enabling the Data Center Bridging feature in Server Manager.

Alternatively, if you are writing a script to configure your networking, then you might use PowerShell to install the feature:

    Install-WindowsFeature Data-Center-Bridging
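If the script may be run more than once, one possible approach is to check whether the feature is already installed first. This is a sketch rather than a required step; the `$feature` variable is a name introduced here for illustration.

```powershell
# Install the DCB feature only if it is not already present.
$feature = Get-WindowsFeature -Name Data-Center-Bridging
if (-not $feature.Installed) {
    Install-WindowsFeature -Name Data-Center-Bridging
}
```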

3. Create DCB Traffic Classes

In the next step you will create DCB traffic classes. Each cmdlet will create a class that matches the higher-level QoS rule:

- Name the class.

- Use a priority to pair the QoS rule with the DCB class; the IEEE P802.1p priority flags will match.

- Specify the fair ETS algorithm instead of the Strict algorithm, which always services the higher priority first.

- Assign a minimum bandwidth weight. Our total assigned weight will sum to 100 to make the weights appear as percentages and to optimize algorithms.

    # Create a traffic class on DCB NICs for backup traffic
    New-NetQosTrafficClass "Backup" -Priority 7 -Algorithm ETS -Bandwidth 20

    # Create a traffic class on DCB NICs for SMB Direct
    New-NetQosTrafficClass "SMB Direct" -Priority 3 -Algorithm ETS -Bandwidth 40

    # Create a traffic class on DCB NICs for Cluster
    New-NetQosTrafficClass "Cluster" -Priority 6 -Algorithm ETS -Bandwidth 10

    # Create a traffic class on Data Center Bridging (DCB) NICs for Live Migration
    New-NetQosTrafficClass "Live Migration" -Priority 5 -Algorithm ETS -Bandwidth 30
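To check that each traffic class picked up the expected priority, algorithm, and bandwidth weight, you can list the classes afterwards. A minimal sketch; expect to see the system's built-in default class in the output alongside the four created above.

```powershell
# List all DCB traffic classes with their priority, algorithm, and bandwidth.
Get-NetQosTrafficClass

# Inspect a single traffic class by name.
Get-NetQosTrafficClass -Name 'SMB Direct'
```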

4a. Enable PFC for RoCE

The following step is a requirement for RoCE NICs and should not be used on iWARP or InfiniBand NICs. This will force RoCE NICs to prioritize SMB Direct (Priority 3) over other protocols.

    Enable-NetQosFlowControl -Priority 3
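You can confirm which priorities have PFC enabled with Get-NetQosFlowControl. In this example design, only priority 3 should be reported as enabled:

```powershell
# Show the PFC state for each of the eight 802.1p priorities (0-7).
Get-NetQosFlowControl
```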

4b. Enable DCB on the NICs

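The first step is to configure the DCB Exchange (DCBX) setting. Setting Willing to $false tells the host to keep its locally defined QoS configuration instead of accepting settings pushed from the switch:

    Set-NetQosDcbxSetting -Willing $false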

The second step will be to enable DCB on the RDMA-capable NICs (rNICs). This is much easier if you have servers capable of Consistent Device Naming (CDN) because you can then predict the name of the required NICs on every host:

    Enable-NetAdapterQos "rNIC1"
    Enable-NetAdapterQos "rNIC2"
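As a final check, you might review the DCBX setting and the QoS state reported by each NIC. A minimal sketch, assuming the rNIC1 and rNIC2 adapter names from the example above:

```powershell
# Confirm that the Willing setting is now False.
Get-NetQosDcbxSetting

# Show whether QoS is enabled on each rNIC, along with the traffic
# classes and flow control settings the adapter reports.
Get-NetAdapterQos -Name 'rNIC1', 'rNIC2'
```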

There is more work involved in configuring QoS that is managed by DCB, but the effort is worth it. Thanks to DCB you can take advantage of RDMA for economical and fast SMB 3.0 storage (WS2012 and WS2012 R2) and use SMB Direct to offload the processing of live migration (WS2012 R2).