This article explains the basic Hyper-V host networking requirements. It isn’t really a post on “How many NICs do I need in a Hyper-V host?” Instead, it discusses the communications that Hyper-V performs and identifies why those protocols or functions need to be isolated to keep a cloud stable. Understanding these needs is a critical step in designing Hyper-V hosts, particularly those that will take advantage of new features in Windows Server 2012 (WS2012) or Windows Server 2012 R2 (WS2012R2).

Basic Hyper-V Host Network Requirements and Designs

Network design for Hyper-V hosts was much simpler before the release of WS2012 because we did not have many options. The decision-making process simply came down to:

- Will my hosts be clustered?

- Will I use NIC teaming?

- Do I need a dedicated backup network?

- Is the storage connected by iSCSI?

- If I am using WS2012, do I want a Live Migration network on non-clustered hosts?

In essence, there were two designs with minor variations depending on the answers to those questions. Those two designs are standalone (or non-clustered) hosts or clustered hosts.

Requirements of a Standalone Host

In this simple design there must be two networks:

- Management: This network is used to remotely manage the Management OS (sometimes referred to as the host OS) of the host. Remote desktop, monitoring, backup, and Hyper-V management traffic all pass through this network by default.

- Virtual Machine: A virtual switch (referred to as a virtual network prior to WS2012) is connected to this network. This network allows virtual machines to connect to the physical network of your computer room, data center, or cloud.

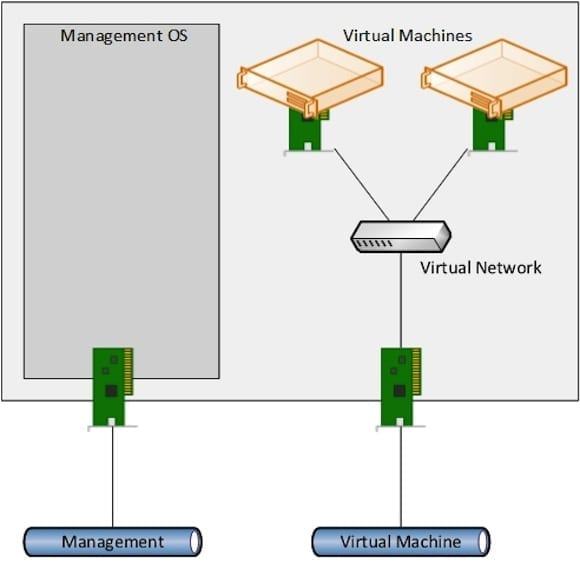

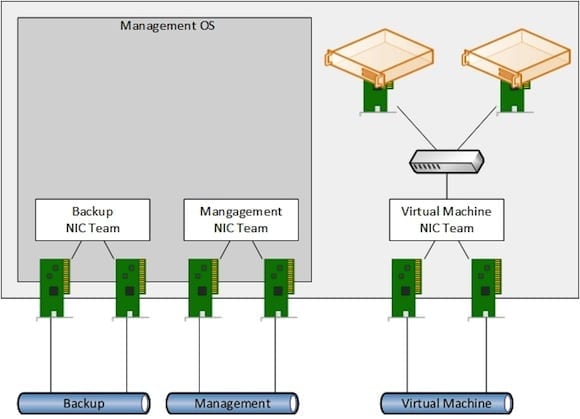

Basic Standalone Host Design

Note how I haven’t talked about NICs yet. Instead, I have deliberately used the word “network.” A NIC is a physical connection; think of it as a port and cable that you can touch. A network is a logical connection, so think of it as a role that serves a purpose. In W2008/R2 both of these networks usually did have dedicated NICs.

Basic networking in a standalone host.

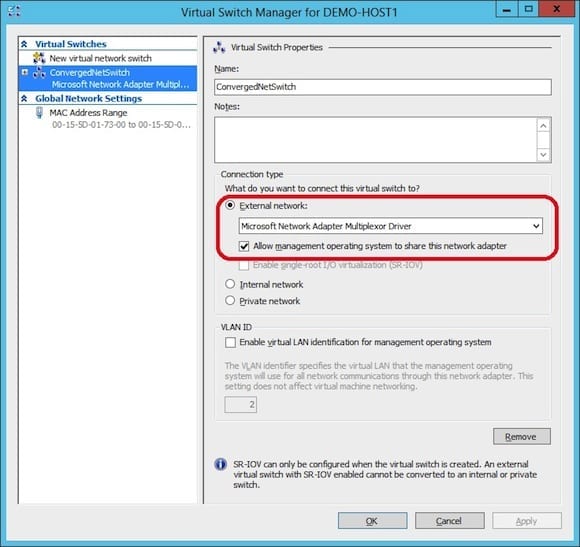

How many NICs is that? Two: one for management and one for virtual machines. Veterans of Hyper-V will know that there is a slightly different variation to this. In the properties of a virtual network (before WS2012) or virtual switch you can enable a setting called Allow Management Operating System To Share The Network Adapter.

Allow the management OS to connect to the network via the virtual switch.

This modifies the basic design by creating a virtual NIC (vNIC) in the Management OS. That takes a few moments to comprehend; remember that the Management OS sits on top of Hyper-V. This new virtual NIC appears in Control Panel > Network Connections just like a physical NIC would. But instead of being connected to a physical switch, this new Management OS vNIC connects to your virtual network or virtual switch. That means that the Management OS can connect to the physical network via the virtual switch, just like a virtual machine. It also means that you no longer need a dedicated NIC for the Management OS.

A basic host using a single Management OS virtual NIC.

Prior to WS2012 we usually advised against implementing this design in production. The problem is that there was no quality of service (QoS) prior to WS2012 to stop one network from flooding the shared connection and starving the other. For example:

- A backup or restore job via the Management OS network would prevent virtual machines from being able to use the network.

- A rogue virtual machine could flood the network and you would have to physically visit the host to take remedial action.

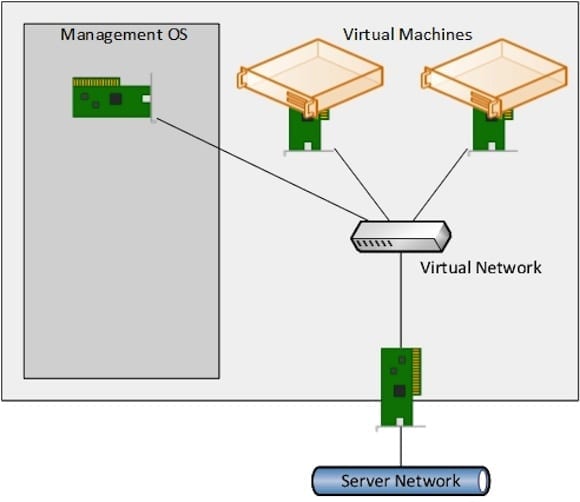

Adding a Backup Network to a Standalone Host

Modern backup tools have a very light impact on hosts and networking. That is because they capture changes only, and some even add deduplication to that optimization. A restore, which is a time-critical task, is very heavy on the network and, as previously mentioned, could flood a physical connection and therefore put other roles on that network out of action.

This is why many engineers have decided to implement a dedicated backup network, as you can see below. Adding this additional NIC gave physical isolation to backup traffic in lieu of having QoS functionality in Windows Server 2008/R2.

Adding a dedicated backup network.

Adding NIC Teaming to a Standalone Host

The designs so far have only used a single NIC for each networking role. That NIC can only be connected to a single top-of-rack (TOR) or access switch by a single cable or bus. There are several single points of failure along that chain, and the switch is the one that is most likely to fail. You can introduce fault-tolerant network paths by adding NIC teaming. Before Windows Server 2012 this required the use of software provided by the NIC or server manufacturer. Note that the use of this third-party NIC teaming software is not, and never has been, supported by Microsoft in Hyper-V. It can be done, but you then have to add the vendor into your support chain for Hyper-V, and you must follow the instructions very carefully to get stability and security.

Microsoft added built-in and completely supported NIC teaming to WS2012, meaning you no longer have to use third-party software for this design. That simplifies support, and to be honest, simplifies implementation – it is standardized across servers, supports mixed vendor teams, and can be automated with PowerShell or System Center.

Adding NIC teaming, as shown below, does increase network path fault tolerance but it also increases costs:

- Double the NICs

- Double the switches (purchase, support, power, administration, and so on)

Adding NIC Teaming.

NIC teaming seems like the automatic, sensible choice for everyone. However, in a (huge) cloud where fault tolerance is built into the application rather than the host layer, concepts like host clusters and NIC teaming make absolutely no sense. The rack is considered a fault domain, and virtual machines in the same tier of a service are spread across racks. This allows those cloud “landlords” to use very simple hosts (single power supply, NICs, TOR switches, and so on) while the service still stays highly available.
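To make the fault domain idea concrete, here is a minimal sketch that assumes a simple round-robin placement of one service tier across racks. The rack and virtual machine names, and both helper functions, are hypothetical; real cloud schedulers are far more sophisticated than this.

```python
from collections import defaultdict
from typing import Dict, List


def spread_across_racks(vms: List[str], racks: List[str]) -> Dict[str, List[str]]:
    """Place the VMs of one service tier round-robin across racks (fault domains)."""
    if not racks:
        raise ValueError("at least one rack is required")
    placement = defaultdict(list)
    for index, vm in enumerate(vms):
        placement[racks[index % len(racks)]].append(vm)
    return dict(placement)


def survivors(placement: Dict[str, List[str]], failed_rack: str) -> List[str]:
    """Return the VMs that are still running after one whole rack fails."""
    return [vm for rack, vms in placement.items() if rack != failed_rack for vm in vms]


# Hypothetical web tier of four VMs spread over two racks.
web_tier = spread_across_racks(["web1", "web2", "web3", "web4"], ["rack-a", "rack-b"])
print(web_tier)
print(survivors(web_tier, "rack-a"))
```

Losing an entire rack, including its single TOR switch, still leaves half of the tier running, which is why the individual hosts in this kind of design can be kept so simple.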

Further Standalone Host Thoughts

So far we haven’t considered some of the concepts that WS2012 has introduced to non-clustered Hyper-V hosts:

- SMB 3.0 Storage: Non-clustered hosts can store virtual machines on common SMB 3.0 shares. This allows for the introduction of highly available storage (instead of DAS) for easier recovery from non-clustered host failure. It also introduces the possibility of Live Migration. Note that SMB Multichannel can use multiple NICs.

- Live Migration: Non-clustered hosts can perform Live Migration of virtual machines on SMB 3.0 storage and Shared-Nothing Live Migration. Live Migration is bandwidth intensive; a network can be added to hosts (with or without NIC teaming) and designated as the Live Migration network.

As you can see, the number of NICs that you might deploy to implement these networks keeps growing and adding costs to the project. Wait till you see what clustering has for us!

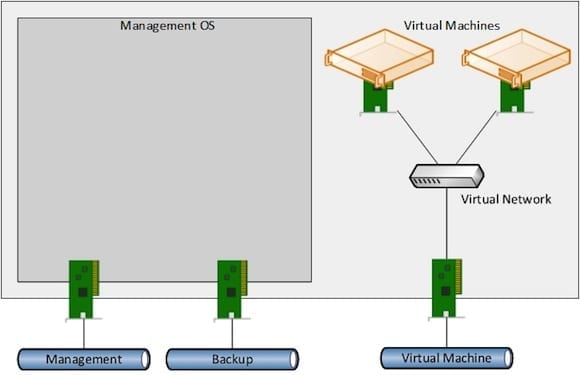

The Clustered Host

Clustering needs networking with guaranteed quality to keep the cluster stable. Without QoS in Windows Server, each network requires its own NIC. And you are going to have to double those NICs if you want to have network path fault tolerance.

The following networks are required in a clustered host. Note: Please ignore materials on non-Hyper-V clustering where fewer networks are required:

- Management: To manage the host remotely.

- Virtual Machine: To let virtual machines connect to the physical network.

- Cluster Communications: This private (non-routed) network is used to provide the heartbeat between cluster nodes to assess health. This network must be reliable. In W2008/R2 Hyper-V, this network is also used for Redirected IO (it has the lowest metric). Note that in WS2012, Redirected IO uses SMB 3.0, and therefore can use multiple NICs over SMB Multichannel.

- Live Migration: Having two private (non-routed) networks is considered good practice in clustering because it gives the cluster heartbeat a second private, and therefore reliable, path. Relying on the Management network could be foolish if it becomes swamped, for example, by a virtual machine deployment. This second private network will primarily be used for Live Migration traffic. Remember, if you have multiple clusters then make sure this network is common between those clusters for Shared-Nothing Live Migration.

- Storage: Usually two adapters will be used to connect the hosts to either SMB 3.0 or SAN storage. These adapters could be SAS controllers, and thus outside the scope of this networking discussion. However, they could be iSCSI NICs (use MPIO, not NIC teaming) or they could be for SMB 3.0 (do not use NIC teaming if implementing RDMA/SMB Direct).

- Backup: Once again, we will add a backup network to isolate backup/restore traffic from the management network.

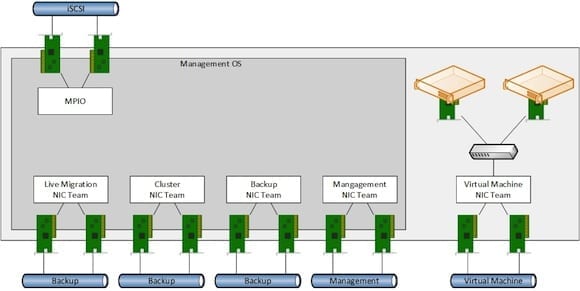

Without the storage network and NIC teaming, that gives us a total of 5 NICs. Add NIC teaming and we have 10 NICs. If we include iSCSI or SMB 3.0 storage, we now have 12 NICs. That is a lot of NICs, cables, switch ports, electricity, complexity, expense, management, and critically, more stuff that can break.
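The arithmetic behind those totals can be sketched as follows. This is only an illustration: the function name and parameters are made up for the example, and it assumes, as in the list above, that the two storage adapters are never teamed (MPIO or SMB Multichannel is used instead).

```python
# The five networks from the list above that may be teamed.
TEAMABLE_NETWORKS = [
    "Management",
    "Virtual Machine",
    "Cluster Communications",
    "Live Migration",
    "Backup",
]

# Assumption: two storage adapters (iSCSI or SMB 3.0), which are not teamed.
STORAGE_ADAPTERS = 2


def count_nics(teamed: bool, network_storage: bool) -> int:
    """Count the physical NICs needed when every network gets its own NIC(s)."""
    nics_per_network = 2 if teamed else 1
    total = len(TEAMABLE_NETWORKS) * nics_per_network
    if network_storage:
        total += STORAGE_ADAPTERS
    return total


print(count_nics(teamed=False, network_storage=False))  # 5
print(count_nics(teamed=True, network_storage=False))   # 10
print(count_nics(teamed=True, network_storage=True))    # 12
```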

Remember, in the diagram below:

- The use of iSCSI (shown) or NICs for SMB 3.0 (not shown) is dependent on the type of storage you require

- The backup network is not listed as required by Microsoft, but it is strongly recommended to ensure a host is manageable during the restoration of a virtual machine

A clustered host with fault tolerant networking.

Are These Designs for WS2012 Hyper-V?

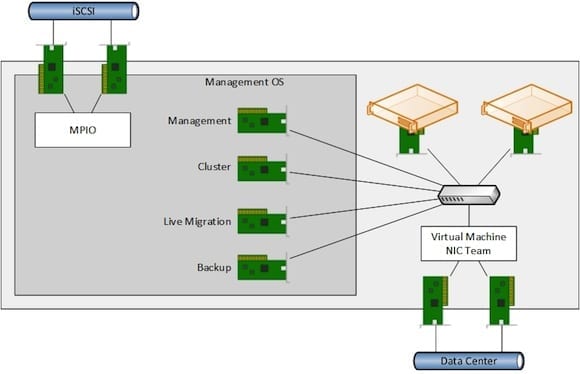

To paraphrase American comedian Denis Leary: I got two words for ya: Hell No! Earlier in this document we stressed that the networks (or roles) did not necessarily map to NICs (physical connections). But they do in the case of W2008/R2 Hyper-V because we have no means to guarantee a minimum level of service for the functions of Hyper-V/Failover Clustering in those legacy operating systems. WS2012 introduces a new concept for Hyper-V called converged networks, also known as converged fabrics. Using built-in QoS we can create minimum bandwidth rules. That opens up a wide range of new design options where we use fewer, higher-bandwidth NICs, and merge our networks into those NICs. Here’s a teaser for you in the image below, in which just two 10 GbE NICs provide all the networking functionality of a clustered host, with two more dedicated NICs for iSCSI storage:

Convergence via the virtual switch plus dedicated iSCSI NICs
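As a rough illustration of what minimum bandwidth rules mean in practice, the sketch below assumes weight-based rules, where each network's guaranteed share of a link is its weight divided by the sum of all the weights. The weights and the 10 Gbps capacity are hypothetical numbers picked for the example, not recommendations, and the function is not a Hyper-V API.

```python
from typing import Dict


def guaranteed_gbps(weights: Dict[str, int], link_gbps: float) -> Dict[str, float]:
    """Return the minimum bandwidth each network gets when the link is congested."""
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return {name: link_gbps * weight / total_weight for name, weight in weights.items()}


# Hypothetical weights for the networks merged onto the converged NICs.
example_weights = {
    "Management": 10,
    "Cluster Communications": 10,
    "Live Migration": 30,
    "Virtual Machine": 50,
}

for network, gbps in guaranteed_gbps(example_weights, link_gbps=10.0).items():
    print(f"{network}: at least {gbps:.1f} Gbps")
```

A minimum is a floor, not a cap: a network can use more than its share whenever the other networks are idle, which is what makes merging the roles onto fewer NICs practical.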

Watch out for future articles where we will dig deeper into understanding and designing converged network hosts.