What are Hyper-V Containers?

You’ve probably read about Windows Server Containers in the past, and the concept sort of made sense to you until you started to wonder what the technical difference is between containers and machine virtualization (Hyper-V or vSphere). Things might be about to get really unclear now. With Windows Server 2016 (WS2016), Microsoft will be bringing us a new kind of container, called Hyper-V Containers, that crosses Hyper-V with containers. Is that a container in a virtual machine or the other way around? Thankfully, Mark Russinovich was featured recently in a Channel 9 video to explain what Windows Server Containers and Hyper-V Containers are. I’ll explain the difference in this article.

User and Kernel Mode

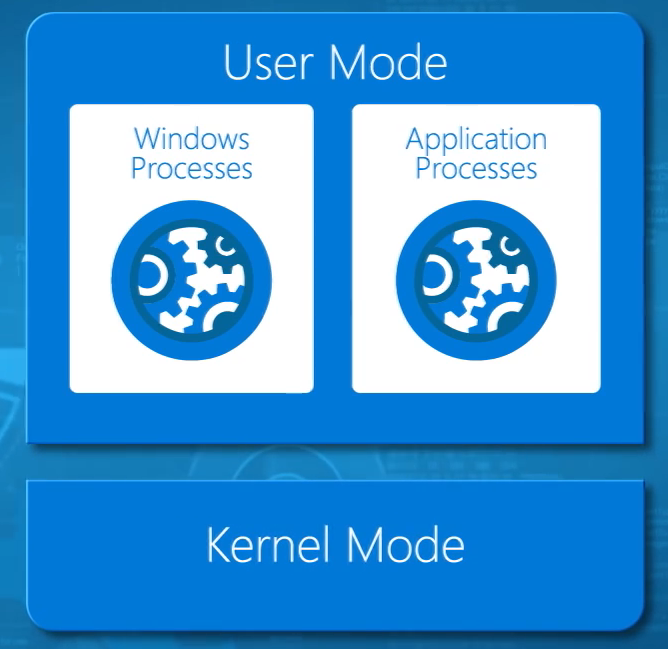

The key to understanding how containers work is rooted in day one of learning computer science. An operating system has two modes of operation, and a computer’s processor switches between these modes depending on the process that it is running.

The first of these operational levels is kernel mode. This is where the core functions of an operating system reside, and you’ll find features such as drivers here too. Kernel mode is the heart of the operating system, and therefore it is very sensitive and secure. An operating system restricts what can run in kernel mode because the virtual memory space is shared between all processes that execute here.

User mode is where processes that we recognize from Task Manager run. Unlike in kernel mode, something crashing in user mode doesn’t bring down the entire machine; it only crashes the process. Non-kernel mode Windows processes and applications run in user mode.
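A small experiment makes the user mode point concrete. The sketch below is only an illustration and is not taken from the Channel 9 video: it uses Python’s standard `subprocess` module to start a child process that deliberately aborts itself, and the helper name `run_crashing_child` is made up for this example. The child dies abnormally, but the parent process (and the machine) carries on.

```python
import subprocess
import sys


def run_crashing_child() -> int:
    """Start a user-mode child process that aborts itself; return its exit code."""
    # os.abort() terminates the child abnormally, without running cleanup handlers.
    child_code = "import os; os.abort()"
    result = subprocess.run(
        [sys.executable, "-c", child_code],
        stdout=subprocess.DEVNULL,
        stderr=subprocess.DEVNULL,
    )
    return result.returncode


if __name__ == "__main__":
    exit_code = run_crashing_child()
    # The parent is still alive, so it can report on the crash.
    print(f"child ended abnormally with exit code {exit_code}; parent is still running")
```

The exact exit code differs between operating systems, so the example only relies on it being non-zero.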

In the world of servers, we typically use the one service per operating system rule. Gone are the days of admins installing lots of services onto a single machine to reduce server sprawl. A key method we adopted was machine virtualization; this reduced the required number of physical servers, but also meant that, with templates and automation, we could deploy a new machine in under an hour instead of a week or a month. You’d think that anywhere from 10 minutes to an hour would be amazing, but we’re in a new world where services need to appear or grow instantly, and machine virtualization doesn’t accommodate that, because deploying a new machine still involves:

- OS specialization (sysprep)

- Configuring a network stack

- Joining a domain

- Patching

- Multiple reboots

- Installing service prerequisites

- Installing the service

- More patching and reboots, and so on …

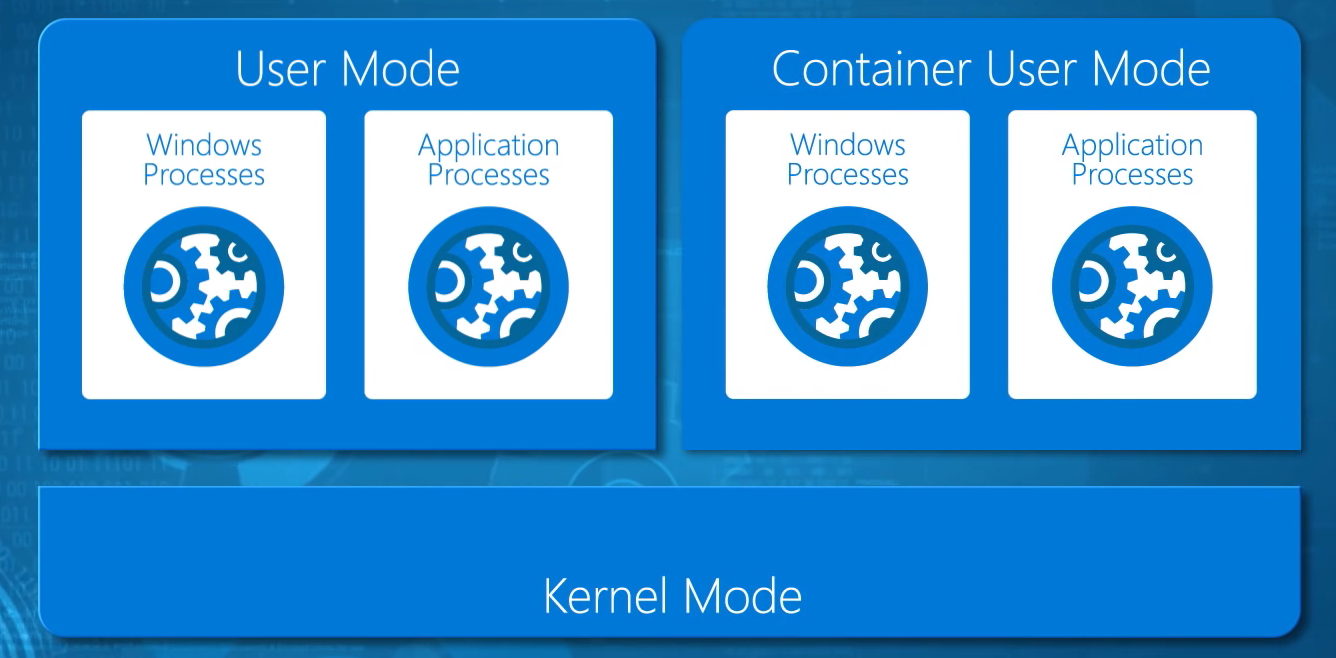

Windows Server Containers

When you install a machine, be it physical or virtual, that machine has a single user mode executing on top of a single kernel mode. We have used machines, physical or virtual, as a boundary to allow us to have multiple user modes so that we can deploy lots of isolated applications. For example, Hyper-V offers us child partitions (virtual machines), each of which can have its own Windows or Linux guest OS with the requisite kernel and user modes, with each application installed in its own user mode (its own virtual machine). But what if we could have more than one user mode per kernel mode, and only required one machine per kernel mode? That’s what containers do.

Microsoft has worked very closely with the open source community to bring us the Windows alternative to Linux Containers, called Windows Server Containers. A machine, physical or virtual (a VM host), is deployed with the usual Windows Server operating system with a kernel mode and a user mode. The user mode of the original operating system is used to manage the container host (the machine hosting the containers). A special stripped-down version of Windows, stored in a container repository as a container OS image, is used to create a container. This container features only a user mode; this is the distinction between Hyper-V and containers, because a virtual machine runs a guest OS with both user mode and kernel mode. The Windows Server Container’s user mode allows Windows processes and application processes to run in the container, isolated from the user mode of other containers.

Windows Server Containers, by virtualizing the user mode of the operating system, allow multiple applications to run in an isolated state on the same machine, but they do not offer secure isolation.

There is a single kernel mode, owned by the container host. The user mode of the host and the user mode of any containers on this host share this single kernel mode. And this leads to a potential security issue. What if a container is facing the public (and therefore can be compromised) or is going to run “hostile code” (code from an external source)? There is a single kernel mode, which would then be open to attack, and once compromised, would open up all other containers on that container host to the perpetrator. In the Channel 9 video, Russinovich explains that Microsoft wants to use containers for Azure Automation; this allows Microsoft to instantly deploy and start containers to run code supplied by Azure customers. Microsoft has no idea what this code is doing and cannot allow that code to directly access the kernel mode of the underlying Azure hosts because it would leave Microsoft and other Azure customers open to attack.
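The shared-kernel risk can be sketched as a toy model. Nothing here touches a real kernel or a real container API; the `Kernel` and `Container` classes and the container names are invented purely to show why one compromised kernel exposes every container that runs on it.

```python
from dataclasses import dataclass


@dataclass
class Kernel:
    """Toy stand-in for one kernel mode instance (hypothetical, for illustration)."""
    owner: str
    compromised: bool = False


@dataclass
class Container:
    """Toy container: a user mode that runs on top of some kernel."""
    name: str
    kernel: Kernel

    def exposed(self) -> bool:
        # A container is exposed as soon as the kernel underneath it is compromised.
        return self.kernel.compromised


# Windows Server Containers: every container shares the host's single kernel.
host_kernel = Kernel(owner="container host")
containers = [Container(name, host_kernel) for name in ("web", "db", "batch")]

# Hostile code in the "web" container breaks into the kernel it runs on.
containers[0].kernel.compromised = True

exposed = [c.name for c in containers if c.exposed()]
print(exposed)  # prints ['web', 'db', 'batch']
```

Because all three toy containers hold a reference to the same `Kernel` object, compromising it through one container exposes them all, which is the situation described above.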

Hyper-V Containers

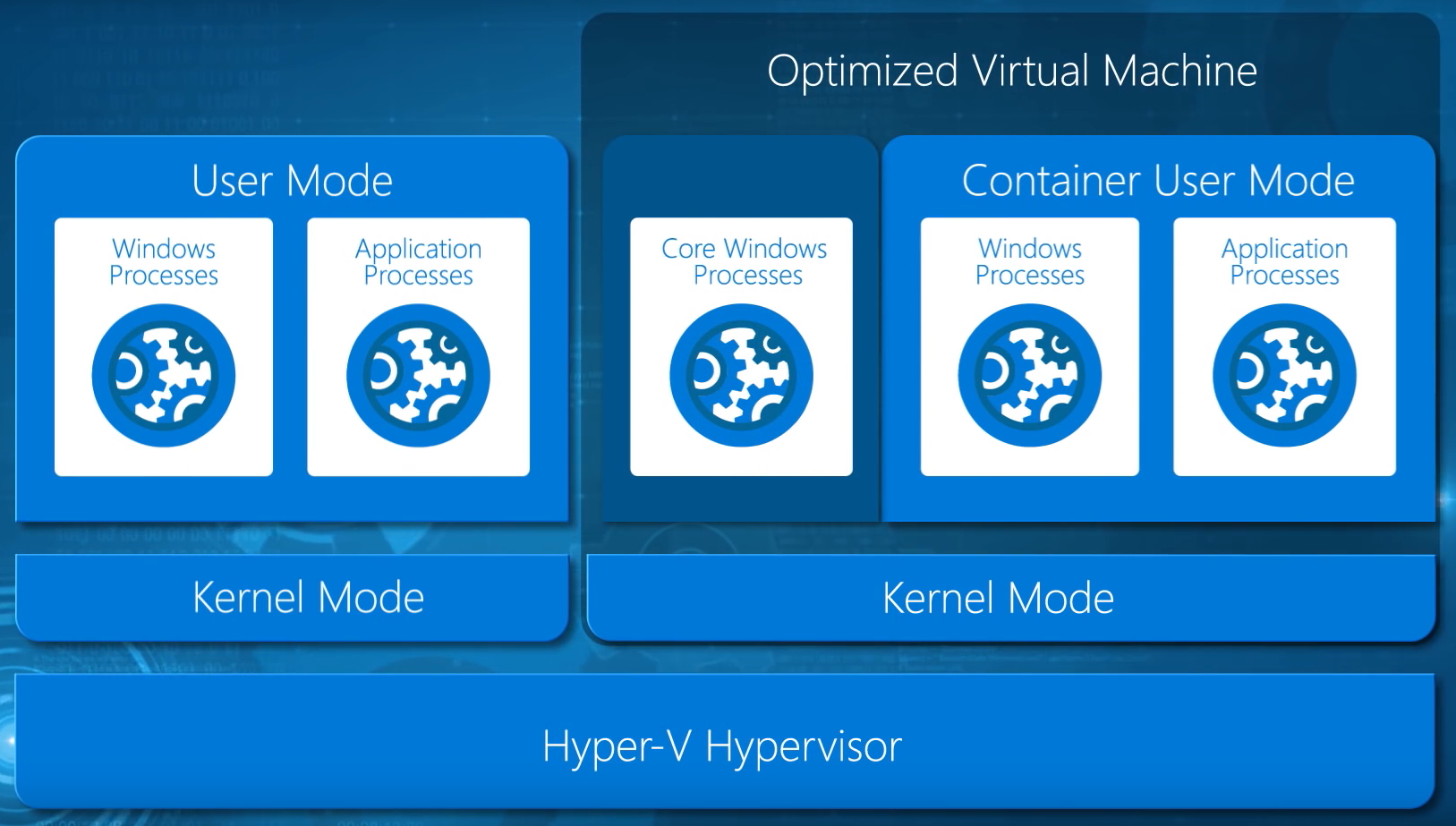

Hold onto your slippers, Dorothy, things are about to get rough. At this point you understand that a simple way to differentiate a container from a virtual machine is that the virtual machine runs a full guest OS with kernel and user modes, but a container only has a user mode, right? That’s kind of, sort of, close to being right. But then we have Hyper-V Containers, which completely confuse the issue.

Imagine a machine, physical or virtual (nested virtualization, coming in WS2016), that we can install the Hyper-V role onto. The parent partition (or management OS) has isolated kernel and user modes, and is responsible for managing the host. Each child partition, or hosted virtual machine, runs an operating system with a kernel mode and user mode.

In the case of Hyper-V Containers, things are similar, but different. The child partition that is deployed is not a virtual machine; it is a Hyper-V Container. The guest OS is not the normal Windows that we know, but an optimized, stripped-down version of Windows Server. No, this is not the same as Nano Server.

When deployed, the Hyper-V Container boots in seconds, much faster than a virtual machine with a full OS (even Nano). The Hyper-V Container features an isolated kernel mode, a user mode for core system processes, and a Container User Mode, which is the same thing that runs in a Windows Server Container. The boundary provided by the Hyper-V child partition provides secure isolation between the Hyper-V Container, other Hyper-V Containers on the host, the hypervisor, and the host’s parent partition. And this is the technique that Microsoft wants to use to host “hostile code” in services such as Azure Automation.

The container images that are used to create Hyper-V Containers and Windows Server Containers are compatible. We can simply change the run type of a container (while it is stopped) to switch it between the two deployment types.
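Microsoft had not published the management interface for this at the time of writing, so the following is only a toy model of the idea, not real tooling. The `RunType` enum, the `ToyContainer` class, and its methods are all hypothetical names; the sketch just encodes the two points above: the same container can use either run type, and the switch happens while the container is stopped.

```python
from dataclasses import dataclass
from enum import Enum


class RunType(Enum):
    WINDOWS_SERVER = "Windows Server Container"  # shares the host's kernel mode
    HYPER_V = "Hyper-V Container"                # own kernel mode in a child partition


@dataclass
class ToyContainer:
    """Hypothetical model of a container whose run type can be switched."""
    name: str
    run_type: RunType = RunType.WINDOWS_SERVER
    running: bool = False

    def set_run_type(self, new_type: RunType) -> None:
        # The switch is only allowed while the container is stopped.
        if self.running:
            raise RuntimeError("stop the container before changing its run type")
        self.run_type = new_type

    def kernel_owner(self) -> str:
        # Which kernel mode the container's user mode ends up running on.
        if self.run_type is RunType.HYPER_V:
            return f"{self.name} (isolated kernel)"
        return "container host (shared kernel)"


job = ToyContainer("automation-job")
print(job.kernel_owner())  # prints container host (shared kernel)
job.set_run_type(RunType.HYPER_V)
print(job.kernel_owner())  # prints automation-job (isolated kernel)
```

The point of the model is that nothing about the container itself changes; only the boundary it runs inside does.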

Beyond this, little more has been shared publicly by Microsoft about Hyper-V Containers. At Ignite, Microsoft promised that we’d see a Technical Preview 4 (TP4) release of Windows Server 2016 that would include Hyper-V Containers and the required Hyper-V nested virtualization (which is already in a recent Windows Insiders preview of Windows 10).