Configuring Quality of Service (QoS) Rules in Windows Server 2012

Windows Server 2012 (WS2012) introduced enhanced Quality of Service (QoS), which allows us to guarantee a minimum level of service to network protocols (on physical or virtual machines) or to virtual NICs (in a virtual machine or the management OS). There are several ways to implement QoS, and it is important that you choose the right one for the host or server design that you are using.

What Is Quality of Service (QoS)?

Quality of Service allows an administrator to guarantee bandwidth or to set limits so that multiple services can share a single network connection. This gives us the ability to reliably create converged network designs, where multiple networks run on a single NIC or NIC team. For example, a clustered Hyper-V host requires management, live migration, cluster communications, and backup networks. These can all be connected to the same virtual switch as the virtual machines that are running on the host, and the virtual switch can be connected to a pair of high-bandwidth NICs.

There are two kinds of QoS rules:

- Minimum bandwidth: A service is guaranteed a minimum amount of bandwidth if it needs it. Unused bandwidth is available to other services that need to burst beyond their minimum guarantee. This offers great flexibility, and it is the option that Microsoft recommends.

- Maximum bandwidth: This option limits the bandwidth that can be used by a service. It is inflexible, and it should be used only when you really need to cap utilization, such as temporarily limiting the damage caused by a troublesome virtual machine that is eating up the network connection until the issue is fixed.
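The practical difference between the two rule types shows up when the rest of the link is idle. The Python sketch below is a simplified model under stated assumptions (a single link, rates in Gbps, and the other services' current usage treated as a fixed number); it is not how the Windows scheduler is implemented, and the function names are hypothetical.

```python
# Hypothetical model of the two rule types on one link (all values in Gbps).
# Illustrates the behaviour described above; it is not Windows' scheduler.

def rate_with_minimum(demand: float, guarantee: float, link: float, others_in_use: float) -> float:
    """A minimum rule guarantees `guarantee` but lets the service burst into unused capacity."""
    unused = max(link - others_in_use, 0.0)
    # The service gets at least its guarantee (if it wants it), or all unused bandwidth if that is more.
    return min(demand, max(guarantee, unused))


def rate_with_maximum(demand: float, cap: float) -> float:
    """A maximum rule caps the service even when the rest of the link is idle."""
    return min(demand, cap)


# A busy VM wants 8 Gbps of a 10 Gbps link while other services use only 0.5 Gbps.
print(rate_with_minimum(demand=8.0, guarantee=1.0, link=10.0, others_in_use=0.5))  # 8.0
print(rate_with_maximum(demand=8.0, cap=1.0))  # 1.0
```

With the link almost idle, the minimum rule lets the virtual machine burst to the 8 Gbps it wants, while a 1 Gbps maximum rule holds it at 1 Gbps even though the remaining bandwidth goes unused.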

Weight or Absolute?

There are two ways to define bandwidth when you create QoS rules: as a relative weight or as an absolute value in bits per second. A weight allocates a share of the bandwidth to a specific virtual NIC or network protocol. For example, say you allocate the following weights:

- 40 to the Live Migration protocol

- 10 to cluster communications

- 50 to SMB 3.0

In this case the weights sum to 100 (40 + 10 + 50), so the weight of 40 gives live migration a guarantee of 40% of the bandwidth if it needs it. Live migration can burst beyond its 40% share if it needs more bandwidth and the other services are not fully using their minimum guaranteed allocations.
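The weight arithmetic, and the way unused guarantees are handed to services that want to burst, can be sketched as a weighted fair-share ("water-filling") allocation. This Python model is an illustration, not Microsoft's algorithm: it assumes a 10 Gbps link, positive weights, and known demand per service, and the function and service names are hypothetical.

```python
def allocate(link: float, weights: dict[str, float], demand: dict[str, float]) -> dict[str, float]:
    """Split `link` by weight, then hand any unused share to services that still want more."""
    alloc = {name: 0.0 for name in weights}
    # Only services with a positive weight and some demand take part.
    hungry = {n for n in weights if weights[n] > 0 and demand.get(n, 0.0) > 0}
    remaining = link
    while remaining > 1e-9 and hungry:
        total_weight = sum(weights[n] for n in hungry)
        # Each hungry service is offered a slice of what is left, proportional to its weight.
        offers = {n: remaining * weights[n] / total_weight for n in hungry}
        remaining = 0.0
        for n in offers:
            take = min(demand[n] - alloc[n], offers[n])
            alloc[n] += take
            remaining += offers[n] - take  # the unused part of the offer goes back into the pool
            if demand[n] - alloc[n] <= 1e-9:
                hungry.discard(n)  # satisfied, so stop offering it bandwidth
    return alloc


weights = {"live_migration": 40, "cluster": 10, "smb": 50}
demand = {"live_migration": 8.0, "cluster": 0.1, "smb": 3.0}  # Gbps each service wants
result = allocate(10.0, weights, demand)
print({name: round(rate, 2) for name, rate in result.items()})
# {'live_migration': 6.9, 'cluster': 0.1, 'smb': 3.0}
```

Live migration's guaranteed 4 Gbps (40% of 10 Gbps) grows to about 6.9 Gbps because cluster communications and SMB are using less than their guarantees. If all three services were saturated, each would get exactly its weighted share.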

How to Apply QoS

There are three ways that you can apply a QoS rule:

- Assigned to virtual switch ports (effectively, virtual NICs)

- Applied by the OS Packet Scheduler of a host or virtual machine (per protocol)

- Enforced by a NIC using Datacenter Bridging (DCB)
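The sections that follow explain when each mechanism applies. As a rough summary, the choice depends on two questions: does the traffic use RDMA, and does it pass through a Hyper-V virtual switch? The Python function below encodes that rule of thumb; the names are hypothetical, and it deliberately ignores designs where one host uses more than one mechanism for different traffic.

```python
from enum import Enum


class QosMethod(Enum):
    VIRTUAL_SWITCH = "virtual switch (per virtual NIC)"
    PACKET_SCHEDULER = "OS Packet Scheduler (per protocol)"
    DCB = "Datacenter Bridging (enforced by the NIC)"


def choose_qos_method(passes_through_virtual_switch: bool, uses_rdma: bool) -> QosMethod:
    """Hypothetical rule of thumb for picking where a QoS rule is enforced."""
    if uses_rdma:
        # RDMA bypasses the OS networking stack, so only NIC-enforced rules can see it.
        return QosMethod.DCB
    if passes_through_virtual_switch:
        # Rules attach to virtual switch ports, i.e. to virtual NICs.
        return QosMethod.VIRTUAL_SWITCH
    # Ordinary traffic on a physical NIC (or inside a guest OS) is handled per protocol.
    return QosMethod.PACKET_SCHEDULER


print(choose_qos_method(passes_through_virtual_switch=False, uses_rdma=True).value)
# Datacenter Bridging (enforced by the NIC)
```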

Virtual Switch QoS

You will choose this option if you want to apply rules to virtual NICs that are owned by either the management OS of a host or a virtual machine. Note that the rules are actually assigned to the virtual switch’s ports, but the ports are an attribute of the virtual NIC, which means that a virtual machine’s QoS rules follow the virtual machine from host to host.

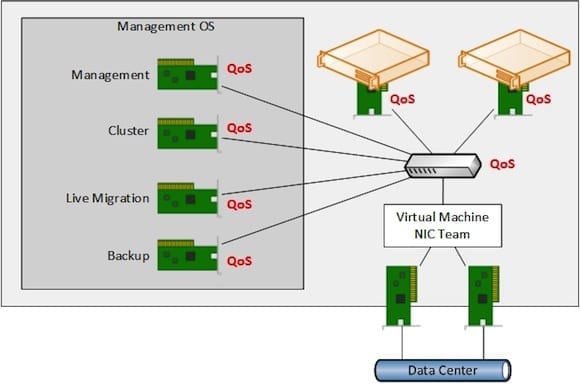

With this approach, there are three places that you can apply QoS rules:

- Any virtual NICs that are owned by the management OS for host communications.

- The virtual NICs of virtual machines.

- A default “bucket” on the virtual switch. Any virtual NIC without a rule will contend for this bandwidth.

A common solution is to apply minimum bandwidth rules only to the management OS virtual NICs and to the virtual switch default bucket. For example, the bucket might have a weight of 50 out of a total of 100, which means it is guaranteed 50% of the bandwidth. None of the virtual NICs on the virtual machines will have a QoS rule, so they will all contend for that 50% of minimum guaranteed bandwidth on a first-come-first-served basis.
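The weights in this design can be checked with a little arithmetic. The Python sketch below assumes a hypothetical split of the other 50 between four management OS virtual NICs and lists which virtual NICs fall into the default bucket. On a real host the equivalent settings are the Hyper-V PowerShell parameters MinimumBandwidthWeight (on Set-VMNetworkAdapter) and DefaultFlowMinimumBandwidthWeight (on Set-VMSwitch), on a virtual switch created in weight mode; the sketch only models the resulting percentages.

```python
def minimum_guarantees(rule_weights: dict[str, int], default_weight: int,
                       all_vnics: list[str]) -> tuple[dict[str, float], float, list[str]]:
    """Return each rule's guaranteed percentage, the default bucket's percentage,
    and the virtual NICs with no rule of their own, which share the default bucket."""
    total = sum(rule_weights.values()) + default_weight
    per_rule = {name: 100 * w / total for name, w in rule_weights.items()}
    bucket = 100 * default_weight / total
    unruled = [v for v in all_vnics if v not in rule_weights]
    return per_rule, bucket, unruled


# Hypothetical management OS virtual NIC weights (sum 50) plus a default bucket of 50.
mgmt = {"Management": 10, "LiveMigration": 25, "Cluster": 5, "Backup": 10}
vnics = list(mgmt) + ["VM01", "VM02", "VM03"]
per_rule, bucket, shared = minimum_guarantees(mgmt, 50, vnics)
print(per_rule)  # {'Management': 10.0, 'LiveMigration': 25.0, 'Cluster': 5.0, 'Backup': 10.0}
print(bucket, shared)  # 50.0 ['VM01', 'VM02', 'VM03']
```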

Applying QoS rules to virtual switch ports.

This form of QoS can only be used on Hyper-V hosts, because it relies on the virtual switch. Traffic that does not pass through a virtual switch, whether on a Hyper-V host, on a non-Hyper-V server, or even in a virtual machine, can also have QoS rules, and that’s why there are two other ways to implement QoS, both on a per-protocol basis.

OS Packet Scheduler QoS

With this approach, the OS Packet Scheduler in Windows Server enforces QoS rules that are assigned to network protocols. This is the key difference from the virtual switch option: there are no virtual NICs in this option. Therefore this method can be used on any machine (physical or virtual) where the protocols’ traffic leaving that machine does not pass through a virtual switch in that machine’s operating system.
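Per-protocol rules work by matching traffic against a filter and applying the policy's weight to whatever matches. The Python sketch below models only that classification step. The port numbers are assumptions based on common defaults (SMB on TCP 445, non-SMB live migration on TCP 6600, cluster heartbeats on UDP 3343), the weights reuse the earlier 40/10/50 example, and the Policy type and classify function are hypothetical; in Windows Server the real policies are created with New-NetQosPolicy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Policy:
    name: str
    transport: str  # "tcp" or "udp"
    dst_port: int   # destination port used as the match condition
    weight: int     # minimum bandwidth weight applied to matching traffic


# Hypothetical policies; the ports are common defaults, not guaranteed for every deployment.
POLICIES = [
    Policy("SMB", "tcp", 445, 50),
    Policy("LiveMigration", "tcp", 6600, 40),
    Policy("Cluster", "udp", 3343, 10),
]


def classify(transport: str, dst_port: int) -> str:
    """Return the name of the first policy whose filter matches, or 'Default'."""
    for policy in POLICIES:
        if policy.transport == transport and policy.dst_port == dst_port:
            return policy.name
    return "Default"


print(classify("udp", 3343))  # Cluster
print(classify("tcp", 80))    # Default
```

Live migration that runs over SMB also travels on TCP 445, so a port-based filter like this one would count it as SMB storage traffic. That is the limitation behind the SMB bandwidth categories that WS2012 R2 added.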

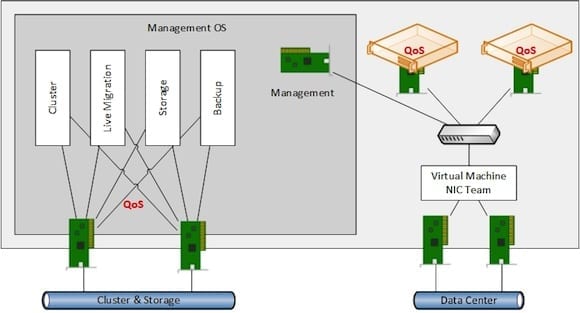

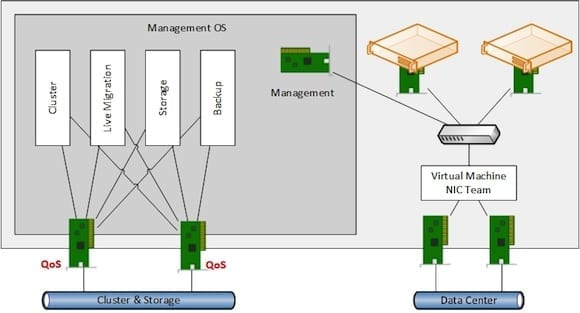

The example for this approach is a Hyper-V host in which the management OS uses a virtual NIC for its management traffic. That virtual NIC could use the virtual switch-enforced QoS approach described earlier. But note that the cluster, live migration, storage, and backup protocols (not virtual NICs) run through two physical NICs that, in this example, are not teamed. Each NIC has a single IP address, probably on a different VLAN from the other. This design meets the requirements for SMB Multichannel to a Scale-Out File Server and follows the best practice of giving the cluster heartbeat two networks to traverse, not to mention allowing SMB-powered live migration in WS2012 R2 to use double the bandwidth. Those services do not pass through a virtual switch, so in this example we would create QoS rules that are applied by the OS Packet Scheduler.

Note the virtual machines as well: we can also apply OS Packet Scheduler QoS rules in the guest OS, which lets us control the bandwidth shares of protocols within a virtual machine.

Applying QoS using the OS Packet Scheduler.

Datacenter Bridging QoS

Remote Direct Memory Access (RDMA) provides us with SMB Direct. This protocol bypasses the networking stack of the management OS to reduce latency, reduce processor impact, and improve overall SMB performance for storage (WS2012 and later) and live migration (an option in WS2012 R2 for 10 Gbps or faster networks). Notice a problem with that sentence? RDMA bypasses the networking stack of the management OS. That means the OS Packet Scheduler cannot apply QoS rules to RDMA traffic, which, if left uncontrolled, could easily consume 100% of the bandwidth on your NICs and shut out critical services such as the cluster heartbeat.

If you are going to use RDMA NICs (or rNICs), then you must use Datacenter Bridging (DCB) to enforce your QoS rules. This process pushes the rules down the networking stack so that your hardware applies QoS for you. This approach requires rNICs that support DCB, and the switches between the client and server must also support DCB and be configured for it.
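DCB rules are expressed in hardware terms: frames are tagged with an IEEE 802.1p priority (0 to 7), priorities are grouped into traffic classes, and Enhanced Transmission Selection (ETS) gives each class a minimum percentage of the link. The Python sketch below validates such a plan before you configure it. The class names, the choice of priority 3 for SMB Direct (a widely used convention), and the rule that user-defined classes must leave some bandwidth for a default class are assumptions of this sketch. On Windows Server the real settings are made with cmdlets such as New-NetQosTrafficClass and Enable-NetQosFlowControl.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrafficClass:
    name: str
    priorities: frozenset[int]  # IEEE 802.1p priority values (0-7) carried by this class
    bandwidth_percent: int      # ETS minimum share enforced by the NIC and switches


def build_ets_table(classes: list[TrafficClass]) -> dict[str, int]:
    """Check a hypothetical DCB plan and return each class's share, including 'Default'."""
    seen: set[int] = set()
    for tc in classes:
        for p in tc.priorities:
            if not 0 <= p <= 7:
                raise ValueError(f"{tc.name}: priority {p} is outside 0-7")
            if p in seen:
                raise ValueError(f"priority {p} is mapped to more than one class")
            seen.add(p)
    used = sum(tc.bandwidth_percent for tc in classes)
    # Assumption of this sketch: leave something for untagged/default traffic.
    if used >= 100:
        raise ValueError("user-defined classes must leave bandwidth for the default class")
    table = {tc.name: tc.bandwidth_percent for tc in classes}
    table["Default"] = 100 - used
    return table


plan = [
    TrafficClass("SMBDirect", frozenset({3}), 50),
    TrafficClass("Cluster", frozenset({5}), 10),
]
print(build_ets_table(plan))  # {'SMBDirect': 50, 'Cluster': 10, 'Default': 40}
```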

An additional benefit of DCB (for very large workloads) is that the processor on the host or server is not used to apply QoS rules.

Applying QoS using DCB.

SMB 3.0 Maximum Bandwidth Rules

WS2012 R2 introduced some new features that required QoS to evolve:

- Virtual machines can use Virtual Receive Side Scaling (vRSS) to perform SMB Multichannel over a virtual NIC, assuming that the virtual switch is connected to DVMQ-enabled physical NICs. This could consume a lot of bandwidth.

- A WS2012 R2 host can use SMB Direct for Live Migration, and a per-protocol QoS rule would not be able to differentiate between this and SMB 3.0 storage traffic.

For this reason, WS2012 R2 lets us set maximum bandwidth rules for three kinds of SMB 3.0 traffic:

- Default: Storage traffic

- VirtualMachine: SMB 3.0 traffic from a virtual machine

- LiveMigration: SMB 3.0 traffic caused by Live Migration.
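To see what a per-category cap does, the Python sketch below applies hypothetical maximum rates, in bytes per second, to the three SMB categories and estimates how long a transfer takes. The limit values and function names are assumptions for illustration; on a real WS2012 R2 host these limits are set with Set-SmbBandwidthLimit, which takes a -Category of Default, VirtualMachine, or LiveMigration and a -BytesPerSecond value.

```python
from typing import Optional

GB = 10**9  # decimal gigabyte, to keep the arithmetic simple

# Hypothetical maximum rates per SMB category; None means "no limit".
LIMITS: dict[str, Optional[int]] = {
    "Default": None,          # storage traffic
    "VirtualMachine": None,   # SMB traffic from virtual machines
    "LiveMigration": 1 * GB,  # cap SMB live migration at 1 GB/s
}


def effective_rate(category: str, demand_bps: int) -> int:
    """Bytes per second a transfer actually gets once its category's cap is applied."""
    cap = LIMITS[category]  # raises KeyError for an unknown category
    return demand_bps if cap is None else min(demand_bps, cap)


def transfer_seconds(category: str, size_bytes: int, demand_bps: int) -> float:
    """Time to move `size_bytes` at the capped rate."""
    return size_bytes / effective_rate(category, demand_bps)


# A 16 GB live migration that could run at 2.5 GB/s is held to 1 GB/s...
print(transfer_seconds("LiveMigration", 16 * GB, int(2.5 * GB)))  # 16.0
# ...while storage traffic of the same size is not capped.
print(transfer_seconds("Default", 16 * GB, int(2.5 * GB)))  # 6.4
```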

QoS: Not Just for Hyper-V

Each of the above examples illustrates how QoS makes converged networks possible for WS2012 or WS2012 R2 Hyper-V. However, QoS can be applied on any non-Hyper-V WS2012 or WS2012 R2 server using the OS Packet Scheduler or DCB. For example, you might use DCB-enforced rules on a Scale-Out File Server that uses rNICs. Or you might implement OS Packet Scheduler rules in the guest OS (WS2012 or later) of a virtual machine to ensure that a specific application protocol gets a fair share of bandwidth.