A-Series Virtual Machines

We will start with the most basic of the virtual machines. One thing you get used to with virtual machines in Azure is that they use a temporary disk that is assigned to D:. This drive holds the paging file, and some DBAs also use it for temporary databases. Any Hyper-V admin who is using Hyper-V Replica might already be deploying this kind of design: the benefit for replication is that we can exclude the D: drive and so avoid wasteful replication of its throwaway data. In addition, if this virtual machine might one day be migrated to Azure, the disk design work is already complete.
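To put a rough number on the replication saving, the Python sketch below totals per-disk daily write churn with and without the temporary disk. The disk labels and churn figures are made-up assumptions for illustration only; real churn depends entirely on the workload.

```python
# Hypothetical per-disk daily write churn, in GB, for one virtual machine.
# These figures are illustrative assumptions, not measurements.
disk_churn_gb = {
    "C: (OS)": 2.0,
    "D: (paging file / temp databases)": 40.0,
    "E: (data)": 5.0,
}

def replicated_churn(churn: dict[str, float], excluded: set[str]) -> float:
    """Total churn that would be replicated, skipping any excluded disks."""
    return sum(gb for disk, gb in churn.items() if disk not in excluded)

all_disks = replicated_churn(disk_churn_gb, excluded=set())
without_temp = replicated_churn(
    disk_churn_gb, excluded={"D: (paging file / temp databases)"}
)
print(f"Replicating every disk: {all_disks:.1f} GB/day")
print(f"Excluding the temporary disk: {without_temp:.1f} GB/day")
```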

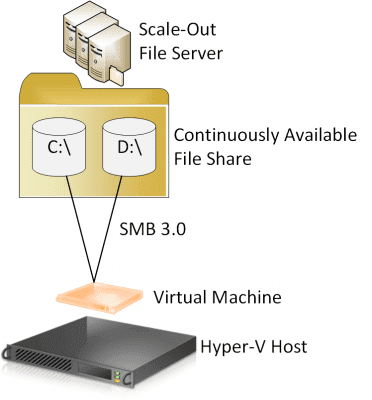

If you need data disks, add additional virtual hard disks to the virtual machine's SCSI controller. This brings up another interesting point. Each disk in Azure has a maximum performance of either 300 (Basic) or 500 (Standard) IOPS, so the way to accumulate more IOPS for data throughput in an Azure VM is to deploy more data disks. Azure applies Storage QoS (coming in Windows Server vNext for SOFS deployments) to cap the IOPS of each virtual hard disk.
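The arithmetic behind "add more disks" is a simple ceiling division, sketched below in Python using the per-disk caps quoted above. It assumes the workload spreads its I/O evenly across the disks (for example, by striping them inside the guest OS); other limits, such as the maximum number of data disks a given VM size allows, are not modelled, and the function name is illustrative.

```python
import math

# Per-disk IOPS caps for Azure data disks, as quoted in the text.
PER_DISK_IOPS_CAP = {"Basic": 300, "Standard": 500}

def disks_needed(target_iops: int, tier: str) -> int:
    """Smallest number of capped data disks whose combined cap meets target_iops.

    Assumes I/O is spread evenly across all disks (e.g. a striped volume
    in the guest), so the aggregate cap is simply disks * per-disk cap.
    """
    cap = PER_DISK_IOPS_CAP[tier]
    return math.ceil(target_iops / cap)

print(disks_needed(4000, "Standard"))  # 8
print(disks_needed(4000, "Basic"))     # 14 (4000 / 300 rounded up)
```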

Microsoft and storage vendors also use this technique of spreading the load across many virtual hard disks, along with some other hacks that are not recommended, to reach those one- or two-million IOPS claims.

There are two other features of Azure virtual machines that are worth noting.

Azure VMs give you dedicated processing capacity: each virtual machine gets dedicated capacity for its virtual processors. For a cloud the scale of Azure, that's not a huge cost, because A-Series virtual machines run on lower-cost AMD processors with large numbers of cores. For those of us who pay very different purchase prices for our hardware, dedicating capacity to every virtual machine is not a realistic option. However, some workloads, such as SharePoint, might require dedicated processing. You can use Hyper-V's processor resource controls, specifically the virtual machine reserve, to guarantee that a virtual machine gets a certain percentage of the capacity of the logical processors that it is running on.
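As a sketch of what a reserve costs the host, the Python function below converts a per-VM reserve into a share of the host's total processing capacity. It assumes the reserve is expressed relative to the VM's own virtual processors, which is how Hyper-V Manager presents the setting next to its "percent of total system resources" figure; the function name and example numbers are illustrative.

```python
def percent_of_host(reserve_pct: float, vcpus: int, host_lps: int) -> float:
    """Convert a per-VM processor reserve into a share of the whole host.

    Assumption: the reserve is relative to the VM's own virtual processors,
    so a 100% reserve on 4 vCPUs holds back the capacity of 4 logical processors.
    """
    if vcpus > host_lps:
        raise ValueError("a VM cannot have more virtual processors than the host has logical processors")
    return reserve_pct * vcpus / host_lps

# Example: a 4-vCPU SharePoint VM with a 50% reserve on a host with 32 logical processors.
print(percent_of_host(50, 4, 32))  # 6.25
```

Summing this figure across every VM with a reserve shows how much of the host is already spoken for before the remaining VMs compete for processor time.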

A8 and A9 Virtual Machines

The big perk of A8 and A9 virtual machines is that they are connected to InfiniBand networks. We cannot emulate this, because InfiniBand is not supported as a media type for Windows Server 2012 R2 Hyper-V virtual switch connectivity.

We can connect other kinds of high-bandwidth NICs (10 or 40 Gbps) to our virtual switches, but remember that there is no vRDMA in WS2012 R2 Hyper-V. However, we can enable vRSS in our virtual machines to push huge amounts of data through to them. Just remember that vRSS spreads network processing across the virtual processors, and that work consumes guest CPU time, so you have to find the right balance between bandwidth availability and processor load.

D-Series Virtual Machines

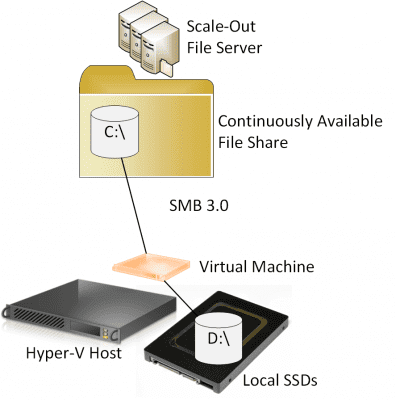

You can emulate the design of D-Series virtual machines by using a local SSD or array of SSDs in the host(s). When you create virtual machines, you place the operating system and data disks on your shared storage and place the temporary disk that contains the guest OS paging file and temporary databases on the hosts’ local SSD storage. This provides the virtual machine with huge amounts of IOPS for paging and disk-based caching to improve the performance of guest services.

But there is a downside: you cannot do this with highly available virtual machines. Such a virtual machine cannot fail over from one host to another, because its temporary drive is on the original host's local storage rather than on shared storage. And don't forget that creating these virtual machines requires complex automation, because tools such as System Center Virtual Machine Manager (SCVMM) won't build this kind of configuration for you.
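The failover restriction boils down to one rule: a virtual machine can only be highly available if every one of its virtual disks lives on shared storage. The Python sketch below checks that rule for a D-Series-style layout. The `VirtualDisk` type, the local `D:\LocalSSD` path, and the VM paths are hypothetical; it treats anything under `C:\ClusterStorage` (where Cluster Shared Volumes are mounted) or on a UNC share as shared.

```python
from dataclasses import dataclass

@dataclass
class VirtualDisk:
    role: str  # e.g. "os", "data", "temp"
    path: str  # where the VHDX file lives

# Path prefixes treated as shared storage: CSV mount points and SMB 3.0 (UNC) shares.
# Windows paths are case-insensitive, so comparisons use lowercase.
SHARED_PREFIXES = (r"c:\clusterstorage", "\\\\")

def is_shared(path: str) -> bool:
    return path.lower().startswith(SHARED_PREFIXES)

def can_be_highly_available(disks: list[VirtualDisk]) -> bool:
    """A VM can fail over only if every virtual disk is on shared storage."""
    return all(is_shared(d.path) for d in disks)

# D-Series-style layout: OS and data on a CSV, temporary disk on a hypothetical host-local SSD.
d_series_style = [
    VirtualDisk("os", r"C:\ClusterStorage\Volume1\vm01\os.vhdx"),
    VirtualDisk("data", r"C:\ClusterStorage\Volume1\vm01\data.vhdx"),
    VirtualDisk("temp", r"D:\LocalSSD\vm01\temp.vhdx"),
]
print(can_be_highly_available(d_series_style))  # False: the temp disk is host-local
```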

There are two alternatives you can consider that could boost the performance of virtual machines. The first is to use tiered shared storage. If we use Storage Spaces, then we can tier the disks as follows:

- High-speed SSDs for the hot working set data

- 7,200 RPM 4 TB and 6 TB HDDs (with 8 TB on the way) for colder data

Storage Spaces automatically and transparently optimizes the placement of blocks, using a heat map to keep hot data on the SSD tier. This is something that many SANs can offer too, sometimes with more tiers of storage. However, the storage is not local to the host, and therefore not local to the virtual machine, so there is still some latency across the network. Using RDMA storage networking or virtual Fibre Channel LUNs can reduce this, but there is another solution.
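The idea of heat-map tiering can be illustrated with a few lines of Python: count how often each block was accessed during a sampling window, then place the hottest blocks on SSD until that tier is full. This is a deliberately simplified sketch, not Storage Spaces' actual algorithm; the function name, block numbering, and capacities are assumptions.

```python
from collections import Counter
from collections.abc import Iterable

def plan_tiers(access_log: list[int], all_blocks: Iterable[int],
               ssd_capacity_blocks: int) -> tuple[set[int], set[int]]:
    """Split blocks into an SSD (hot) set and an HDD (cold) set by access count.

    access_log lists the block number of every access in the sampling window.
    The most frequently accessed blocks go to SSD until it is full; every
    other block, including blocks never accessed, stays on HDD.
    """
    heat = Counter(access_log)                       # the "heat map"
    ranked = [block for block, _ in heat.most_common()]
    hot = set(ranked[:ssd_capacity_blocks])
    cold = set(all_blocks) - hot
    return hot, cold

hot, cold = plan_tiers([1, 1, 1, 2, 3, 3, 4, 1, 3, 5], range(8), ssd_capacity_blocks=2)
print(sorted(hot))   # [1, 3]
print(sorted(cold))  # [0, 2, 4, 5, 6, 7]
```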

Products such as Proximal Data AutoCache enable you to deploy a mixture of the previous two solutions. Host-local SSD or NVMe (PCI Express flash storage) is used as a local read cache for hot data: the caching solution keeps hot blocks on this low-latency storage, while all writes are committed to the shared storage. This allows virtual machines to be made highly available while still delivering fast services. Note that this kind of solution could even replace the need for tiering on your shared storage. All virtual machines are placed on the shared storage as normal, so deployment is not complicated.
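The reason this design stays compatible with failover is that the cache is write-through: nothing exists only on the host-local flash. The Python sketch below models that behaviour with a least-recently-used read cache in front of a dictionary standing in for shared storage. It is a hypothetical illustration of the general technique, not how AutoCache is implemented.

```python
from collections import OrderedDict

class WriteThroughReadCache:
    """A minimal host-local read cache in front of shared storage.

    Reads are served from the local cache when possible (an LRU of hot blocks).
    Writes always go straight to shared storage, and any cached copy is updated
    so later reads stay consistent. Because nothing lives only in the cache, a
    VM can fail over to another host and simply start with a cold cache.
    """

    def __init__(self, shared_storage: dict[int, bytes], capacity: int):
        self.shared = shared_storage  # stands in for the CSV or SMB 3.0 volume
        self.capacity = capacity      # number of blocks the local SSD can hold
        self.cache = OrderedDict()    # block number -> data, least recently used first
        self.hits = 0
        self.misses = 0

    def _remember(self, block: int, data: bytes) -> None:
        self.cache[block] = data
        self.cache.move_to_end(block)
        if len(self.cache) > self.capacity:
            self.cache.popitem(last=False)  # evict the least recently used block

    def read(self, block: int) -> bytes:
        if block in self.cache:
            self.hits += 1
            self.cache.move_to_end(block)
            return self.cache[block]
        self.misses += 1
        data = self.shared[block]  # fetch from shared storage over the network
        self._remember(block, data)
        return data

    def write(self, block: int, data: bytes) -> None:
        self.shared[block] = data  # commit to shared storage (write-through)
        if block in self.cache:
            self._remember(block, data)

shared_volume = {0: b"boot sector", 1: b"database page"}
cache = WriteThroughReadCache(shared_volume, capacity=1)
cache.read(1)                    # miss: fetched from shared storage
cache.read(1)                    # hit: served from the local cache
cache.write(1, b"updated page")  # committed to shared storage
print(cache.hits, cache.misses)  # 1 1
print(shared_volume[1])          # b'updated page'
```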

G-Series Virtual Machines

The Godzilla virtual machines have two notable features: SSD storage for temporary drives and huge amounts of memory.

The memory sizes of Azure virtual machines are odd (0.75 GB, 3.5 GB, and so on) for a reason: Azure provides virtual machines that are NUMA aligned. For medium to large workloads, keeping a virtual machine within a single NUMA node, rather than letting it span processors and their physically separate banks of memory on the motherboard, avoids slower remote memory access and ensures optimal placement of processes and memory, so the workload can achieve peak performance.

On a host with dual CPU sockets and 64 GB of correctly placed (evenly balanced) RAM, each NUMA node consists of one CPU and 32 GB of memory. A virtual machine that needs more virtual processors than one physical CPU can provide, or more than half of the RAM, will span NUMA nodes on the motherboard. So imagine what's going to happen when you start to deploy virtual machines with massive amounts of memory.
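The span check can be written as a small Python function. It assumes one NUMA node per socket with logical processors and memory split evenly across sockets, and it ignores memory reserved for the management OS, so in practice a VM needs a little headroom below the per-node figure; the function name and example sizes are illustrative.

```python
def spans_numa(vm_vcpus: int, vm_memory_gb: float,
               host_sockets: int, host_lps: int, host_memory_gb: float) -> bool:
    """Return True if the VM cannot fit inside a single NUMA node.

    Assumes one NUMA node per socket, with logical processors and memory
    spread evenly across sockets ("correctly placed" DIMMs).
    """
    node_lps = host_lps / host_sockets
    node_memory_gb = host_memory_gb / host_sockets
    return vm_vcpus > node_lps or vm_memory_gb > node_memory_gb

# Dual-socket host: 16 logical processors per socket, 64 GB RAM (32 GB per node).
print(spans_numa(8, 24, 2, 32, 64))   # False: fits in one node
print(spans_numa(8, 48, 2, 32, 64))   # True: needs memory from both nodes
print(spans_numa(20, 16, 2, 32, 64))  # True: needs processors from both sockets
```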

Hyper-V can provide NUMA alignment to virtual machines only if you use static memory. I typically recommend Dynamic Memory for all workloads if both of the following are true:

- The workload supports it

- The workload does not combine demanding performance requirements with a virtual machine that spans NUMA nodes on the host

So if you are going to deploy a virtual machine that will span NUMA nodes, and it runs the sort of workload that requires peak performance, do not use Dynamic Memory. With static memory, Hyper-V presents virtual NUMA nodes inside the virtual machine, so the guest OS can efficiently schedule processes and allocate memory to get peak performance from the host's hardware.
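The recommendation above reduces to a short decision rule, sketched here in Python. The function and parameter names are hypothetical; deciding whether a workload "supports" Dynamic Memory or "needs peak performance" remains a judgment call made outside the code.

```python
def recommend_memory_mode(workload_supports_dynamic_memory: bool,
                          spans_numa_nodes: bool,
                          needs_peak_performance: bool) -> str:
    """Dynamic Memory unless the workload doesn't support it, or the VM
    spans NUMA nodes and must deliver peak performance."""
    if not workload_supports_dynamic_memory:
        return "static"
    if spans_numa_nodes and needs_peak_performance:
        # Static memory lets Hyper-V present virtual NUMA nodes to the guest OS.
        return "static"
    return "dynamic"

print(recommend_memory_mode(True, False, True))  # dynamic: fits in one NUMA node
print(recommend_memory_mode(True, True, True))   # static: large, performance-critical VM
```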

Microsoft can outspend and undercut the pricing of anything we do simply because of the scale of Azure. However, we can emulate much of what is done in their hyper-scale cloud, because the core hypervisor that we can deploy is the same; in fact, WS2012 R2 Hyper-V is newer than what is deployed in Azure. The question you will have to ask yourself is: is the feature affordable and justifiable?