50,000 IOPS with an Azure VM: Is It Possible?

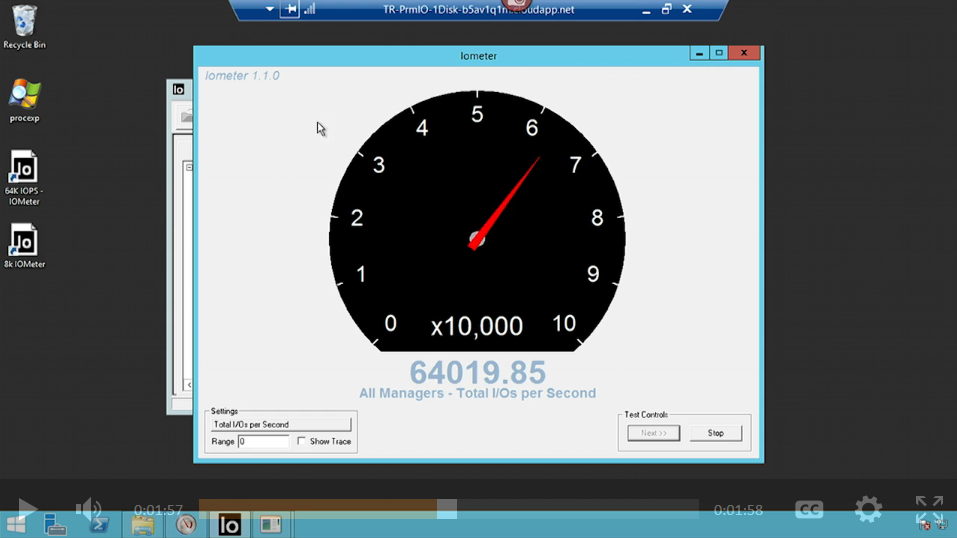

I’ve written a lot lately about how Microsoft Azure offers Premium Storage for IaaS virtual machines and how data disks can be combined to aggregate performance and storage capacity. I’ve also explained how the performance of HDD and SSD storage scales linearly as you add disks. When I think about Azure Premium Storage, I think of the demo that Mark Russinovich gave at Microsoft Ignite 2015, in which a virtual machine with Premium Storage and disk caching, stressed with Iometer, hit over 64,000 read/write IOPS.

Of course, you would expect Azure’s Chief Technology Officer (CTO) to bring a fully working, high-performing demo. Every lever and micro-setting would be configured to guarantee nothing but the best. With that said, what can a mere mortal accomplish? I wanted to find out whether I could run a virtual machine with super-high IOPS just as easily.

The Test Lab

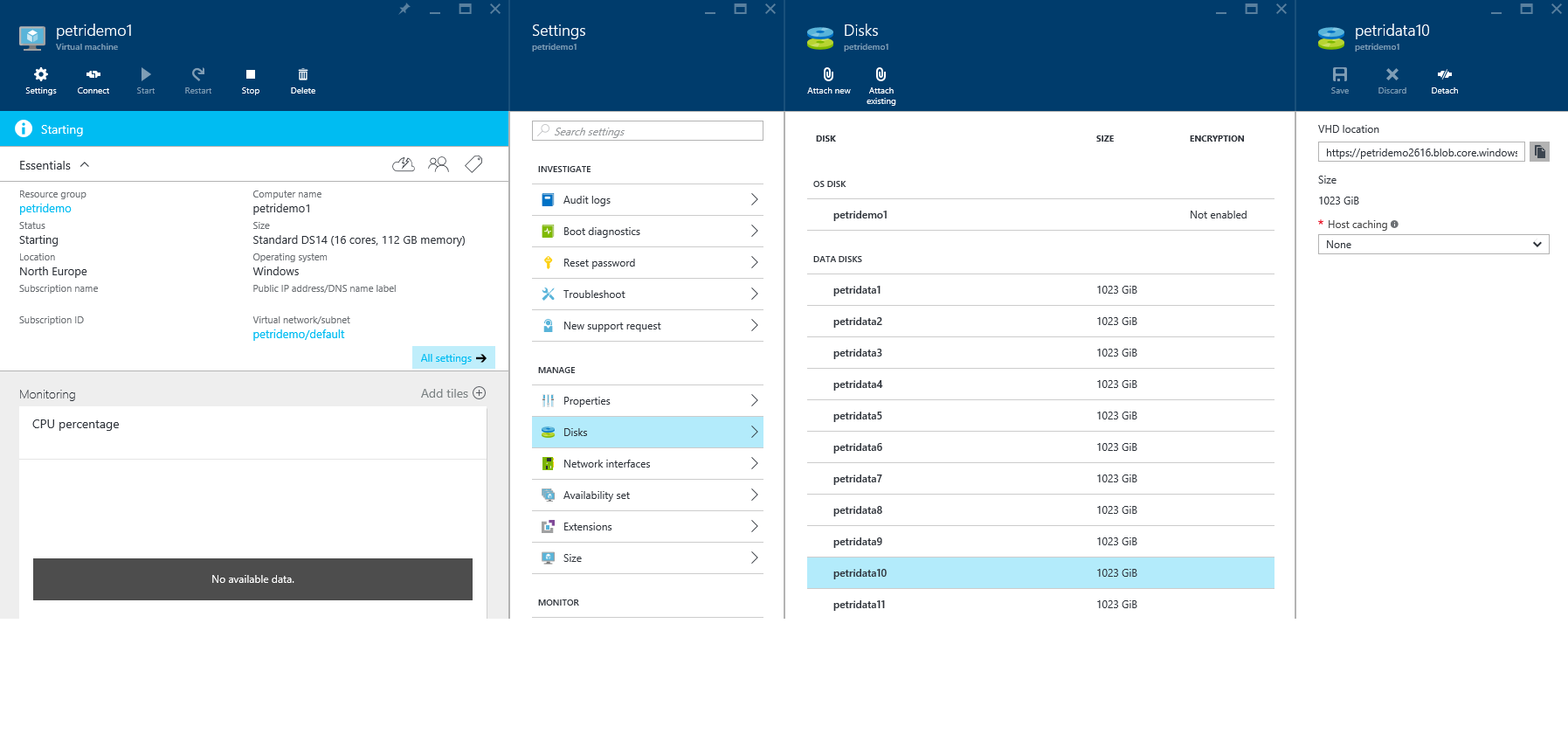

My solution is based on multiple Premium Storage data disks aggregated using Storage Spaces. I used the PowerShell that I had previously shared to create a single data volume from 10 x 1023 GB P30 Premium Storage data disks:

- A single storage pool

- One virtual disk with 64 KB interleaves

- Formatted with a single NTFS volume (E:) with an allocation unit size of 64 KB
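To picture how a 64 KB interleave spreads I/O across the data disks, here is a minimal Python sketch of a simple striped layout. It assumes one column per P30 disk (10 columns) and a plain round-robin mapping; the real Storage Spaces on-disk layout (slabs, metadata) has more detail that this sketch ignores, and `locate` is a hypothetical helper name.

```python
INTERLEAVE = 64 * 1024  # 64 KB interleave, as configured on the virtual disk
COLUMNS = 10            # assumption: one column per P30 data disk

def locate(offset: int, interleave: int = INTERLEAVE, columns: int = COLUMNS) -> tuple[int, int]:
    """Return (column, offset within that column) for a logical byte offset
    on a simple striped layout."""
    stripe_unit = offset // interleave   # which 64 KB chunk of the volume
    column = stripe_unit % columns       # chunks rotate across the disks
    row = stripe_unit // columns         # full stripes that precede this chunk
    return column, row * interleave + offset % interleave

# Walk the first 12 chunks: each lands on the next disk, wrapping after 10.
for chunk in range(12):
    print(chunk, locate(chunk * INTERLEAVE))
```

Because consecutive 64 KB chunks land on different disks, many concurrent small I/Os can keep all 10 disks busy at once, which is what lets the per-disk IOPS add up.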

I needed a virtual machine size that could support the combined IOPS of 10 x P30 data disks (10 x 5,000 IOPS = 50,000 IOPS), so I selected the DS14, which supports up to 32 data disks and 50,000 IOPS.

My plan was to first test the virtual machine with a Storage Spaces virtual disk made from 10 data disks, aiming for the 50,000 IOPS maximum. Then I would see what happened with 11 x P30 disks, whose combined 55,000 IOPS should exceed the maximum supported by the virtual machine.
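The arithmetic behind that plan is simple: the expected ceiling is whichever is lower, the disks' combined IOPS or the virtual machine's cap. This Python sketch uses the figures quoted in this article (5,000 IOPS and 1023 GB per P30, 50,000 IOPS for the DS14); the function names are illustrative only, and the capacity shown is raw disk capacity before any Storage Spaces overhead.

```python
import math

P30_IOPS = 5_000        # provisioned IOPS per P30 disk
P30_SIZE_GB = 1_023     # size of each data disk used in the lab
DS14_MAX_IOPS = 50_000  # IOPS cap of the DS14 size

def expected_ceiling(disks: int, vm_cap: int = DS14_MAX_IOPS) -> int:
    """Aggregate disk IOPS, limited by whichever is lower: the disks or the VM."""
    return min(disks * P30_IOPS, vm_cap)

def disks_needed(target_iops: int) -> int:
    """Smallest number of P30 disks whose combined IOPS reaches target_iops."""
    return math.ceil(target_iops / P30_IOPS)

for n in (10, 11):
    print(n, "disks:", n * P30_SIZE_GB, "GB raw,",
          n * P30_IOPS, "raw IOPS, ceiling", expected_ceiling(n))
```

With 10 disks the disks and the VM cap meet at 50,000 IOPS; an 11th disk adds raw IOPS that the DS14 should not be able to use.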

I would have preferred to deploy a GS5 virtual machine, which supports up to 64 data disks and 80,000 IOPS, but its 32 cores exceed the default limit of 20 cores per Azure subscription. I opened a support request to raise the limit, but it was going to take some time for Microsoft to lift it.
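The sizing decision can be expressed as a filter: keep the sizes that can attach enough disks and fit within the subscription's core quota, then take the one with the highest IOPS cap. This is a minimal Python sketch; the size table is hand-entered from the figures in this article plus the DS14's 16 cores, and `VmSize`/`pick_size` are hypothetical names, not part of any Azure SDK.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class VmSize:
    name: str
    cores: int
    max_data_disks: int
    max_iops: int

# Hand-entered limits (assumption: only these two sizes are considered).
SIZES = [
    VmSize("DS14", cores=16, max_data_disks=32, max_iops=50_000),
    VmSize("GS5", cores=32, max_data_disks=64, max_iops=80_000),
]

def pick_size(disks: int, core_quota: int = 20) -> Optional[VmSize]:
    """Return the size with the highest IOPS cap that can attach `disks`
    data disks without exceeding the subscription's core quota."""
    allowed = [s for s in SIZES
               if s.max_data_disks >= disks and s.cores <= core_quota]
    return max(allowed, key=lambda s: s.max_iops, default=None)

print(pick_size(11).name)                 # DS14: GS5 needs 32 cores
print(pick_size(11, core_quota=32).name)  # GS5, once the quota is raised
```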

In short, I deployed an Azure virtual machine using a size that anyone has access to, with 10 fast data disks aggregated by Storage Spaces via some PowerShell that Microsoft had previously shared. There was nothing mysterious or hidden about it.

Test with No Disk Caching

My stress tests were based on DiskSpd, Microsoft’s free storage benchmarking tool, which Microsoft itself uses to test solutions such as Cloud Platform System (CPS). Initially, I ran tests with the following command:

Diskspd.exe -b4K -d60 -h -o8 -t4 -si -c50000M e:\io.dat

That translates to:

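- 4 KB reads (-b4K; DiskSpd only reads unless a write percentage is set with -w)

- For 60 seconds (-d60)

- Software caching and hardware write caching disabled (-h)

- 8 outstanding (overlapped) I/Os per thread (-o8)

- 4 threads per target (-t4)

- Sequential access, with the threads sharing a single interlocked offset (-si)

- Using a 50,000 MB file called E:\IO.DAT (-c50000M)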
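A useful number to derive from these switches is how many I/Os are in flight at once: with a single target, that is threads multiplied by outstanding I/Os per thread. The Python sketch below decodes only the switches used in this article; it is a hypothetical helper, not a general DiskSpd parser, and it assumes DiskSpd's K/M/G size suffixes are 1024-based.

```python
UNITS = {"K": 1024, "M": 1024 ** 2, "G": 1024 ** 3}

def parse_diskspd(cmdline: str) -> dict:
    """Decode the few DiskSpd switches used in this article.
    Not a general DiskSpd parser: other switches are kept verbatim in 'flags'."""
    opts = {"flags": []}
    for token in cmdline.split()[1:]:          # skip "Diskspd.exe"
        if not token.startswith("-"):
            opts["target"] = token             # the test file path
            continue
        switch, value = token[1], token[2:]
        if switch in ("b", "c"):               # sizes such as 4K or 50000M
            size = int(value[:-1]) * UNITS[value[-1].upper()]
            opts["block_bytes" if switch == "b" else "file_bytes"] = size
        elif switch == "d":
            opts["seconds"] = int(value)
        elif switch == "o":
            opts["outstanding_per_thread"] = int(value)
        elif switch == "t":
            opts["threads"] = int(value)
        else:
            opts["flags"].append(token)        # e.g. -h, -si
    # With a single target, I/Os in flight = threads x outstanding per thread.
    opts["in_flight"] = opts["threads"] * opts["outstanding_per_thread"]
    return opts

first = parse_diskspd(r"Diskspd.exe -b4K -d60 -h -o8 -t4 -si -c50000M e:\io.dat")
print(first["block_bytes"], first["in_flight"])   # 4096 32
```

Only 32 I/Os in flight is a modest queue to spread across 10 striped disks.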

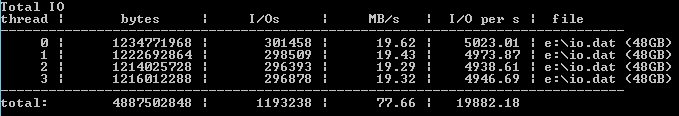

When the virtual machine was ready, I downloaded and extracted DiskSpd and ran the above test. The result was a rather paltry 19,882 IOPS, something I could have achieved with just 4 x P30 data disks (4 x 5,000 = 20,000 IOPS).

I considered a few possible causes for this lack of speed:

- Alignment: My 4 KB tests should already have been aligned with the 64 KB allocation unit size and the 64 KB interleave, because 64 KB is an exact multiple of 4 KB, so alignment was an unlikely culprit.

- Disk Cache: I had deliberately disabled the cache on each data disk when building the test lab. I enabled caching, but I still wasn’t reaching the promised land of 50,000 IOPS.
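The alignment reasoning can be checked mechanically: an I/O is split across two disks only if it starts close enough to the end of an interleave unit to spill past it. This Python sketch assumes every offset is a multiple of the 4 KB block size, which is what a sequential 4 KB run starting at offset 0 produces; `crosses_interleave` is a hypothetical helper.

```python
def crosses_interleave(offset: int, io_size: int, interleave: int = 64 * 1024) -> bool:
    """True if an I/O of io_size bytes starting at offset spans two interleave
    units, i.e. would be split across two disks in a striped layout."""
    return (offset % interleave) + io_size > interleave

# Every 4 KB-aligned 4 KB I/O fits inside a single 64 KB interleave unit...
assert not any(crosses_interleave(off, 4096) for off in range(0, 1024 * 1024, 4096))
# ...whereas a misaligned one near the end of a unit is split.
print(crosses_interleave(62 * 1024, 4096))  # True
```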

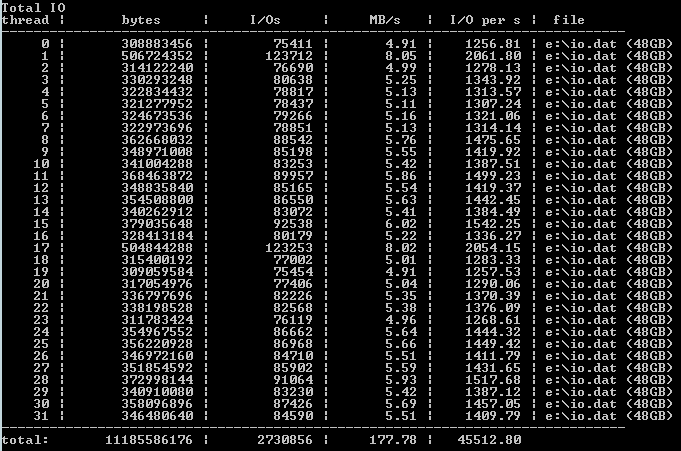

I decided that the problem must be the benchmark parameters. I reset the disk cache to disabled and experimented with the number of overlapped I/Os and threads, reaching peak performance with the following:

Diskspd.exe -b4K -d60 -h -o128 -t32 -si -c50000M e:\io.dat

I monitored performance using Performance Monitor and observed between 42,000 and 51,000 IOPS; DiskSpd reported an average of 45,512 IOPS.
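Little's law offers one way to read these two results: average latency equals the number of I/Os in flight divided by throughput. The Python sketch below applies it to the DiskSpd averages above; it assumes steady-state behaviour and is an inference, not a latency measurement (DiskSpd can record latency directly with its -L switch).

```python
def implied_latency_ms(in_flight: int, iops: float) -> float:
    """Little's law: average latency = I/Os in flight / throughput."""
    return in_flight / iops * 1000

first_ms = implied_latency_ms(4 * 8, 19_882)      # -t4 -o8: 32 I/Os in flight
tuned_ms = implied_latency_ms(32 * 128, 45_512)   # -t32 -o128: 4,096 I/Os in flight
print(f"{first_ms:.2f} ms vs {tuned_ms:.1f} ms")  # 1.61 ms vs 90.0 ms
```

At roughly 1.6 ms per I/O, 32 I/Os in flight can only sustain about 20,000 IOPS, so the first test was limited by queue depth rather than by the disks. With 4,096 in flight, throughput flattens near the VM cap and the extra I/Os simply wait in the queue.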

So what would happen if I added another disk, offering 11 x 5,000 = 55,000 potential raw IOPS? I destroyed the 10-disk storage pool and created a new storage pool from 11 x P30 data disks.

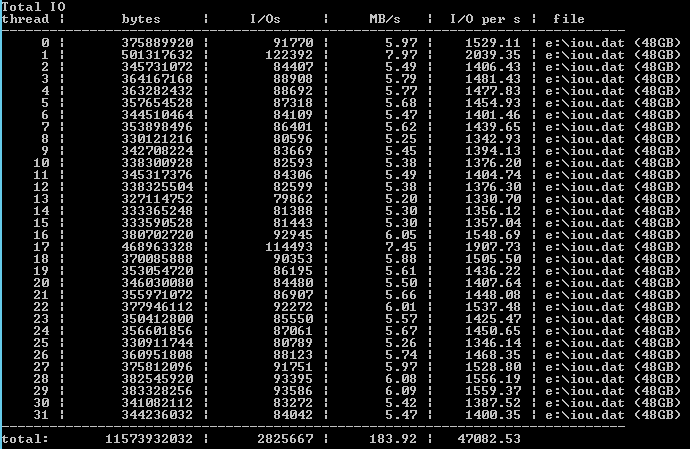

Next, I ran the test again and observed between 40,000 and 52,000 IOPS in Performance Monitor. DiskSpd reported an average of 47,082 IOPS. It appeared that I had reached the maximum potential of the DS14 virtual machine.

Test with Disk Caching

At this point, I was satisfied that I could get close to the results Mark Russinovich showed, even with no disk caching enabled. However, we could get more performance by enabling read caching. Note that you should always ensure that your application supports disk caching before enabling it. I enabled read caching on each of the 11 data disks to see how far beyond 47,082 IOPS I could go.

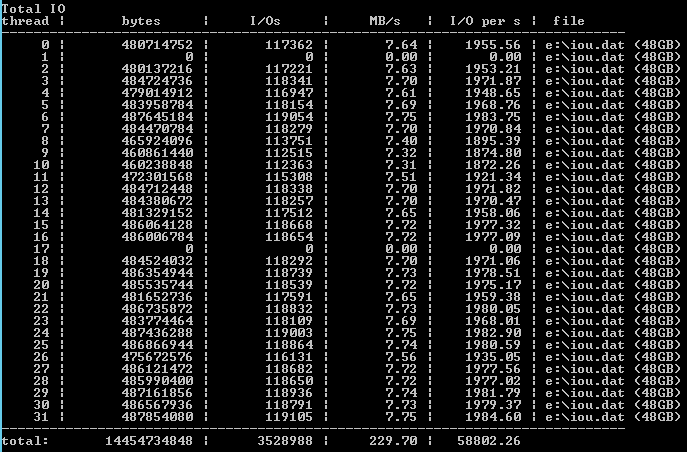

I reran DiskSpd and watched LogicalDisk\Disk Reads/sec in Performance Monitor, seeing spikes of up to 62,000 IOPS. DiskSpd reported an average of 58,802 IOPS, which meant that enabling read caching allowed actual performance to exceed the maximum IOPS of the virtual machine specification, boosting performance by nearly 25 percent over the uncached 11-disk result.
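Putting the DiskSpd averages side by side against the DS14's 50,000 IOPS cap makes the progression clear. This short Python sketch only reuses the numbers reported in this article; the labels are my own summary of each run.

```python
DS14_CAP = 50_000  # IOPS cap quoted for the DS14

results = [  # (run, average IOPS reported by DiskSpd)
    ("10 disks, no cache, -o8 -t4", 19_882),
    ("10 disks, no cache, -o128 -t32", 45_512),
    ("11 disks, no cache, -o128 -t32", 47_082),
    ("11 disks, read cache, -o128 -t32", 58_802),
]

for label, iops in results:
    print(f"{label:34} {iops:>7,} IOPS  {iops / DS14_CAP:6.1%} of cap")

gain = results[3][1] / results[2][1] - 1
print(f"read-cache gain: {gain:.1%}")   # read-cache gain: 24.9%
```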

The Verdict

It’s easy to be sceptical when a keynote demonstration makes claims about performance, but I’ve proven to myself, at least, that Mark Russinovich’s demo was achievable outside the utopian world of the Microsoft demo bubble.

The configuration of the virtual machine was simple, and the default data disk configuration, with read caching enabled, would have provided the best performance. In fact, the only complexity in my lab was creating a workload that could make use of the performance potential.