What’s New in Windows Server 2016 TP5 Storage?

In this post I’ll describe some of the additions and improvements that you will find in Windows Server 2016 (WS2016) Technical Preview 5 (TP5) related to storage.


Evolution

Most of what you will find in Technical Preview 5 is an evolution of concepts that started in Windows Server 2012. Most of these features were improved in Windows Server 2012 R2, and they have been shaped by both industry trends and customer feedback.

Storage Spaces Direct (S2D)

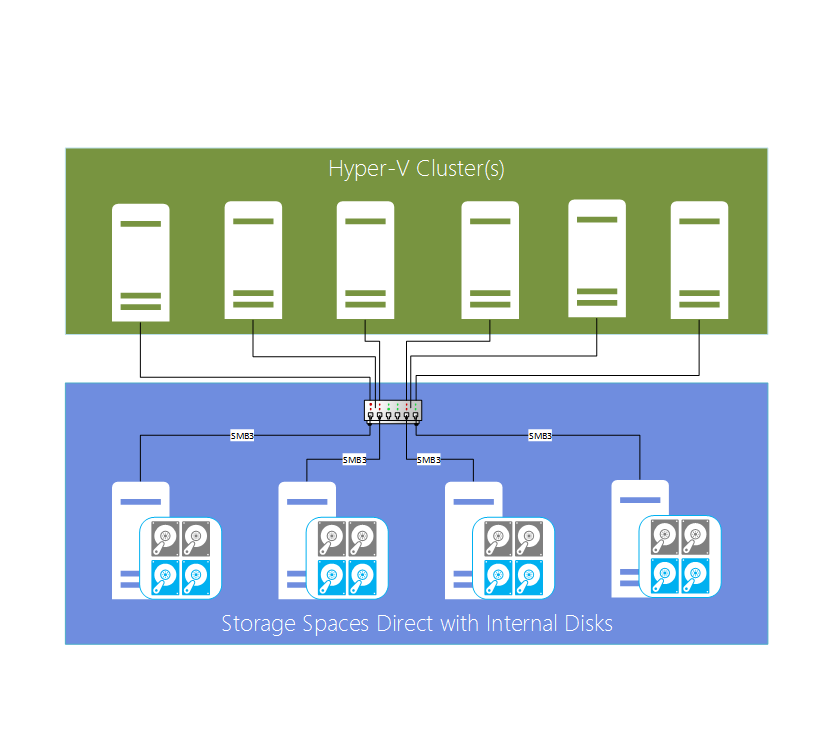

Storage Spaces has been with us since Windows Server 2012. Microsoft’s primary use case for Storage Spaces is in large-scale clouds, normally deployed in “stamps,” a concept where compute (Hyper-V), networking, and storage are deployed one self-contained rack at a time. In this scenario, the storage is provided by a Scale-Out File Server (SOFS) that uses two to four clustered servers with shared-SAS JBODs to create continuously available file shares for storing virtual machine files over SMB 3.0 networking.

This classic design comes with trade-offs:

- It requires a SAS hardware layer, although this does add potentially huge scalability: we can get over 2 petabytes of raw capacity in 16U of JBOD these days.

- Storage Spaces has been driven by shared SAS disks, which are quite expensive, although only a small number of servers and Windows Server licenses are needed for the SOFS nodes.

Storage Spaces Direct (S2D) converges the SAS layer into the SOFS cluster nodes. Instead of using shared-SAS JBODs, each SOFS cluster node uses internal disks. These disks can be SAS disks, but Microsoft envisions us using more affordable SATA disks, with flash and HDD tiered together. The hardware costs could plummet with this kind of design. Each SOFS node contains flash (NVMe or SSD) and HDD disks; the data is made resilient across the nodes in the cluster, and the cluster shares the storage capacity with another layer of servers, such as Hyper-V hosts, via SMB 3.0.

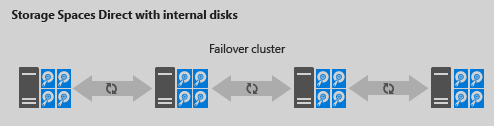

An extension of S2D is the concept of a hyper-converged cluster. This is where the cluster nodes create CSVs from their internal disks for VM storage using S2D, and the Hyper-V role also runs on the same clustered nodes. Yes, that means there is only one layer of servers, and each server is used for both storage and compute.

And let’s put the usual question to bed: No, file shares are not being used in hyper-convergence; S2D creates CSVs, and you store the virtual machines on the CSVs.

There’s a lot more to talk about with S2D, such as licensing, hardware, networking, design, tiered storage, fault tolerance, and more, but that can all wait for future posts.

Storage Replica (SR)

A lot of customers have a need to replicate storage at the volume level for disaster recovery reasons, but they don’t; this could be because of licensing costs or organizational complications. Microsoft is adding two kinds of cluster-supported replication for volumes:

- Synchronous: for zero data loss over short distances.

- Asynchronous: for stretching over longer distances, accepting minimal data loss.

Mission-critical workloads, such as Hyper-V virtual machines, can be replicated between sites using SR. This is yet another solution that uses SMB 3.0, and it can leverage SMB Direct. The feature is pretty easy to configure, and I expect that SR will be widely adopted in environments with high-speed networking.
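To make the difference between the two modes concrete, here is a minimal Python sketch. It is a toy model and not how Storage Replica is implemented: the `ReplicatedVolume` class and its method names are hypothetical, and the "network" is just a queue. It only illustrates why synchronous replication can promise zero data loss while asynchronous replication cannot.

```python
from collections import deque


class ReplicatedVolume:
    """Toy model of a source volume replicating writes to a destination.

    Hypothetical illustration only; this is not the Storage Replica API.
    """

    def __init__(self, synchronous: bool):
        self.synchronous = synchronous
        self.source = []           # writes committed at the primary site
        self.destination = []      # writes committed at the secondary site
        self.in_flight = deque()   # acknowledged writes not yet shipped

    def write(self, block: str) -> None:
        """Commit a write; returning means the application got its acknowledgement."""
        self.source.append(block)
        if self.synchronous:
            # Synchronous: acknowledge only once the remote copy exists.
            self.destination.append(block)
        else:
            # Asynchronous: acknowledge immediately and ship the write later.
            self.in_flight.append(block)

    def ship_pending(self) -> None:
        """Drain queued writes to the destination (only matters in async mode)."""
        while self.in_flight:
            self.destination.append(self.in_flight.popleft())

    def blocks_lost_on_failover(self) -> int:
        """Acknowledged writes that would be missing if the primary site died now."""
        return len(self.source) - len(self.destination)


sync_vol = ReplicatedVolume(synchronous=True)
async_vol = ReplicatedVolume(synchronous=False)
for block in ["a", "b", "c"]:
    sync_vol.write(block)
    async_vol.write(block)

sync_loss = sync_vol.blocks_lost_on_failover()           # 0
async_loss_before = async_vol.blocks_lost_on_failover()  # 3
async_vol.ship_pending()
async_loss_after = async_vol.blocks_lost_on_failover()   # 0
print(sync_loss, async_loss_before, async_loss_after)
```

The price of the synchronous guarantee is that every write waits for the remote site, which is why that mode suits short distances and fast links.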

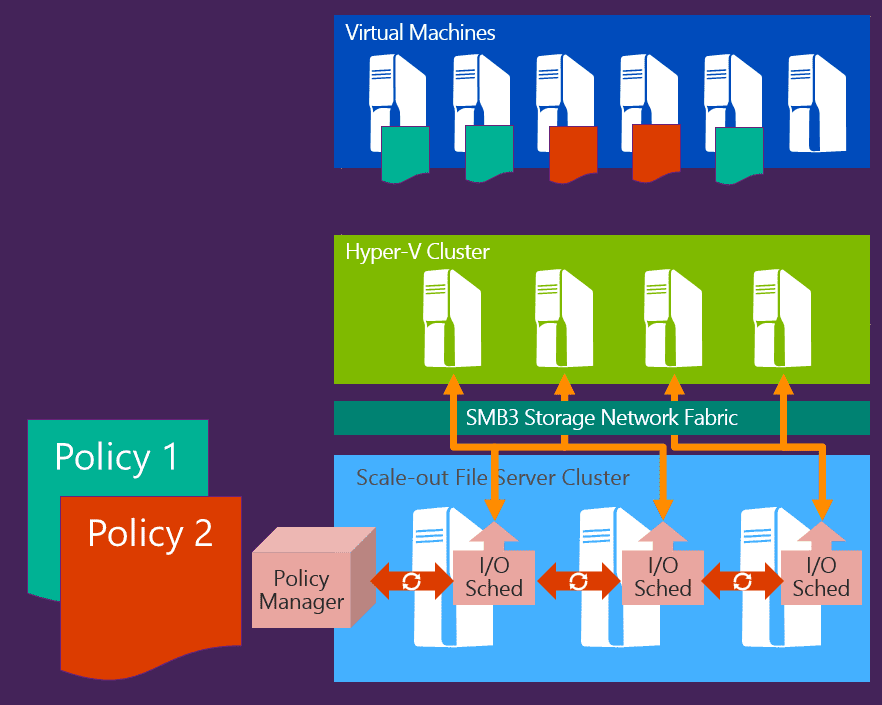

Storage Quality of Service (QoS)

Since WS2012 we’ve had a crude form of QoS that was implemented and managed by each host. It allowed us to set a maximum IOPS value, but a minimum IOPS value could only raise an alert, and even that was difficult to set up. Storage QoS is a distributed solution that allows us to set minimum or maximum rules per disk, per virtual machine, or per tenant. With Storage QoS, you can take total control of storage performance with a unified view of the cluster.

Note that Storage QoS was originally written for SOFS, but it also works with CSV on block storage.
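As a rough illustration of what minimum and maximum rules mean when several workloads compete for the same storage, here is a small Python sketch. Everything in it is an assumption made for the example: the `Policy` class, the `allocate` function, and the two-step allocation strategy are hypothetical and are not Microsoft's algorithm. The sketch also assumes that the sum of all minimums fits within the available capacity.

```python
from dataclasses import dataclass


@dataclass
class Policy:
    """Hypothetical per-flow rule: a reserved floor and a hard ceiling, in IOPS."""
    minimum_iops: int
    maximum_iops: int


def allocate(capacity: int, demands: dict, policies: dict) -> dict:
    """Share `capacity` IOPS between flows while honouring min/max rules.

    Simplification: spare capacity is handed out in dictionary order.
    """
    # Step 1: every flow gets its reserved minimum (or its demand, if lower).
    grants = {
        name: min(demand, policies[name].minimum_iops)
        for name, demand in demands.items()
    }
    remaining = capacity - sum(grants.values())

    # Step 2: hand out what is left, never exceeding demand or the maximum.
    for name, demand in demands.items():
        ceiling = min(demand, policies[name].maximum_iops)
        extra = max(0, min(ceiling - grants[name], remaining))
        grants[name] += extra
        remaining -= extra
    return grants


demands = {"db": 400, "web": 300, "noisy": 5000}
policies = {
    "db": Policy(minimum_iops=300, maximum_iops=1000),
    "web": Policy(minimum_iops=100, maximum_iops=1000),
    "noisy": Policy(minimum_iops=100, maximum_iops=500),
}
grants = allocate(1000, demands, policies)
print(grants)  # {'db': 400, 'web': 300, 'noisy': 300}
```

In this example the noisy workload asks for 5,000 IOPS but cannot starve the other two: they receive what they need first, and the noisy one is left with the remainder, which is also below its 500 IOPS ceiling.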

Data Deduplication

I’m still puzzled why so few people know about this money-saving feature that is just a couple of mouse clicks away on any server running Windows Server 2012. Deduplication is useful for reducing capacity wastage for:

- At rest data, such as end user file shares or file-based backup archives.

- Storage for non-pooled Hyper-V VDI virtual machines — note the very specific description here.

- System Center Data Protection Manager, when it is deployed in a virtual machine whose data disks are stored on a deduplicated physical volume.

Microsoft has improved the performance and scalability of deduplication and added other new features:

- Support for volume sizes up to 64 TB, made practical by optimization jobs that now use multiple processors.

- Support for files that are up to 1 TB in size.

- There is a pre-defined virtual backup configuration.

- Nano Server is supported.

- Cluster rolling upgrades are supported.
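If you have never looked at how deduplication saves space, the following Python sketch shows the core idea: split data into chunks, store each unique chunk once, and keep a list of chunk references per file. This is a deliberately simplified model. The `ChunkStore` class, the tiny fixed 4-byte chunk size, and the file names are all made up for the example; the real feature chunks, compresses, and stores data in a far more sophisticated way.

```python
import hashlib

CHUNK_SIZE = 4  # bytes; unrealistically small so the example is easy to follow


class ChunkStore:
    """Toy chunk-level deduplicating store (hypothetical, for illustration)."""

    def __init__(self):
        self.chunks = {}  # digest -> chunk bytes, each unique chunk stored once
        self.files = {}   # file name -> ordered list of chunk digests

    def put(self, name: str, data: bytes) -> None:
        digests = []
        for offset in range(0, len(data), CHUNK_SIZE):
            chunk = data[offset:offset + CHUNK_SIZE]
            digest = hashlib.sha256(chunk).hexdigest()
            # Only the first copy of a chunk consumes space.
            self.chunks.setdefault(digest, chunk)
            digests.append(digest)
        self.files[name] = digests

    def get(self, name: str) -> bytes:
        """Rebuild a file from its chunk references."""
        return b"".join(self.chunks[d] for d in self.files[name])

    def logical_bytes(self) -> int:
        """Size of all files as the user sees them."""
        return sum(len(self.chunks[d]) for ds in self.files.values() for d in ds)

    def stored_bytes(self) -> int:
        """Space actually consumed by unique chunks."""
        return sum(len(c) for c in self.chunks.values())


store = ChunkStore()
store.put("vm1.vhdx", b"BOOTDATAAAAAUSR1")
store.put("vm2.vhdx", b"BOOTDATAAAAAUSR2")
print(store.logical_bytes(), store.stored_bytes())  # 32 20
```

The two files are almost identical, so the second one adds only a single new chunk. That is the same reason non-pooled VDI virtual machines and backup archives deduplicate so well.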

SMB 3.0

Microsoft continues to use SMB 3.0 in more scenarios. From the Hyper-V perspective, we can now converge SMB 3.0 NICs in the management OS and still use SMB Direct (RDMA), which means fewer physical NICs and switch ports.

There is a detailed listing of improvements to SMB 3.0, or SMB 3.1.1 to be precise, but the highlights are:

- Pre-Authentication Integrity protects against man-in-the-middle attacks. You can supplement this with SMB signing to prevent tampering and SMB encryption to prevent eavesdropping, both of which have some processor overhead; Microsoft recommends at least one of the additional security options.

- Encryption now uses the better-performing AES-128-GCM cipher, but can fall back to the original AES-128-CCM cipher when the other side does not support GCM.

- Support for cluster rolling upgrades using a feature called Cluster Dialect Fencing, so that the SMB client and server can find a common SMB version during the mixed-mode phase of the cluster upgrade.
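The negotiation ideas in the last two points can be sketched in a few lines of Python. This is a conceptual model only: the function names are hypothetical, the real SMB negotiate exchange carries far more than this, and the cipher selection rule shown here (the first client preference that the server supports) is a simplification.

```python
# SMB 2/3 dialects from oldest to newest.
DIALECT_ORDER = ["2.0.2", "2.1", "3.0", "3.0.2", "3.1.1"]


def negotiate_dialect(client_dialects, server_dialects):
    """Pick the newest dialect that both sides support (hypothetical helper)."""
    common = set(client_dialects) & set(server_dialects)
    if not common:
        raise ValueError("no common SMB dialect")
    return max(common, key=DIALECT_ORDER.index)


def negotiate_cipher(client_preference, server_supported):
    """Pick the first cipher in the client's preference order that the server knows."""
    for cipher in client_preference:
        if cipher in server_supported:
            return cipher
    return None


new_node = ["2.0.2", "2.1", "3.0", "3.0.2", "3.1.1"]
old_node = ["2.0.2", "2.1", "3.0", "3.0.2"]
preferred_ciphers = ["AES-128-GCM", "AES-128-CCM"]

# Two upgraded peers agree on the newest dialect and the faster cipher.
upgraded_dialect = negotiate_dialect(new_node, new_node)
upgraded_cipher = negotiate_cipher(preferred_ciphers, {"AES-128-GCM", "AES-128-CCM"})

# During a mixed-mode phase, an older peer pulls both choices down.
mixed_dialect = negotiate_dialect(new_node, old_node)
mixed_cipher = negotiate_cipher(preferred_ciphers, {"AES-128-CCM"})

print(upgraded_dialect, upgraded_cipher)  # 3.1.1 AES-128-GCM
print(mixed_dialect, mixed_cipher)        # 3.0.2 AES-128-CCM
```

The point to take away is that the newest protocol features only apply once both ends of a connection support them, which is why a rolling upgrade needs a way to settle on a common version while old and new nodes coexist.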

![Classic Scale-Out File Server with Storage Spaces [Image Credit: Microsoft]](https://petri-media.s3.amazonaws.com/2016/05/3716.image_thumb_06FBEA151.png)