(Editor’s Note: This article is a guest post, which is unpaid and non-sponsored content written by an independent contributor. The views and opinions expressed here do not necessarily represent the views of the Petri IT Knowledgebase.)

Customers and partners routinely ask: “What’s next? What follows as the industry approaches 100 percent virtualization of x86 systems?”

The truth is that virtualization of compute and memory at the cluster level is only the tip of the iceberg. The next step is to extend the benefits of virtualization to every domain of the datacenter: compute, storage, networking, availability, and security. The future of virtualization is the software-defined datacenter, where infrastructure is virtualized and delivered as a service and the datacenter is automated by software, helping IT be more flexible, agile, and responsive to the business.

IT Challenges

The problem almost every IT organization faces is that the IT infrastructure is far too complex and rigid. Equipment and associated teams are siloed. It takes too long, and costs too much, to deploy a new service or scale an existing one. For many customers, business demands require IT to scale the size of an existing environment while maintaining the same level of resources.

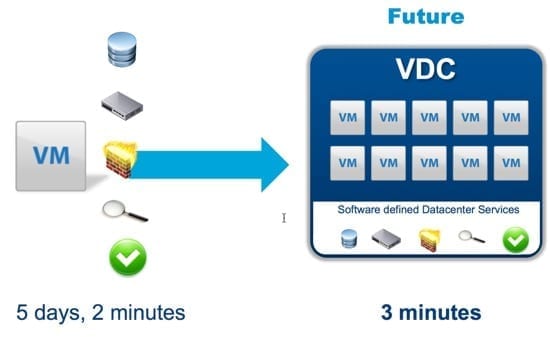

Virtualization has substantially reduced complexity and increased IT efficiency, but only a portion of the overall IT stack has benefited from those efficiencies. To illustrate, let’s examine the deployment of a new mobile application in a virtualized environment. The first step is to deploy a new virtual machine (VM). In theory, this step should take two minutes, but in many instances it takes days or weeks. Why? Because the virtualization administrator must tie that VM to physical hardware. This means manually selecting a cluster, an array, and a virtual local area network (VLAN), and then deploying a range of services against them. Each of those decisions requires intimate knowledge not only of the capabilities and utilization of that hardware, but also of the potential future needs of the application. Making the wrong choice often means significant rework: planning and executing a migration.

Every customer wants to find a way to dramatically simplify and automate this process. By recasting every layer of infrastructure as software services that can be abstracted from physical systems, pooled and delivered to support the needs of any application, the software-defined datacenter makes it possible to automatically provision and scale infrastructure to meet the needs of the business.
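To make the idea of pooled, policy-driven provisioning concrete, here is a minimal sketch in Python. It is not any vendor's API: the `Pool` and `Request` types, the tier names, and the "most free capacity wins" rule are all assumptions chosen for illustration. The point is only that the requester states a service tier and capacity needs, and software, rather than an administrator, selects the cluster, array, and network.

```python
from dataclasses import dataclass


@dataclass
class Pool:
    """A pooled resource; every field here is hypothetical."""
    name: str
    kind: str    # "compute", "storage", or "network"
    tier: str    # service tier the pool can deliver, e.g. "gold"
    free: float  # free capacity, in the pool's own unit


@dataclass
class Request:
    """What an application owner asks for, with no hardware named."""
    tier: str
    needs: dict  # required capacity per kind, e.g. {"compute": 4}


def place(request, pools):
    """For each resource kind, pick the matching pool with the most free capacity."""
    chosen = {}
    for kind, amount in request.needs.items():
        candidates = [
            p for p in pools
            if p.kind == kind and p.tier == request.tier and p.free >= amount
        ]
        if not candidates:
            raise LookupError(f"no {kind} pool can satisfy tier {request.tier!r}")
        chosen[kind] = max(candidates, key=lambda p: p.free)
    # Reserve capacity only after every kind has a valid pool,
    # so a failed request leaves the pools untouched.
    for kind, pool in chosen.items():
        pool.free -= request.needs[kind]
    return {kind: pool.name for kind, pool in chosen.items()}


pools = [
    Pool("cluster-a", "compute", "gold", 8),
    Pool("cluster-b", "compute", "gold", 16),
    Pool("array-1", "storage", "gold", 500),
    Pool("vnet-1", "network", "gold", 10),
]
placement = place(Request("gold", {"compute": 4, "storage": 100, "network": 1}), pools)
```

A real placement engine would weigh far more than free capacity (affinity rules, failure domains, projected growth), but the shape of the decision is the same: the manual choices described earlier become inputs to a policy.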

Moving to the Software-Defined Datacenter: What Lies Ahead?

The virtualization principles of abstraction, pooling, and automation are no longer limited to compute and memory. Industry shifts in networking, storage, and systems management are underway, helping to pave the path for the software-defined datacenter.

One of the most interesting component technologies enabling the software-defined datacenter is network virtualization, which takes aim at a critical customer pain point: the cost and complexity of managing networks and network services in support of cloud architectures. Network virtualization decouples virtual networks from network hardware, just as VMs are decoupled from underlying server hardware. This approach enables the rapid provisioning of networks, full application mobility across clouds, and the implementation of unique network configurations regardless of underlying hardware, helping customers gain operational efficiencies.

Equally compelling are the changes underway in storage. Now that technologies exist to load balance across like arrays, it’s commonplace for an IT organization to aggregate its storage resources into pools for both cost and Service Level Agreement (SLA) reasons. The advent of flash memory can enable almost any array to deliver performance akin to the fastest arrays on the market, and at a fraction of the cost (albeit not for every workload). Additionally, high-end arrays continue to deliver increased value as greater communication between arrays and virtualization platforms allows for increased efficiency, scalability, and performance in support of the most demanding workloads.

The pooling of datacenter resources is also driving noteworthy change in systems management. Today, changes in IT environments require tickets and manual processes. In the software-defined world, the number of workloads per IT administrator is expected to grow to levels where humans won’t be able to carry out every change in IT systems. The flip side is that, increasingly, they won’t have to, as the use of advanced analytics is enabling greater automation across systems. More and more administrators are turning to analytics to proactively avert potential performance issues or determine when to add capacity to individual applications, clusters, or even entire datacenters.
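As a simple illustration of analytics informing a capacity decision, the sketch below fits a straight line to recent usage samples and estimates how many sampling periods remain before a capacity limit is reached. The function name and the linear-growth assumption are illustrative only; production analytics tools typically use much richer models.

```python
def periods_until_full(usage, capacity):
    """Estimate sampling periods left before usage reaches capacity.

    Fits a least-squares line to equally spaced usage samples.
    Returns None when usage is flat or falling.
    """
    n = len(usage)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_x = (n - 1) / 2
    mean_y = sum(usage) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(usage))
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    slope = sxy / sxx
    if slope <= 0:
        return None
    intercept = mean_y - slope * mean_x
    # Periods from the most recent sample until the fitted line crosses capacity.
    return max(0.0, (capacity - intercept) / slope - (n - 1))


# Hypothetical weekly storage usage, in TB, against a 100 TB pool.
weeks_left = periods_until_full([10, 20, 30, 40], capacity=100)
```

An automated system could compare an estimate like this against hardware lead times and open a capacity request before administrators would otherwise notice the trend.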

Compute and memory virtualization is only the beginning of the virtualization of the datacenter. As the principles of abstraction, pooling, and automation are applied beyond compute and memory to storage, networking, security, and availability, the software-defined datacenter stands to break down existing silos of IT resources, reducing IT complexity and inefficiency and helping IT organizations respond more quickly to business demands.