Microsoft recently announced a new Microsoft-sold cloud-in-a-rack solution called Cloud Platform System (CPS) based on Dell hardware. Jonathan Hassell wrote an article for the Petri IT Knowledgebase in October that gave us a first look at CPS. In this article, I am going to review CPS – I say ‘review’ because I, like the vast majority of Microsoft customers and partners, will never even get my hands on a CPS deployment. But as you will find out soon, neither will many CPS customers! So everything I write in this article is based on materials presented at TechEd Europe in November 2014, namely:

- Architectural Deep Dive into the Microsoft Cloud Platform System

- Using Tiered Storage Spaces for Greater Performance and Lower Costs

What is Microsoft’s Cloud Platform System?

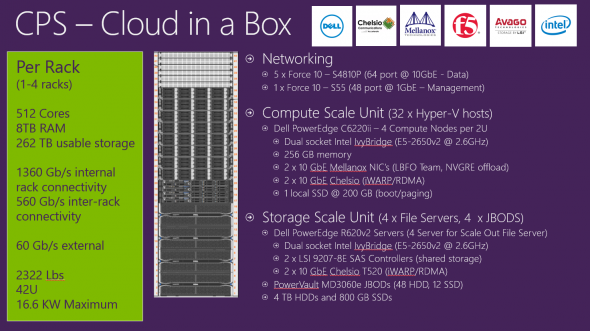

Let’s get this out of the way first: CPS is not, as many in the media have dubbed it, Azure-in-a-box. That’s because you will find the real Azure in Microsoft’s data centers and possibly the two partner-operated regions that are in China. CPS actually takes components that you can purchase for yourself and bundles them up into a package that is sold directly by Microsoft. Those components are:

- Dell hardware: Storage, switches and servers

- Chelsio NICs: For iWARP, 10 GbE Remote Direct Memory Access (RDMA) enabling SMB Direct storage networking

- Mellanox NICs: Adding more 10 GbE with NVGRE offload for optimized virtual networking

- F5 load balancers: Two VIPRION 2100 modules are included in each rack

- Windows Server 2012 R2: Providing Hyper-V and the Scale-Out File Server (SOFS) for storage

- System Center 2012 R2: Deployment, monitoring, automation, and backup.

- Windows Azure Pack: Enabling service management and providing a cloud service to tenants.

Each rack provides a stamp of storage, networking, compute and management, with the CPS solution scaling out to four racks, before you start all over again.

The solution is based almost entirely on Dell hardware. This includes JBODs and R620 servers that are used to make a SOFS, Force 10 switches, and two chassis of blade servers that are used as the Hyper-V hosts. The storage is divided into three Storage Spaces pools for performance domains, where each offers an isolated block of potential IOPS:

- Compute pool 1: Half of the guest storage

- Compute pool 2: The other half of the storage

- Backup pool: Storing VHDX files for virtual DPM servers to back up to

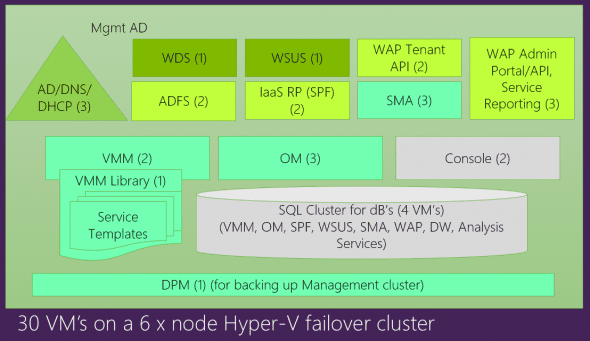

A sizeable System Center and Windows Azure Pack deployment, comprising around 30 virtual machines, is included as part of the solution in the first of the four racks.

Cloud Platform System Benefits

There are some good things to say about CPS.

Pre-Packaged Solution

CPS might be made up of components that you can buy directly and can even be emulated using components from alternative sources. But what you get from Microsoft is a pre-packaged cloud solution that is ‘service ready’ soon after delivery. Microsoft has invested quite a bit of time in the solution by:

- Working with Dell on the arrangement of hardware and delivery of the equipment

- Automating the deployment of the entire software solution

- Training Premier Field Engineering (Microsoft’s support consultants) to deliver and customize the final product

Pre-packaged solutions are great for customers that just want to run with it, and there are many larger clients, such as government entities, that struggle with lengthy and over-budget IT projects. According to Philip Moss of NTTX Select, his company was “able to build a next-generation IaaS solution in three days instead of nine months.”

Tested Solution

Microsoft worked on this project for quite a long time, which resulted in Dell improving their drivers and firmware. It also resulted in Microsoft discovering over 500 issues in their own software and fixing them. We’ve already seen improvements such as Update Rollup 3 for System Center Data Protection Manager (DPM) 2012 R2 that added:

- Deduplication: You can enable deduplication on the volume that contains VHDX files that are used as DPM backup volumes as long as the host that is running the DPM VMs is accessing that volume via SMB 3.0.

- More scalable backup: DPM added support for file-based backup and this increased the scalability and reliability of Microsoft’s Hyper-V backup solution.

We also learned at TechEd that a Windows Server 2012 R2 rollup due for release in December would improve the experience of Storage Spaces’ parallelized restore.

So Microsoft improved CPS to give customers a stable product, and as a result, those of us deploying non-CPS Microsoft cloud solutions have also gotten improved products.

The Microsoft Cloud OS

With this Microsoft software-defined compute, storage, and networking solution, you get a public or private cloud package that enables hybrid cloud computing across Azure, hosting partners, and on-premises deployments. I’ve gone on record before, stating the advantages of Microsoft’s software package over the competition. Quite simply, Microsoft has left the competition behind when it comes to hybrid cloud computing.

Cloud Platform System Disadvantages

All is not rosy with CPS, in my opinion.

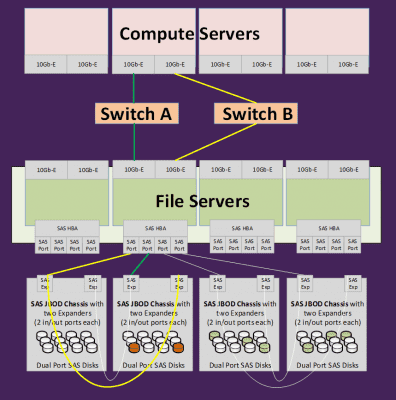

The SAS Design

There are some issues with the design of the SAS connectivity between the SOFS cluster nodes and the JBOD trays. I expect comments from Microsoft evangelists who will disagree with me and the entire SAS connectivity industry.

There are two issues I have with the SAS design, both of which are based on limitations of Dell’s support for large deployments of Storage Spaces.

6 Gbps SAS adapters reach their performance limits when we exceed 48 physical disks in a Storage Spaces design. Best practice is to deploy 12 Gbps SAS cards for larger deployments of Storage Spaces to enable best performance, even with 6 Gbps JBODs. Dell’s R620 server is used for the nodes of the SOFS, and this server does not have support for LSI’s 12 Gbps SAS cards.

As you can see in the above cabling diagram, Dell has not followed best practice for connecting the SOFS nodes to the JBODs. The Dell R620 cannot support the necessary number of direct SAS connections to the JBODs. Normally we will connect each SOFS node with two cables to each JBOD. In a 4 node and 4 JBOD SOFS, that means that each SOFS node will require 8 SAS cables. This direct connectivity offers two benefits:

- Peak performance: Each cable offers 24 Gbps of throughput (6 Gbps * 4 ports per cable) with each server having 192 Gbps of direct connectivity to the disks. Dell cannot match this because they have just four connections instead of eight per SOFS node.

- Failover performance: Each SOFS node should have dual MPIO connections with a Least Blocks policy. Even if a connection fails between a node and a JBOD, that node will still have a single cable offering direct 24 Gbps of bandwidth through to the disks in that tray. In the Dell design, daisy chaining means that a higher-latency hop via another JBOD will be traversed if the single normal connection fails.
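To make the arithmetic behind those two bullets concrete, here is a minimal Python sketch. The lane speed, lanes per cable, and cable counts come from the figures above; the function and variable names are purely illustrative, and the results are theoretical line rates rather than measured throughput.

```python
# Figures from the article: 6 Gbps per SAS lane, 4 lanes (ports) per cable.
LANE_GBPS = 6
LANES_PER_CABLE = 4


def cable_gbps() -> int:
    """Theoretical bandwidth of one SAS cable."""
    return LANE_GBPS * LANES_PER_CABLE


def direct_cables_per_node(jbods: int, cables_per_jbod: int = 2) -> int:
    """Cables one SOFS node needs when it connects directly to every JBOD."""
    return jbods * cables_per_jbod


def node_bandwidth_gbps(cables: int) -> int:
    """Aggregate theoretical bandwidth from one node to the disks."""
    return cables * cable_gbps()


# Direct-attach design: 4 JBODs, 2 cables from each node to each JBOD.
best_practice_cables = direct_cables_per_node(jbods=4)
best_practice_gbps = node_bandwidth_gbps(best_practice_cables)

# CPS design as described above: four SAS connections per node.
cps_gbps = node_bandwidth_gbps(4)

print(f"Per cable:             {cable_gbps()} Gbps")
print(f"Direct-attach, 8 cables: {best_practice_gbps} Gbps per node")
print(f"CPS, 4 cables:           {cps_gbps} Gbps per node")
```

On these numbers, each CPS node has half the theoretical SAS bandwidth of a node in the direct-attach design.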

The Price

Why won’t I ever see a CPS in the real world? That’s easy: according to a whitepaper by Value Prism Consulting, the cost of CPS can be broken down as follows:

- Appliance (hardware from Dell only): $1,635,600

- Deployment: $7,200

- Maintenance (including Software Assurance): $154,400

- Support: $77,300

The total cost is $2,609,000. I’m not sure how the hardware in this solution could have cost quite as much as $1.6 million. Normally storage is the big cost in a deployment, and I’m pretty sure I could offer a best-practice SOFS, JBOD, and SAS system for under $300,000, with 6 TB cold tier disks instead of 4 TB drives. 10/40 GbE top-of-rack switches from one of the HPC networking vendors would have cost half as much as the Force 10 gear from Dell. And that leaves lots of budget for some very nice hosts and NICs.
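As a rough sanity check on that last point, the sketch below subtracts the $300,000 storage estimate from the quoted appliance price. Both inputs are taken from the text above; the $300,000 is an upper-bound estimate rather than a quote, and the variable names are only illustrative.

```python
# Appliance (hardware only) price quoted from the whitepaper.
APPLIANCE_HARDWARE_USD = 1_635_600

# Upper-bound estimate for a best-practice SOFS, JBOD, and SAS build.
STORAGE_ESTIMATE_USD = 300_000

# What would be left for switches, hosts, and NICs at the same hardware price.
remaining_usd = APPLIANCE_HARDWARE_USD - STORAGE_ESTIMATE_USD

# Storage as a fraction of the quoted hardware price.
storage_share = STORAGE_ESTIMATE_USD / APPLIANCE_HARDWARE_USD

print(f"Left for switches, hosts, and NICs: ${remaining_usd:,}")
print(f"Storage share of hardware price:    {storage_share:.1%}")
```

If the storage estimate holds, it accounts for less than a fifth of the appliance price, which is why the hardware figure looks so high.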

Microsoft Services versus Partner Alienation

CPS is a two-stage deployment: Dell sets up the hardware and a team from Microsoft sets up the software. From that point, Premier Field Engineering (PFE) takes over administration of the system.

In most cases, a consulting company (a Microsoft partner) designs and deploys solutions of this scale for customers. Once installed, there’s a handover and training period where the customer takes ownership of the system. CPS is a direct sale and a direct implementation by Microsoft. Microsoft is leaving no room for their partners to operate in the upper reaches of the enterprise/government market, a market that generates a lot of profit for Microsoft’s partners.

Microsoft cannot expect partners to remain calm and unaffected by the launch of CPS. CPS puts Microsoft in direct competition not just with the likes of hardware vendors such as HP, Cisco, Hitachi, EMC, IBM, NetApp, and more, but it also puts them up against their services partners that have evangelized their products, convinced customers, and given Microsoft billions in profits in the past few decades. Microsoft should expect some of these partners to defect to competitor platforms and use their deep relationships with their clients and knowledge of their sites to fight against and beat Microsoft.

It Might Be Yours – But It Isn’t!

Imagine you walked into your local PC store, bought a laptop, brought it home and were told that you may not have administrator rights on your shiny new appliance — welcome to CPS! Customers will be delegated administrators, managing their cloud-in-a-box solution via the WAP administrator portal.

When CPS is deployed, the Microsoft engineers will handle the networking configuration, domain joins, and so on. On-going maintenance is handled directly by Microsoft – for some this is a good thing, but most IT organizations will hate this. We have a precedent – Windows Server 2003 Datacenter was handled in this fashion when it was first released. Maintenance was handled by OEMs. That proved unpopular and eventually Datacenter was released like the other SKUs of Windows Server.

Backup Placement

CPS uses a virtual DPM installation to back up the production systems. Production systems are stored on two storage pools (three-way mirroring virtual disks) in the SOFS. Backup uses another storage pool (dual-parity virtual disks) in the same SOFS. I would not recommend this design, whether I was using SOFS or a SAN, Hyper-V or vSphere. Storage systems of all kinds fail, either for human, systemic, or hardware reasons. And if you lose one of these SOFS installations you will lose both your production systems and your backup of them. Try explaining that one to the directors, shareholders and customers!
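The shared-fate problem can be expressed as a simple check. The sketch below is only an illustration: the `Pool` class, the pool names, and the storage system labels are hypothetical, and it assumes every backup pool protects every production pool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pool:
    name: str
    role: str            # "production" or "backup"
    storage_system: str  # the SOFS (or SAN) that hosts the pool


def shared_fate_systems(pools: list[Pool]) -> set[str]:
    """Storage systems whose loss destroys production data and every backup copy."""
    production = {p.storage_system for p in pools if p.role == "production"}
    backup = {p.storage_system for p in pools if p.role == "backup"}
    # A production system is exposed when no backup lives on a different system.
    return {system for system in production if not (backup - {system})}


# Layout as described above: all three pools in the same SOFS.
cps_layout = [
    Pool("compute-pool-1", "production", "sofs-a"),
    Pool("compute-pool-2", "production", "sofs-a"),
    Pool("backup-pool", "backup", "sofs-a"),
]

# Alternative: the backup pool lives in a separate storage system.
separated_layout = [
    Pool("compute-pool-1", "production", "sofs-a"),
    Pool("compute-pool-2", "production", "sofs-a"),
    Pool("backup-pool", "backup", "sofs-b"),
]

print("CPS layout exposed systems:      ", shared_fate_systems(cps_layout))
print("Separated layout exposed systems:", shared_fate_systems(separated_layout))
```

In the first layout the single SOFS is flagged; in the second nothing is, because losing either storage system still leaves one copy of the data.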

System Center Virtual Machine Placement

A diagram presented at TechEd Europe 2014 illustrated that all 32 of the System Center virtual machines would run on a single rack, even in a full four-rack deployment. In my opinion, that’s a bit of a risk. I would prefer to see an alternative:

- Once you deploy a second rack, System Center VMs should be spread out across the racks to limit risk of systems going down. Imagine this: you lose your primary rack and you no longer have access to the WAP administrative portals! There goes your service business for all four racks!

- Adding racks might offer a solution for my backup placement concern. Maybe Rack B should protect Rack A, and vice versa? That would be a solution for multi-rack customers – and let’s face it, at the above prices, the typical CPS customer won’t be buying just one rack.
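To illustrate the first suggestion, here is a small sketch that compares keeping all 32 System Center VMs in one rack with spreading them round-robin across four. The round-robin scheme, the rack labels, and the VM names are assumptions made for illustration; the sketch does not model which roles are redundant, so a surviving VM count is only a rough proxy for a surviving service.

```python
def place_round_robin(vm_count: int, racks: list[str]) -> dict[str, list[str]]:
    """Assign VMs to racks in turn so each rack holds a roughly equal share."""
    placement: dict[str, list[str]] = {rack: [] for rack in racks}
    for i in range(vm_count):
        placement[racks[i % len(racks)]].append(f"sc-vm-{i + 1:02d}")
    return placement


def surviving_vms(placement: dict[str, list[str]], failed_rack: str) -> int:
    """Count the VMs still running after one rack is lost."""
    return sum(len(vms) for rack, vms in placement.items() if rack != failed_rack)


SYSTEM_CENTER_VMS = 32  # figure from the TechEd Europe 2014 diagram

single_rack = place_round_robin(SYSTEM_CENTER_VMS, ["A"])
four_racks = place_round_robin(SYSTEM_CENTER_VMS, ["A", "B", "C", "D"])

print("Single rack, lose A:", surviving_vms(single_rack, "A"), "VMs left")
print("Four racks, lose A: ", surviving_vms(four_racks, "A"), "VMs left")
```

A real placement would pin the redundant instances of each role to different racks rather than distribute VMs blindly, but the comparison shows how much of the management layer depends on the first rack.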

The Verdict

If I were the CIO of a large organization that struggled with completing IT projects or bringing them in on budget, then CPS would be an excellent option.

But outside of that rarefied stratosphere, CPS is a fish out of water. I would want a system that I could design for best practice, peak performance and scalability, with components of my choosing. I do like LSI, Chelsio and Mellanox, which are included, but I might have gone higher spec. I’m convinced that I could have purchased such a rack at a lower cost, and I could have engaged a consulting firm to deploy and maintain it for me in a way that I direct while still retaining control.

CPS is held up by Microsoft as a reference architecture for non-CPS deployments. There are some things that I will take from this design, but I would warn architects to beware of things such as the SAS design, the placement of all System Center components in a single rack, and any design that places the backup data in the same storage system as the production data.