A Windows failover cluster is made up of lots of pieces. Figuring out its architecture or troubleshooting it can be very difficult without good nomenclature. In this article I will share how I standardize the naming of Windows cluster components.

Why Have Naming Standards?

Think of everything that makes up a cluster:

- LUNs on a SAN or SMB 3.0 shares

- Volumes in Disk Management

- Disks in Failover Cluster Manager

- Cluster Shared Volume (CSV) mount points

- NICs in a host or server

- Networks in Failover Cluster Manager

There are a lot of pieces making up the high availability architecture. Once it’s built, you might not look at those pieces again for a long time, at least not until something goes wrong. Imagine a build where:

- a LUN is created on the SAN with a random name

- that LUN is presented to the hosts and formatted as New Volume 1

- the disk is attached to the cluster and called Cluster Disk 1

- you convert the disk into a CSV and it is left with a default (useless) name

Now imagine that there are 12 such LUNs in a 6-node Hyper-V cluster, all with random names. Consider how hard that would be to understand without any naming standard! Or imagine that you’ve received an alert that something has gone wrong with a year-old cluster and you need to start figuring out what’s what. Maybe you’re a consultant or a new hire visiting the cluster for the first time. Do you really want to spend half a day documenting the build before you can tackle the issue that is causing a service outage?

This is why I strongly encourage people to carefully assemble the physical and software components of a cluster architecture – including storage, networking, and servers – and to use a consistent naming standard that can scale out beyond just a single cluster.

Most issues in clusters are related to storage and networking, so that’s where I will focus my attention in this post.

Networking

Clustered servers tend to have more NICs than most servers, and that number typically increases in the case of Hyper-V hosts. If your hardware is older and you are running a legacy (pre-2012) version of Windows Server, each of those NICs will be given a useless name that has no correlation to its location in the server. Since Windows Server 2012 (WS2012), a feature called Consistent Device Naming (CDN) will, on hardware that supports it, name each interface after its location in the server. But does “Slot 1 Port 1” tell you anything about the purpose of that NIC? Not so much.

I recommend doing the following:

- Plan and document the purpose of each NIC port

- Insert one cable at a time into the servers if your hardware does not support CDN. This will allow you to identify the interfaces in Windows Server as they switch to a connected state.

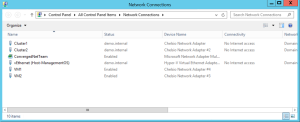

- Rename each interface in Control Panel > Network Connections according to the purpose of the NIC. See the screenshot from my lab at work for an example.

- Keep this entire scheme consistent across servers
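One way to keep the scheme consistent is to write the plan down once and check every host against it before renaming anything. The Python sketch below assumes CDN-style port names; the port locations and purpose names (Management, Cluster, LiveMigration, VMs) are hypothetical examples, not a recommended layout. It only produces a rename plan; the renaming itself would still happen in Network Connections or, for example, with PowerShell’s Rename-NetAdapter.

```python
# Hypothetical NIC plan: CDN port location -> purpose-based interface name.
# Replace these entries with the ports and purposes you documented.
NIC_PLAN = {
    "Slot 1 Port 1": "Management",
    "Slot 1 Port 2": "Cluster",
    "Slot 2 Port 1": "LiveMigration",
    "Slot 2 Port 2": "VMs",
}


def rename_plan(host, discovered_ports):
    """Return (host, current_name, new_name) tuples for one host.

    Raises ValueError if the host's ports differ from the plan, so a
    server that doesn't match the scheme is caught before any renaming.
    """
    missing = set(NIC_PLAN) - set(discovered_ports)
    extra = set(discovered_ports) - set(NIC_PLAN)
    if missing or extra:
        raise ValueError(
            f"{host}: ports do not match plan "
            f"(missing={sorted(missing)}, extra={sorted(extra)})"
        )
    return [(host, port, NIC_PLAN[port]) for port in sorted(discovered_ports)]


if __name__ == "__main__":
    # Hypothetical host names; every node gets the identical scheme.
    for host in ["HV1", "HV2"]:
        for row in rename_plan(host, list(NIC_PLAN)):
            print(row)
```

Because the same plan is applied to every node, a port called LiveMigration on one host is the same physical port, with the same purpose, on every other host.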

Tip: The quickest way to open Network Connections is to run NCPA.CPL. That shortcut should save you a week of your life over the course of a year as an IT pro.

Storage

There are several layers to a disk in a cluster, and there are even more if you are deploying CSVs. Knowing which CSV mount point maps to which LUN on the SAN, and every layer in between, will simplify your life and make documentation via screenshot or script much easier.

Start with the SAN. Each LUN that is going to be created for a cluster should be named after:

- The cluster: A SAN might support more than one cluster over its lifetime. Make life simple and include the name of the cluster so you don’t have overlapping names.

- The disk: A SAN LUN is zoned to hosts, the volume is formatted, the disk is attached to a cluster, and that disk might be converted into a CSV where it is mounted in C:\ClusterStorage on each host, appearing as a folder. A consistent disk name that is used at each of those layers will simplify administration.

For example, I might create the following LUNs for a cluster called HVC1:

- HVC1-Witness

- HVC1-CSV1

- HVC1-CSV2

At a later time, I might need to build another cluster called HVC2. I could then create more LUNs on the SAN:

- HVC2-Witness

- HVC2-CSV1

- HVC2-CSV2

Each LUN has a unique name. I know the purpose of the LUN and I know which cluster the LUN is associated with.
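The convention is simple enough to generate rather than type by hand, which also makes it easy to check that two clusters sharing a SAN never collide. This Python sketch assumes the cluster-name-plus-disk-name pattern from the examples above (one witness disk plus numbered CSVs); the function names are hypothetical.

```python
def cluster_disk_names(cluster, csv_count, witness=True):
    """Build LUN names using the <cluster>-<disk> convention."""
    names = [f"{cluster}-Witness"] if witness else []
    names += [f"{cluster}-CSV{i}" for i in range(1, csv_count + 1)]
    return names


def assert_no_overlap(*name_lists):
    """Raise ValueError if any LUN name is reused across clusters on one SAN."""
    seen = set()
    for names in name_lists:
        for name in names:
            if name in seen:
                raise ValueError(f"duplicate LUN name: {name}")
            seen.add(name)


if __name__ == "__main__":
    hvc1 = cluster_disk_names("HVC1", 2)
    hvc2 = cluster_disk_names("HVC2", 2)
    assert_no_overlap(hvc1, hvc2)
    print(hvc1)  # ['HVC1-Witness', 'HVC1-CSV1', 'HVC1-CSV2']
    print(hvc2)  # ['HVC2-Witness', 'HVC2-CSV1', 'HVC2-CSV2']
```

Because the cluster name is the prefix, uniqueness across clusters follows automatically as long as the cluster names themselves are unique.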

Each disk would be connected or zoned to each host in the intended cluster. For example, each of the HVC1- disks would be connected to each node in the HVC1 cluster. You then format the disk on one of the nodes, using a volume label that is based on the LUN name. For example, I would format the LUN called HVC1-CSV1 with the label HVC1-CSV1.

The volumes are then added as available disks in Failover Cluster Manager (FCM). The default name is pretty useless, so I would edit the properties of the disk in FCM to rename it to HVC1-CSV1.

In the case of a Hyper-V or a Scale-Out File Server (SOFS) cluster, I would convert the non-witness disks into CSVs. Each CSV appears as a folder (it’s actually a mount point) in C:\ClusterStorage. By default, each folder gets a generic name (Volume1, Volume2, and so on) that is unrelated to the FCM disk name. I rename each of the folders (mount points) to match their FCM disks (and hence the volume label and LUN name). This renaming is supported by Microsoft.
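The folder renames can be worked out mechanically from the FCM disk names. The Python sketch below assumes you have already gathered a mapping of FCM disk name to current folder name under C:\ClusterStorage (from FCM, or from the FailoverClusters PowerShell module); the mapping and function name are hypothetical. It only computes the old and new paths and skips folders that already match.

```python
from pathlib import PureWindowsPath

# PureWindowsPath builds Windows-style paths on any OS without touching disk.
CLUSTER_STORAGE = PureWindowsPath(r"C:\ClusterStorage")


def mount_point_renames(csv_folders):
    """Return (old_path, new_path) pairs for CSV folders needing a rename.

    csv_folders maps each FCM disk name to the folder currently used as
    its mount point under CLUSTER_STORAGE (e.g. "HVC1-CSV1" -> "Volume1").
    """
    plan = []
    for disk_name, folder in sorted(csv_folders.items()):
        if folder != disk_name:
            plan.append((str(CLUSTER_STORAGE / folder),
                         str(CLUSTER_STORAGE / disk_name)))
    return plan


if __name__ == "__main__":
    # Hypothetical state: CSV1 still has its default folder name.
    current = {"HVC1-CSV1": "Volume1", "HVC1-CSV2": "HVC1-CSV2"}
    for old, new in mount_point_renames(current):
        print(f"{old} -> {new}")
```

Run against the hypothetical mapping, this prints a single rename from C:\ClusterStorage\Volume1 to C:\ClusterStorage\HVC1-CSV1, because HVC1-CSV2 already follows the standard.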

So in my finished deployment, there is:

- A LUN called HVC1-CSV1

- That LUN is formatted with a volume called HVC1-CSV1

- The disk is attached in FCM and renamed to HVC1-CSV1

- I convert the non-witness disk into a CSV and rename the mount point to HVC1-CSV1

Now I know the relationships between all components of storage throughout the layers of the cluster architecture. If virtual machine X fills up HVC1-CSV2, then I know which LUN I need to add space to in the SAN and which volume to extend in Disk Management (or via PowerShell).
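If you record each layer in your build documentation, a short script can confirm the names really do line up and answer the “which LUN is this?” question directly. In this Python sketch the ClusterDisk record and both functions are hypothetical; the data would come from your SAN console, Disk Management, FCM, and C:\ClusterStorage. It flags any disk whose layers disagree and maps a file path under a CSV back to its LUN.

```python
from dataclasses import dataclass
from pathlib import PureWindowsPath
from typing import Optional


@dataclass(frozen=True)
class ClusterDisk:
    lun: str                 # name on the SAN
    volume_label: str        # label given when formatting
    fcm_name: str            # disk name in Failover Cluster Manager
    csv_mount: Optional[str]  # CSV folder path, None for the witness disk


def inconsistent(disks):
    """Return the disks whose names differ between any two layers."""
    bad = []
    for d in disks:
        names = {d.lun, d.volume_label, d.fcm_name}
        if d.csv_mount is not None:
            names.add(PureWindowsPath(d.csv_mount).name)
        if len(names) != 1:
            bad.append(d)
    return bad


def lun_for_path(disks, path):
    """Return the LUN backing a file stored on a CSV, or None if not on a CSV."""
    p = PureWindowsPath(path)  # comparisons are case-insensitive on Windows paths
    for d in disks:
        if d.csv_mount is None:
            continue
        mount = PureWindowsPath(d.csv_mount)
        if p == mount or mount in p.parents:
            return d.lun
    return None
```

With a consistent standard, lun_for_path simply confirms what the folder name already tells you; its real value is on a cluster where inconsistent() returns something.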

This renaming process takes only a couple of minutes, and just a few seconds if you automate the build of your cluster using PowerShell scripting. It is worth spending a little time getting things right now, because it will save you a lot of time later, when you don’t have it to spare.