Back in September I wrote an article called “What is Hyper-Convergence?” In that article, I explained the concept and shared my thoughts on the positives and negatives of deploying Hyper-V, vSphere, or other virtualization/cloud platforms this way. In this article, I will explain what I believe to be Microsoft’s viewpoint on hyper-convergence.

What is Hyper-Convergence?

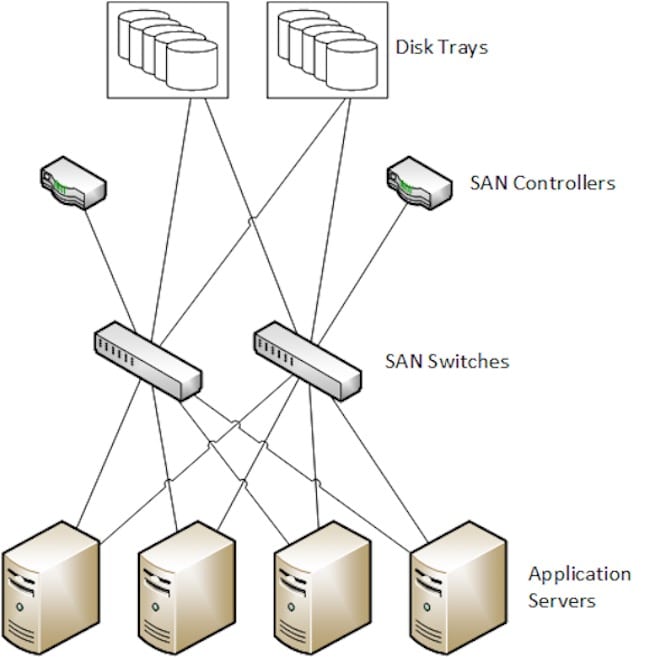

The traditional deployment of a vSphere or Hyper-V farm has several tiers connected by fabrics. The below diagram shows a more traditional deployment using a storage area network (SAN). In this architecture you have:

- Storage trays

- Switches (iSCSI or Fibre Channel)

- Storage controllers

- Virtualization hosts (application servers)

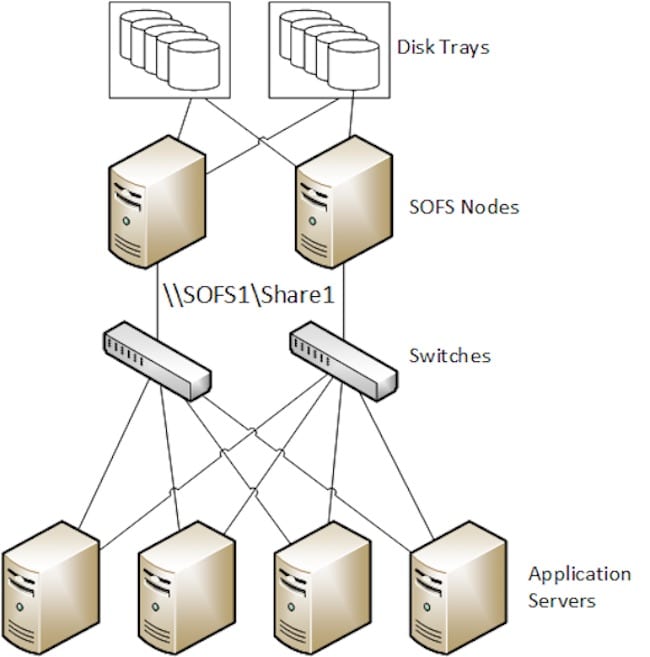

In the Hyper-V world, we can deploy a software-defined alternative to a SAN called a Scale-Out File Server (SOFS), where:

- RAID is replaced by Storage Spaces

- Storage controllers are replaced by a Windows Server failover cluster with transparent failover

- iSCSI and Fibre Channel are replaced by SMB 3.0

But for the most part, the high-level architecture doesn’t really change: there is still a storage tier and a compute tier, connected by a network.

In the world of hyper-convergence, we simplify the entire architecture to a single tier of servers/appliances that run:

- Virtualization

- Storage/cluster network

- Storage

I say “simplify” but under the covers, each server will be running like a hamster on a wheel … on an illicit hyper-stimulant.

What Microsoft Says About Hyper-Convergence

Hyper-convergence is a topic that has only been getting headlines for the last year or so. This is mainly thanks to one vendor that is marketing like crazy, and VMware promoting their vSAN solution. I had not heard Microsoft share a public opinion about hyper-convergence until TechEd Europe 2014 in October.

The message was clear: Microsoft does not think hyper-convergence is good. There are a few reasons:

- Scaling: The way to grow a hyper-converged infrastructure is to deploy another appliance, which adds storage, memory, processor, and license requirements all at once. This is perfect if your storage and compute needs grow at the same rate, but that is about as rare as a goose that lays golden eggs. Storage requirements normally grow much faster than the need for compute. We are generating more information every day and keeping it longer. Paying for extra compute and the associated licensing when we could instead be growing an affordable storage tier makes no sense.

- Performance: Hyper-converged storage is software defined. Software-defined storage, when operated at scale, requires compute power to manage the storage and perform maintenance (such as a rebuild after a disk failure). Which do you prioritize in a hyper-converged infrastructure: virtual machine computational needs, or the functions of storage management, which in turn affect the performance of those same virtual machines?

This is why Microsoft continues to push solutions based on a storage tier and a compute tier, which are connected by converged networks, preferably leveraging hardware offloads (such as RDMA) and SMB 3.0. The recently launched Dell/Microsoft Cloud Platform System (CPS) is such a solution.
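To make the scaling argument concrete, here is a rough Python sketch of the arithmetic. Every number in it (appliance size, tray size, host size, and the demand figures) is a made-up assumption for illustration, not vendor data or anything Microsoft presented, and the function names are my own.

```python
def ceil_div(a: int, b: int) -> int:
    """Integer division rounded up (avoids floating-point rounding)."""
    return -(-a // b)


def hyper_converged_nodes(storage_tb, cores, node_tb, node_cores):
    """Appliances needed when each one adds storage and compute together."""
    return max(ceil_div(storage_tb, node_tb), ceil_div(cores, node_cores))


def idle_cores(storage_tb, cores, node_tb, node_cores):
    """Cores bought (and licensed) beyond what the workload asked for."""
    nodes = hyper_converged_nodes(storage_tb, cores, node_tb, node_cores)
    return nodes * node_cores - cores


def tiered_units(storage_tb, cores, tray_tb, host_cores):
    """(storage trays, compute hosts) when the two tiers grow independently."""
    return ceil_div(storage_tb, tray_tb), ceil_div(cores, host_cores)


if __name__ == "__main__":
    # Hypothetical hardware sizes, for illustration only.
    NODE_TB, NODE_CORES = 20, 32   # one hyper-converged appliance
    TRAY_TB, HOST_CORES = 60, 32   # one storage tray, one compute host

    # Hypothetical demand: storage doubles twice while compute barely moves.
    for storage_tb, cores in [(100, 128), (200, 128), (400, 160)]:
        nodes = hyper_converged_nodes(storage_tb, cores, NODE_TB, NODE_CORES)
        spare = idle_cores(storage_tb, cores, NODE_TB, NODE_CORES)
        trays, hosts = tiered_units(storage_tb, cores, TRAY_TB, HOST_CORES)
        print(f"{storage_tb} TB / {cores} cores: "
              f"{nodes} appliances ({spare} unrequested cores) "
              f"vs {trays} trays + {hosts} hosts")
```

With these invented figures, the 200 TB step needs 10 appliances and leaves 192 cores that nobody asked for, while the tiered design needs 4 trays and 4 hosts. Real pricing and licensing will differ, but the shape of the problem is the same whenever storage grows faster than compute.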

In my opinion, Microsoft is right. I don’t think hyper-convergence can scale well without making sacrifices in terms of performance and complexity. I also question the economics of the concept, and not just in terms of scale-out: the cost of some of the hardware solutions out there makes purchasing a Dell Compellent or a NetApp SAN look affordable!