Microsoft’s Changed Opinion on Hyper-Convergence

A year ago, I wrote about Microsoft’s view on hyper-convergence, a virtualization architecture where storage and compute reside in the same tier. In this post, I’ll update you on what Microsoft now thinks about hyper-convergence.

A Long Time Ago

In a conference far away, some of Microsoft’s storage leads talked publicly about hyper-convergence. Microsoft was not a fan. They looked at the solution where you needed to deploy an integrated unit of compute and storage with every required expansion of compute or storage. Most organizations have two types of growth:

- Compute: The demand for more processors and memory is relatively small

- Storage: Almost every organization has a relatively larger demand for more storage capacity

With hyper-convergence, an organization that needs more storage must usually also add compute (processor, memory, and guest OS and management licenses), which would be quite wasteful. So, as of a year ago, Microsoft still recommended that customers stick with the converged or disaggregated model of:

- Compute with CPU and RAM running a hypervisor

- Connected by a network to storage controllers

- Connected by a network to disk trays containing disks

The legacy model allows compute and storage to grow independently, and therefore the costs can be tightly controlled.
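To make the cost argument concrete, here is a minimal sketch of the arithmetic. Every figure in it (the 40 cores and 200 TB of demand, the 20 cores and 20 TB per unit) is a hypothetical number chosen for illustration, not a figure from Microsoft or any vendor, and the function names are my own.

```python
import math


def hyperconverged_nodes(cores_needed, storage_tb_needed, node_cores, node_storage_tb):
    """Each node adds compute AND storage, so the larger demand sets the node count."""
    return max(
        math.ceil(cores_needed / node_cores),
        math.ceil(storage_tb_needed / node_storage_tb),
    )


def disaggregated_units(cores_needed, storage_tb_needed, host_cores, tray_storage_tb):
    """Hosts and disk trays are bought separately, so each tier tracks its own demand."""
    hosts = math.ceil(cores_needed / host_cores)
    trays = math.ceil(storage_tb_needed / tray_storage_tb)
    return hosts, trays


# Hypothetical demand: modest compute, much larger storage.
cores_needed, storage_tb_needed = 40, 200
unit_cores, unit_storage_tb = 20, 20

hc_nodes = hyperconverged_nodes(cores_needed, storage_tb_needed, unit_cores, unit_storage_tb)
idle_cores = hc_nodes * unit_cores - cores_needed
hosts, trays = disaggregated_units(cores_needed, storage_tb_needed, unit_cores, unit_storage_tb)

print(f"Hyper-converged: {hc_nodes} nodes, {idle_cores} cores bought but not needed")
print(f"Disaggregated:   {hosts} hosts and {trays} disk trays")
```

With these made-up numbers, storage demand forces ten hyper-converged nodes even though two would cover the compute, and every one of those extra nodes also carries processor, memory, and licensing costs. The disaggregated design buys two hosts and ten trays.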

A Dark Time for Legacy Storage Companies

It’s a dark time for legacy storage companies. These rigid giants of industry are stuck in their old ways of iSCSI, Fibre Channel, SAN controllers, licensing, and overpriced disks. Meanwhile, agile customers are not only seeking out alternatives, but also jumping to the cloud where there are no SANs.

One of those alternatives is hyper-convergence from the likes of Gridstore, SimpliVity, and Nutanix. These kinds of companies promise something that no one else can: simplicity and reduced risk. Consider the vendors or tiers involved in a virtualization project:

- Top of rack switch

- Servers

- Hypervisor

- Network cards

- HBAs

- Storage system

- Disk trays and disks

The biggest project failure I’ve witnessed involved a scenario where each tier came from a different vendor. It didn’t help that the customer wouldn’t let those vendors communicate with each other. Imagine an alternative where there is just a top of rack switch, hyper-converged hosts, and a hypervisor.

The risk is reduced substantially, and the installation is a matter of racking the switches and racking the hosts. Expansion is simple too: rack more hosts. And this is exactly why hyper-convergence has become a hot ticket item for mid-sized to large businesses, and especially in those kinds of organizations where IT projects have historically been … newsworthy.

A New Hope

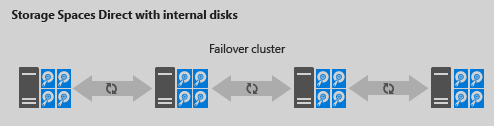

Late in 2014, Microsoft started talking about Storage Spaces Direct (S2D). With this solution, Microsoft intended to flatten the architecture of the Scale-Out File Server (SOFS) by removing the need for external SAS-based shared JBODs. Instead, we could use SATA HDD, SATA SSD, or NVMe inside of the SOFS nodes, which simplified the solution and reduced the cost. One potential scenario was that we could hyper-converge this solution by removing the need for an additional tier of Hyper-V hosts; instead, the storage cluster nodes would also be Hyper-V hosts, and the virtual machines would simply be stored on the stretched CSVs (not on shares).

That meant Microsoft was going to start offering hyper-convergence as a part of Windows Server 2016 (WS2016). However, Microsoft stated that this was going to be a solution for smaller companies, because they saw issues with storage operations competing with virtualized workloads for processor time, along with the problem of storage and compute scaling at different rates.

Larger companies, they said, should continue with the converged or disaggregated model with compute (Hyper-V) connecting to the SOFS via SMB 3.0.

But Microsoft listened to customer feedback, and they observed the changes in the storage market. There was clearly a market for hyper-convergence, and not just in the SME world. Bigger organizations are willing to pay the price of hyper-convergence to have a turn-key deployment.

So today, Microsoft is talking a lot about hyper-convergence with WS2016 and S2D. A video was posted in August showing a 16-node S2D hyper-converged Hyper-V cluster performing over 4.2 million 4K read IOPS.

Improvements in hardware and software are making it easier for Microsoft to converge Hyper-V and storage into a single tier:

- The S2D storage bus has introduced the ability to persistently cache reads and/or writes using flash storage.

- We can tier storage using mirroring and erasure coding (parity) to optimize write performance and consumption of raw capacity.

- SMB Direct (Remote Direct Memory Access or RDMA) optimizes the performance of the east-west network and reduces the impact of network interrupts on the processor.

- Microsoft has made improvements in Windows Server to de-prioritize storage operations in favor of compute operations.
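The trade-off behind the mirroring and erasure coding bullet can be sketched with some simple arithmetic. Mirroring keeps whole copies of the data, so only one copy's worth of raw capacity is usable, while parity stores data symbols plus a smaller number of parity symbols. The layout below (three-way mirror, 4 data + 2 parity symbols, 20 percent of raw capacity in the mirror tier) is a hypothetical example of my own and is not meant to describe S2D's actual defaults.

```python
def mirror_efficiency(copies):
    """Usable fraction of raw capacity when every block is stored `copies` times."""
    return 1 / copies


def parity_efficiency(data_symbols, parity_symbols):
    """Usable fraction of raw capacity for an erasure-coded (parity) layout."""
    return data_symbols / (data_symbols + parity_symbols)


def usable_tb(raw_tb, mirror_fraction, copies, data_symbols, parity_symbols):
    """Usable capacity when raw capacity is split between a mirror and a parity tier."""
    mirror_raw = raw_tb * mirror_fraction
    parity_raw = raw_tb - mirror_raw
    return (
        mirror_raw * mirror_efficiency(copies)
        + parity_raw * parity_efficiency(data_symbols, parity_symbols)
    )


# Hypothetical layout: 100 TB raw, 20% in a three-way mirror tier, the rest as 4+2 parity.
all_mirror = usable_tb(100, 1.0, 3, 4, 2)
tiered = usable_tb(100, 0.2, 3, 4, 2)

print(f"All mirror: {all_mirror:.1f} TB usable")
print(f"Tiered:     {tiered:.1f} TB usable")
```

In this sketch the tiered layout yields roughly 60 TB usable from 100 TB raw, against about 33 TB for an all-mirror layout. The idea is that the small mirror tier absorbs writes quickly while the parity tier stores the bulk of the data more economically.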

So it’s a new day. Going forward, Microsoft will see hyper-convergence as another valid option for customers, along with new and legacy models of disaggregated or converged storage.

The Empire Strikes Back

Microsoft announced their current intentions for the licensing of Windows Server 2016 after I wrote this article. Storage Spaces Direct (S2D), and therefore Windows Server’s built-in hyper-convergence, will only be available in the Datacenter edition of Windows Server (based on currently announced plans). In reality, this licensing change will not affect hyper-convergence, because hosts of this scale will probably be licensed with the Datacenter edition anyway to cover the large number of virtual machines running on each host. However, it should be noted that the licensing change will probably weaken the argument for S2D in a disaggregated or converged (not hyper-converged) virtualization/storage scenario. I suspect that disaggregated storage will continue to be the most commonly adopted design because, for most customers, storage and compute still do not scale at the same rates.