Google Faces Yet Another Complaint: Deceiving Children

Google can’t seem to catch a break these days: the Internet search giant faces several antitrust investigations in Europe, and recent revelations that the US Federal Trade Commission should have pursued an antitrust case against the firm in this country have only damaged the reputations of both entities. But now Google is being accused, again, of deceptive business practices. And this time, the alleged targets of the deception are children.

A coalition of child advocacy and consumer groups has asked the Federal Trade Commission to investigate Google for the “unfair and deceptive” YouTube Kids app, which they say is nothing more than a vehicle for the sort of ads that were outlawed on television over 30 years ago. The coalition includes the Center for Digital Democracy, the Campaign for a Commercial-Free Childhood, the American Academy of Child and Adolescent Psychiatry, the Center for Science in the Public Interest, Children Now, the Consumer Federation of America, Consumer Watchdog, Consumers Union, Corporate Accountability International, and Public Citizen.

“It’s just one, long, uninterrupted ad,” says Jeff Chester of the Center for Digital Democracy. “It turns back the clock 30 years in terms of the role that advertising plays in kids programming.”

“YouTube Kids takes unfair advantage of the trusting nature and lack of experience of children,” said Angela Campbell of the Institute for Public Representation at Georgetown Law. Ms. Campbell advised the coalition to pursue legal action against Google.

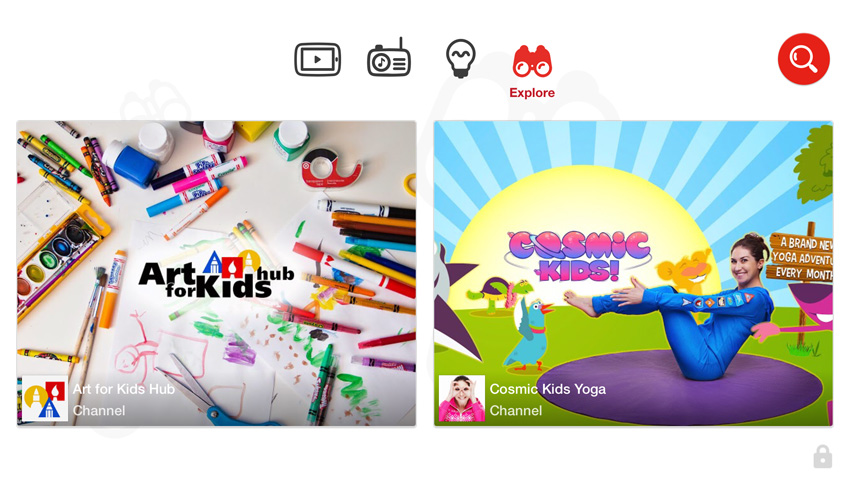

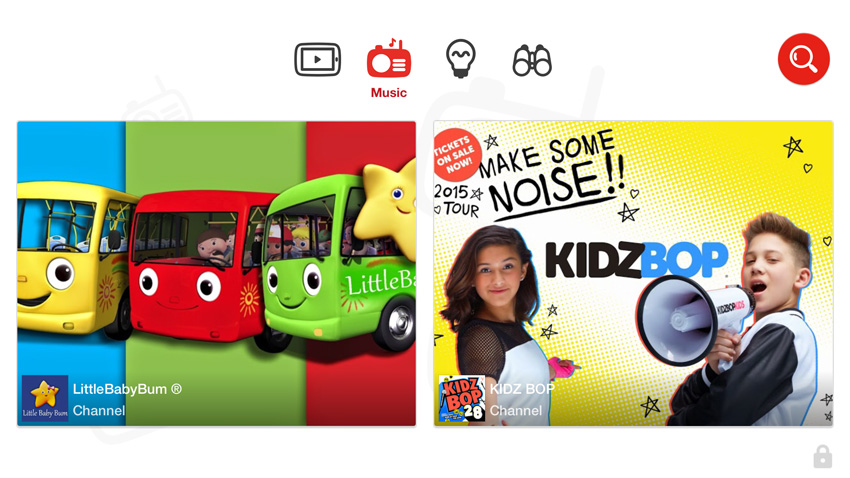

YouTube Kids is a mobile app for Android and iOS that purports to offer a family-friendly frontend to the often randy online video service. In many ways, YouTube today parallels TV, with a mix of adult and less controversial content. “Parents can rest a little easier knowing that videos in the YouTube Kids app are narrowed down to content appropriate for kids,” Google explained at the time of the app’s launch.

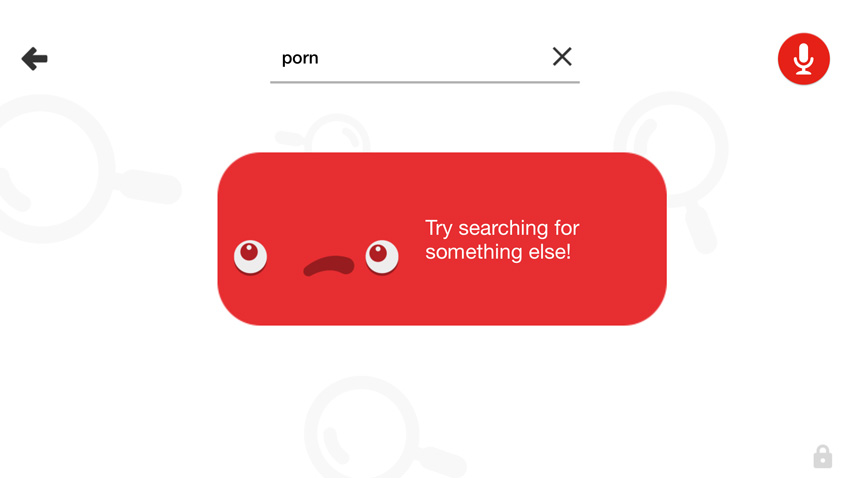

That claim is at least supportable. As a fully grown adult with teenaged children, I hadn’t tried YouTube Kids, which was released only recently. But curious about the app, I did so this morning and proceeded to perform a few simple searches to see what came up. I didn’t find anything troublesome, at least from an adult content perspective.

After dispensing with the first-run parental control request—so easy even a child could do it—I spent considerably more time futilely trying to turn off the annoying jingle that plays constantly in the background of the app. (I don’t think it’s possible. How annoying.) But searches for TV characters like “Barney” and “Elmo” turned up nothing but the intended content, which is indeed aimed at children. And searches for explicit terms brought up the desired blocking message.

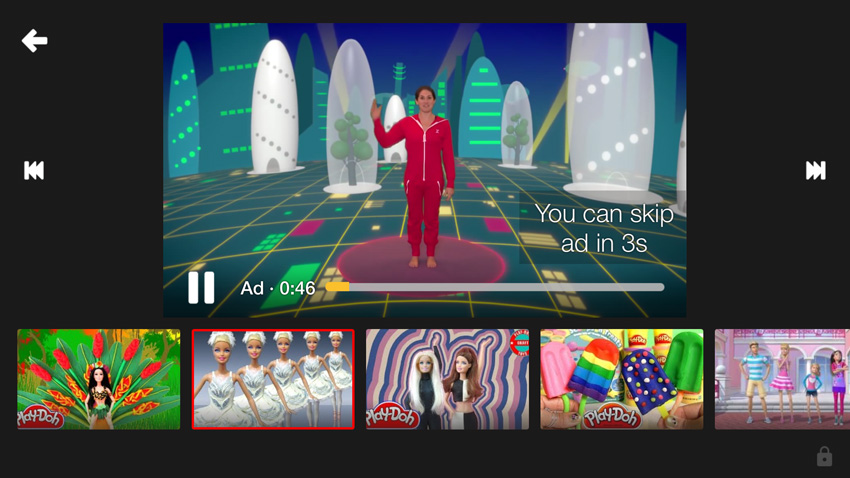

Of course, the central complaint from the coalition is that the YouTube Kids app is essentially just a frontend for advertising. And sure enough, many of the videos you see in the app are simply infomercial-style ads for products like candy and Play-Doh. Other videos contain explicit advertisements with the “you can skip this ad in xx seconds” link you see in many videos on the YouTube web site.

The videos I watched were also heavy with overt “subscribe” and “comment” requests, since the video makers earn money from YouTube page views. This is a questionable practice for videos aimed at kids: They contain advertising, are often reviews for products kids will want their parents to buy, and are in fact themselves commercial ventures for the video makers. (“We do toy reviews!” one creepy video series advertises with animated versions of the adult couple who narrate them. “We make videos for girls and boys!” Yikes.)

The issue, of course, is that while children’s programming on television is prevented by law from containing this kind of advertising, the same laws do not apply—yet—to digital entertainment services like YouTube. “YouTube Kids uses numerous tactics that have already been ruled illegal on TV,” University of Arizona professor of communications Dale Kunkel told PC World. “It’s astonishing that a major company like this would be so completely out of touch with the special protections typically afforded to children in electronic media.”

Google says it has done nothing wrong.

“When developing YouTube Kids, we consulted with numerous partners and child advocacy and privacy groups,” a Google statement notes. “We are always open to feedback on ways to improve the app.” Google also pointed out that the app does not collect personal information.