In “Managing SMB Multichannel in SMB 3.0,” I discussed managing SMB Multichannel and explained how SMB chose NICs for data transfer if there was more than one option between the SMB client and the SMB server. Sometimes SMB 3.0 might choose NICs that you didn’t want it to use or it might use too many NICs.

In this article, I’ll explain how to control SMB Multichannel and avoid those potential issues through design. I’ll also show you how to limit the bandwidth that SMB 3.0 can use when NICs are shared.

Controlling SMB Multichannel through Smart Design

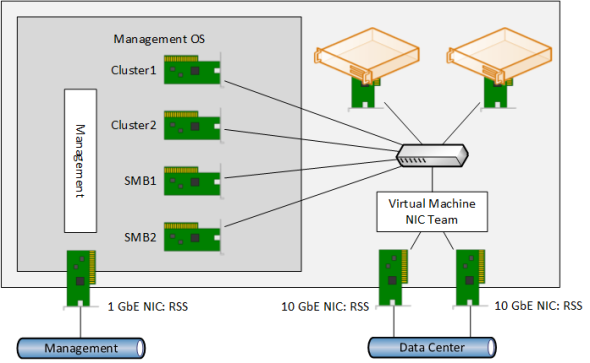

In the first article of this series, I showed a problematic example design. You can review this example design in the image below. The design was deployed by System Center Virtual Machine Manager (SCVMM) administrators who thought they needed a physical management NIC. When the hosts were tested, the administrators found that all SMB 3.0 storage traffic went through the management NIC instead of the intended management OS virtual NICs.

Note: Refer to the first article in this series to learn how SMB 3.0 chooses available paths from the SMB client and the SMB server, along with learning detailed information on NIC ordering.

Incorrectly designed SMB Multichannel storage. (Image: Aidan Finn)

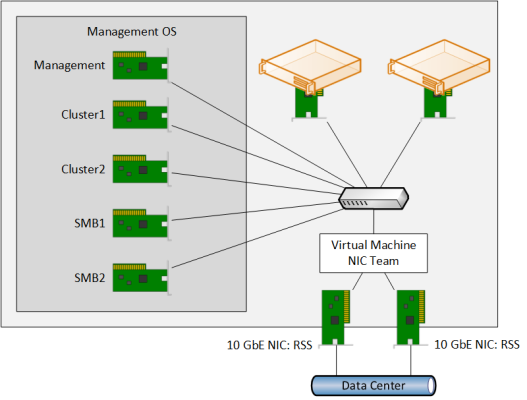

The best way to prevent SMB 3.0 from using the physical management NIC is to design the host correctly. When designing the logical switch, you can actually designate a virtual NIC as the management NIC. It’s a bit tricky to do in the SCVMM console, but it can be done. You can even dispense with the physical NIC that was being used for host management. So not only will you get higher bandwidth storage networking, but you will also have a cheaper and simpler design.

A Hyper-V host with converged management virtual NIC. (Image: Aidan Finn)
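Outside of SCVMM, the same idea can be sketched with the native Hyper-V cmdlets. This is only an illustration: the switch name ConvergedSwitch, the virtual NIC name Management, and VLAN 101 are placeholders of mine, not values from the design above.

```powershell
# Create a management OS virtual NIC on an existing converged virtual switch.
# "ConvergedSwitch" and "Management" are placeholder names.
Add-VMNetworkAdapter -ManagementOS -Name "Management" -SwitchName "ConvergedSwitch"

# Tag the virtual NIC with the management VLAN (101 is a placeholder).
Set-VMNetworkAdapterVlan -ManagementOS -VMNetworkAdapterName "Management" -Access -VlanId 101
```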

SMB 3.0 Multichannel Constraints

The above all-converged design is nice because it is much simpler and software defined, with fewer driver and firmware dependencies. But there is a catch! What will happen if the management, clustering, and SMB NICs of the hosts are all on the same VLANs as the file server’s respective management, clustering, and storage networks?

SMB 3.0 will determine that there are multiple paths between the hosts and the storage, namely every management OS virtual NIC, and it will use every single one of them! Yes — SMB Multichannel will aggregate the management, clustering, and SMB virtual NICs to access the file server’s storage!

We do not want this. We need to apply controls to guarantee cluster communications and management accessibility of a host, even if the storage resources are being maximized. This is why Microsoft gave us a control called SMB Multichannel constraints, which is managed using PowerShell. The New-SmbMultichannelConstraint cmdlet restricts which NICs the local server can use to access a designated file server. You can have different rules for different servers. SMB Multichannel is used in two ways in the above design:

- Storage: SMB1 and SMB2 are intended to be used for accessing SMB 3.0 storage.

- Redirected IO: Cluster1 and Cluster2 should be used whenever the Hyper-V cluster needs to redirect storage IO for whatever reason.

Using this requirement, I would create two SMB Multichannel constraints:

"Host2","Host3","Host4" | ForEach-Object { New-SmbMultichannelConstraint -ServerName $_ -InterfaceAlias Cluster1,Cluster2 }

New-SmbMultichannelConstraint -ServerName SOFS1 -InterfaceAlias SMB1,SMB2
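To confirm that the constraints took effect, something like the following can be run on the host where they were created. This is a minimal sketch: SOFS1 is the file server name from the example above, and I am assuming the constraint was created with the same name the host actually uses to reach the server.

```powershell
# List the constraints defined on this SMB client.
Get-SmbMultichannelConstraint

# With storage traffic flowing, show which client and server interfaces
# SMB Multichannel is actually using for connections to SOFS1.
Get-SmbMultichannelConnection -ServerName SOFS1

# To undo a rule, pipe it to Remove-SmbMultichannelConstraint
# (commented out on purpose; it may prompt for confirmation).
# Get-SmbMultichannelConstraint -ServerName SOFS1 | Remove-SmbMultichannelConstraint
```

If the connection list still shows the management or cluster virtual NICs, the server name on the constraint is the first thing I would check.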

SMB 3.0 Bandwidth Limits

SMB 3.0 has evolved quite a bit since the release of Windows Server 2012 (WS2012). Windows Server 2012 R2 (WS2012 R2) can use SMB 3.0 for storage, redirected IO, and Live Migration on 10 GbE or faster networks.

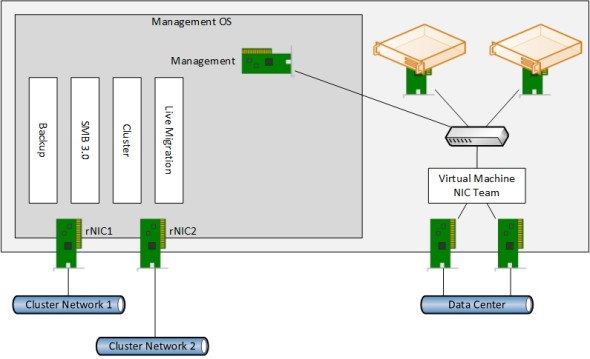

This convergence of roles on the SMB 3.0 protocol lets us introduce higher speed networks, possibly even with Remote Direct Memory Access (RDMA), to make the most of the investment by limiting the costs of top-of-rack (TOR) switch ports. A design that I really like is shown below:

A converged network design leveraging SMB 3.0. (Image: Aidan Finn)

Two non-teamed RDMA capable NICs (rNICs) are used for multiple roles:

- Cluster communications, including redirected IO

- Storage

- Backup

- SMB Live Migration

Normal QoS rules cannot stop Live Migration from starving storage of bandwidth, or vice versa, because all of this traffic is SMB 3.0 and QoS has no way to tell one kind of SMB 3.0 traffic from another. This is why we have Set-SmbBandwidthLimit, which requires the SMB Bandwidth Limit feature to be enabled in Server Manager. You can configure three types of traffic with this cmdlet:

- VirtualMachine: Hyper-V over SMB storage traffic.

- LiveMigration: Hyper-V Live Migration based on SMB.

- Default: All other types of SMB traffic.
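Server Manager is not the only way to enable the feature. A minimal sketch using PowerShell, assuming the feature name FS-SMBBW, which is what Get-WindowsFeature reports for SMB Bandwidth Limit on WS2012 R2:

```powershell
# Install the SMB Bandwidth Limit feature (run from an elevated prompt).
Install-WindowsFeature -Name FS-SMBBW

# Confirm the install state before using Set-SmbBandwidthLimit.
Get-WindowsFeature -Name FS-SMBBW
```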

With the following example, I can limit how much bandwidth Live Migration and SMB storage traffic can consume on the NICs:

Set-SmbBandwidthLimit -Category LiveMigration -BytesPerSecond 8GB

Set-SmbBandwidthLimit -Category VirtualMachine -BytesPerSecond 10GB
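It is worth remembering that -BytesPerSecond takes bytes, not bits, and that PowerShell treats the GB suffix as a binary multiple. The sketch below shows the arithmetic and how the limits might be reviewed or removed afterward; the categories are the ones from the example above.

```powershell
# 8GB expands to 8 * 1024 * 1024 * 1024 bytes.
8GB                      # 8589934592

# Show the limits currently defined on this server.
Get-SmbBandwidthLimit

# Remove a limit when it is no longer needed (commented out on purpose).
# Remove-SmbBandwidthLimit -Category LiveMigration
```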

Now you should have the ability to control which NICs are used by SMB 3.0, along with the ability to control the bandwidth consumption of the protocol in its various use cases.