Anatomy of a Microsoft Azure Virtual Machine

I find it important to understand the basic makeup of an Azure virtual machine. Knowing the basics not only helps you design and price virtual machines, but also helps you troubleshoot machines that go wrong. In this post, I’ll explain at a high level how a virtual machine is made up in Azure.

Understanding that Microsoft Azure is Hyper-V

The first thing to understand is that Azure is based on Windows Server 2012 Hyper-V, and no, I did not say Windows Server 2012 R2. Virtual machines in Azure are essentially the same as the ones you get with Hyper-V, and are conceptually less similar to what you get with vSphere.

If you understand Hyper-V, then some concepts will be familiar. For example, any virtual machine in Azure that will be a domain controller must have a data disk to store the Active Directory database and SYSVOL; veteran Hyper-V administrators will recognize this advice from Microsoft.

Virtual Disks

If you’ve ever worked with on-premises virtualization, then you will be familiar with the concept of virtual disks.

Instead of physical disks or LUNs, a virtual machine uses virtual hard disks. In the world of Hyper-V, we have the VHD and VHDX formats, and a disk can be fixed-size, dynamically expanding, or differencing. Azure supports only fixed-size disks in the VHD format.

Note that dynamically expanding disks are converted to fixed-size if you upload them manually from Hyper-V, and Azure Site Recovery converts VHDX to VHD on the fly during replication.
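Azure’s fixed-size-only rule can be checked before an upload. The sketch below reads the 512-byte footer that the published VHD format places at the end of a VHD file. It then decodes the footer’s Disk Type field, where 2 means fixed, 3 means dynamic, and 4 means differencing. The function names and the example file name are hypothetical. The 1 MiB alignment check on the virtual size reflects Azure’s upload requirement as I understand it, so treat it as an assumption. This is a pre-flight sanity check, not a replacement for Microsoft’s tooling.

```python
import os
import struct

FOOTER_SIZE = 512
MIB = 1024 * 1024
# Disk Type field values defined by the VHD footer format.
DISK_TYPES = {2: "fixed", 3: "dynamic", 4: "differencing"}


def read_vhd_footer(path: str) -> bytes:
    """Read the 512-byte footer stored at the very end of a VHD file."""
    with open(path, "rb") as f:
        f.seek(-FOOTER_SIZE, os.SEEK_END)
        return f.read(FOOTER_SIZE)


def vhd_disk_type(footer: bytes) -> str:
    """Decode the Disk Type field (big-endian uint32 at byte offset 60)."""
    if len(footer) != FOOTER_SIZE or footer[:8] != b"conectix":
        # VHDX files have no VHD footer, so they also fail this check.
        raise ValueError("no VHD footer found")
    (disk_type,) = struct.unpack_from(">I", footer, 60)
    return DISK_TYPES.get(disk_type, f"unknown ({disk_type})")


def azure_ready(footer: bytes) -> bool:
    """Fixed-size VHD whose virtual size (Current Size, offset 48) is whole MiB (assumed rule)."""
    (current_size,) = struct.unpack_from(">Q", footer, 48)
    return vhd_disk_type(footer) == "fixed" and current_size % MIB == 0
```

Typical use would be `azure_ready(read_vhd_footer("server01.vhd"))`. The file name is hypothetical. A dynamic or differencing disk returns `False` here and would need converting first.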

A virtual machine always has an OS disk; in Windows, that’s the C: drive. Any virtual machine that you deploy from the Azure Marketplace has a 127 GB C: drive, even though the Azure virtual machine pricing page appears to say that a Basic A2 has a 135 GB disk (I’ll explain that soon).

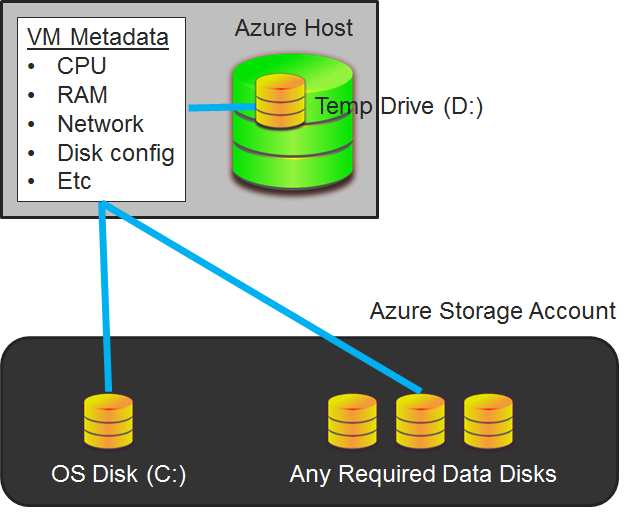

The OS disk of the virtual machine is stored on either Standard Storage (all series of virtual machines) or Premium Storage (DS- or GS-Series only). If you want to visualize it, think of this as storing a virtual machine’s disks on a SAN. In reality, there are no SANs in Azure. Instead, software-defined storage running on commodity hardware provides the storage that is shared between the Hyper-V hosts in Azure.

The virtual machine has a second drive. A Windows virtual machine labels this as the D: drive by default. This second disk is known as the temp drive. The disk resides on host-local storage instead of the shared Standard Storage or Premium Storage. The purpose of this disk is to store temporary data, which includes the paging file and cached data.

A benefit of using a host-local drive for the temp disk is low latency. The D-, DS-, G-, and GS-Series virtual machines go further and store the temp drive on SSDs in the host. This makes paging very fast and can speed up caching too.
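The storage rules in the last two paragraphs can be captured in a small lookup. This is a hypothetical helper that encodes only what this article states: Premium Storage for the DS- and GS-Series, and SSD temp drives for the D-, DS-, G-, and GS-Series. Series and rules change over time, so verify against current Azure documentation before relying on it.

```python
from dataclasses import dataclass

# Rules as stated in this article (hypothetical helper; verify against current docs).
PREMIUM_STORAGE_SERIES = {"DS", "GS"}
SSD_TEMP_DRIVE_SERIES = {"D", "DS", "G", "GS"}


@dataclass(frozen=True)
class StorageProfile:
    series: str
    disk_storage: tuple[str, ...]  # where OS and data disks can live
    temp_drive: str                # host-local media backing the D: drive


def storage_profile(series: str) -> StorageProfile:
    s = series.upper()
    tiers = ("Standard", "Premium") if s in PREMIUM_STORAGE_SERIES else ("Standard",)
    temp = "host-local SSD" if s in SSD_TEMP_DRIVE_SERIES else "host-local storage"
    return StorageProfile(s, tiers, temp)


for series in ("A", "D", "DS"):
    print(storage_profile(series))
```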

How big is the temp drive? That depends on the series and size of the virtual machine. The pricing pages for Azure virtual machines include a column called Disk Sizes, which implies the total disk capacity of the virtual machine. In fact, the Disk Sizes column shows only the size of the temp drive. Remember that the OS disk is 127 GB if you deployed the virtual machine from the Azure Marketplace.
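To see what a Marketplace virtual machine actually gives you, add the fixed 127 GB OS disk to the temp drive size from the Disk Sizes column. The sketch below does that with a hypothetical `disk_layout` helper, using the Basic A2’s 135 GB figure quoted earlier.

```python
MARKETPLACE_OS_DISK_GB = 127  # OS disk size for any Marketplace image


def disk_layout(temp_drive_gb: int, data_disks_gb: tuple[int, ...] = ()) -> dict[str, int]:
    """Split a VM's disks into persistent storage and the non-persistent temp drive."""
    persistent = MARKETPLACE_OS_DISK_GB + sum(data_disks_gb)
    return {
        "os_disk_gb": MARKETPLACE_OS_DISK_GB,
        "temp_drive_gb": temp_drive_gb,  # host-local; do not keep anything of value here
        "data_disks_gb": sum(data_disks_gb),
        "persistent_gb": persistent,     # OS disk plus data disks
        "total_gb": persistent + temp_drive_gb,
    }


# Basic A2: the pricing page's 135 GB is the temp drive, not the whole VM.
print(disk_layout(135))
# {'os_disk_gb': 127, 'temp_drive_gb': 135, 'data_disks_gb': 0, 'persistent_gb': 127, 'total_gb': 262}
```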

Microsoft warns you not to store anything of value on the temp drive, because you are not guaranteed the same temp drive between reboots of the virtual machine. If you want to store data in an Azure virtual machine, the correct place is a data disk, which is stored on persistent Standard Storage or Premium Storage.

Metadata

Have you ever browsed the storage of a vSphere or Hyper-V host? Before Windows Server 2016, each Hyper-V virtual machine has an XML file that describes its configuration; vSphere uses a VMX file for the same task.

These metadata files describe the configuration of the virtual machine: memory, CPU, network connections, storage controllers, and more. The storage controllers define which disks are connected to the virtual machine, and the metadata identifies the OS disk as the boot device. When you start a virtual machine, it consumes resources from the host and connects to the defined disks. An Azure virtual machine is built the same way: metadata that describes the machine, plus the disks it connects to.
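The split between configuration and disks can be modeled in a few lines. The classes below are a hypothetical stand-in for a metadata file, not Hyper-V’s or Azure’s actual schema. The metadata holds settings and *references* to disk files, and it is the metadata that determines the boot device.

```python
from dataclasses import dataclass, field


@dataclass
class DiskAttachment:
    """A virtual hard disk connected to one of the VM's storage controllers."""
    vhd_path: str           # where the disk file lives
    controller: str         # hypothetical controller label, e.g. "IDE 0"
    is_os_disk: bool = False


@dataclass
class VMConfig:
    """Hypothetical stand-in for a VM's metadata: settings plus references to disks."""
    name: str
    cpu_cores: int
    memory_mb: int
    network: str
    disks: list[DiskAttachment] = field(default_factory=list)

    @property
    def boot_device(self) -> DiskAttachment:
        # The metadata, not the disk itself, records which disk the VM boots from.
        for disk in self.disks:
            if disk.is_os_disk:
                return disk
        raise ValueError(f"{self.name} has no OS disk to boot from")


vm = VMConfig(
    name="web01",
    cpu_cores=2,
    memory_mb=4096,
    network="Virtual Network A",
    disks=[
        DiskAttachment("web01-os.vhd", "IDE 0", is_os_disk=True),
        DiskAttachment("web01-data.vhd", "SCSI 0"),
    ],
)
print(vm.boot_device.vhd_path)  # web01-os.vhd
```

Because the disks are only referenced, deleting a `VMConfig` object in this model leaves the VHD files untouched. That property is what the technique in the last section relies on.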

Pricing a Virtual Machine in Microsoft Azure

There are two primary costs to a virtual machine in Azure:

- Per-Minute Running: You are charged for the time that a virtual machine is running. If you don’t need a virtual machine 24 x 7, you can cut its running charge by 50 percent by using Azure Automation to shut it down for 12 hours every night.

- Storage: You are charged for the Standard Storage consumed or the Premium Storage provisioned, whether the virtual machine is running or not.
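The two charges can be sketched with made-up rates; these are not Azure prices. The sketch shows that a nightly 12-hour shutdown halves the running charge but leaves the storage charge unchanged. The function name and parameters are hypothetical, and `storage_gb` stands for whatever storage quantity you are billed for.

```python
def monthly_cost(rate_per_hour: float, hours_running_per_day: float,
                 storage_gb: float, storage_rate_per_gb_month: float,
                 days: int = 30) -> dict[str, float]:
    """Estimate a month's running (per-minute) and storage charges."""
    minutes_running = hours_running_per_day * 60 * days
    compute = rate_per_hour / 60 * minutes_running      # billed per running minute
    storage = storage_gb * storage_rate_per_gb_month    # billed whether running or not
    return {
        "compute": round(compute, 2),
        "storage": round(storage, 2),
        "total": round(compute + storage, 2),
    }


# Hypothetical prices, for illustration only.
always_on = monthly_cost(0.10, 24, 127, 0.05)
nightly_off = monthly_cost(0.10, 12, 127, 0.05)
print(always_on)
print(nightly_off)
```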

Removing Metadata

Many virtualization administrators have deleted a virtual machine while keeping its disks, then created a new virtual machine and attached the old disks. This is a long-standing technique for recovering from corrupted metadata.

We can do something similar in Azure. Imagine that you created a virtual machine connected to “Virtual Network A.” You spend ages working on the machine and then realize that it should have been connected to “Virtual Network B.” You cannot change the virtual network connection of an existing Azure virtual machine. What you can do is delete the Azure virtual machine while retaining its virtual hard disks. Then create a new virtual machine from the existing OS disk and data disks, and select the correct virtual network.
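The delete-and-recreate workflow can be sketched against a toy model. `Subscription`, `VirtualMachine`, and `move_to_network` are hypothetical names that do not correspond to any Azure SDK or PowerShell API. In this model, VM records (metadata) and VHDs (storage) live in separate collections, and a disk cannot be attached to two virtual machines at once.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class VirtualMachine:
    """Hypothetical VM record: metadata that only references its disks."""
    name: str
    virtual_network: str
    os_disk: str
    data_disks: tuple[str, ...] = ()

    def disk_set(self) -> set[str]:
        return {self.os_disk, *self.data_disks}


class Subscription:
    """Hypothetical in-memory model: VM metadata and VHDs are stored separately."""

    def __init__(self) -> None:
        self.vms: dict[str, VirtualMachine] = {}
        self.vhds: set[str] = set()

    def create_vm(self, vm: VirtualMachine) -> None:
        missing = vm.disk_set() - self.vhds
        if missing:
            raise ValueError(f"VHDs not found: {sorted(missing)}")
        in_use = set().union(*(other.disk_set() for other in self.vms.values()))
        if vm.disk_set() & in_use:
            raise ValueError("a disk is still attached to another VM")
        self.vms[vm.name] = vm

    def delete_vm(self, name: str, keep_disks: bool = True) -> VirtualMachine:
        vm = self.vms.pop(name)
        if not keep_disks:
            self.vhds -= vm.disk_set()
        return vm


def move_to_network(sub: Subscription, name: str, new_network: str) -> VirtualMachine:
    """Delete the VM's metadata, keep its disks, and recreate it on another network."""
    old = sub.delete_vm(name, keep_disks=True)
    new = replace(old, virtual_network=new_network)
    sub.create_vm(new)
    return new


sub = Subscription()
sub.vhds |= {"app01-os.vhd", "app01-data.vhd"}
sub.create_vm(VirtualMachine("app01", "Virtual Network A", "app01-os.vhd", ("app01-data.vhd",)))
print(move_to_network(sub, "app01", "Virtual Network B").virtual_network)  # Virtual Network B
```

In this model, creating the second virtual machine before deleting the first fails because its disks are still attached. That mirrors why the old virtual machine has to go first.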

This is a classic example of why understanding the difference between metadata and disks is important, and of how Azure virtual machines aren’t all that different from what we’ve been working with on-premises for many years.