New Features in Windows Server 2012 R2 Failover Clustering

Failover clustering is the feature in Windows Server that gives us high availability (HA): it allows us to make single-instance services highly available. Possible implementations include clusters of Hyper-V hosts and traditional active/passive SQL Server clusters. Today I’ll go over a number of improvements that have been added to failover clustering in Windows Server 2012 R2.

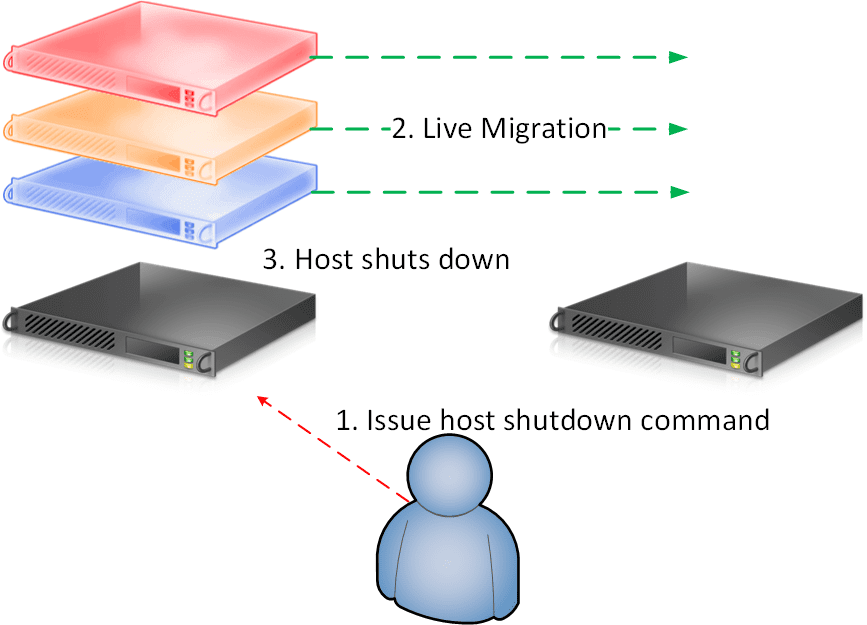

VM Drain on Host Shutdown

In Windows Server 2012, the correct process to shut down a host was to (a) pause the host, which would cause the VMs to move via Live Migration (or Quick Migration for low priority VMs by default) and (b) shut down the host. Some would just shut down the host and get an unexpected and unpleasant result – all of the VMs would use Quick Migration to move to other hosts in the cluster. In Windows Server 2012 R2, a clustered node will use Live Migration to drain the VMs before shutting down. This can be slower than Quick Migration on densely populated hosts, but it gives administrators the experience they are expecting. Failback will not happen by default, but it can be configured.

Proactive Server Service Health Detection

Before moving a VM, the cluster will check the health of the destination host.

Proactive VM Network Health Detection

A cluster will check the health of a virtual switch network connection on a destination host before moving virtual machines to that host. This will prevent movement of VMs to a host that cannot connect services to the network.

CSV Balancing

A cluster will automatically balance ownership of CSVs across all nodes in the cluster.

Improved Logging

There is much more recorded information when adding or removing a cluster node, and when the state of a clustered resource changes.

CSV Storage Feature Support

A number of storage features are now supported by CSV:

- ReFS: A resilient next-generation file system that has built-in protection against corruption and bit rot (thus it does not use CHKDSK).

- Tiered Storage Spaces: You can create a CSV from a virtual disk on Storage Spaces. The storage pool can include two speeds of drive – SSD and HDD.

- Storage Spaces Write-Back Cache: Hyper-V requires write-through to persistent storage. Instead of write-caching to RAM, a storage pool can use Write-Back Cache to write to an SSD tier and demote cold data to the HDD tier.

- Parity virtual disks: This form of virtual disk fault tolerance was unsupported for CSV in WS2012, but it is supported in WS2012 R2.

- Deduplication: You can enable deduplication on volumes that contain just operating system virtual hard disks, such as VDI. This disables the use of CSV Block Cache, but deduplication actually provides faster boot up times because of the nature of the read process.

Increased Network Heartbeat Resiliency

By default, a failover cluster (of any kind) in WS2012 will perform a heartbeat once per second, with a five-second timeout. Some felt that occasional glitches in networking could lead to a heavy response: automated failover of virtual machines that were working just fine. In WS2012 R2, a failover cluster will change this when you first add a VM as a highly available resource. The cluster heartbeat timeout will be automatically increased to ten seconds, meaning that brief issues with packet loss on the cluster networks will not fail over virtual machines. This Hyper-V cluster timeout will be 20 seconds when the cluster nodes span more than one subnet.
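The timeout figures above can be read as "heartbeat interval multiplied by the number of missed heartbeats tolerated". Here is a minimal Python sketch of that arithmetic. The names `HeartbeatSettings` and `default_heartbeat` are hypothetical, and I am assuming that clusters without VMs keep the five-second default in WS2012 R2; this only models the numbers in this post, it does not query a real cluster.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HeartbeatSettings:
    """Illustrative model: interval between heartbeats and tolerated misses."""
    delay_ms: int    # time between heartbeats, in milliseconds
    threshold: int   # consecutive missed heartbeats before a node is treated as down

    @property
    def timeout_seconds(self) -> float:
        # Timeout = interval x number of tolerated misses.
        return self.delay_ms * self.threshold / 1000


def default_heartbeat(hosts_vms: bool, cross_subnet: bool) -> HeartbeatSettings:
    """Defaults as described in the post for WS2012 R2 (assumption: other
    clusters keep the one-second heartbeat with a five-second timeout)."""
    if hosts_vms:
        return HeartbeatSettings(delay_ms=1000, threshold=20 if cross_subnet else 10)
    return HeartbeatSettings(delay_ms=1000, threshold=5)


if __name__ == "__main__":
    for hosts_vms, cross_subnet in [(False, False), (True, False), (True, True)]:
        settings = default_heartbeat(hosts_vms, cross_subnet)
        print(hosts_vms, cross_subnet, settings.timeout_seconds)
```

In other words, a Hyper-V cluster on a single subnet can miss roughly ten one-second heartbeats before failover is triggered, rather than five.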

Cluster Validation

The validation process has been improved in several ways:

- The validation will now ping each cluster node using the cluster protocols across the various networks. This should give better deployments and diagnostics.

- Storage validation is faster.

- Replicated storage (multi-site clusters) is identified and tested.

- You can select specific disks for storage validation.

- Specific tests for Hyper-V are run by the wizard.

Global Update Manager (GUM) Updates Process

The GUM manages the process of synchronizing settings across all nodes in a cluster. Changes are sent out to all nodes; prior to WS2012 R2, every node had to acknowledge a change before it was implemented. In very large clusters (up to 64 nodes) or multi-site clusters, congestion on the cluster network could interfere with this change process. In WS2012 R2, the GUM requires only a majority of nodes to acknowledge the update. Updates to application clusters are typically uncommon, so this change is implemented only in Hyper-V clusters, that is, when you add the first virtual machine resource to a cluster of Hyper-V nodes.
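The difference between the two acknowledgement rules is easy to see in code. This is a toy Python model, not the real GUM: `update_committed` is a hypothetical function, and I am assuming "majority" means strictly more than half of the nodes.

```python
def update_committed(acks: int, total_nodes: int, hyper_v_cluster: bool) -> bool:
    """Decide whether a cluster-wide update can be applied.

    Toy model of the rules described in the post:
    - Hyper-V cluster on WS2012 R2: a majority of nodes must acknowledge.
    - Otherwise: every node must acknowledge.
    """
    if not 0 <= acks <= total_nodes:
        raise ValueError("acks must be between 0 and total_nodes")
    if hyper_v_cluster:
        # Assumption: majority = strictly more than half of the nodes.
        return acks > total_nodes // 2
    return acks == total_nodes


if __name__ == "__main__":
    # A 64-node cluster where one node is slow to respond.
    print(update_committed(63, 64, hyper_v_cluster=False))  # all nodes required
    print(update_committed(63, 64, hyper_v_cluster=True))   # majority is enough
```

With the majority rule, a handful of slow or congested nodes no longer holds up a change for the whole cluster.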

Dynamic Witness

In all previous versions of clustering, you had to manually change the use of a witness based on the number of nodes (odd or even) in the cluster to break the quorum vote (used to determine if a fragment of the cluster can continue operating). Now with WS2012 R2 you will always configure a witness. The cluster will decide whether to use the witness based on the odd/even count of nodes, to ensure that there is always an odd number of quorum votes.
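The odd/even rule can be sketched in a few lines of Python. The function names are hypothetical and the model is deliberately simple: it only captures the idea that the witness gets a vote when the number of voting nodes is even.

```python
def witness_has_vote(voting_nodes: int) -> bool:
    """The witness votes only when the node count is even, which keeps the total odd."""
    if voting_nodes < 1:
        raise ValueError("a cluster needs at least one voting node")
    return voting_nodes % 2 == 0


def total_quorum_votes(voting_nodes: int) -> int:
    """Node votes plus the witness vote, if the witness is currently counted."""
    return voting_nodes + (1 if witness_has_vote(voting_nodes) else 0)


if __name__ == "__main__":
    for nodes in (3, 4, 5, 6):
        print(nodes, witness_has_vote(nodes), total_quorum_votes(nodes))
```

Whatever the node count, the total comes out odd, so a clean split can never produce a tied vote.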

Multi-Site Quorum Improvements

Multi-site clusters are often split 50/50 across two sites. This can cause an issue when half of the cluster goes offline. WS2012 R2 clusters can survive losing 50% of the cluster at once. One site (the non-authoritative site) will automatically lose, as determined by the LowerQuorumPriorityNodeID cluster quorum property. This will be useful where the company needs a multi-site cluster and cannot deploy a file-share witness in a third site.
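To illustrate the tie-breaker, here is a simplified Python model. It assumes the cluster removes the quorum vote of the node identified by LowerQuorumPriorityNodeID before the split happens, so that the other site is left with the majority. The function name, site names, and node IDs are all made up for the example, and the real quorum logic is more involved than this.

```python
from typing import Dict, List, Optional


def surviving_site(sites: Dict[str, List[int]], lower_priority_node_id: int) -> Optional[str]:
    """Return the site that keeps quorum after a clean split between sites.

    Simplified assumption: the node named by lower_priority_node_id has
    already lost its vote, so its site holds one vote fewer.
    """
    votes = {
        site: sum(1 for node_id in node_ids if node_id != lower_priority_node_id)
        for site, node_ids in sites.items()
    }
    total = sum(votes.values())
    for site, count in votes.items():
        if 2 * count > total:  # strict majority of the remaining votes
            return site
    return None  # tied vote: neither site has quorum


if __name__ == "__main__":
    cluster = {"SiteA": [1, 2], "SiteB": [3, 4]}  # hypothetical 50/50 layout
    print(surviving_site(cluster, lower_priority_node_id=4))
```

In this layout, marking node 4 as the lower-priority node means SiteB is the site that loses when the link between the sites fails.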

A new ForceQuorum setting will allow you to designate one site in a multi-site cluster as authoritative for when a split-brain situation happens, that is, when communications break down between sites and the cluster nodes must decide which site should remain active.

SQL Server Cluster Simplification

A SQL Server cluster can be deployed without the use of Active Directory computer objects. This assumes the SQL Server databases will use SQL authentication, and not Windows authentication.

Shared VHDX

A guest cluster allows you to make services highly available within the guest OS of two or more virtual machines. These virtual machines typically need access to some kind of shared storage. Initially this required complicated networking for iSCSI communications. Windows Server 2012 allows us to use SMB 3.0 or Virtual Fibre Channel. This was a great step forward, but it introduces a challenge in cloud computing: the boundary between the virtual layer (the tenant) and the physical layer (the service provider) is blurred. This blurring is particularly challenging because facilitating shared storage with iSCSI, SMB 3.0, or Fibre Channel requires infrastructure engineering and therefore contradicts the self-service requirement of a cloud.

Windows Server 2012 R2 adds a virtual alternative to offer shared storage. Up to 8 virtual machines can be connected to shared VHDX files. This gives us an all-virtualization solution that is suitable for self-service in a cloud. The shared VHDX must be stored on an SMB 3.0 share or a CSV. The hosts that are running the virtual machines must be clustered. The hosts do not necessarily have to be in the same cluster; that scenario requires storing the shared VHDX file(s) on an SMB 3.0 share that is used by all of the involved host clusters.
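The shared VHDX requirements above can be expressed as a small pre-flight check. This Python sketch is purely illustrative: `GuestClusterNode`, `shared_vhdx_problems`, and the storage labels `"smb3"` and `"csv"` are names I have invented for the example, not part of any Hyper-V API.

```python
from dataclasses import dataclass
from typing import List, Optional

MAX_VMS_PER_SHARED_VHDX = 8          # "Up to 8 virtual machines"
SUPPORTED_STORAGE = {"smb3", "csv"}  # SMB 3.0 share or CSV


@dataclass
class GuestClusterNode:
    vm_name: str
    host_cluster: Optional[str]  # cluster of the host running the VM; None if not clustered


def shared_vhdx_problems(storage: str, nodes: List[GuestClusterNode]) -> List[str]:
    """Return a list of rule violations for a planned shared VHDX (empty if none)."""
    problems = []
    if storage not in SUPPORTED_STORAGE:
        problems.append("shared VHDX must be stored on an SMB 3.0 share or a CSV")
    if len(nodes) > MAX_VMS_PER_SHARED_VHDX:
        problems.append("no more than 8 virtual machines can share the VHDX")
    if any(node.host_cluster is None for node in nodes):
        problems.append("every host running a guest cluster VM must be clustered")
    host_clusters = {n.host_cluster for n in nodes if n.host_cluster is not None}
    if len(host_clusters) > 1 and storage != "smb3":
        problems.append("spanning host clusters requires a common SMB 3.0 share")
    return problems


if __name__ == "__main__":
    plan = [GuestClusterNode("sql1", "HVC1"), GuestClusterNode("sql2", "HVC2")]
    print(shared_vhdx_problems("csv", plan))
    print(shared_vhdx_problems("smb3", plan))
```

The example plan places the two guest cluster VMs on different host clusters, so it only passes when the shared VHDX lives on an SMB 3.0 share.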