Botched Microsoft Update to Teams Retention Causes Customer Heartburn

No Data Compromised but Bad Day for Teams Retention

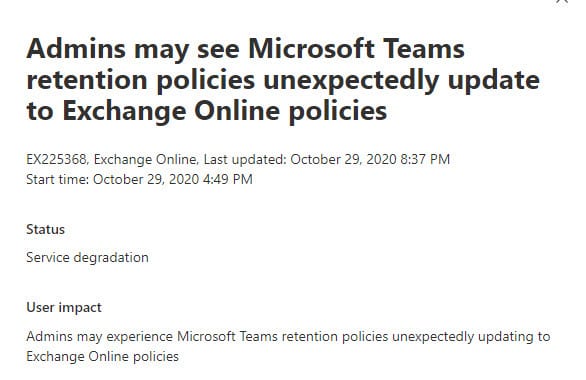

Alarm bells went off on Thursday, October 29 when administrators in several tenants noticed that retention policies created to process Teams chat messages were apparently applied to Exchange mailboxes. Microsoft subsequently issued incident EX225368 “Admins may see Microsoft Teams retention policies unexpectedly update to Exchange Online policies.” The incident started at 16:49 UTC and finished at 23:15 UTC after Microsoft had deployed a fix.

Microsoft said that “a recent service update was causing Microsoft Teams retention policies to unexpectedly update to Exchange Online policies.” In other words, someone screwed up by making a code change which apparently caused the retention policies for Teams to apply to the Exchange Online mailboxes of the accounts within the scope of those policies.

Oddly, incident TM225382 at roughly the same time reported that “Admins are unable to modify or add Microsoft Teams retention policies within the Microsoft Teams admin center.” Leaving aside the fact that retention policies are managed through the Microsoft 365 compliance center, the incident described an error apparently related to a non-existing entityMetadata command parameter. Overall, October 29 was a bad day for Teams retention processing.

Teams and Other Workloads Don’t Mix

Retention policies separate Teams chats and channel conversations from other workloads. In other words, you can’t create a single all-embracing policy covering Exchange Online, SharePoint Online, OneDrive for Business, and Teams. There are good reasons for this, including:

- Some organizations use very short retention periods for chats (one day is the minimum). While possible, it’s uncommon to apply such a short retention period to email and files.

- Retention processing isn’t done against the actual Teams data store. Instead, Teams uses the Exchange Managed Folder Assistant (MFA) to process compliance records captured by the Microsoft 365 substrate and stored in user and group mailboxes. Microsoft recently changed the location of the compliance records to a folder in the non-IPM part of mailboxes. The MFA applies the Teams retention policies to the compliance records and synchronizes any deletions back to Teams for application against data in its Azure Cosmos DB store.

Microsoft chose to leverage the tried and trusted Exchange Online MFA for Teams retention processing because it avoided the need to create a new framework for Teams. So far, the mechanism has worked well.

It’s easy to see how a bug which caused the MFA to apply a restrictive 1-day Teams deletion policy to user mailboxes could wreak havoc. Although slow because of the amount of processing needed to remove almost every item in every mailbox, MFA would eventually finish, and everyone would have a very clean mailbox. The chaos that can flow from an error in a retention policy was illustrated in August when an incorrect policy update resulted in the loss of personal chat data for 145,000 users in the KPMG tenant.
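To put a rough number on that scenario, here is a minimal sketch of an age-based delete rule. It is a simplified model and not how the MFA is implemented. The function `items_to_delete` and the sample mailbox (one item per day for a year) are hypothetical and exist only for illustration.

```python
from datetime import datetime, timedelta, timezone


def items_to_delete(item_dates, retention_days, now):
    """Return the items older than the retention period (simplified model)."""
    cutoff = now - timedelta(days=retention_days)
    return [d for d in item_dates if d < cutoff]


# Hypothetical mailbox: one item received per day over the past year.
now = datetime(2020, 10, 29, 17, 0, tzinfo=timezone.utc)
mailbox = [now - timedelta(days=n) for n in range(365)]

# Apply a 1-day delete policy, as a Teams chat policy might specify.
doomed = items_to_delete(mailbox, retention_days=1, now=now)
print(f"{len(doomed)} of {len(mailbox)} items fall outside a 1-day retention period")
```

Under these assumptions, nearly everything in the mailbox qualifies for removal, which is why a Teams-style retention period is so dangerous if it is ever applied to email.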

GUI Error is Root Cause

The root causes reported in EX225368 and TM225382 both point to the problem being a GUI issue: “A recently deployed Exchange Online Protection (EOP) update contained an issue that caused Teams delete-specific retention polices updated on or after October 27, 2020 to be misclassified in the admin center User Interface (UI) and show up as Exchange Online delete-specific retention policies.”

In other words, the Compliance Center displayed a Teams retention policy as if it applied to Exchange Online, but nothing happened to make the MFA process mailboxes erroneously. Microsoft closed off with the flat statement that “We are reviewing our update and QA procedures to better understand how this issue was missed to help prevent similar impact in the future. This is the final update for the event.”

Poor Microsoft Performance

What is clear is that this incident demonstrated poor performance in Microsoft quality assurance and testing. Even if it’s just a GUI problem, how a bug like this managed to get through testing, assuming testing happened, is inexplicable. The dark side of cloud systems is the loss of control organizations have over the systems they depend on. The implicit understanding is that Microsoft will ensure that everything works right inside Microsoft 365 and that it has the people, tools, and resources to live up to that commitment. Seeing something like this sneak through into production takes some of the gloss off Microsoft’s vaunted DevOps approach to Microsoft 365 operations. It also raises the question of whether Microsoft pushes updates into production faster than it should.

There’s no excuse for a bug which potentially compromises user data. Proponents of third-party backup and archiving solutions are already making the most of the problem to point out just how valuable an independent store is.

It seems unlikely that any user data was affected by this problem, but if a retention policy did go haywire and remove everything more than one day old from user mailboxes, could you really get everything back? How to restore mailboxes for an entire Office 365 tenant using inbuilt or third-party tools is a debate worth having. I’ll leave that to another day.