The Proximal Data AutoCache is a product designed to boost the read performance of virtualization storage for Hyper-V and vSphere. I first found out about this product at the Petri IT Knowledgebase author meet-and-greet with readers at TechEd North America 2014, where I met some folks from Proximal Data. They described AutoCache, and I was intrigued. I was offered a trial of the software, so I decided to play with it a bit. What follows is my review of the product.

What Does the Proximal Data AutoCache Offer?

Normally your hypervisor (vSphere or Hyper-V) reads virtual machine files directly from your storage. There might be some form of caching in your storage path, such as a cache on your storage controller, but it is usually relatively small and offers minimal benefit for read performance. Or maybe you have implemented tiered storage. That requires lots of SSD capacity in your storage system, and that SSD capacity sits at the far end of a network connection.

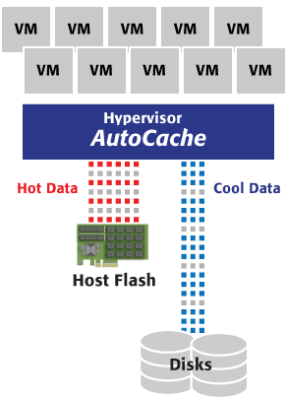

Proximal Data AutoCache offers something different. You place some form of high-speed storage, such as an SSD, into your host servers, and this storage becomes a local read cache. A small AutoCache driver is installed on your hosts, and it splits the I/O of your virtual machines. Writes go directly to your storage as usual. Reads, however, can be handled in a few ways, including:

- Data read from the remote storage is used to populate the read cache

- Data is read from the read cache instead of the remote storage
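The read/write split described above can be sketched in a few lines. This is a minimal, hypothetical model of a write-through read cache with least-recently-used eviction, written only to illustrate the idea; it is not Proximal Data's code. The class name `ReadCache`, the dict standing in for remote storage, and the choice to invalidate a cached block when it is written are all assumptions.

```python
from collections import OrderedDict


class ReadCache:
    """Hypothetical host-side read cache (illustrative only, not AutoCache itself).

    Writes go straight to the backing store; reads are served locally on a hit
    and populate the cache on a miss. Least recently used blocks are evicted.
    """

    def __init__(self, backing_store, capacity_blocks):
        self.backing = backing_store      # dict-like stand-in for remote storage: block -> bytes
        self.capacity = capacity_blocks   # number of blocks the local SSD can hold
        self.cache = OrderedDict()        # block -> bytes, least recently used first
        self.hits = 0
        self.misses = 0

    def read(self, block):
        if block in self.cache:
            # Hit: serve from the local cache instead of the remote storage.
            self.cache.move_to_end(block)
            self.hits += 1
            return self.cache[block]
        # Miss: read from remote storage and use the data to populate the cache.
        self.misses += 1
        data = self.backing[block]
        self.cache[block] = data
        if len(self.cache) > self.capacity:
            self.cache.popitem(last=False)  # evict the least recently used block
        return data

    def write(self, block, data):
        # Assumption: writes pass straight through to remote storage and any
        # cached copy is dropped so later reads cannot return stale data.
        self.backing[block] = data
        self.cache.pop(block, None)


storage = {n: bytes([n]) * 4096 for n in range(8)}  # eight 4 KB blocks
cache = ReadCache(storage, capacity_blocks=4)
cache.read(1)            # miss: fetched from storage and cached
cache.read(1)            # hit: served locally
cache.write(1, b"new")   # write-through; cached copy invalidated
cache.read(1)            # miss again, returns b"new"
print(cache.hits, cache.misses)  # 1 2
```

Invalidating on write is the simplest way to keep a read-only cache coherent; a real product might instead update the cached copy, which this sketch does not attempt to model.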

A visualization of what AutoCache does. (Image: Proximal Data)

This AutoCache-managed local cache should offer two things:

- Increased read IOPS: The speed of the local cache storage, such as an SSD, should offer vastly increased read speeds for commonly accessed data.

- Reduced latency: No matter what you do with storage networking, a local SSD will always offer faster access than remote storage. I haven’t tested any of the extreme “SSD in a DIMM slot” options, but the speeds they offer might be incredible.

Installing AutoCache

The software download is tiny; I think it was around 6 MB. The driver is installed on each host that will be licensed for AutoCache, and a fast drive, such as an SSD, is installed in each licensed host. You then install an add-on to System Center Virtual Machine Manager (SCVMM), and you use the SCVMM console to configure and manage AutoCache. The add-on places some extra icons in the SCVMM console ribbon to provide this functionality.

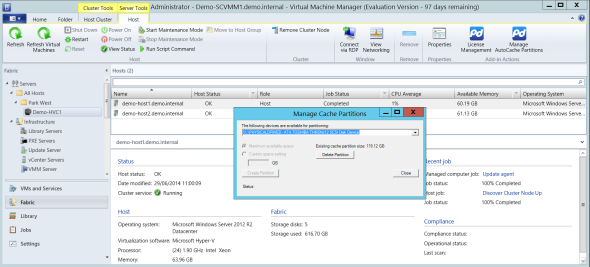

Don’t worry; Proximal Data’s documentation provides clear step-by-step instructions, and there’s little effort required to get started. There are two basic operations you need to do in the Fabric view of SCVMM:

- License AutoCache

- Configure cache drives on each host

After that, the magic happens all by itself. You can monitor the performance of AutoCache using a live performance widget in SCVMM.

Configuring a cache partition in Proximal Data AutoCache. (Image: Aidan Finn)

Testing AutoCache

In theory, AutoCache sounded great, but I wanted to test it out. I don’t have a production workload, but I do have a pretty nice lab at work.

My AutoCache Test Lab

I decided to install AutoCache on one of two Windows Server 2012 R2 Hyper-V hosts in my lab. Each host is a Dell R420. The hosts are connected by dual iWARP (10 GbE RDMA) network connections to a small Scale-Out File Server (SOFS). One host, Host1, was configured with AutoCache. A consumer-grade 128 GB Toshiba Q Series Pro SATA SSD (554 MB/s read and 512 MB/s write) was inserted into Host1 using a third-party 3.5″ to 2.5″ caddy conversion kit, and an AutoCache cache partition was created in SCVMM. The unmodified Host2 allowed me to compare performance statistics with and without AutoCache.

I deployed a single Generation 1 virtual machine in which to run the stress tests. The guest OS was Windows Server 2012 R2, and I added a second, fixed-size VHDX on the SCSI controller to act as a data drive. SQLIO was installed in the guest OS; it’s a well-known tool that creates a realistic and configurable workload. My stress test used a 10 GB file on the data drive. The test was a script that ran a series of 10 sequential jobs, each lasting two minutes and performing 4K random reads from the test file. I chose random reads because they seemed more realistic, and reads are what AutoCache is designed to improve.
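A test script like the one described above might look roughly like the following Python sketch. The SQLIO switches used (`-kR` for reads, `-frandom`, `-b` for block size in KB, `-s` for duration in seconds, `-t` for threads, `-o` for outstanding I/Os per thread, `-LS` to capture latency, `-BN` to disable buffering) are standard SQLIO options, but the thread count, outstanding I/O count, and test file path are placeholders I have assumed, because the original settings aren't stated. By default it only prints the command lines.

```python
import shutil
import subprocess

# From the article: 10 sequential jobs, 2 minutes each, 4K random reads.
JOBS = 10
DURATION_S = 120
BLOCK_KB = 4

# Hypothetical placeholders: the real thread count, queue depth and path are not stated.
TEST_FILE = r"E:\sqlio_test.dat"  # assumed 10 GB test file on the data drive
THREADS = 4
OUTSTANDING = 8


def sqlio_args(test_file=TEST_FILE):
    """Build one SQLIO command line for a random-read job."""
    return [
        "sqlio.exe",
        "-kR",                # read operations
        "-frandom",           # random access pattern
        f"-b{BLOCK_KB}",      # block size in KB
        f"-s{DURATION_S}",    # duration in seconds
        f"-t{THREADS}",       # number of threads
        f"-o{OUTSTANDING}",   # outstanding I/Os per thread
        "-LS",                # capture latency using the system timer
        "-BN",                # no buffering
        test_file,
    ]


def run_jobs(dry_run=True):
    """Run the jobs back to back and return SQLIO's text output for each one."""
    outputs = []
    for job in range(1, JOBS + 1):
        args = sqlio_args()
        if dry_run:
            print(f"job {job}: {' '.join(args)}")
            continue
        if shutil.which(args[0]) is None:
            raise FileNotFoundError(f"{args[0]} not found on PATH")
        result = subprocess.run(args, capture_output=True, text=True, check=True)
        outputs.append(result.stdout)
    return outputs


run_jobs()  # dry run: prints the 10 command lines only
```

Calling `run_jobs(dry_run=False)` inside the guest would execute the jobs and collect SQLIO's output for later comparison between hosts.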

My Hyper-V cluster’s back-end storage is a SOFS. The SOFS is made up of two HP DL360 G7 servers, each connected to a single DataOn DNS-1640 JBOD via dual 6 Gbps SAS cables (2 x 6 Gbps x 4 channels = 48 Gbps of storage throughput per server). The Storage Spaces virtual disk is tiered with 8 x Seagate Savvio 10K.5 600 GB drives, 4 x SanDisk SDLKAE6M200G5CA1 200 GB SSDs, and 2 x STEC S842E400M2 SSDs. The virtual disk is a three-column, two-way mirror volume and is formatted with NTFS using a 64 KB allocation unit size. I made sure to pin the virtual machine’s files to the HDD tier of my Storage Spaces virtual disk so that any acceleration would come from AutoCache rather than from the SSD tier.

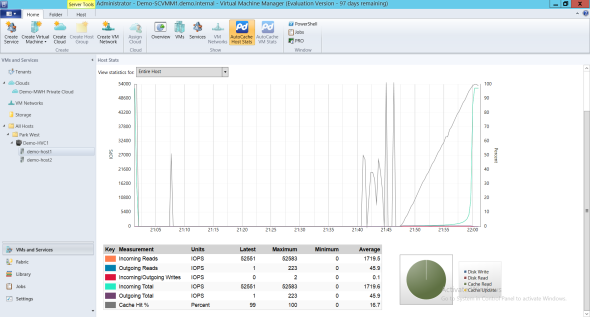

Monitoring AutoCache performance in SCVMM. (Image: Aidan Finn)

The benchmark was executed twice, using the same virtual machine and the same test script (10 passes, each lasting 2 minutes): once with the virtual machine running on Host1, where AutoCache was installed, and once on the unmodified Host2. Live migration moved the virtual machine between the hosts, and its files stayed in their original location on the SOFS.

Proximal Data AutoCache Test Results

When you install a tiny driver from a company you’ve never heard of before, and it takes only a few minutes to get working, you might not expect much. How wrong I was! To be honest, I swore quite loudly when I saw the test results. I was stunned by the difference that AutoCache made to my SQLIO benchmark.

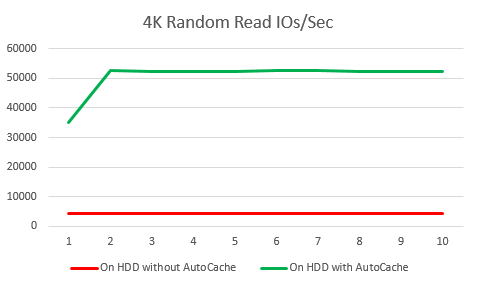

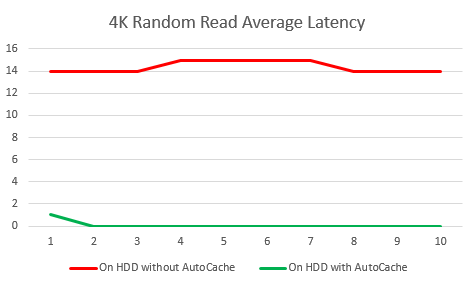

As expected, while the virtual machine was on the unmodified Host2, each of the 10 jobs in the stress test gave similar results with little variation. The SQLIO job averaged 4,143 random 4K read IOs/second with an average latency of 14.4 milliseconds.
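Little's law, which says that the average number of requests in flight equals throughput multiplied by average latency, gives a rough consistency check on the Host2 averages. Here L is the mean number of outstanding I/Os, λ is the read throughput, and W is the mean latency. Applied to the numbers above, it suggests roughly 60 reads were outstanding at any moment; treat this as an inference only, because the SQLIO thread and queue-depth settings aren't given.

```latex
L = \lambda W \approx 4143\ \mathrm{IO/s} \times 0.0144\ \mathrm{s} \approx 59.7
```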

With the virtual machine running on the enhanced Host1, the tests produced near-instant improvements. I monitored the AutoCache metrics in SCVMM to observe how the driver worked. Initially, there was a lot of disk read and cache write activity, with very little cache read activity. The ratios changed quickly during the first job, with a fast increase in cache reads and storage performance. Read IOs/second peaked at 52,638, and SQLIO reported latency as 0 milliseconds.
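One way to picture the warm-up seen in the first job is an idealized model. It assumes uniformly random 4K reads over the 10 GB test file (treated here as 10 GiB, or N = 2,621,440 blocks), a cache large enough to hold every block, a cold start, and no writes. Under those assumptions, after k reads the chance that the next read is a cache hit is 1 - (1 - 1/N)^k, so cache reads start near zero and climb toward 100% as the cache fills. The function names are illustrative, and this is not how AutoCache computes or reports its metrics.

```python
import math

BLOCK_BYTES = 4 * 1024        # 4K reads
FILE_BYTES = 10 * 1024 ** 3   # 10 GB test file, assumed to be 10 GiB
N_BLOCKS = FILE_BYTES // BLOCK_BYTES


def expected_hit_ratio(reads_so_far, n_blocks=N_BLOCKS):
    """Probability that the next uniformly random read hits a cache that started
    empty and can hold every block: 1 - (1 - 1/N)^k."""
    return 1.0 - math.exp(reads_so_far * math.log1p(-1.0 / n_blocks))


def reads_to_reach(hit_ratio, n_blocks=N_BLOCKS):
    """Smallest number of reads after which the expected hit ratio >= hit_ratio."""
    return math.ceil(math.log1p(-hit_ratio) / math.log1p(-1.0 / n_blocks))


for target in (0.5, 0.9, 0.99):
    print(f"{target:.0%} expected hits after ~{reads_to_reach(target):,} reads")
```

Because every hit is served faster than a miss, read throughput rises as the hit ratio rises, which shortens the time needed to reach each subsequent level; the model counts reads, not seconds, so it does not predict timings.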

SQLIO read IO/sec metrics from 10 jobs with and without AutoCache. (Image: Aidan Finn)

The increase in read IOPS is incredible, but I think database administrators will be just as impressed, if not more so, by the drastic reduction in latency, which could improve everyday query performance.
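A few lines of arithmetic put the headline numbers in context. This sketch assumes "4K" means 4,096-byte reads and uses decimal megabytes to match SSD spec sheets. Note that it compares the AutoCache peak with the Host2 average, so the ratio is a best-case figure rather than an average-to-average comparison.

```python
BLOCK_BYTES = 4096          # "4K" random reads, assumed to be 4,096 bytes
HOST2_AVG_IOPS = 4143       # unmodified Host2, average over 10 jobs
HOST1_PEAK_IOPS = 52638     # Host1 with AutoCache, peak
SSD_RATED_READ_MB_S = 554   # Toshiba Q Series Pro rated read speed


def throughput_mb_s(iops, block_bytes=BLOCK_BYTES):
    """Convert IOPS at a fixed block size into decimal megabytes per second."""
    return iops * block_bytes / 1_000_000


speedup = HOST1_PEAK_IOPS / HOST2_AVG_IOPS
print(f"Peak vs. uncached average: {speedup:.1f}x")
print(f"Host2 average: {throughput_mb_s(HOST2_AVG_IOPS):.1f} MB/s")
print(f"Host1 peak:    {throughput_mb_s(HOST1_PEAK_IOPS):.1f} MB/s "
      f"(SSD rated read: {SSD_RATED_READ_MB_S} MB/s)")
```

At roughly 216 MB/s, even the peak 4K random-read rate is well under the SSD's rated 554 MB/s read figure, which is most likely a sequential, large-block number, so the two aren't directly comparable.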

SQLIO latency metrics with and without AutoCache. (Image: Aidan Finn)

I have been blown away by what Proximal Data AutoCache did in my rather simplistic tests. I’m left wondering what I could do with two SSDs as cache drives in a host. What happens if my VMs’ files aren’t pinned to the HDD tier, or sit on all-SSD storage? What if I could use an SSD mounted in a DIMM slot? Even with my basic configuration, I’ve demonstrated what AutoCache can do in Hyper-V, and probably in vSphere as well.

I’ll qualify my findings by reiterating that I do not have a production workload. Obviously, my cache was bigger than my total data, which is very unrealistic. But if you size your cache for your working set, the data that is actively being read, then I think AutoCache could be a game changer for any kind of remote storage.
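Sizing a cache for the working set means estimating how much distinct data is actually read over a representative period. Here is a minimal sketch, assuming you can obtain a trace of read offsets (in bytes) for a virtual disk from some monitoring tool. The trace source, the 4 KB block granularity, and the 20% headroom factor are hypothetical choices, not Proximal Data recommendations.

```python
import math


def working_set_bytes(read_offsets, block_bytes=4096):
    """Bytes of distinct blocks touched by a trace of read offsets."""
    distinct_blocks = {offset // block_bytes for offset in read_offsets}
    return len(distinct_blocks) * block_bytes


def suggested_cache_gb(working_set, headroom=1.2):
    """Working set plus a hypothetical safety margin, rounded up to whole GB."""
    return math.ceil(working_set * headroom / 1_000_000_000)


# Example: three reads land in block 0, two in block 1, one in block 2.
trace = [0, 512, 4095, 4096, 8000, 8192]
print(working_set_bytes(trace))  # 12288
```

Repeated reads of the same block count only once, which is why a workload can issue far more reads than its working set would suggest and still fit comfortably in a modest cache.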