How Would Microsoft Design a Scale-Out File Server: Disks, Networking, and More

In part one of this series, I discussed how Microsoft (or you) could use SANs or Storage Spaces with JBODs to implement a Scale-Out File Server (SOFS). In this second part, I will look at how disks, networking, servers, and the concept of Storage Bricks factor into the decision-making process of designing a SOFS.

Scale-Out File Servers and Disks

When Storage Spaces was released with Windows Server 2012, all I ever heard from people I briefed was “Does it support tiered storage?” It didn’t then, but Windows Server 2012 R2 does now. Be aware that the SSDs used for the fast tier are not the kind you put in a PC or laptop, not even the “enterprise” kind. A SOFS requires dual-channel SAS disks, and SSDs with that interface don’t cost hundreds of dollars; they cost thousands of dollars, just like the ones in a SAN do.

In the small/medium enterprise space, I don’t expect to see many tiered Storage Spaces implementations. Instead, I expect to see lots of two-way mirroring with 10K or 15K HDD traditional spinning disks. Companies of this size rarely need the peak performance offered by SSDs.

Where tiered storage really can be of benefit is when you need lots of capacity. Consider this: you can put in 200 x 1 TB 10K drives at a medium price, or you can put in 50 x 4 TB 7.2K drives for the same 200 TB of raw capacity. Those 50 slower drives will be a lot cheaper to purchase and power. What if we supplemented those 50 drives with 8 x SSDs? The heat map optimization process of Storage Spaces would promote the hot blocks to the SSD tier, and the Write-Back Cache would absorb brief spikes in write activity. That’s what Microsoft envisioned with tiered Storage Spaces: to mix the best of big, slow, and economical with small, fast, and expensive.
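To make the capacity trade-off concrete, here is a minimal sketch that compares the two layouts from the paragraph above. It assumes two-way mirroring for both layouts and deliberately leaves out the SSD capacity and all prices, because the text gives neither; the `DrivePool` class is a hypothetical helper, not part of any Microsoft tool.

```python
from dataclasses import dataclass


@dataclass
class DrivePool:
    """One candidate disk layout; counts come from the example in the text."""
    name: str
    hdd_count: int
    hdd_tb: float
    ssd_count: int = 0  # SSD size is not stated in the text, so it is not counted as capacity

    def raw_hdd_tb(self) -> float:
        """Raw HDD capacity in TB, before any resiliency overhead."""
        return self.hdd_count * self.hdd_tb

    def usable_hdd_tb(self, copies: int = 2) -> float:
        """Usable HDD capacity when every block is stored `copies` times (2 = two-way mirror)."""
        return self.raw_hdd_tb() / copies

    def drive_slots(self) -> int:
        """Total drive bays consumed in the JBODs (HDDs plus SSDs)."""
        return self.hdd_count + self.ssd_count


fast = DrivePool("200 x 1 TB 10K", hdd_count=200, hdd_tb=1.0)
tiered = DrivePool("50 x 4 TB 7.2K + 8 SSD", hdd_count=50, hdd_tb=4.0, ssd_count=8)

for pool in (fast, tiered):
    print(f"{pool.name}: {pool.raw_hdd_tb():.0f} TB raw, "
          f"{pool.usable_hdd_tb():.0f} TB mirrored, {pool.drive_slots()} slots")
```

Both layouts print 200 TB raw and 100 TB mirrored, but the tiered design fills 58 drive bays instead of 200, which is where the purchase and power savings come from.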

Some JBOD manufacturers will sell you disks and test them for you. Even so, not all disks are made equal, and things happen during shipping, so test the disks yourself before you implement Storage Spaces. It’s also a good idea to check the firmware level on the disks. Firmware can affect performance, especially with Windows Server MPIO, where some of us have found the Round Robin (RR) load-balancing policy to perform poorly compared to Failover Only (FOO).
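The two checks above (test every disk, and confirm the firmware level) are easy to automate once you have an inventory. The sketch below assumes a hypothetical list of `(serial, firmware, measured MB/s)` records for a single disk model, exported from whatever vendor or benchmarking tool you use; the serials, firmware strings, throughput numbers, and the 0.8 tolerance are all made up for illustration.

```python
from collections import Counter
from statistics import median

# Hypothetical records for one disk model: (serial, firmware revision, measured MB/s).
# Replace with data exported from your own tooling; these values are invented.
disks = [
    ("SN001", "A3", 182.0),
    ("SN002", "A3", 179.5),
    ("SN003", "A1", 181.0),
    ("SN004", "A3", 121.0),
    ("SN005", "A3", 180.2),
]


def firmware_outliers(records):
    """Serials whose firmware differs from the most common revision in the batch."""
    common_fw, _ = Counter(fw for _, fw, _ in records).most_common(1)[0]
    return [serial for serial, fw, _ in records if fw != common_fw]


def slow_disks(records, tolerance=0.8):
    """Serials whose throughput is below `tolerance` x the batch median (hypothetical threshold)."""
    threshold = tolerance * median(mbps for _, _, mbps in records)
    return [serial for serial, _, mbps in records if mbps < threshold]


print("Firmware mismatch:", firmware_outliers(disks))
print("Under-performing:", slow_disks(disks))
```

Comparing each disk against its own batch, rather than a fixed spec sheet figure, catches both a disk that arrived damaged and one that shipped with a different firmware revision from the rest.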

Networking

Although you can run SMB 3.0 over 1 GbE networking, I never see Microsoft talk about it. They don’t appear to consider this speed of networking for their data center storage protocol when blogging, writing, or presenting. The minimum you will normally see is plain (non-RDMA) 10 GbE networking. Most real-world implementations (see the Build team whitepaper above) run at this speed.
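A little arithmetic shows why 1 GbE rarely features in these designs. This sketch computes theoretical line-rate ceilings only; it ignores Ethernet, TCP/IP, and SMB overhead, and the two-link case assumes SMB Multichannel spreads traffic evenly across both NICs. The 100 GB transfer is just an illustrative size.

```python
def line_rate_mb_s(gbps: float, nics: int = 1) -> float:
    """Theoretical ceiling in MB/s (decimal units) across `nics` links.

    Ignores Ethernet, TCP/IP and SMB overhead, so real throughput is lower.
    """
    return gbps * 1000 / 8 * nics


def transfer_seconds(size_gb: float, gbps: float, nics: int = 1) -> float:
    """Best-case time to move `size_gb` GB (decimal) at line rate."""
    return size_gb * 1000 / line_rate_mb_s(gbps, nics)


for label, gbps, nics in [("1 x 1 GbE", 1, 1), ("1 x 10 GbE", 10, 1), ("2 x 10 GbE", 10, 2)]:
    print(f"{label}: {line_rate_mb_s(gbps, nics):.0f} MB/s, "
          f"100 GB in {transfer_seconds(100, gbps, nics):.0f} s")
```

A single 1 GbE link tops out around 125 MB/s, which a handful of spinning disks can already saturate, while a pair of 10 GbE links raises the ceiling to roughly 2,500 MB/s.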

A SOFS with lots of connecting Hyper-V hosts will place pressure on the CPUs of the SOFS nodes. Don’t forget that SMB 3.0 networking can also consume precious CPU on the hosts themselves! Remote Direct Memory Access (RDMA) offloads most of that processing, relieving the CPU pressure that SMB 3.0 storage networking would otherwise cause. There are three options:

- iWARP: This is a 10 GbE option with RJ45 and SFP+ switch/NIC support. It’s just like implementing 10 GbE networking, and it’s very straightforward.

- RDMA over Converged Ethernet (RoCE): This one is very complex, as has been documented by Didier Van Hoye over the months.

- InfiniBand: Operating at speeds of up to 56 Gbps (with 100 Gbps on the way), this is the one you see in keynote presentations and, in the real world, only at rarefied altitudes.

Chelsio is the only brand still making iWARP NICs. Mellanox makes NICs for RoCE and InfiniBand, and its chipsets appear in various server brands as add-on cards. I expect that people implementing RDMA will go for either iWARP or InfiniBand. RoCE is not an option for those who want a simple, or even a merely difficult, way to go, and my impression is that InfiniBand is not much more expensive than RoCE. In the presentations that I have attended, the networking is always plain 10 GbE, iWARP, or InfiniBand.
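The recommendation above can be captured as a small decision table. This is a sketch under stated assumptions: the speeds are the ones given in the list above (no figure is given for RoCE), and the complexity ranks are a rough encoding of the opinions in this post, with InfiniBand’s rank of 2 being an assumption since its complexity isn’t discussed. `RdmaOption` and `pick_rdma` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class RdmaOption:
    name: str
    max_gbps: Optional[int]  # None where the text gives no figure
    complexity: int          # 1 = simplest; an opinion-based rank, not a benchmark


OPTIONS = [
    RdmaOption("iWARP", max_gbps=10, complexity=1),
    RdmaOption("InfiniBand", max_gbps=56, complexity=2),  # rank is an assumption
    RdmaOption("RoCE", max_gbps=None, complexity=3),      # "very complex" per the text
]


def pick_rdma(required_gbps: int, max_complexity: int = 2) -> Optional[str]:
    """Simplest option meeting the per-port speed, skipping anything too complex."""
    candidates = [
        o for o in OPTIONS
        if o.complexity <= max_complexity
        and o.max_gbps is not None
        and o.max_gbps >= required_gbps
    ]
    if not candidates:
        return None
    return min(candidates, key=lambda o: o.complexity).name


print(pick_rdma(10))   # iWARP
print(pick_rdma(40))   # InfiniBand
print(pick_rdma(100))  # None: 100 Gbps is still "on the way"
```

Filtering on complexity before speed mirrors the advice: start from the simplest RDMA option and only step up when the bandwidth requirement forces it.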

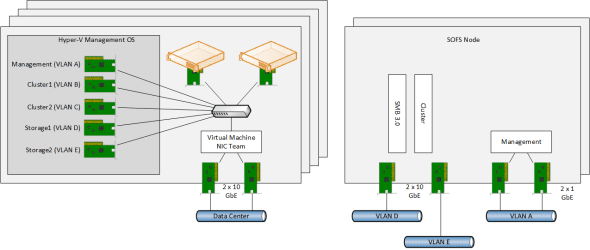

As for the server networking, that’s a great big old “it depends.” I have seen two designs in Microsoft presentations. The first is one that I blogged about on my own site in June of last year. In that design, a pair of rNICs (NICs capable of RDMA) is placed into each SOFS cluster node and Hyper-V host. Some converged networking is used to merge cluster and SMB 3.0 networking on those NICs. Management is done on the physical NICs.
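One way to keep this first design honest across many nodes is to describe each node’s NICs as data and check them against the rules of the design. The sketch below is hypothetical: the NIC names, subnets, and role labels are invented, and the rule that each SMB NIC sits on its own subnet is an assumption based on common practice for SMB Multichannel in a failover cluster, not something stated above.

```python
from dataclasses import dataclass, field


@dataclass
class Nic:
    name: str
    rdma: bool
    subnet: str
    roles: set = field(default_factory=set)  # e.g. {"smb", "cluster"} or {"management"}


def check_node(nics, require_rdma=True):
    """Return a list of problems with one node's NICs under the two-rNIC design."""
    problems = []
    storage = [n for n in nics if "smb" in n.roles]
    if len(storage) != 2:
        problems.append(f"expected 2 SMB NICs, found {len(storage)}")
    if require_rdma:
        problems += [f"{n.name} is not RDMA-capable" for n in storage if not n.rdma]
    if len({n.subnet for n in storage}) != len(storage):
        problems.append("SMB NICs share a subnet")  # assumption: one subnet per SMB NIC
    problems += [f"{n.name} mixes management with SMB" for n in storage if "management" in n.roles]
    return problems


good = [
    Nic("SMB1", True, "10.0.1.0/24", {"smb", "cluster"}),
    Nic("SMB2", True, "10.0.2.0/24", {"smb", "cluster"}),
    Nic("Mgmt", False, "192.168.1.0/24", {"management"}),
]
bad = [
    Nic("SMB1", True, "10.0.1.0/24", {"smb", "cluster"}),
    Nic("SMB2", False, "10.0.1.0/24", {"smb", "cluster", "management"}),
]
print(check_node(good))  # []
print(check_node(bad))
```

Set `require_rdma=False` for the plain 10 GbE variant of the same design.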

A second option, and one I’m not sold on, is to use converged virtual NICs for the SMB 3.0 storage communications. There are a few considerations with this option:

- RDMA is not possible: RDMA cannot be virtualized, so a virtual NIC, as shown in the Hyper-V hosts below (Storage1 and Storage2), cannot offload the pressure from the CPU. That means you have no SMB Direct.

- No vRSS in Management OS Virtual NICs: Receive Side Scaling allows you to get SMB Multichannel across just a single NIC, providing you with the ability to fill a 10 GbE or faster pipe with SMB 3.0 traffic. You cannot do this with a Management OS Virtual NIC in WS2012 R2; vRSS is only available in virtual machines. To be fair, if you are converging NICs, then do you really expect to fill the 10 GbE pipes with storage traffic?

- Round Robin: Note that there is no way to assign Storage1 and Storage2 to different physical NICs in the team. They could end up on the same physical NIC and compete with each other for incoming bandwidth. In theory, using dynamic mode load balancing in WS2012 R2 NIC teaming in the hosts and the SOFS nodes should negate the impact of that risk.
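To get a feel for the Round Robin risk, consider a deliberately simplified model: each storage virtual NIC is placed on one team member independently and uniformly at random. Real NIC teaming uses its own placement algorithm, so this is an illustration of why the risk exists, not a prediction of how often it happens. The function name is hypothetical.

```python
from math import perm


def p_shared_member(vnics: int, members: int) -> float:
    """Probability that at least two vNICs land on the same physical team member.

    Simplifying assumption: independent, uniformly random placement per vNIC.
    """
    if vnics > members:
        return 1.0  # pigeonhole: some member must carry two vNICs
    # 1 - P(all vNICs on distinct members)
    return 1 - perm(members, vnics) / members ** vnics


for vnics, members in [(2, 2), (2, 4), (4, 2)]:
    print(f"{vnics} vNICs on {members} NICs: {p_shared_member(vnics, members):.2f}")
```

Under this model, Storage1 and Storage2 on a two-NIC team share a physical NIC half the time, and adding more storage virtual NICs than there are team members guarantees sharing.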

I have heard it suggested that you could use more than two virtual NICs to get more parallel channels for SMB Multichannel (one channel per virtual NIC). I really hate that idea in the real world because it adds a lot of complexity.

I recommend keeping the network design simple for SMB 3.0 storage and using the design that I blogged about last year, whether you are using RDMA or not with 10 GbE or faster networking.

Server Requirements

Microsoft always tries to remain neutral when it comes to servers. My suggestion is to always use 2U workhorse servers, because they are easy to maintain and expand. Note that you might need many expansion cards:

- 10 GbE, iWARP, or InfiniBand NICs

- One or more dual port or quad port SAS adapters

Make sure that the server’s expansion slots meet the speed requirements of the cards.
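A quick way to sanity-check a slot is to compare its bandwidth with the card’s combined port bandwidth. This sketch uses the standard PCIe 2.0 and 3.0 signaling rates and encodings, and assumes a dual-port 6 Gb/s SAS adapter with x4 wide ports; it ignores PCIe packet overhead, so real headroom is smaller than shown. The helper names are hypothetical.

```python
# Approximate usable bandwidth per PCIe lane, per direction, in MB/s.
PCIE_LANE_MB_S = {
    2: 5000 * 8 / 10 / 8,     # 5 GT/s with 8b/10b encoding = 500 MB/s
    3: 8000 * 128 / 130 / 8,  # 8 GT/s with 128b/130b encoding, about 985 MB/s
}


def slot_mb_s(gen: int, lanes: int) -> float:
    """Ceiling for a slot, ignoring PCIe packet overhead (real figures are lower)."""
    return PCIE_LANE_MB_S[gen] * lanes


def ethernet_card_mb_s(ports: int, gbps: int) -> float:
    """Line rate of all ports combined, one direction."""
    return ports * gbps * 1000 / 8


def sas_card_mb_s(ports: int, lanes_per_port: int = 4, gbps: int = 6) -> int:
    """SAS uses 8b/10b encoding, so each 6 Gb/s lane carries about 600 MB/s."""
    return ports * lanes_per_port * gbps * 100


def fits(card_mb_s: float, gen: int, lanes: int) -> bool:
    """True if the slot can carry the card's full bandwidth."""
    return slot_mb_s(gen, lanes) >= card_mb_s


cards = {
    "dual-port 10 GbE": ethernet_card_mb_s(2, 10),
    "dual-port 6 Gb/s SAS (x4 wide ports)": sas_card_mb_s(2),
}
for name, need in cards.items():
    for gen in (2, 3):
        verdict = "OK" if fits(need, gen, 8) else "bottleneck"
        print(f"{name} ({need:.0f} MB/s) in PCIe {gen}.0 x8 "
              f"({slot_mb_s(gen, 8):.0f} MB/s): {verdict}")
```

In this example the dual-port 10 GbE NIC fits comfortably in a PCIe 2.0 x8 slot, but the SAS adapter needs PCIe 3.0 x8 to run all of its lanes at full speed.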

Regarding memory, note that you cannot use CSV Cache with tiered Storage Spaces, so there’s no point in loading the SOFS nodes with RAM if you are going to mix SSDs with HDDs. On the other hand, if you are using just a single tier, then add some RAM to improve the read performance of the SOFS’s CSVs with CSV Cache.
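The memory rule above can be written as a tiny planning function. This is a sketch: it assumes the Windows Server 2012 R2 behavior of sizing CSV Cache in MB with an upper limit of 80% of physical RAM (verify this for your version), and the default `fraction` of 0.25 is a hypothetical planning value, not a Microsoft recommendation.

```python
MAX_CACHE_FRACTION = 0.8  # assumed WS2012 R2 limit, as a fraction of physical RAM


def csv_cache_mb(node_ram_gb: int, tiered: bool, fraction: float = 0.25) -> int:
    """Suggested CSV Cache size in MB for one SOFS node.

    Tiered Storage Spaces cannot use CSV Cache, so the answer is 0 for them.
    `fraction` is a hypothetical planning value; it is capped at the assumed limit.
    """
    if tiered:
        return 0
    return int(node_ram_gb * 1024 * min(fraction, MAX_CACHE_FRACTION))


print(csv_cache_mb(64, tiered=True))                # 0
print(csv_cache_mb(64, tiered=False))               # 16384
print(csv_cache_mb(64, tiered=False, fraction=0.9)) # capped at 80%: 52428
```

Returning zero for tiered pools makes the trade-off explicit: RAM bought for CSV Cache only pays off on single-tier Storage Spaces.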

Storage Bricks

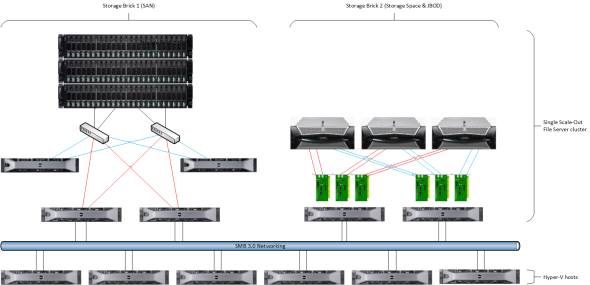

It is possible to build a single SOFS cluster that contains isolated storage footprints. For example, we could build a SOFS cluster using an existing SAN and some servers. Then we could add some more servers, but instead of connecting them to the SAN, we would add some JBOD trays. It would be a single cluster, a single active/active (actually, active/active/active/active) File Server for Application Data role or namespace, but with CSVs and file shares that do not span storage systems. The diagram depicts how we could implement such a design with two of these “storage bricks.”

Remember that in this design, the SOFS cluster is still restricted to eight nodes (a support limitation by Microsoft). That implies that you could have 4 x 2-node storage bricks or 2 x 4-node storage bricks in a single cluster.
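The layouts mentioned above follow directly from the eight-node limit. This sketch enumerates equal-sized brick layouts and adds a node-count check for a proposed mix. It assumes a brick needs at least two nodes so its storage stays online if one node fails; that minimum, and the helper names, are assumptions rather than statements from Microsoft.

```python
MAX_SOFS_NODES = 8  # Microsoft support limit for a SOFS cluster, per the text


def equal_brick_layouts(max_nodes: int = MAX_SOFS_NODES, min_brick: int = 2,
                        min_bricks: int = 2):
    """(brick count, nodes per brick) pairs that use every node in equal-sized bricks."""
    return [
        (max_nodes // size, size)
        for size in range(min_brick, max_nodes + 1)
        if max_nodes % size == 0 and max_nodes // size >= min_bricks
    ]


def fits_cluster(brick_sizes, max_nodes: int = MAX_SOFS_NODES, min_brick: int = 2) -> bool:
    """Node-count check only: every brick has at least `min_brick` nodes and the total fits."""
    return all(s >= min_brick for s in brick_sizes) and sum(brick_sizes) <= max_nodes


print(equal_brick_layouts())    # [(4, 2), (2, 4)]
print(fits_cluster([2, 2, 4]))  # True
print(fits_cluster([4, 4, 2]))  # False: 10 nodes
```

The mixed `[2, 2, 4]` case only passes the node-count check; it is not a layout this post recommends.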