Over the past few weeks I have documented the various elements used to make up converged networks in Windows Server 2012 (WS2012) and Windows Server 2012 R2 (WS2012 R2). In this article I will give you some guidance on designing converged networks: how to design your servers and hosts to use this networking concept, how to make use of higher-bandwidth networking at a lower cost, and how to build deployments that are more flexible and easier to configure. There is no single correct design, because correctness is relative to your requirements. However, there are incorrect designs in which certain features will not work, and some features are incompatible with others.

Designing Converged Networks: The Process

You must do some homework before you can design converged networks, starting with a number of questions that you should ask yourself.

What Will Be My Physical Storage?

In Hyper-V or Windows Server, you have a number of options for physical storage. The following storage connection methods are not candidates for convergence because they are not based on TCP/IP:

- Direct Attached Storage

- Fibre Channel (FC)

- SAS

Fibre Channel over Ethernet (FCoE) is sometimes used in blade servers to converge Fibre Channel storage connectivity with network connections. However, this relies on expensive, specialized hardware, and Windows Server cannot replicate it.

If you plan to use iSCSI or SMB 3.0 as a storage connection protocol, then you can converge these networks with the other networks of your server or host. Very often, the storage network is one of the first to adopt more capable network adapters. If you plan on using 10 Gbps or faster networking for storage, then you have the opportunity to make that bandwidth available to other networks, under the control of QoS, of course. Similarly, if you are going to use RDMA (SMB Direct) for SMB 3.0 storage, then you might also want to use SMB Direct for live migration (an option added in WS2012 R2), offloading the processing of live migration from the host's processors.
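
To put numbers on "under the control of QoS", here is a minimal Python sketch of weight-based minimum bandwidth on a converged 10 Gbps link. It assumes each weight acts as a relative share of the link when the link is congested (roughly the idea behind Hyper-V's weight-based minimum bandwidth mode); the network names and weight values are hypothetical, and the code models only the arithmetic, not any Windows API.

```python
# Minimal sketch: relative QoS weights -> guaranteed floors on a shared link.
# Network names and weight values are hypothetical, not recommendations.


def minimum_shares(link_gbps: float, weights: dict[str, int]) -> dict[str, float]:
    """Return each network's guaranteed minimum bandwidth (Gbps) under contention.

    Assumption: each weight is a relative share, so a network's floor is
    weight / sum(weights) * link capacity. When the link is not contended,
    any network may burst above its floor.
    """
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    return {name: link_gbps * weight / total for name, weight in weights.items()}


# Hypothetical weights for a host converging everything onto 10 Gbps.
example_weights = {"storage": 40, "live_migration": 30, "cluster": 10,
                   "management": 10, "virtual_machines": 10}
for name, gbps in minimum_shares(10.0, example_weights).items():
    print(f"{name}: at least {gbps:.1f} Gbps")
```

The point of weights rather than hard caps is that idle bandwidth is not wasted: storage is guaranteed 4 Gbps here, but can use far more when the other networks are quiet.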

Define the Networks Required by the Server or Host

It’s hard to converge networks if you don’t know which networks you want to converge, or even which ones you can. In the case of a clustered Hyper-V host, you might have the following networks:

- Management: For host configuration management, remote desktop, and monitoring.

- Virtual machines: Allowing VMs to communicate via the physical network.

- Cluster: Used by the cluster heartbeat for host downtime detection, and redirected IO for metadata operations on Cluster Shared Volumes (CSVs).

- Live migration: This is the network identified for copying and synchronizing virtual machines between hosts. This network is also a secondary network for cluster communications.

- Backup: Recommended in larger environments, this will be the network used for backup and restore operations.

In the case of a server participating in a Scale-Out File Server (SOFS) cluster, you would expect to see three networks: management, cluster 1, and cluster 2. There are two cluster networks to provide fault tolerance for the critical heartbeat operation between cluster nodes.

What Bandwidth Capacities Are Required?

Every network will have a minimum bandwidth requirement. Identify those requirements and calculate your totals. This will indicate how much bandwidth you need to service those needs. If you require NIC teaming (which you will more often than not) then you need to double that capacity to allow for failure of NICs or switches.
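
The sizing arithmetic in this step fits in a few lines of Python. This is a minimal sketch, assuming the team is split evenly across two failure domains (NICs or switches) so that half of it must still carry the full load; the network names and 1 Gbps figures are hypothetical inputs.

```python
# Minimal sketch of the sizing arithmetic; the requirements are hypothetical.


def required_capacity(min_gbps: dict[str, float], teamed: bool = True) -> tuple[float, float]:
    """Return (total minimum, capacity to provision) in Gbps.

    Assumption: a team is split evenly across two failure domains, so half
    of it must still carry the total minimum. That means provisioning
    twice the minimum.
    """
    minimum = sum(min_gbps.values())
    provision = minimum * 2 if teamed else minimum
    return minimum, provision


# Four hypothetical networks that each need 1 Gbps.
needs = {"management": 1.0, "virtual_machines": 1.0, "cluster": 1.0, "live_migration": 1.0}
minimum, provision = required_capacity(needs)
print(f"minimum {minimum:g} Gbps, provision {provision:g} Gbps")
```

This prints `minimum 4 Gbps, provision 8 Gbps`.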

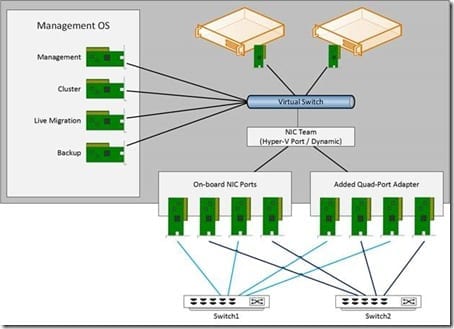

For example, if you require four * 1 Gbps networks in a Hyper-V cluster then you should ensure that you always have a minimum of 4 Gbps of bandwidth available in the converged solution. One possible way to do that is to create a NIC team with eight * 1 GbE NICs, as shown below. In this example, the four on-board NICs (commonly supplied by manufacturers in rack servers) are joined by a quad-port NIC card that is inserted into one of the expansion slots. This gives two sets of 4 Gbps NICs that are connected to two different access or top-of-rack (TOR) switches in alternating order.

Converged Hyper-V host using 1 GbE NICs.

With this design you will have a total of 8 Gbps of potential bandwidth, and you will drop to the minimum required 4 Gbps if one of the following happens:

- A network adapter, such as the expansion card, fails

- There is a TOR switch failure
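
One way to check a design like this is to model the ports and knock out one failure domain at a time. The Python below is a minimal sketch: the port names, the exact alternating cabling, and treating all four on-board NICs as a single failure unit are assumptions made for illustration.

```python
# Minimal sketch: which single failures the eight-NIC team survives.
# Port names and the exact alternating cabling are assumptions for illustration.
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Port:
    name: str
    adapter: str       # "onboard" or "expansion" (the quad-port card)
    switch: str        # "TOR-A" or "TOR-B"
    gbps: float = 1.0


def build_team() -> list[Port]:
    """Four on-board and four expansion-card ports, cabled alternately."""
    ports = []
    for adapter in ("onboard", "expansion"):
        for i in range(4):
            switch = "TOR-A" if i % 2 == 0 else "TOR-B"
            ports.append(Port(f"{adapter}-{i}", adapter, switch))
    return ports


def surviving_gbps(ports: list[Port], failed_adapter: Optional[str] = None,
                   failed_switch: Optional[str] = None) -> float:
    """Total bandwidth of ports on neither the failed adapter nor the failed switch."""
    return sum(p.gbps for p in ports
               if p.adapter != failed_adapter and p.switch != failed_switch)


team = build_team()
print("healthy:", surviving_gbps(team))
for adapter in ("onboard", "expansion"):
    print(f"{adapter} adapter failed:", surviving_gbps(team, failed_adapter=adapter))
for switch in ("TOR-A", "TOR-B"):
    print(f"{switch} failed:", surviving_gbps(team, failed_switch=switch))
```

The healthy team reports 8.0, and every single-failure case reports 4.0, which matches the required minimum. The model only checks one failure at a time; a simultaneous card and switch failure would leave less.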

Bear in mind that with Hyper-V Port NIC teams, a single virtual NIC, including those in the management OS, is limited to the bandwidth of one physical NIC. This means that the live migration network in the above design is limited to 1 Gbps at most. An alternative is the more expensive 10 Gbps option (a NIC team of two adapters), which offers more burst capacity and uses fewer switch ports. In larger environments, this cost could be offset by reduced administrative effort, less network infrastructure, and lower power consumption.
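
The trade-off between the two designs can be summarized with a small model. This Python is a minimal sketch, assuming every NIC in a team runs at the same speed and ignoring protocol overhead; the only rule it encodes is the one stated above, that in Hyper-V Port mode a single vNIC is bound to one team member.

```python
# Minimal sketch: Hyper-V Port mode binds each vNIC to one team member,
# so one vNIC's ceiling is a single physical NIC's speed.
from typing import NamedTuple


class TeamLimits(NamedTuple):
    aggregate_gbps: float   # shared by all vNICs on the team
    per_vnic_gbps: float    # most that any single vNIC (e.g. live migration) can get
    switch_ports: int       # one switch port per team member


def hyperv_port_limits(nic_count: int, nic_gbps: float) -> TeamLimits:
    return TeamLimits(nic_count * nic_gbps, nic_gbps, nic_count)


for label, count, speed in (("8 x 1 GbE", 8, 1.0), ("2 x 10 GbE", 2, 10.0)):
    limits = hyperv_port_limits(count, speed)
    print(f"{label}: aggregate {limits.aggregate_gbps:g} Gbps, "
          f"single vNIC max {limits.per_vnic_gbps:g} Gbps, "
          f"{limits.switch_ports} switch ports")
```

The 8 x 1 GbE team has more ports than the 2 x 10 GbE team but a single vNIC tops out at 1 Gbps, while the 10 GbE team gives one vNIC up to 10 Gbps of burst capacity using only two switch ports.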

What Hardware Optimizations Are Required?

Here are some of the hardware optimizations that you might consider and what impact they will have:

- Single-Root IO Virtualization (SR-IOV): If you want to use SR-IOV for your virtual machine networking, then you cannot use the NICs that will provide the physical functions (PFs) for convergence. These NICs must be dedicated to virtual machine connectivity.

- Remote Direct Memory Access (RDMA): You cannot pass RDMA (SMB Direct) traffic through the virtual switch. You cannot team NICs if you plan on using RDMA.

- Receive Side Scaling (RSS) and Dynamic Virtual Machine Queue (dVMQ): These two features cannot be used on the same NICs. If you want to use RSS for the physical networking of a host (SMB 3.0 for storage, live migration, and CSV redirected IO), then you need NICs that are not connected to a virtual switch. Note that virtual RSS (added in WS2012 R2) only works for virtual machines whose virtual switch is connected to NICs with dVMQ enabled.

Keep in mind that SMB multichannel does not require NIC teaming; in fact, if you are going to use a Scale-Out File Server (SOFS) for storage, then each NIC involved in SMB 3.0 storage must be on a different subnet/VLAN.
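
The rules in this section are mechanical enough to encode as checks against a proposed design. The Python below is a minimal sketch: the `NicPlan` fields are hypothetical labels for design decisions, not Windows Server settings, and the checks mirror only the WS2012/WS2012 R2 constraints listed above.

```python
# Minimal sketch: check a proposed host design against the constraints above.
# NicPlan fields are hypothetical design labels, not real Windows settings.
from dataclasses import dataclass
from typing import Optional


@dataclass
class NicPlan:
    name: str
    teamed: bool = False
    virtual_switch: bool = False      # NIC (or its team) connects a virtual switch
    converged: bool = False           # also carries management OS traffic
    sriov: bool = False               # provides SR-IOV physical functions to VMs
    rdma: bool = False                # used for SMB Direct
    rss: bool = False
    dvmq: bool = False
    smb_subnet: Optional[str] = None  # subnet/VLAN if used for SMB 3.0 storage


def find_conflicts(nics: list[NicPlan], sofs_storage: bool = False) -> list[str]:
    problems = []
    for n in nics:
        if n.sriov and n.converged:
            problems.append(f"{n.name}: SR-IOV NICs must be dedicated to VM connectivity")
        if n.rdma and (n.teamed or n.virtual_switch):
            problems.append(f"{n.name}: RDMA cannot be teamed or pass through the virtual switch")
        if n.rss and n.dvmq:
            problems.append(f"{n.name}: RSS and dVMQ cannot be used on the same NIC")
        if n.rss and n.virtual_switch:
            problems.append(f"{n.name}: host RSS needs a NIC not connected to a virtual switch")
    if sofs_storage:
        # With a SOFS, each SMB 3.0 storage NIC needs its own subnet/VLAN.
        subnets = [n.smb_subnet for n in nics if n.smb_subnet is not None]
        if len(subnets) != len(set(subnets)):
            problems.append("SMB 3.0 storage NICs must each be on a different subnet/VLAN")
    return problems


# Hypothetical design: a converged team plus two RDMA NICs mistakenly on one subnet.
design = [
    NicPlan("team-member-1", teamed=True, virtual_switch=True, converged=True, dvmq=True),
    NicPlan("team-member-2", teamed=True, virtual_switch=True, converged=True, dvmq=True),
    NicPlan("rdma-1", rdma=True, smb_subnet="10.0.1.0/24"),
    NicPlan("rdma-2", rdma=True, smb_subnet="10.0.1.0/24"),
]
for problem in find_conflicts(design, sofs_storage=True):
    print(problem)
```

Only the subnet rule fires for this design; moving `rdma-2` to a second subnet clears it.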

Start Designing Hosts

If you are new to the process, there are two ways to start designing hosts. Both begin with a blank sheet of paper or a whiteboard.

Method 1: Draw out the networking of a host as if convergence were not possible. Use the above process to identify the requirements of each network. Identify the compatible networks. Take out another sheet of paper and redraw the host, starting with the NIC teams (where applicable) and working your way up the stack.
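
The "identify the compatible networks" step of Method 1 amounts to grouping networks by the hardware feature they depend on, because networks that need incompatible features cannot share NICs. This Python is a minimal sketch; the network names, the feature tags, and the placement labels are hypothetical inputs derived loosely from the constraints above, not a prescribed design.

```python
# Minimal sketch of Method 1's grouping step. Names, feature tags, and
# placement labels are hypothetical, derived loosely from the constraints above.
from collections import defaultdict
from typing import Optional

# Networks with no special hardware need can converge on a teamed virtual switch.
PLACEMENT: dict[Optional[str], str] = {
    None: "converged team + virtual switch (dVMQ)",
    "rdma": "dedicated non-teamed RDMA NICs",
    "rss": "NICs not connected to a virtual switch (RSS)",
    "sriov": "dedicated SR-IOV NICs for VMs",
}


def group_networks(needs: dict[str, Optional[str]]) -> dict[str, list[str]]:
    """Map each placement to the networks that must land there."""
    groups: dict[str, list[str]] = defaultdict(list)
    for network, feature in needs.items():
        groups[PLACEMENT[feature]].append(network)
    return dict(groups)


host = {
    "management": None,
    "cluster": None,
    "backup": None,
    "live_migration": "rdma",
    "smb_storage": "rdma",
    "virtual_machines": "sriov",
}
for placement, networks in group_networks(host).items():
    print(f"{placement}: {', '.join(networks)}")
```

Each resulting group becomes a box on the second drawing: one team per group where teaming is allowed, and individual NICs where it is not.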

Method 2: Start by drawing a completely converged host, possibly using management OS virtual NICs, as you have seen repeatedly in these articles on converged networking. Then you can start to specialize the networks by determining bandwidth and hardware offload requirements, each time creating a new drawing of the host until everything is compatible and meets your needs.

Designing a converged network server or host might seem challenging at first, but after you do this enough times you will eventually get to the point where you automatically jump to a design as soon as you know what the possible NICs and storage will be. Then you will be able to quickly specialize that design for the particular circumstances, without using any paper or whiteboards.