Deploy Active Directory and Certificate Services in Azure Using Infrastructure-as-Code — Part 1

In this three-part series, I’ll discuss how I deployed an Active Directory (AD) forest with two domain controllers, and a member server running certificate services, in Microsoft Azure. In the first part, I’ll walk you through the JSON Azure Resource Manager (ARM) templates used to provision the three virtual machines (VMs) and the required infrastructure. In the second part, Deploy Active Directory and Certificate Services in Azure Using Infrastructure-as-Code — Part 2, I’ll show you how to add a PowerShell Desired State Configuration (DSC) resource to the template to deploy AD Certificate Services (ADCS) on Windows Server. And in the final part, I’ll show you how to use Visual Studio to provision the solution in Azure.

Azure Resource Manager (ARM) is a deployment model that allows organizations to provision Azure resources using JavaScript Object Notation (JSON) files. Microsoft publishes a collection of JSON templates that can be used to provision Azure resources. If you need a primer on using Visual Studio and JSON templates to provision Azure resources, read Microsoft Azure: Use Visual Studio to Deploy a Virtual Machine on Petri.

The solution is based on two JSON templates, which I downloaded from Microsoft’s Quickstart gallery, and on PowerShell Desired State Configuration (DSC). The first template deploys two domain controllers in a new Active Directory forest in a high availability configuration. The second template deploys a virtual machine and joins it to the domain. Finally, I added my own PowerShell DSC code to install Active Directory Certificate Services (ADCS) on the member server.

For a primer on working with JSON templates and Azure resources, see Aidan Finn’s articles on Petri.

If you don’t want to use ready-made templates as the basis for your own project, Azure lets you export the JSON code for resources that are already deployed in a resource group. For more information, see Creating JSON Templates From Azure Resource Groups on Petri.

Import the Project into Visual Studio

Before I describe how the solution works, download the entire project (Petri_ADLAB5.zip) here and import it into Visual Studio (VS). If you don’t have a Visual Studio license, install the Community edition with the Azure SDK.

To import the project template into VS, put it in the My Exported Templates folder in \Documents\Visual Studio 2017\. Then press CTRL+SHIFT+N in VS to open the New Project dialog, expand Installed on the left, and click DeploymentProject. Select the project template and click OK.

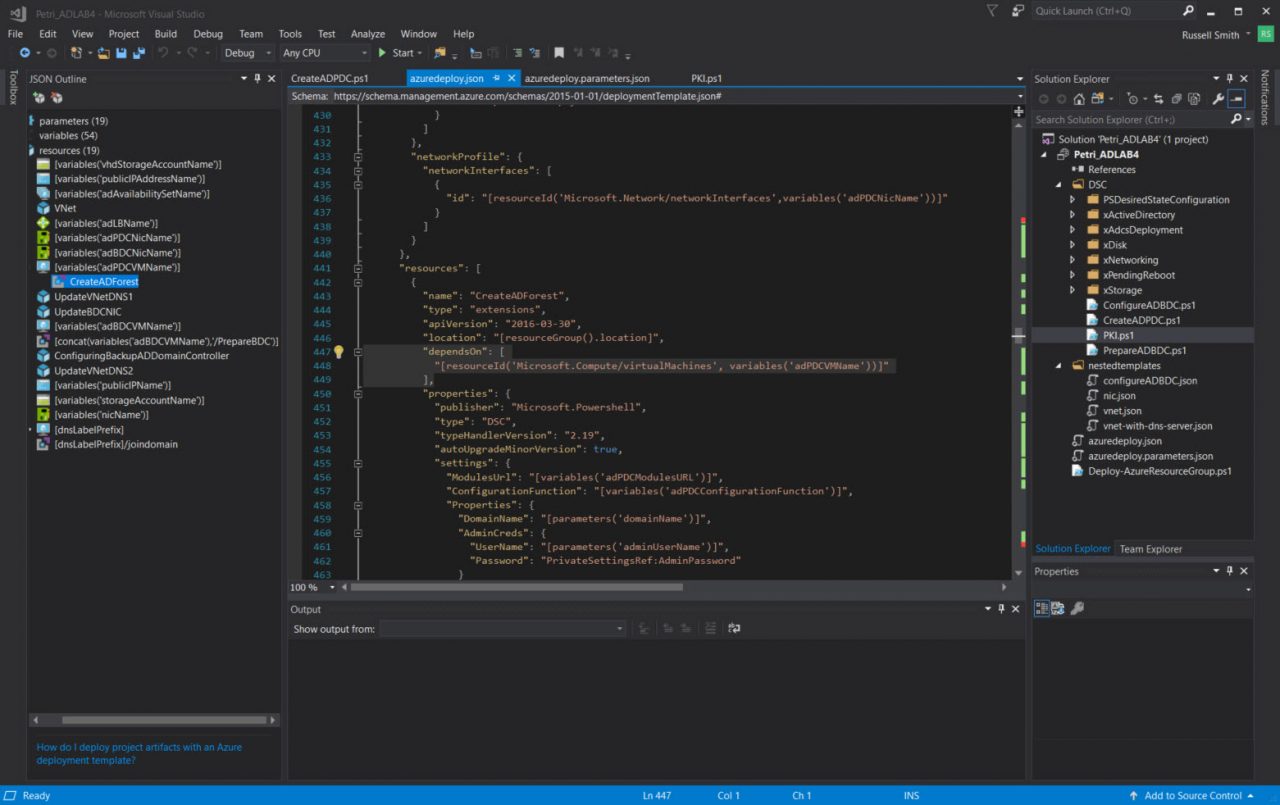

On the right of VS, you will see the Solution Explorer pane; if you can’t see it, press CTRL+ALT+L. It lists all the project files and folders. Double-click azuredeploy.json, which contains the main deployment code, to open it in the central pane. On the left you should see the JSON Outline pane; if you can’t see it, click View > Other Windows > JSON Outline. The JSON Outline pane lets you jump quickly between different parts of the code. Expand Resources to see a list of the Azure resources that the template provisions.

Quickstart Templates and Other Files

The first template I used was Create a New AD Domain with 2 Domain Controllers. It provisions two domain controllers with load-balanced public IP addresses. The second template, Join a VM to an Existing Domain, provisions a third VM and joins it to the domain. Each template also includes a parameters file, azuredeploy.parameters.json, and I combined the two parameters files just as I combined the two azuredeploy.json files. There is one more key file: Deploy-AzureResourceGroup.ps1. Visual Studio generates this PowerShell script automatically; it creates the resource group for the resources you will provision in Azure and sets the artifacts path. You can edit the script if necessary. We’ll learn more about artifacts in the third part of this series.
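Combining the two parameters files is mostly a matter of merging their parameters sections. The Python sketch below shows one way to do that while catching any parameter that both files set to different values. It is not part of the downloadable project, and the parameter names and values in the usage lines are hypothetical.

```python
import json


def merge_parameter_files(*docs: dict) -> dict:
    """Combine the "parameters" sections of several ARM parameters files.

    Raises ValueError if two files give the same parameter different
    values, because silently overwriting one could hide a real conflict.
    """
    merged: dict = {}
    for doc in docs:
        for name, entry in doc.get("parameters", {}).items():
            if name in merged and merged[name] != entry:
                raise ValueError(f"conflicting values for parameter '{name}'")
            merged[name] = entry
    # Wrap the merged parameters in the standard parameters-file envelope.
    return {
        "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentParameters.json#",
        "contentVersion": "1.0.0.0",
        "parameters": merged,
    }


# Hypothetical contents of the two templates' parameters files.
dc_params = {"parameters": {"adminUsername": {"value": "labadmin"},
                            "domainName": {"value": "contoso.local"}}}
vm_params = {"parameters": {"adminUsername": {"value": "labadmin"},
                            "dnsLabelPrefix": {"value": "pki01"}}}

combined = merge_parameter_files(dc_params, vm_params)
print(json.dumps(combined, indent=2))
```

Shared parameters with identical values, such as adminUsername here, are kept once; only genuine conflicts stop the merge.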

Managing Dependencies

In this project, I combined the two templates and added PowerShell DSC code to deploy ADCS. But in retrospect, it might have been better to link the second template to the first. There are already several nested (linked) templates in the project. For more details on using linked templates, see Microsoft Azure: Using Linked ARM Templates on Petri.

When orchestrating the deployment of a complex application, it’s important to note that the order in which code appears in the template doesn’t necessarily reflect the order in which it is executed. The order of execution is vital: the second domain controller can’t be installed until the first has been provisioned, the member server can’t join the domain until at least one domain controller is available, and ADCS can’t be installed until the member server has been joined to the domain.
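One way to picture how these constraints are resolved is as a dependency graph. The minimal Python sketch below uses the standard library’s graphlib module (Python 3.9 or later) to group a simplified, hypothetical set of deployment steps into waves; steps in the same wave don’t depend on each other and could run in parallel. The step names are illustrative labels rather than the template’s actual resource names, and this is not how ARM is implemented internally.

```python
from graphlib import TopologicalSorter

# Simplified, hypothetical dependency graph: each key lists the steps it
# depends on, much like an ARM dependsOn array.
steps = {
    "PDC VM": [],
    "BDC VM": [],
    "Member VM": [],
    "Create forest on PDC": ["PDC VM"],
    "Promote BDC": ["BDC VM", "Create forest on PDC"],
    "Join member to domain": ["Member VM", "Create forest on PDC"],
    "Install ADCS": ["Join member to domain"],
}


def deployment_waves(graph):
    """Group steps into waves; steps in one wave have no dependencies
    on each other, so they could be deployed in parallel."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)
    return waves


for number, wave in enumerate(deployment_waves(steps), start=1):
    print(number, wave)
```

The waves come out the same no matter which order the steps are listed in the dictionary, which mirrors the point that a resource’s position in the template doesn’t determine when it runs.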

The code looks complex because you need to deploy the infrastructure that supports the virtual machines, not just the VMs themselves. For example, a virtual network (VNet), network interfaces (NICs), and a load balancer are required.

Resource Manager uses the dependsOn element to determine the order in which resources are deployed. For example, the second domain controller should be deployed only after the first has been provisioned. In the same way, the code below ensures that the forest isn’t created until the virtual machine that will host the first domain controller has been provisioned.

```json
"dependsOn": [
  "[resourceId('Microsoft.Compute/virtualMachines', variables('adPDCVMName'))]"
],
```
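The resourceId function in the fragment above returns a fully qualified resource ID. To make that format concrete, the Python sketch below mimics the ID that resourceId produces for a resource in a resource group; it is an illustration, not ARM’s implementation. The subscription ID, resource group, and VM names are hypothetical placeholders; in a real deployment, ARM fills in the subscription and resource group of the current deployment.

```python
def resource_id(subscription_id, resource_group, resource_type, *names):
    """Build a resource-group-scoped ID in the same format as ARM's
    resourceId(): one name is needed per type segment after the namespace."""
    namespace, *types = resource_type.split("/")
    if len(types) != len(names):
        raise ValueError("need one name per resource type segment")
    # Interleave type segments and names, e.g. virtualMachines/adBDC/extensions/PrepareBDC
    path = "/".join(f"{t}/{n}" for t, n in zip(types, names))
    return (f"/subscriptions/{subscription_id}/resourceGroups/{resource_group}"
            f"/providers/{namespace}/{path}")


sub = "00000000-0000-0000-0000-000000000000"  # hypothetical subscription ID
print(resource_id(sub, "ADLab-RG", "Microsoft.Compute/virtualMachines", "adPDC"))
print(resource_id(sub, "ADLab-RG", "Microsoft.Compute/virtualMachines/extensions",
                  "adBDC", "PrepareBDC"))
```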

The dependsOn element takes a list of resource names or resource IDs. In the forest’s dependsOn fragment, the resourceId function builds the ID on the fly from the adPDCVMName variable. The two VMs hosting the domain controllers can be provisioned in parallel, but we must wait for the first DC to come online before we can configure Active Directory Domain Services (ADDS) on the second domain controller.

```json
"dependsOn": [
  "[concat('Microsoft.Compute/virtualMachines/',variables('adBDCVMName'),'/extensions/PrepareBDC')]",
  "Microsoft.Resources/deployments/UpdateBDCNIC"
],
```

The code above waits for the PrepareBDC extension to finish on the second domain controller’s VM, and for the network interface card (NIC) attached to the second domain controller to be updated with the IP address information of the first DC. In turn, UpdateBDCNIC waits for UpdateVNetDNS1, which waits for the forest to be installed on the first DC:

```json
"dependsOn": [
  "[concat('Microsoft.Compute/virtualMachines/', variables('adPDCVMName'),'/extensions/CreateADForest')]"
],
```
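Because each dependsOn entry can have dependencies of its own, the wait is transitive. The Python sketch below walks a simplified dependency map to list everything that must finish before the second DC is configured. PrepareBDC, UpdateBDCNIC, UpdateVNetDNS1, and CreateADForest come from the fragments above; ConfigureBDC, adPDCVM, and adBDCVM are hypothetical labels for the BDC configuration step and the two VMs, and the real template contains more dependencies than shown here.

```python
# Simplified dependency map; ConfigureBDC, adPDCVM, and adBDCVM are hypothetical labels.
depends_on = {
    "ConfigureBDC": ["PrepareBDC", "UpdateBDCNIC"],
    "PrepareBDC": ["adBDCVM"],
    "UpdateBDCNIC": ["UpdateVNetDNS1"],
    "UpdateVNetDNS1": ["CreateADForest"],
    "CreateADForest": ["adPDCVM"],
}


def prerequisites(graph, resource):
    """Return every resource that must finish before `resource` can start,
    following dependsOn entries transitively (iterative depth-first walk)."""
    seen = set()
    stack = list(graph.get(resource, []))
    while stack:
        current = stack.pop()
        if current not in seen:
            seen.add(current)
            stack.extend(graph.get(current, []))
    return seen


print(sorted(prerequisites(depends_on, "ConfigureBDC")))
```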

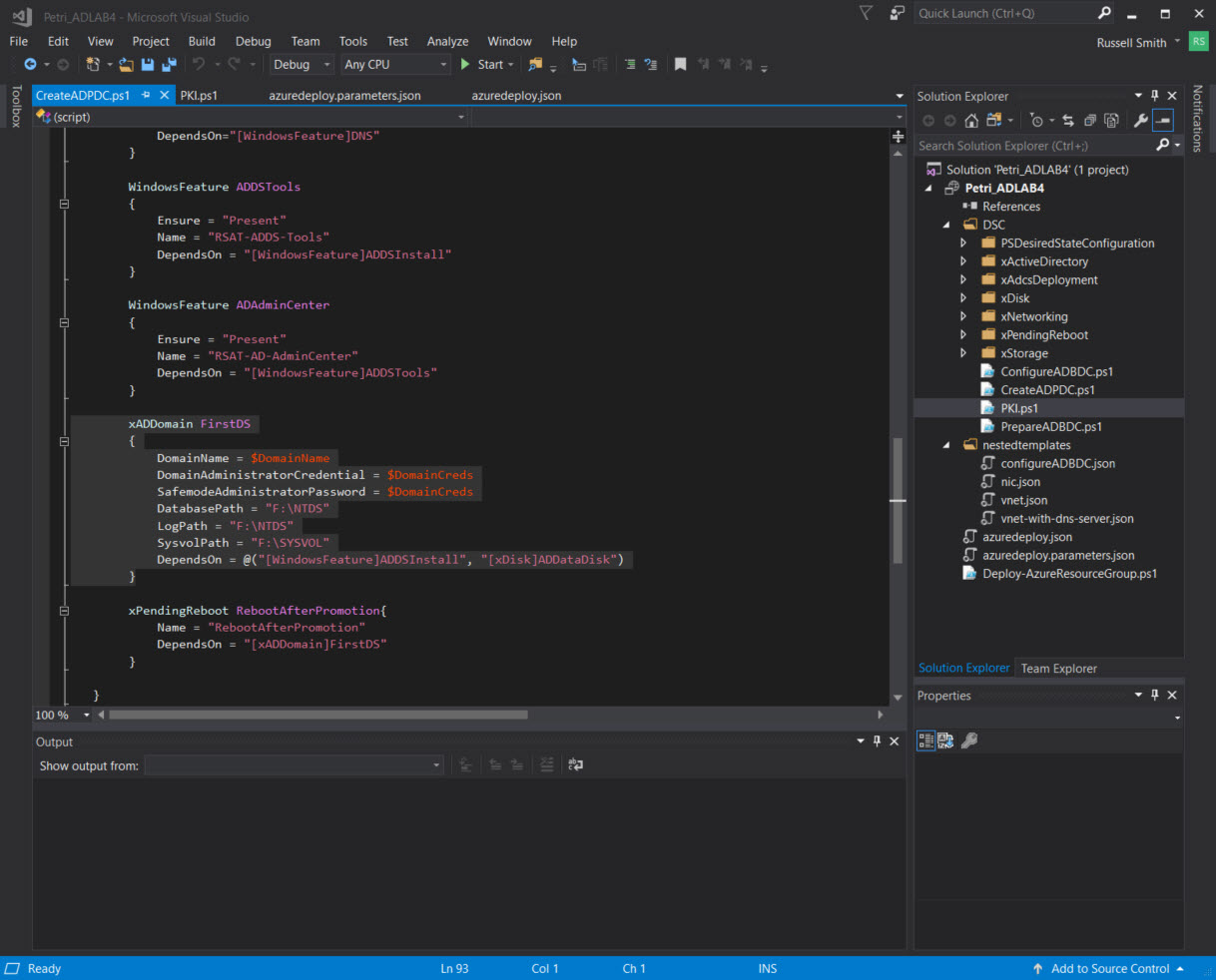

PowerShell DSC installs the ADDS server role and promotes the server to the first domain controller in a new forest. The CreateADForest extension calls a PowerShell DSC script (CreateADPDC.ps1) that uses Microsoft’s Active Directory DSC resources. If you open CreateADPDC.ps1 in VS, you’ll see that the file contains a set of declarations about how Windows Server should be configured. The xADDomain resource defines how ADDS is set up: the domain name, the credentials, and the paths for the AD database, logs, and SYSVOL. For more information on working with PowerShell DSC, see How Do I Create a Desired State Configuration? on Petri.

```powershell
# Create the new domain (and forest) on this server
xADDomain FirstDS
{
    DomainName                    = $DomainName
    DomainAdministratorCredential = $DomainCreds
    SafemodeAdministratorPassword = $DomainCreds
    # Keep the AD database, logs, and SYSVOL on the F: data disk
    DatabasePath                  = "F:\NTDS"
    LogPath                       = "F:\NTDS"
    SysvolPath                    = "F:\SYSVOL"
    # Run only after the ADDS role is installed and the data disk is ready
    DependsOn                     = @("[WindowsFeature]ADDSInstall", "[xDisk]ADDataDisk")
}
```

After the first domain controller is installed, the VNet’s DNS server address is updated to that domain controller’s IP address, because the DC also hosts DNS. As the dependsOn examples above show, we can wait not only for a resource to be deployed but also for an Azure VM extension to complete.

The concat string function combines multiple string values or multiple arrays and returns a concatenated string or array. It is used in two of the dependsOn examples above to build the names of the VM extensions (PrepareBDC and CreateADForest) whose PowerShell DSC configurations must complete first. For a complete list of available string functions, see String functions for Azure Resource Manager templates on Microsoft’s website.
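The Python function below is a rough stand-in for concat, written only to illustrate its two modes; it is not ARM’s implementation. This sketch accepts either all strings or all lists and rejects a mix. The VM name and IP addresses in the usage lines are hypothetical.

```python
def arm_concat(*args):
    """Mimic ARM concat(): join all-string arguments into one string,
    or all-list arguments into one list."""
    if all(isinstance(a, str) for a in args):
        return "".join(args)
    if all(isinstance(a, list) for a in args):
        return [item for a in args for item in a]
    raise TypeError("concat() arguments must be all strings or all arrays")


# String mode: build an extension name like the dependsOn examples do.
ext_name = arm_concat("Microsoft.Compute/virtualMachines/", "adPDC",
                      "/extensions/CreateADForest")
# Array mode: join two lists of (hypothetical) DNS server addresses.
dns_servers = arm_concat(["10.0.0.4"], ["10.0.0.5"])
print(ext_name)
print(dns_servers)
```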

Once the forest has been configured, PowerShell DSC promotes the second VM to a domain controller (PrepareADBDC.ps1 and CreateADBDC.ps1). After the third VM has been provisioned, the provisionvm1.ps1 script (later renamed PKI.ps1) uses the PowerShell DSC xAdcsDeployment resource to install ADCS and configure it as a root certification authority (CA). Because I combined two templates for this solution, provisioning of the certificate services VM appears as [dnsLabelPrefix] in the JSON Outline pane in Visual Studio. As you can see in the Solution Explorer pane, all the PowerShell DSC scripts must be uploaded to Azure artifact storage as zipped files.

Unlike the two domain controllers, where AD is configured using PowerShell DSC scripts, the member server is joined to the domain using the domain join VM extension. Virtual machine extensions are small applications used for post-deployment configuration and automation tasks. For more information on the domain join extension, see Microsoft’s website here. Once the VM has been joined to the domain, ADCS is configured using PowerShell DSC.

Reviewing the Situation

There’s no doubt that Infrastructure-as-Code can be complicated. You don’t need to understand exactly how each template works and orchestrates the provisioning process, but if you want to deploy complex apps or combine existing templates, understanding this process will help you troubleshoot any issues you come across.

In this article, I showed you how I used Infrastructure-as-Code to start a project that deploys an Active Directory domain with a certification authority. In the second part of this series, I’ll walk you through how I added my own PowerShell DSC code to the project to configure AD Certificate Services on the third VM.